AI interprets brain signals in real time and restores key visual features of images at 7x speed, forwarded by LeCun

- WBOY (forwarded)

- 2023-10-19 14:13:01 · 679 views

Now, AI can interpret brain signals in real time!

This is not hype. It is a new study from Meta that can guess the picture you are looking at within 0.5 seconds from brain signals and use AI to restore it in real time.

AI could already restore images from brain signals fairly accurately, but one problem remained: it was not fast enough.

To address this, Meta developed a new decoding model that improves AI image retrieval by a factor of 7. It can almost "instantly" read what a person is looking at and make a rough guess.

The image looks like a standing man, and after several rounds of restoration, the AI did indeed decode a "standing man":

Picture

LeCun reposted the work, saying that research on reconstructing visual and other inputs from MEG brain signals is indeed great.

Picture

So, how does Meta allow AI to “read brains quickly”?

How is brain activity decoded?

Currently, there are two main ways for AI to read brain signals and restore images.

One is fMRI (functional magnetic resonance imaging), which images blood flow to specific parts of the brain; the other is MEG (magnetoencephalography), which measures the extremely weak biomagnetic signals produced by neural currents in the brain.

However, fMRI neuroimaging is often very slow, taking about 2 seconds on average to produce one image (≈0.5 Hz). In contrast, MEG can record thousands of brain activity snapshots per second (≈5000 Hz).
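To get a feel for the gap between the two sampling rates, here is a small Python sketch that counts how many measurements each method collects during a 0.5-second window. The rates are the approximate figures quoted above; the function and variable names are illustrative assumptions, not anything from the study.

```python
def samples_in_window(rate_hz: float, window_s: float) -> float:
    """Number of measurements taken at rate_hz during window_s seconds."""
    return rate_hz * window_s


# Approximate sampling rates quoted in the article.
FMRI_HZ = 0.5     # about one image every 2 seconds
MEG_HZ = 5000.0   # thousands of snapshots per second

# Assumed window: the 0.5 seconds the article mentions for a guess.
WINDOW_S = 0.5

fmri_samples = samples_in_window(FMRI_HZ, WINDOW_S)
meg_samples = samples_in_window(MEG_HZ, WINDOW_S)
rate_ratio = MEG_HZ / FMRI_HZ

print(f"fMRI samples in {WINDOW_S} s: {fmri_samples}")
print(f"MEG samples in {WINDOW_S} s: {meg_samples}")
print(f"MEG samples about {rate_ratio:.0f}x more often than fMRI")
```

At these rates, fMRI does not even finish one full image within the window, while MEG collects thousands of measurements in the same time.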

So compared to fMRI, why not use MEG data to try to restore the "image seen by humans"?

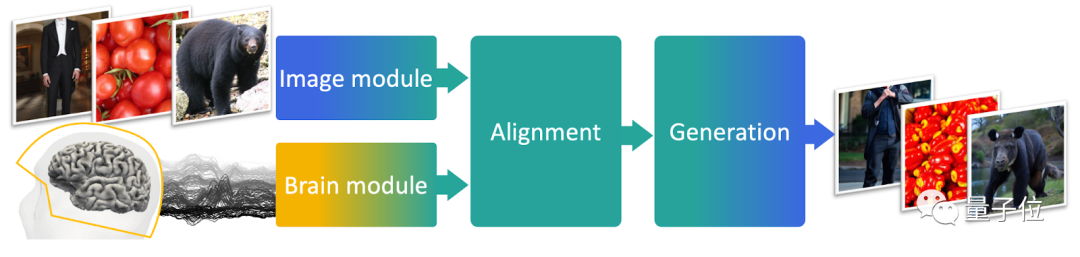

Based on this idea, the authors designed a MEG decoding model, which consists of three parts.

The first part is a pre-trained model, responsible for obtaining embeddings from images;

The second part is an end-to-end training model, responsible for aligning MEG data with image embeddings;

The third part is a pre-trained image generator, responsible for restoring the final image.
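The retrieval step that connects the first two parts can be sketched roughly as follows. This is a minimal illustration, not the authors' code: it assumes the MEG-side model has already produced an embedding in the same space as the image embeddings, and it assumes candidates are ranked by cosine similarity. The toy vectors and the names `cosine` and `retrieve` are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def retrieve(
    predicted: Sequence[float],
    candidates: Dict[str, Sequence[float]],
    k: int = 1,
) -> List[str]:
    """Return the names of the k candidates most similar to the prediction."""
    ranked = sorted(
        candidates,
        key=lambda name: cosine(predicted, candidates[name]),
        reverse=True,
    )
    return ranked[:k]


# Toy image embeddings (stand-ins for the output of a pre-trained image model).
image_embeddings = {
    "panda": [0.9, 0.1, 0.0],
    "airplane": [0.0, 1.0, 0.2],
    "zebra": [0.7, 0.0, 0.7],
}

# Toy embedding predicted from MEG data (stand-in for the trained brain model).
meg_embedding = [0.8, 0.2, 0.1]

top_two = retrieve(meg_embedding, image_embeddings, k=2)
print(top_two)
```

In a full pipeline, the retrieved or predicted embedding would then be passed to the image generator, which is outside the scope of this sketch.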

Picture

For training, the researchers used a dataset called THINGS-MEG, which contains MEG data recorded from 4 young participants (2 men and 2 women, average age 23.25 years) while they viewed images.

The participants viewed a total of 22,448 images (1,854 categories). Each image was displayed for 0.5 seconds, with an interval of 0.8 to 1.2 seconds between images. 200 of the images were viewed repeatedly.
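As a rough back-of-the-envelope check on these numbers, the sketch below estimates how long one pass through the image sequence would take. It assumes every one of the 22,448 presentations lasts 0.5 seconds plus an interval of 0.8 to 1.2 seconds, and it ignores breaks and repeated presentations; those assumptions and the variable names are mine, not the paper's.

```python
# Figures quoted in the article.
N_IMAGES = 22_448
N_CATEGORIES = 1_854
DISPLAY_S = 0.5
INTERVAL_MIN_S = 0.8
INTERVAL_MAX_S = 1.2

# Duration of a single trial: image on screen plus the gap before the next one.
min_trial_s = DISPLAY_S + INTERVAL_MIN_S
max_trial_s = DISPLAY_S + INTERVAL_MAX_S

# Total presentation time for one pass, in hours (assumption: no breaks).
min_hours = N_IMAGES * min_trial_s / 3600
max_hours = N_IMAGES * max_trial_s / 3600

# Average number of images per category.
images_per_category = N_IMAGES / N_CATEGORIES

print(f"Trial length: {min_trial_s:.1f} to {max_trial_s:.1f} s")
print(f"One pass: {min_hours:.1f} to {max_hours:.1f} hours")
print(f"Images per category: {images_per_category:.1f}")
```

Under these assumptions a single pass takes several hours of recording, which gives a sense of how much MEG data sits behind each participant.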

In addition, 3,659 images that were not shown to the participants were also used in image retrieval.

So how well does an AI trained this way perform?

Image retrieval speed increased by 7 times

Overall, the MEG decoding model designed in this study achieves a 7x improvement in image retrieval over a linear decoder.

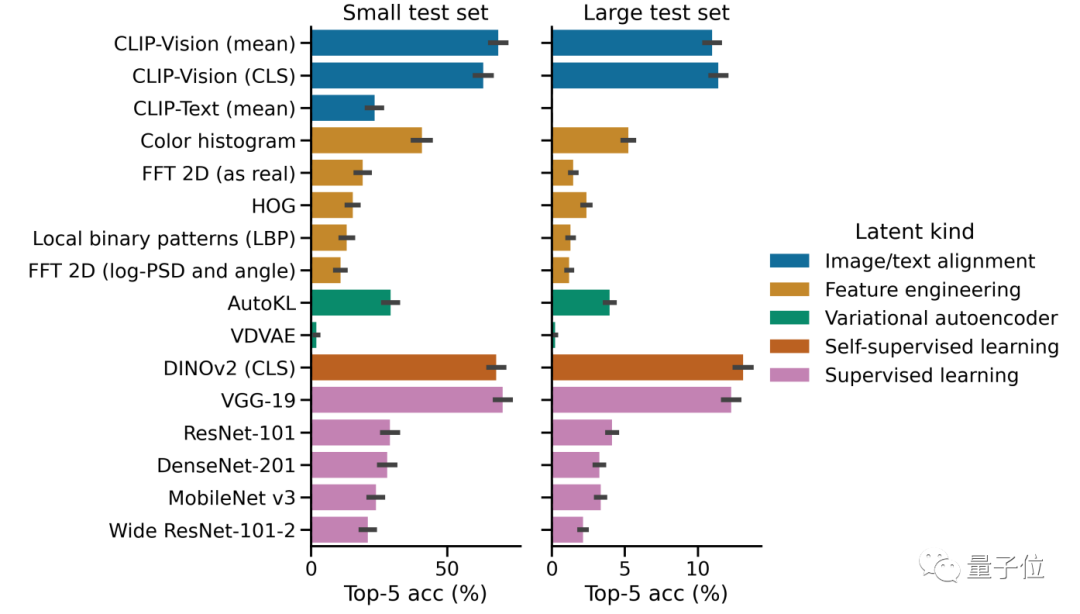

Compared with CLIP and other models, DINOv2, the vision Transformer developed by Meta, performs better at extracting image features and aligns MEG data with image embeddings more effectively.

Picture

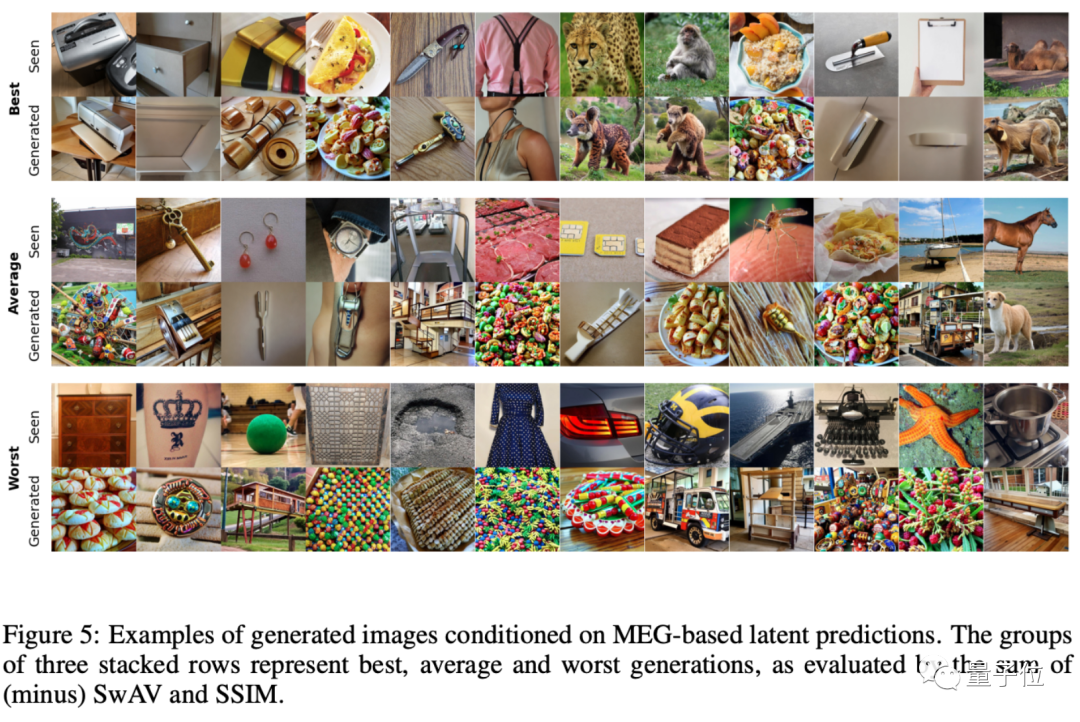

The authors divided the generated images into three groups: best match, medium match, and worst match.

Picture

However, judging from the generated examples, the images restored by this AI are not very good.

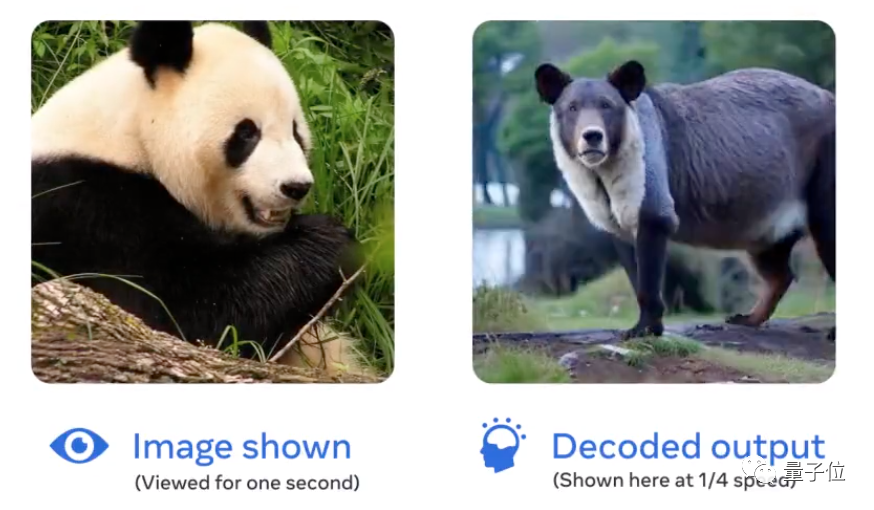

Even the best-restored image was questioned by some netizens: why does the panda look nothing like a panda?

Picture

The authors replied: at least it looks like a black and white bear. (The panda is furious!)

Picture

Of course, the researchers also admit that images restored from MEG data are not very good at present. The main advantage is still speed.

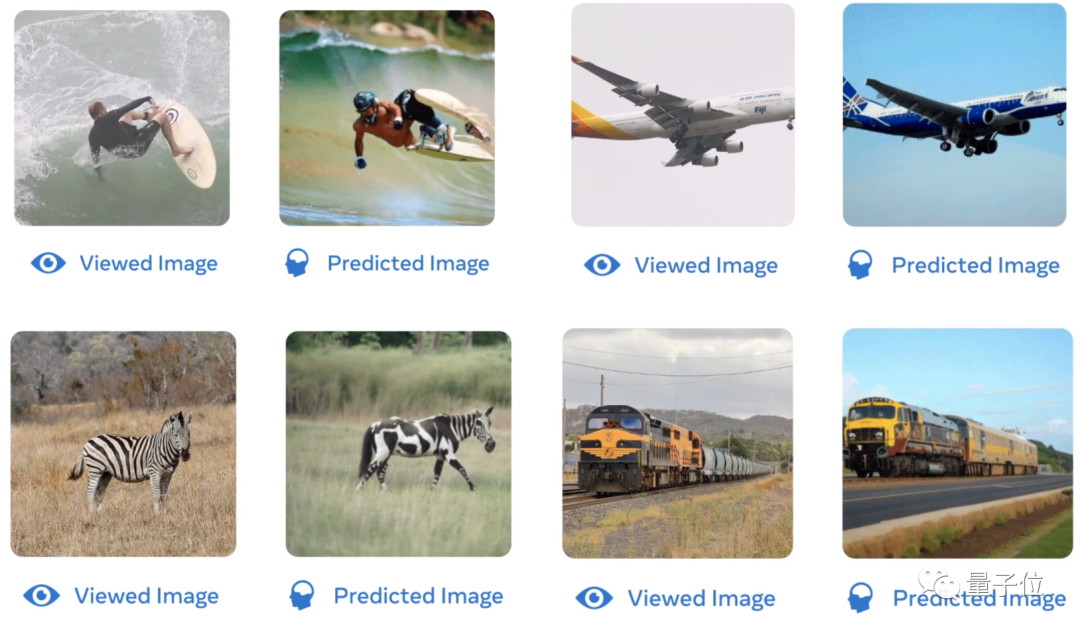

For example, a previous study based on 7T fMRI, from the University of Minnesota and other institutions, can restore the image seen by the human eye from fMRI data with a high degree of fidelity.

Picture

Whether it is a person's surfing movements, the shape of an airplane, the color of zebras, or the background of a train, AI trained on fMRI data restores the image better:

Picture

The authors also offered an explanation: the visual features that AI restores from MEG are biased toward high-level features.

In comparison, 7T fMRI makes it possible to extract and restore lower-level visual features of the image, so the generated image is closer to the original overall.

Where do you think this type of research can be used?

Paper address:

https://www.php.cn/link/f40723ed94042ea9ea36bfb5ad4157b2
