Detailed explanation of Qingyun Technology's launch of AI computing power products and services to address computing power challenges

- Forwarded by WBOY

- 2023-10-16 20:37:01

At the Qingyun Technology AI Computing Power Conference, Miao Hui, product manager, introduced in detail the Qingyun AI computing power scheduling platform and Qingyun AI computing power cloud services. The following is the full text of the speech:

Artificial intelligence users face computing power challenges

With the explosion of the artificial intelligence industry, AIGC, large models, scientific research computing, and enterprise-level big data and AI workloads have placed higher demands on computing power centers. Data centers that offer only a single type of computing power can no longer meet the growing demand across industries. More intelligent computing centers, supercomputing centers and general cloud computing services are therefore needed to provide computing power to society as a whole.

However, the AI industry, AI infrastructure and users of AI computing power also face a series of challenges:

Bottleneck of unified management of multiple resources. Users require multiple kinds of computing power and storage, a complete computing network, and nearby services. Qingyun provides a unified service scheduling platform for multiple resources to resolve chaotic resource management.

High-speed network bottleneck. For AI high-speed network construction, Qingyun uses high-speed networks to interconnect computing and storage devices and general-purpose networks to publish application services. In this way, multi-region high-speed networking is handled through Qingyun's platform.

Tedious environment setup. Algorithm engineers and R&D engineers may waste a lot of time setting up basic environments such as hardware servers and storage servers. Qingyun AI intelligent computing services, training platforms and inference model platforms simplify environment setup and enable one-click deployment.

Multi-service integration bottleneck. Qingyun integrates multiple businesses, combining traditional cloud computing, supercomputing and intelligent computing to provide panoramic computing services for more businesses and more customers.

Lack of operational services. Qingyun also provides comprehensive operations and O&M management services to computing power operation centers and computing power management departments.

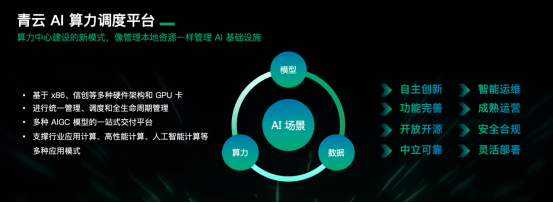

Qingyun AI computing power scheduling platform

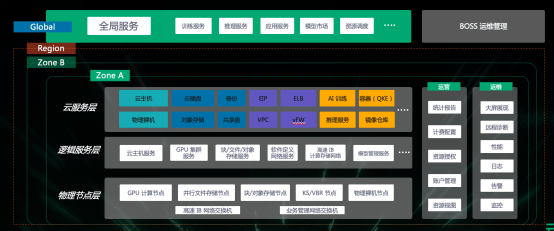

The full-stack product architecture of Qingyun AI scheduling products is multi-AZ and multi-zone: products in multiple regions can be unified and integrated to provide computing power to society as a whole as one global service. Specifically, the platform manages the underlying infrastructure, abstracts it into logical, business-oriented resources through the data logic layer, and forms AI computing power clusters through specific products or services. These include GPU hosts (bare metal, virtualized, shared and other forms), container inference services, model markets and other related businesses, providing computing power scheduling and application scenario implementation capabilities for customers across the industry.

Covering all aspects, new model of computing power construction center

Overall, the AI computing power scheduling platform capabilities provided by Qingyun Technology are mainly based on the following four aspects:

First, the entire platform is compatible with all computing chips on the market (including newly produced Xinchuang chips), as well as GPU-related graphics cards and network cards.

Second, the platform performs unified management, allocation, monitoring and scheduling of the adapted resources above, and provides full-lifecycle online management, from user application to release after use.

Third, for both the management side and the user side, the Qingyun unified management platform allows users and administrators to fully operate AI infrastructure and AI computing power cloud services.

In the field of intelligent computing, Qingyun will turn more services into scenario-based commercial products, such as large language model training and inference, and load balancing for text-generation services. Through the AI computing power scheduling platform, Qingyun can also offer customers convenient operations such as one-click deployment, one-click expansion and one-click load balancing. For load balancing, especially across the network, the public network and computing infrastructure, it can achieve second-level delivery and second-level capacity expansion.

Finally, based on the above three capabilities, Qingyun can support computing in various industries, including high-performance computing, artificial intelligence computing and general computing models, and create for customers an independently developed, fully functional platform for unified user management, distribution and operation.

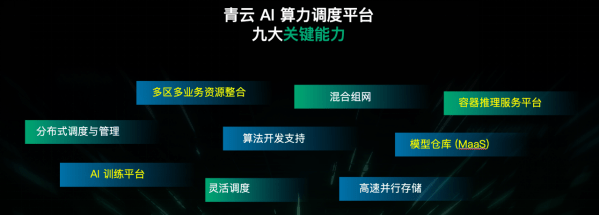

Nine Abilities Unlock AI Computing Power Freedom

Through years of industry accumulation, Qingyun AI computing power scheduling platform has formed nine key capabilities:

1. Multi-region and multi-business resource integration capabilities

This matters especially for the diverse computing power services in western Sichuan or the northwest regions. When these provide computing power to the eastern region, scientific research institutions and universities, Qingyun can manage multi-region resources in a unified manner and build effective high-speed networks through cooperation with telecom operators.

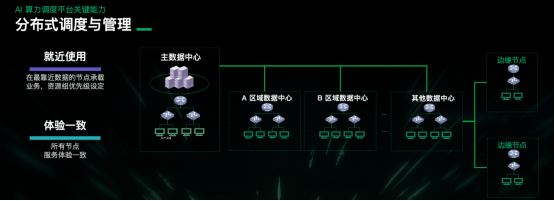

2. Distributed scheduling and management capabilities

Following the principle of nearby use, Qingyun manages and distributes all infrastructure (including computing resources and storage resources) in different regions, computing centers and data centers, and configures scheduling priorities, including affinity and anti-affinity. For VMs, hosts and bare metal servers (including containers based on Container and Pod), affinity and anti-affinity can be configured on the management side of the Qingyun AI computing power scheduling platform to guarantee scheduling priority. The purpose is to ensure that users get a consistent experience in data use, computing resource application, business training and business inference.
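The nearby-use principle and affinity settings described above can be illustrated with a minimal sketch. It is not Qingyun's implementation: the `Node` fields, the `pick_node` function, and the choice to treat anti-affinity as a hard rule and affinity as a soft preference are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    region: str
    free_gpus: int
    # Labels of workloads already placed on this node (hypothetical field).
    workloads: set = field(default_factory=set)


def pick_node(nodes, gpus, user_region, affinity=None, anti_affinity=None):
    """Return the preferred node for a request, or None if nothing fits."""
    # Hard filters: enough free GPUs, and no workload we must stay away from.
    candidates = [
        n for n in nodes
        if n.free_gpus >= gpus
        and (anti_affinity is None or anti_affinity not in n.workloads)
    ]
    if not candidates:
        return None

    def score(n):
        # False sorts before True, so preferred nodes get the smallest tuple.
        return (
            n.region != user_region,                                # nearby use first
            affinity is not None and affinity not in n.workloads,   # then affinity
            -n.free_gpus,                                           # then most free capacity
            n.name,                                                 # deterministic tie-break
        )

    return min(candidates, key=score)
```

In this sketch a request from the `west` region with `affinity="train-1"` lands on a western node that already hosts `train-1`, while the same request with `anti_affinity="train-1"` is kept off that node. A real scheduler would weigh many more signals.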

3. Resource scheduling capability

In terms of resource scheduling capabilities, Qingyun has the following six major advantages:

1) Immediately schedule and expand resources of tens of thousands of cards

This is mainly oriented to AI computing scenarios, especially large model inference. Some model scenarios require inference only a few times a year, which calls for building a training platform with dozens of cards, or even tens of thousands of cards, on demand. To meet this requirement, presetting, adaptation and resource management can be carried out on the Qingyun AI computing power scheduling platform, so that the computing power cluster can immediately support resources on the scale of tens of thousands of cards and release them immediately after use. For resource environment and configuration, the platform has automated a great deal of work to ensure that ten-thousand-card resources can be scheduled uniformly.

2) Communication link shortest priority scheduling

Keeping data from taking detours is also a main goal of the Qingyun AI computing power scheduling platform. In AI training and AI inference scenarios, there is a large amount of data exchange between nodes, and between nodes and storage. Qingyun therefore also configures the switches so that computing and storage resources can sit on the same switch, and prioritizes scheduling within a single machine room or cabinet. This prevents data detours and reduces the constraints that network transmission places on AI training.
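As a rough sketch of shortest-link-first placement, the code below tries to fit a job under one switch, then one cabinet, then one machine room, and within each scope prefers the nodes closest to storage. The `(room, cabinet, switch)` location tuples, the hop costs and both function names are illustrative assumptions, not part of the platform described here.

```python
from collections import defaultdict


def hop_distance(a, b):
    """Rough link distance between two (room, cabinet, switch) locations."""
    if a == b:
        return 0  # same switch
    if a[:2] == b[:2]:
        return 1  # same cabinet, different switch
    if a[0] == b[0]:
        return 2  # same room, different cabinet
    return 3      # different rooms


def place_job(free_nodes, storage_loc, count):
    """Pick `count` nodes sharing the narrowest possible scope.

    free_nodes maps node name -> (room, cabinet, switch).
    Returns a list of node names, or None if no single room can hold the job.
    """
    # depth 3 = same switch, depth 2 = same cabinet, depth 1 = same room
    for depth in (3, 2, 1):
        groups = defaultdict(list)
        for name, loc in free_nodes.items():
            groups[loc[:depth]].append(name)

        best = None
        for key in sorted(groups):
            # Within a group, take the nodes nearest to storage first.
            names = sorted(
                groups[key],
                key=lambda n: (hop_distance(free_nodes[n], storage_loc), n),
            )
            if len(names) < count:
                continue
            chosen = names[:count]
            cost = sum(hop_distance(free_nodes[n], storage_loc) for n in chosen)
            if best is None or cost < best[0]:
                best = (cost, chosen)
        if best is not None:
            return best[1]
    return None
```

The sketch deliberately refuses to span machine rooms; a production scheduler would more likely fall back to a cross-room placement with a penalty.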

3) Support heterogeneous platforms

When building a cluster, users can choose different services to run on different cards. Qingyun Technology has also carried out domestic adaptation and domestic replacement of the chips.

4) Improve the granularity of the scheduling system

Two scheduling systems are supported: the first is based on Slurm, and the second is based on K8s. In terms of granularity, users get true job-level precision. As each training task runs in each process on each card, job anomalies can be monitored through large-scale data monitoring, business scheduling and similar means. This ensures that users can handle abnormal training tasks in a timely manner, maximizing resource scheduling and reducing waste at this level. If something is wrong, it can be modified and rerun immediately.
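One simple way to picture per-process, per-card anomaly monitoring is a heartbeat check. The sketch below assumes each training process reports a timestamp keyed by `(job_id, gpu_index, rank)`; that key layout, the 60-second timeout and the `find_stalled` name are hypothetical and are not taken from Slurm, K8s or the Qingyun platform.

```python
def find_stalled(heartbeats, now, timeout=60.0):
    """Group processes whose last heartbeat is older than `timeout` seconds.

    heartbeats maps (job_id, gpu_index, rank) -> last heartbeat time in seconds.
    Returns {job_id: sorted list of (gpu_index, rank)} for stalled processes.
    """
    stalled = {}
    for (job_id, gpu_index, rank), last_seen in heartbeats.items():
        if now - last_seen > timeout:
            stalled.setdefault(job_id, []).append((gpu_index, rank))
    return {job_id: sorted(procs) for job_id, procs in stalled.items()}
```

Reporting at this granularity lets an operator see which card and which process of a job stopped making progress, rather than only that the job as a whole is slow.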

5) The management side implements scheduling priority configuration

Because different computing power centers operate different computing power services, especially when there are multiple data centers, users can set scheduling priorities through the Qingyun AI computing power scheduling platform. Defaults are built in at the start, and users can reconfigure them later, using reservations, pauses, resumes, priority settings, queuing and other settings to adjust priority. At the management level, Qingyun can prioritize resource allocation for users with special requests or users with high priority.
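The priority, pause and resume controls mentioned above can be sketched as a small priority queue. The `JobQueue` class and its method names are hypothetical; the sketch only shows one common way to combine a heap with lazy invalidation and does not cover reservations.

```python
import heapq
import itertools


class JobQueue:
    """Highest priority first; ties are served in submission order."""

    def __init__(self):
        self._heap = []                    # entries: (-priority, seq, job_id)
        self._seq = itertools.count()
        self._priority = {}                # current priority of each waiting job
        self._paused = set()

    def submit(self, job_id, priority=0):
        self._priority[job_id] = priority
        heapq.heappush(self._heap, (-priority, next(self._seq), job_id))

    def set_priority(self, job_id, priority):
        # Lazy update: push a fresh entry; the old one is skipped when popped.
        if job_id in self._priority:
            self.submit(job_id, priority)

    def pause(self, job_id):
        self._paused.add(job_id)

    def resume(self, job_id):
        self._paused.discard(job_id)

    def next_job(self):
        """Pop the best runnable job, or return None if none is runnable."""
        held = []
        result = None
        while self._heap:
            entry = heapq.heappop(self._heap)
            neg_priority, _, job_id = entry
            if self._priority.get(job_id) != -neg_priority:
                continue                   # stale entry or already dispatched
            if job_id in self._paused:
                held.append(entry)         # keep paused jobs in the queue
                continue
            result = job_id
            del self._priority[job_id]
            break
        for entry in held:
            heapq.heappush(self._heap, entry)
        return result
```

A paused job keeps its place in the queue and becomes eligible again as soon as it is resumed, which matches the pause and resume behavior the text describes at a high level.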

6) Flexible scheduling and allocation of resources for the intelligent computing industry

Qingyun enables dynamic and flexible resource scheduling and configurability to address challenging priorities in AI systems. This is why Qingyun continues to discover new problems in AI scheduling computing power or AI scenarios, constantly uses the platform to solve new problems, and uses new products to solve some major problems in the industry.

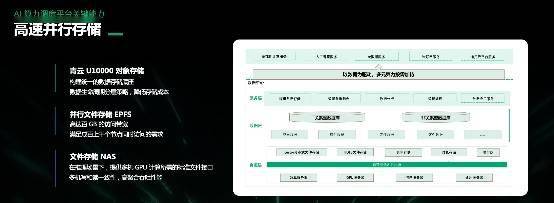

4. High-speed parallel storage capability

Qingyun’s computing and storage products are diverse, providing the following three types of storage:

1) Qingyun U10000 Object Storage

Stores models, code and frequently called data. It is mainly used for large-scale data backup and data reading operations.

2) Parallel file storage EPFS

In terms of large-scale parallel writing of data, Qingyun provides parallel file storage EPFS, which mainly provides all-flash parallel file storage for MPI-level data writing operations.

3) File storage NAS

You can store some common documents, texts, etc. All Qingyun's storage products can be internally interconnected with its own computing products to perform data transmission, distribution, backup, etc. on the internal high-speed network.

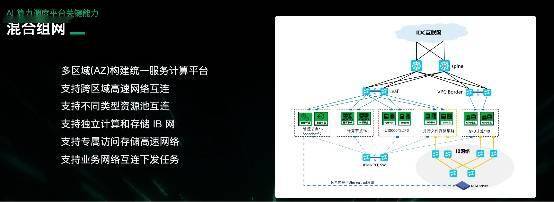

5. Hybrid networking capability

Different high-speed networks can be provided for different computing scenarios, such as computing IB network and storage IB network. How to optimally configure them?

Qingyun interconnects high-configuration computing products and high-configuration storage products, and interconnects medium- and low-configuration products for training scenarios, inference scenarios, and general application service scenarios.

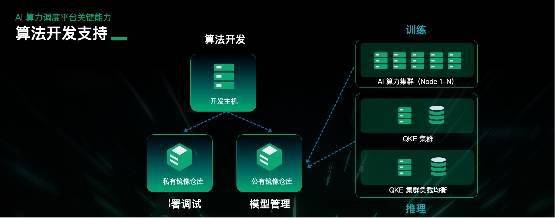

6. Algorithm development support capabilities

For algorithm developers, Qingyun provides more comprehensive cloud service products. The algorithm development stage in particular involves a large amount of parameter tuning and large-scale code writing. During training and deployment, moving work on and off the cloud can cause problems: large-scale data uploads, downloads and code copies are not suited to online editing and immediate execution.

Therefore, Qingyun provides an algorithm development platform in terms of algorithm development. It can launch an online development environment based on cloud services, completely build Python projects and VC projects, and use project files and engineering environments online to conduct code research and development.

During the development process, if there is any need for debugging, it can be expanded immediately; if training is needed, the job task can be immediately assigned to the training cluster; if inference is needed, it can be placed on the inference cluster.

At the same time, algorithm development may involve joint or mixed development. Qingyun also provides code repositories and image repositories for model management, so that different personnel can use different permissions to carry out unified algorithm development and service integration.

In a nutshell, Qingyun mainly provides computing products and scheduling products for all development scenarios for algorithm developers, ensuring that the entire algorithm development business can be effectively operated on the cloud and reducing large-scale upload and download operations.

7. AI training platform

If the algorithm development is nearing completion or needs debugging, a large amount of computing power infrastructure needs to be activated for development and training. Based on the infrastructure, Qingyun provides an AI training platform to empower users.

After the GPU resources, storage resources and network resources are constructed, users can build independently through the cloud platform and achieve one-click operation. Qingyun AI training platform mainly builds clusters online based on its own GPU resources. After the construction is completed, a certain storage will be mounted by default, and users can choose by themselves.

The Qingyun AI training platform also has a built-in online development environment, with some commonly used training frameworks preinstalled. Through clusters, it provides users with environments for all scenarios and applications, allowing them to run distributed multi-machine training online.

8. Container Inference Service Platform

Once large model training is nearly complete, the Qingyun container inference service platform comes into play for providing inference services to the public.

Through the Qingyun container inference service platform, after users deploy an inference service they can use the configured load balancing and automatic scaling to ensure that user requests are served immediately. Qingyun also provides online monitoring services to customers. If there is a problem with the inference service, users can immediately see what went wrong with the container inference, and Qingyun can resolve it online. For concurrent operations and large-scale call volumes, Qingyun can also perform load balancing and automatic scaling, greatly reducing manual configuration.
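To make automatic scaling concrete, here is a minimal sketch of a proportional scaling rule: scale the replica count by the ratio of observed load to target load, round up, and clamp to configured bounds. The rule is the same shape as the one documented for the Kubernetes Horizontal Pod Autoscaler, but whether Qingyun's platform uses it is an assumption; the function and parameter names are hypothetical.

```python
import math


def desired_replicas(current, observed_load, target_load,
                     min_replicas=1, max_replicas=10):
    """Return the replica count that brings per-replica load near the target.

    observed_load and target_load are per-replica values in the same unit,
    for example requests per second.
    """
    if current < 1 or target_load <= 0:
        raise ValueError("current must be >= 1 and target_load must be > 0")
    raw = math.ceil(current * observed_load / target_load)
    # Clamp so a traffic spike or lull cannot scale without bound.
    return max(min_replicas, min(max_replicas, raw))
```

With 4 replicas each seeing 150 requests per second against a target of 100, the rule asks for 6 replicas; when load drops to 20 per replica it scales down to the configured minimum.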

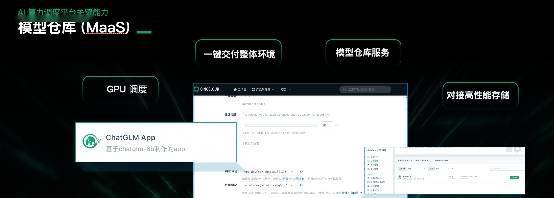

9. Model warehouse (MaaS)

Qingyun Model Warehouse (MaaS) is mainly aimed at AI computing power service customers and general computing customers. Model service providers can list products on the application market and model market according to their own model needs, making it convenient for enterprise customers to call them, fine-tune them with one click and deploy them with one click.

3: Stimulate diverse values and accelerate the implementation of scenarios

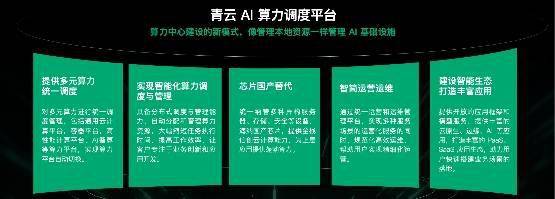

In general, the purpose of Qingyun AI computing power scheduling platform is to manage AI infrastructure like local resources, which is mainly reflected in five major aspects:

1. Provide unified scheduling of multiple computing power

Faced with GPU resources, CPU resources, domestic chips, application frameworks, applications and user business scenarios, Qingyun uses a unified platform for scheduling and management, including storage facilities and network facilities.

2. Realize intelligent computing power scheduling based on infrastructure

In terms of computing power scheduling priority and affinity, based on VM, host and container, users can realize intelligent computing power scheduling and configuration, as well as management services through Qingyun's platform.

3. Quick and effective adaptation to domestic chips

Qingyun can effectively and quickly adapt to domestic chips, ensuring that localized algorithm services and localized codes can run immediately on domestic chips.

4. Visualization service

In terms of intelligent operation and maintenance for the management side, Qingyun's monitoring and alarm services provide customers and administrators with visual operations through a large operation and maintenance platform.

5. Rich application market

Qingyun Technology actively builds an ecosystem and creates a rich application market, so that applications and customers from all walks of life can get the computing resources and business resources they want on the Qingyun AI computing platform.

At present, the Qingyun AI computing power scheduling platform has been deployed for Jinan supercomputing applications, and Sunward Cloud has gone online to provide operational services. Building on Jinan's tens of thousands of pieces of supercomputing hardware infrastructure, various computing networks, servers and so on, Qingyun provides listing, management and scheduling services. It performs unified management, integration and distribution of the different machine rooms, supercomputing businesses, intelligent computing businesses, GPUs, and various storage and network information, and provides computing power scheduling products and computing power cloud service products to customers from all walks of life.

Qingyun AI Computing Cloud Service

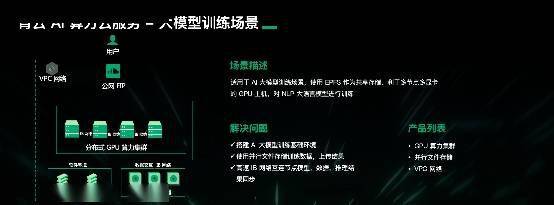

Qingyun AI computing power cloud service products are also launched on Qingyun public cloud to provide services, mainly for large model training scenarios.

For cards with relatively high priority and high configuration, Qingyun provides public cloud computing service products. In the AI scenario, Qingyun builds distributed GPU computing clusters with underlying resources and binds them to the public network environment, allowing user access.

On this basis, users can upload data to parallel file storage, or place parallel file storage and GPU computing clusters in the same network so that private networking protects data security and cloud service security. They can also run their business through online training and remote SSH access to distributed computing clusters and parallel file storage.

In terms of business, users can use AI computing clusters and container inference services, whose infrastructure consists of A800 resources, bare metal servers and virtualized servers. All Qingyun AI computing power cloud service products use high-speed interconnected networks and provide the online environment, development environment, and training and inference environments required by the AI computing power industry. Everyone is welcome to apply for registration and trial.
