How to combine 4D imaging radar with 3D multi-target tracking? TBD-EOT may be the answer!

Hello everyone, and thank you very much to Heart of Autonomous Driving for the invitation. I am honored to share our work with you here.

Online 3D multi-object tracking (MOT) has important application value in advanced driver assistance systems (ADAS) and autonomous driving (AD). In recent years, as the industry's demand for high-performance 3D perception has grown, online 3D MOT algorithms have received increasingly widespread research attention. For 4D millimeter-wave radar (also known as 4D imaging radar) or lidar point cloud data, most online 3D MOT algorithms currently used in ADAS and AD adopt the point object tracking framework based on the tracking-by-detection strategy (TBD-POT). However, extended object tracking based on the joint detection and tracking strategy (JDT-EOT), another important MOT framework, has not been fully studied in ADAS and AD. This article systematically discusses and analyzes, for the first time, the performance of TBD-POT, JDT-EOT, and our proposed TBD-EOT framework in real online 3D MOT application scenarios. In particular, it evaluates and compares SOTA implementations of the three frameworks on the 4D imaging radar point clouds of the View-of-Delft (VoD) and TJ4DRadSet datasets. Experimental results show that the traditional TBD-POT framework combines low computational complexity with high tracking performance and can still be the first choice for 3D MOT tasks; at the same time, the proposed TBD-EOT framework has the potential to surpass the TBD-POT framework in specific scenarios. Notably, the JDT-EOT framework, which has recently attracted academic attention, performs poorly in ADAS and AD scenarios. This article analyzes these experimental results using a variety of performance evaluation metrics and suggests possible ways to improve tracking performance in real application scenarios.
For online 3D MOT algorithms based on 4D imaging radar, this study provides the first performance benchmark in the ADAS and AD fields, along with important perspectives and suggestions for the design and application of such algorithms.

1. Introduction

Online 3D multi-object tracking (MOT) is an important component of advanced driver assistance systems (ADAS) and autonomous driving (AD). In recent years, with the development of sensor and signal processing technology, online 3D MOT based on sensors such as cameras, lidar and radar has received widespread attention. Among these sensors, radar, as the only low-cost sensor that can work under extreme lighting and severe weather conditions, has been widely used in perception tasks such as instance segmentation, object detection, and MOT. However, although traditional automotive radar can effectively resolve targets in range and Doppler velocity, its low angular resolution still limits the performance of object detection and multi-object tracking algorithms. Unlike traditional automotive radars, the recently emerged 4D imaging radar based on MIMO technology can measure the range, velocity, azimuth and elevation angle of a target, opening new possibilities for radar-based 3D MOT.

The design paradigms of 3D MOT algorithms fall into two categories: model-based and deep learning-based. The model-based paradigm uses carefully designed multi-object dynamic models and measurement models, and is suitable for developing efficient and reliable 3D MOT methods. Among typical model-based MOT frameworks, the point object tracking (POT) framework using the tracking-by-detection (TBD) strategy has been widely accepted by academia and industry. The POT framework assumes that each target generates only one measurement point per sensor scan. However, for lidar and 4D imaging radar, a target often generates multiple measurement points in one scan. Therefore, before tracking, the multiple measurements from the same target must first be processed by an object detector into a single detection result, such as a 3D bounding box. The effectiveness of the tracking-by-detection framework has been verified in many 3D MOT tasks on real lidar point cloud data.

Extended object tracking (EOT) using the joint detection and tracking (JDT) strategy, another model-based MOT framework, has recently received widespread attention in academia. Unlike POT, EOT assumes that a target can produce multiple measurements in one sensor scan, so no additional object detection module is required when implementing JDT. Relevant studies have pointed out that JDT-EOT can achieve good performance when tracking single targets on real lidar point clouds and automotive radar detection points. However, for online 3D MOT tasks in complex ADAS and AD scenarios, few studies evaluate EOT on real data; those that do neither evaluate in detail the MOT performance of the EOT framework for different target types on ADAS/AD datasets nor systematically analyze the experimental results using widely accepted performance metrics. Through comprehensive evaluation and analysis, this article attempts to answer an open question for the first time: can the EOT framework be applied in complex ADAS and AD scenarios and achieve tracking performance and computational efficiency superior to the traditional TBD-POT framework? The main contributions of this article are:

- By comparing the POT and EOT frameworks, this article provides the first performance benchmark for future research on online 3D MOT methods based on 4D imaging radar in the fields of ADAS and AD. The performance evaluation and analysis in this article demonstrate the respective advantages and disadvantages of the POT and EOT frameworks, and provide guidance and suggestions for the design of online 3D MOT algorithms.

- To bridge the gap between the theory and practice of EOT-based online 3D MOT methods, this article conducts the first systematic study of the EOT framework in real ADAS and AD scenarios. Although the JDT-EOT framework, which has been widely studied in academia, performs poorly, the TBD-EOT framework proposed in this article exploits the strengths of deep learning object detectors and thereby achieves better tracking performance and computational efficiency than the JDT-EOT framework.

- Experimental results show that the traditional TBD-POT framework is still the preferred choice in online 3D MOT tasks based on 4D imaging radar because of its high tracking performance and computational efficiency. However, the performance of the TBD-EOT framework is better than that of the TBD-POT framework in certain situations, demonstrating the potential of using the EOT framework in real ADAS and AD applications.

2. Method

This section introduces three algorithm frameworks for online 3D MOT on 4D imaging radar point cloud data: TBD-POT, JDT-EOT and TBD-EOT, as shown in the figure below:

A. Framework 1: Point object tracking using the tracking-by-detection strategy (TBD-POT)

The TBD-POT framework has been widely accepted in MOT research based on various sensors. Under this framework, the 4D imaging radar point cloud is first processed by an object detector to generate 3D bounding boxes, which provide the target position, box size, orientation, target category, detection score and other information. To simplify computation, the POT algorithm usually takes the two-dimensional target position in Cartesian coordinates as the measurement and performs MOT in the bird's-eye view (BEV). The estimated target position is then combined with the other information from the 3D bounding box to obtain the final 3D tracking result. The TBD-POT framework has two main advantages: 1) the POT algorithm can use additional information such as target category and detection score to improve tracking performance; 2) the POT algorithm is generally less computationally complex than the EOT algorithm.
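The measurement handling described above can be sketched in a few lines. This is only an illustration under stated assumptions: the `Box3D` class, its field names, and the example numbers are hypothetical and do not come from the original work, and the filtered position is simply passed in rather than produced by a real tracker.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Box3D:
    """Hypothetical 3D detection; field names are illustrative only."""
    x: float
    y: float
    z: float
    length: float
    width: float
    height: float
    yaw: float
    category: str
    score: float


def to_bev_measurement(box):
    """Reduce a 3D box to the 2D BEV position used as the POT measurement."""
    return (box.x, box.y)


def to_3d_track(box, est_xy, track_id):
    """Attach the filtered BEV position back onto the detector's box attributes."""
    return track_id, replace(box, x=est_xy[0], y=est_xy[1])


# Made-up detection and a made-up filtered position standing in for the tracker output
det = Box3D(12.0, -3.5, 0.8, 4.2, 1.8, 1.5, 0.1, "Car", 0.87)
z = to_bev_measurement(det)
track_id, tracked = to_3d_track(det, (12.3, -3.4), track_id=7)
```

The point of the split is that the filter only ever sees the two-dimensional position, while size, orientation, category and score travel alongside it untouched.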

We choose the global nearest neighbor Poisson multi-Bernoulli filter (GNN-PMB) as the POT algorithm; it achieves SOTA performance in lidar-based online 3D MOT. GNN-PMB estimates multi-target states by propagating a PMB density, in which undetected targets are modeled by a Poisson point process (PPP) and detected targets by a multi-Bernoulli (MB) density. Data association is achieved by managing local and global hypotheses. At each time step, a measurement may be associated with an already tracked target, a newly detected target, or a false alarm, forming different local hypotheses. Compatible local hypotheses are combined into a global hypothesis that describes the association between all current targets and measurements. Unlike the Poisson multi-Bernoulli mixture (PMBM) filter, which computes and propagates multiple global hypotheses, GNN-PMB propagates only the best global hypothesis, thereby reducing computational complexity. In summary, the first online 3D MOT framework studied in this article combines a deep learning-based object detector with the GNN-PMB algorithm.
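To make the idea of keeping only the best global hypothesis concrete, here is a toy sketch. It is not the GNN-PMB filter: the squared-distance cost, the gate, and the `miss_cost` and `new_cost` constants are assumptions chosen for illustration, where a real filter would use negative log-likelihoods. The brute-force search is only viable for a handful of objects; practical implementations typically use an assignment solver instead.

```python
import itertools
import math


def gnn_associate(tracks, detections, gate=3.0, miss_cost=4.0, new_cost=4.0):
    """Return the single lowest-cost global hypothesis (toy brute-force search).

    tracks, detections: lists of (x, y) BEV positions.
    The result holds, per detection, a track index or None
    (None = newly detected target or false alarm).
    """
    n_t = len(tracks)
    # Slots >= n_t are dummy slots meaning "not assigned to an existing track"
    slots = range(n_t + len(detections))
    best_cost, best = math.inf, None
    for perm in itertools.permutations(slots, len(detections)):
        cost, used, feasible = 0.0, set(), True
        for d, s in enumerate(perm):
            if s < n_t:
                dist = math.dist(tracks[s], detections[d])
                if dist > gate:  # local hypothesis rejected by gating
                    feasible = False
                    break
                cost += dist ** 2
                used.add(s)
            else:
                cost += new_cost
        if not feasible:
            continue
        cost += miss_cost * (n_t - len(used))  # tracks left without a detection
        if cost < best_cost:
            best_cost = cost
            best = [s if s < n_t else None for s in perm]
    return best, best_cost


tracks = [(0.0, 0.0), (10.0, 0.0)]
detections = [(0.5, 0.0), (9.6, 0.2), (30.0, 30.0)]
assignment, cost = gnn_associate(tracks, detections)
```

In this made-up scene the first two detections fall inside the gates of the two tracks, while the third is far from both and ends up as a new target or false alarm.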

B. Framework 2: Extended object tracking using the joint detection and tracking strategy (JDT-EOT)

Unlike the first framework, TBD-POT, the JDT-EOT framework processes 4D imaging radar point clouds directly by detecting and tracking multiple targets simultaneously. First, the point cloud is clustered to form candidate measurement partitions (point clusters), and the EOT algorithm then uses these point clusters to perform 3D MOT. In theory, because point clouds carry richer information than preprocessed 3D bounding boxes, this framework can estimate target positions and shapes more accurately and reduce missed targets. However, for 4D imaging radar point clouds that contain a large amount of clutter, it is difficult to generate accurate measurement partitions. Because the spatial distribution of points can also differ between targets, the JDT-EOT framework usually runs multiple clustering algorithms, such as DBSCAN and k-means, with different parameter settings to generate as many plausible measurement partitions as possible. This further increases the computational complexity of EOT and affects the real-time performance of the framework.
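The partition generation step can be sketched as follows. This is a minimal illustration, not the pipeline used in the experiments: it implements a small DBSCAN in pure Python, runs it under several `(eps, min_pts)` settings, and keeps the distinct partitions. The parameter values and the six example points are assumptions, and k-means is left out for brevity.

```python
import math


def dbscan(points, eps, min_pts):
    """Minimal DBSCAN; returns one label per point, -1 marks noise."""
    labels = [None] * len(points)
    cluster = -1

    def neighbors(i):
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:
            labels[i] = -1  # provisionally noise; may become a border point later
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # border point previously marked as noise
            if labels[j] is not None:
                continue
            labels[j] = cluster
            reachable = neighbors(j)
            if len(reachable) >= min_pts:  # j is a core point, keep expanding
                queue.extend(reachable)
    return labels


def candidate_partitions(points, settings):
    """Cluster under several (eps, min_pts) settings and keep distinct partitions."""
    partitions = []
    for eps, min_pts in settings:
        clusters = {}
        for idx, label in enumerate(dbscan(points, eps, min_pts)):
            if label >= 0:
                clusters.setdefault(label, []).append(idx)
        partition = sorted(tuple(c) for c in clusters.values())
        if partition not in partitions:
            partitions.append(partition)
    return partitions


# Two nearby groups of points plus one isolated clutter point (made-up data)
points = [(0.0, 0.0), (0.4, 0.0), (0.8, 0.0), (2.0, 0.0), (2.4, 0.0), (10.0, 10.0)]
parts = candidate_partitions(points, [(0.5, 2), (1.5, 2)])
```

With the small radius the two groups stay separate, and with the large radius they merge into one cluster. Each extra partition is another set of hypotheses the EOT filter must evaluate, which is where the added computational cost comes from.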

This article chooses the PMBM filter based on the gamma Gaussian inverse Wishart (GGIW) distribution to implement the JDT-EOT framework. The GGIW-PMBM filter is among the EOT algorithms with SOTA estimation accuracy and computational complexity. The PMBM filter was chosen because it models targets with a multi-Bernoulli mixture (MBM) density and propagates multiple global hypotheses, which copes better with the high uncertainty of radar measurements. The GGIW model assumes that the number of measurement points generated by a target follows a Poisson distribution and that each individual measurement follows a Gaussian distribution. Under this assumption, the shape of each target is an ellipse described by an inverse Wishart (IW) density, and the major and minor axes of the ellipse can be used to form the target's rectangular bounding box. This shape model is relatively simple, suits many types of targets, and has the lowest computational complexity among existing EOT algorithm implementations.
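As a rough illustration of how an elliptical extent can be turned into a rectangular box, the sketch below takes a 2x2 symmetric positive semi-definite extent matrix, computes its eigenvalues in closed form, and uses the principal axes as box length and width. The function name and the `scale` factor (how many standard deviations the ellipse is taken to cover) are assumptions for illustration, not values from the original work.

```python
import math


def extent_to_box(extent, scale=2.0):
    """Convert a 2x2 symmetric extent matrix into (length, width, yaw).

    extent: ((a, b), (b, c)), for example a point estimate of the target extent.
    scale: assumed number of standard deviations spanned by each semi-axis.
    """
    (a, b), (_, c) = extent
    mean = 0.5 * (a + c)
    diff = math.hypot(0.5 * (a - c), b)
    lam_major, lam_minor = mean + diff, mean - diff  # eigenvalues of the matrix
    yaw = 0.5 * math.atan2(2.0 * b, a - c)           # direction of the major axis
    length = 2.0 * scale * math.sqrt(lam_major)
    width = 2.0 * scale * math.sqrt(max(lam_minor, 0.0))
    return length, width, yaw


# Axis-aligned ellipse with standard deviations 1.0 and 0.5 (made-up values)
length, width, yaw = extent_to_box(((1.0, 0.0), (0.0, 0.25)), scale=1.0)
```

For this example the semi-axes are 1.0 and 0.5, so the box is 2.0 long and 1.0 wide with zero yaw.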

C. Framework 3: Extended object tracking using the tracking-by-detection strategy (TBD-EOT)

To exploit deep learning object detectors within the EOT framework, we propose a third MOT framework: TBD-EOT. Unlike the JDT-EOT framework, which clusters the complete point cloud, the TBD-EOT framework first selects the valid radar measurement points inside the targets' 3D bounding boxes before clustering; these points are more likely to originate from real targets. Compared with JDT-EOT, the TBD-EOT framework has two advantages. First, removing measurement points that may originate from clutter significantly reduces the computational complexity of the data association step in the EOT algorithm and may also reduce the number of false detections. Second, the EOT algorithm can use information from the detector to further improve tracking performance, for example by setting different tracking parameters for different target categories or discarding detections with low detection scores. When deployed, the TBD-EOT framework uses the same object detector as TBD-POT and uses GGIW-PMBM as the EOT filter.
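The point selection step can be sketched as a point-in-box test. This is a minimal sketch under assumptions: the box layout `(cx, cy, cz, length, width, height, yaw)`, the dictionary keys, and the `min_score` threshold are hypothetical, and boxes are assumed to rotate only about the vertical axis.

```python
import math


def points_in_box(points, box):
    """Keep the (x, y, z) points that fall inside a yaw-rotated 3D box."""
    cx, cy, cz, length, width, height, yaw = box
    cos_y, sin_y = math.cos(yaw), math.sin(yaw)
    kept = []
    for x, y, z in points:
        dx, dy = x - cx, y - cy
        # Rotate the offset into the box frame
        u = cos_y * dx + sin_y * dy
        v = -sin_y * dx + cos_y * dy
        if abs(u) <= length / 2 and abs(v) <= width / 2 and abs(z - cz) <= height / 2:
            kept.append((x, y, z))
    return kept


def select_valid_points(points, detections, min_score=0.3):
    """Drop low-score detections, then gather the radar points inside each box."""
    selected = []
    for det in detections:
        if det["score"] < min_score:
            continue
        selected.append((det["category"], points_in_box(points, det["box"])))
    return selected


# Made-up radar points and detections
cloud = [(9.0, 0.5, 0.0), (11.9, -0.9, 0.5), (13.0, 0.0, 0.0), (10.0, 3.0, 0.0)]
dets = [
    {"category": "Car", "score": 0.9, "box": (10.0, 0.0, 0.0, 4.0, 2.0, 2.0, 0.0)},
    {"category": "Pedestrian", "score": 0.1, "box": (10.0, 3.0, 0.0, 1.0, 1.0, 2.0, 0.0)},
]
valid = select_valid_points(cloud, dets)
```

Only the points gathered this way would be passed on to clustering and the EOT filter, and the category kept with each group is what allows category-specific tracking parameters.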

3. Experiment and analysis

A. Datasets and evaluation metrics

This article evaluates the three MOT frameworks on the Car, Pedestrian, and Cyclist categories in sequences 0, 8, 12, and 18 of the VoD dataset and sequences 0, 10, 23, 31, and 41 of TJ4DRadSet. The detections fed to the TBD-POT and TBD-EOT frameworks are provided by SMURF, one of the SOTA object detectors for 4D imaging radar point clouds. Since JDT-EOT cannot obtain target category information, we added a heuristic classification step to the state extraction process of the GGIW-PMBM algorithm that determines the category from the estimated target shape and size.

The evaluation uses a set of commonly used MOT performance metrics: MOTA, MOTP, TP, FN, FP and IDS. In addition, we apply a newer MOT performance metric, higher order tracking accuracy (HOTA). HOTA can be decomposed into detection accuracy (DetA), association accuracy (AssA) and localization accuracy (LocA) sub-metrics, which helps to analyze MOT performance more clearly.
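For readers less familiar with these metrics, the standard definitions of MOTA, DetA and per-threshold HOTA can be written out as below. The counts in the example are made up for illustration and are not results from the article. The final HOTA score is usually an average of the per-threshold value over several localization thresholds, which this sketch omits.

```python
import math


def mota(fn, fp, ids, num_gt):
    """MOTA = 1 - (FN + FP + IDS) / number of ground-truth objects."""
    return 1.0 - (fn + fp + ids) / num_gt


def det_a(tp, fn, fp):
    """Detection accuracy at one localization threshold."""
    return tp / (tp + fn + fp)


def hota_at_threshold(det_accuracy, ass_accuracy):
    """HOTA at one localization threshold: geometric mean of DetA and AssA."""
    return math.sqrt(det_accuracy * ass_accuracy)


# Illustrative counts only
tp, fn, fp, ids = 800, 200, 100, 10
mota_value = mota(fn, fp, ids, num_gt=tp + fn)
deta_value = det_a(tp, fn, fp)
hota_value = hota_at_threshold(0.36, 0.64)
```

Because HOTA multiplies DetA and AssA, a tracker cannot score well by being strong at detection alone, which is why the decomposition is useful when comparing the frameworks.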

B. Tracking framework performance comparison

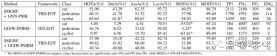

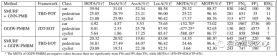

The parameters of the three MOT framework implementations, SMURF GNN-PMB, GGIW-PMBM and SMURF GGIW-PMBM, were tuned on the VoD dataset. Their performance is shown in the following table:

The performance of each algorithm on the TJ4DRadSet data set is shown in the following table:

1) Performance of GGIW-PMBM

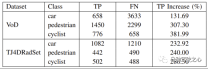

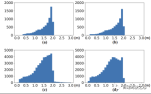

The experimental results show that the performance of GGIW-PMBM is lower than expected. Since the tracking results contain a large number of FPs and FNs, the detection accuracy of GGIW-PMBM on the three categories is low. In order to analyze the cause of this phenomenon, we calculated TP and FN using unclassified tracking results, as shown in the table below. It can be observed that the number of TPs in the three categories has increased significantly, indicating that GGIW-PMBM can produce tracking results close to the real target position. However, as shown in the figure below, most of the targets estimated by GGIW-PMBM have similar lengths and widths, which results in the heuristic target classification step being unable to effectively distinguish target types based on target size, which adversely affects tracking performance.

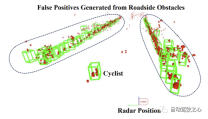

We further analyzed the performance difference of GGIW-PMBM between the two datasets. On TJ4DRadSet, the MOTA of pedestrians and cyclists is much lower than on VoD, which indicates that GGIW-PMBM generates more false tracks on TJ4DRadSet. A possible reason is that TJ4DRadSet contains more clutter measurements from obstacles on both sides of the road, as shown in the figure below. Since most roadside obstacles are stationary, this problem can be mitigated by removing radar measurement points with low radial velocity before clustering. Because TJ4DRadSet has not yet published ego-vehicle motion data, this article does not provide additional experimental evidence. Nonetheless, we can expect that such a processing step would also remove radar points from stationary targets, increasing the likelihood that these targets are not tracked correctly.
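The velocity-based filtering idea can be sketched as below. It assumes that an ego-motion compensated radial velocity is available for every point, which is exactly what the paragraph above says could not be obtained for TJ4DRadSet. The key name `v_r_comp`, the `source` annotations, and the 0.5 m/s threshold are hypothetical.

```python
def remove_low_speed_points(points, min_speed=0.5):
    """Keep points whose compensated radial speed (m/s) reaches the threshold."""
    return [p for p in points if abs(p["v_r_comp"]) >= min_speed]


# Made-up scan; the "source" field is only there to make the outcome readable
scan = [
    {"xy": (15.0, 6.0), "v_r_comp": 0.05, "source": "guardrail"},
    {"xy": (20.0, 0.5), "v_r_comp": -4.20, "source": "moving cyclist"},
    {"xy": (12.0, -2.0), "v_r_comp": 0.10, "source": "parked car"},
]
moving = remove_low_speed_points(scan)
```

In this example the guardrail point is removed as intended, but so is the point from the parked car, which illustrates the trade-off for stationary targets.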

Overall, GGIW-PMBM failed to achieve good performance on real 4D imaging radar point clouds, mainly because, without the additional information provided by an object detector, it is difficult for the algorithm to determine the category of the tracking results heuristically or to distinguish target point clouds from background clutter.

2) Performance of SMURF GNN-PMB and SMURF GGIW-PMBM

Both SMURF GNN-PMB and SMURF GGIW-PMBM use information from the object detector. Experimental results show that the former performs significantly better than the latter on the Car category, mainly because the latter has lower localization accuracy for Car targets. The main cause is the error in modeling the point cloud distribution. As shown in the figure below, for vehicle targets the radar points tend to cluster on the side closer to the radar sensor. This is inconsistent with the GGIW model's assumption that measurement points are uniformly distributed on the target surface, and it causes the target position and shape estimated by SMURF GGIW-PMBM to deviate from the true values. Therefore, when tracking large targets such as vehicles, a more accurate target measurement model, such as a Gaussian process, may help the TBD-EOT framework achieve better performance, although it may also increase the computational complexity of the algorithm.

We also observed that the performance gap between SMURF GGIW-PMBM and SMURF GNN-PMB narrows on the Cyclist category, and that the HOTA of the former is even better than that of the latter on the Pedestrian category. In addition, SMURF GGIW-PMBM has fewer IDS on the Pedestrian and Cyclist categories. Possible causes include the following: first, GGIW-PMBM adaptively calculates the detection probability of a target from the estimated GGIW density; second, when calculating the likelihood of an association hypothesis, GGIW-PMBM considers not only the target position but also the number and spatial distribution of the target's measurement points. For small targets such as Pedestrian and Cyclist, the radar points are more evenly distributed on the target surface, which is more consistent with the GGIW model assumptions. SMURF GGIW-PMBM can therefore use information from the point cloud to estimate the detection probability and association hypothesis likelihood more accurately, reducing track interruptions and association errors and improving localization, association and ID maintenance.

4. Conclusion

This article compares the performance of the POT and EOT frameworks in online 3D MOT on 4D imaging radar point clouds. We evaluate the tracking performance of three frameworks, TBD-POT, JDT-EOT and TBD-EOT, on the Car, Pedestrian and Cyclist categories of the VoD and TJ4DRadSet datasets. The results show that the traditional TBD-POT framework is still effective: its implementation, SMURF GNN-PMB, performs best on the Car and Cyclist categories. The JDT-EOT framework, however, cannot effectively remove clutter measurements, which leads to too many measurement partition hypotheses and makes the performance of GGIW-PMBM unsatisfactory. Under the proposed TBD-EOT framework, SMURF GGIW-PMBM achieves the best association and localization accuracy on the Pedestrian category and reliable ID estimation on the Pedestrian and Cyclist categories, demonstrating the potential to surpass the TBD-POT framework. However, SMURF GGIW-PMBM cannot effectively model non-uniformly distributed radar point clouds, resulting in poor tracking performance for vehicle targets. Future research is therefore needed on extended object models that are more realistic yet have low computational complexity.

Original link: https://mp.weixin.qq.com/s/ZizQlEkMQnlKWclZ8Q3iog
