Practical application of Kuaishou content cold start recommendation model

1. What problems does Kuaishou’s content cold start solve?

First, let’s take a look at the problems that Kuaishou’s cold start solves.

In the short term, the platform must first let more new videos receive traffic, that is, make sure they can be distributed at all, and that traffic must also be spent efficiently. In the long term, we want to discover more high-potential new videos, bring fresh content into the popular pool, and alleviate the Matthew effect in the ecosystem. This provides more high-quality content, improves the user experience, and increases usage duration and DAU.

Cold start also gives UGC authors interaction feedback that acts as an incentive, which helps retain creators. The process has two constraints. First, the overall exploration cost and traffic cost should stay roughly stable at the platform level. Second, we only intervene in the distribution of new videos in the low-VV (video view) stage. Under these constraints, how do we maximize the overall benefit?

The cold-start distribution of a video strongly affects its room for growth. When a video is distributed to people whose interests do not match it, there are two consequences. First, the author's growth suffers: without effective interaction feedback over time, the author may change the direction of their content or lose the willingness to post. Second, because the early traffic converts poorly, the system judges the content to be of low quality, so in the long run it never receives enough traffic to grow.

If this continues, the ecosystem deteriorates. Take a video about local food as an example. It has a best-matching audience, group A, with the highest action rate. There may also be a completely unrelated group C; when the video is shown to this group, users are selective and the action rate may be extremely low. Finally, there is group B, users with very broad interests: this group brings a lot of traffic, but its overall action rate is low.

If we can reach core group A as early as possible and raise the content's early interaction rate, the video can leverage that into natural traffic. If instead too much early traffic goes to group C or group B, the overall action rate stays low and the video's growth is limited. In short, improving cold-start distribution efficiency is the most important lever for content growth. To iterate on cold-start efficiency, we define intermediate process indicators as well as long-term outcome indicators.

The process indicators fall into two parts. One is the consumption performance of new videos, mainly their traffic and action rates. The other is platform-level indicators, split into exploration, exploitation and ecology directions. The exploration direction ensures that high-quality new videos are not overlooked; we mainly track the growth in the number of videos with more than 0 exposures and with more than 100 exposures. The exploitation direction tracks the growth in the number of high-VV videos, that is, new videos that become popular and high quality. The ecology direction mainly tracks the user penetration rate of the popular pool. Because ecological impact is a long-term change, we ultimately use Combo experiments to observe long-term trends in core indicators, including app usage duration, author DAU and overall DAU.
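To make the exploration indicators concrete, here is a minimal sketch that counts new videos with more than 0 and more than 100 exposures. It assumes a plain list of per-video exposure counts; the function name `exploration_metrics` and its input format are hypothetical, not Kuaishou's reporting pipeline. Tracking growth then means comparing these counts between periods or experiment arms.

```python
from typing import Dict, List, Sequence


def exploration_metrics(exposures: List[int], thresholds: Sequence[int] = (0, 100)) -> Dict[str, int]:
    """Count new videos whose exposure count is strictly greater than each threshold.

    Hypothetical helper: `exposures` holds one exposure count per new video.
    """
    return {f"exposure_gt_{t}": sum(1 for e in exposures if e > t) for t in thresholds}


daily_exposures = [0, 3, 150, 0, 1200, 99]
print(exploration_metrics(daily_exposures))  # {'exposure_gt_0': 4, 'exposure_gt_100': 2}
```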

2. Challenges and Solutions of Cold Start Modeling

In general, content cold start has three main difficulties. First, the sample space of content cold start differs greatly from the real solution space. Second, cold-start samples are very sparse, which makes learning inaccurate and heavily biased, especially with respect to exposure bias. Third, modeling the growth value of a video is difficult, and this is a problem we are still working on. This article focuses on the first two.

1. The sample space is much smaller than the real solution space.

When optimizing content cold start, the fact that the sample space is smaller than the solution space is a prominent issue. In cold-start recommendation in particular, we need to raise the reach rate of indexed content so that more videos have the chance to be displayed.

We believe the key to solving this problem is to improve the reach and exposure efficiency of videos in the recall stage. To raise the recall reach rate of cold-start videos, common industry approaches are content-based: attribute-based inverted indexes, recall based on semantic similarity, two-tower recall models with generalization features, or a mapping between the behavior space and the content space, as in CB2CF.

Here we focus on two newer methods: a heterogeneous graph network enhanced by graph entropy, and an I2U-based Galaxy model. For technology selection, we first adopted a GNN as the base model for cold-start U2I retrieval. A GNN is an inductive learning method, so it handles new nodes well and offers more flexibility. In addition, a GNN can introduce more attribute nodes, which is an important way to improve the reachability of cold-start content. In practice, we introduce user nodes, author nodes and item nodes and aggregate information across them. After adding these generalized attribute nodes, the overall reach rate of new content improved substantially. However, overly general intermediate nodes, such as tag categories, make a video's receptive field insufficiently personalized and bring a risk of over-smoothing. In case reviews, we found that for users who like badminton videos, the existing GNN representation struggled to distinguish badminton videos from table tennis, football and other sports videos.

To address the over-generalization caused by injecting too much generalized information into the GNN, our main idea is to introduce finer-grained neighbor representations by adding semantic self-enhancing edges to the GNN. As shown in the figure at the lower right, for each unpopular video we find similar videos in the popular space and use them as the initial nodes that the cold-start video connects to. During aggregation, we construct and select self-enhancing edges according to the principle of graph entropy reduction. The selection criterion, given in the formula, takes into account the representations of both the connected neighbor node and the current node: the more similar two nodes are, the smaller their information entropy. The denominator represents the overall receptive field of the neighbor node, which can be understood as preferring neighbors with a stronger receptive field during selection.

In practice, we use two techniques. First, the feature domain of similar videos shares its embedding space with the item-id feature domain. Second, only popular videos are kept as self-enhancing nodes, which removes noise from nodes whose embeddings are insufficiently learned. With this upgrade, generalization is preserved while the model's personalization improves, bringing gains both offline and online.
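The edge construction described above can be sketched as follows: each cold-start video is linked to its k most similar popular videos by cosine similarity of semantic vectors, and only popular videos are allowed as targets. This is a minimal sketch under stated assumptions. Plain similarity ranking stands in for the graph-entropy criterion, whose exact formula is not reproduced in the text, and the embedding-sharing trick is not shown. The names `build_self_enhancing_edges` and `l2_normalize` are hypothetical.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Row-wise L2 normalisation with a guard against zero vectors."""
    return x / np.maximum(np.linalg.norm(x, axis=-1, keepdims=True), 1e-12)


def build_self_enhancing_edges(cold_sem, popular_sem, popular_ids, k=3):
    """Connect each cold-start video to its k most similar popular videos.

    cold_sem:    (n_cold, d) semantic vectors of new videos
    popular_sem: (n_pop, d) semantic vectors of popular videos only, so every
                 edge target is a well-learned node
    Returns (cold_index, popular_id, cosine_similarity) triples.
    """
    sims = l2_normalize(cold_sem) @ l2_normalize(popular_sem).T  # (n_cold, n_pop)
    k = min(k, popular_sem.shape[0])
    top = np.argsort(-sims, axis=1)[:, :k]  # best popular neighbours per cold video
    return [(i, popular_ids[j], float(sims[i, j])) for i in range(len(top)) for j in top[i]]


cold = np.array([[1.0, 0.0], [0.0, 1.0]])
popular = np.array([[0.9, 0.1], [0.1, 0.9], [-1.0, 0.0]])
edges = build_self_enhancing_edges(cold, popular, ["p1", "p2", "p3"], k=1)
print(edges)
```

Restricting targets to popular videos mirrors the second technique in the text: the cold node borrows signal only from neighbors whose embeddings are already well trained.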

The methods above improve content reach from the U2I perspective, but they cannot fundamentally solve the problem of videos being unreachable.

If we change perspective and look for the right audience from the item side, that is, switch to I2U, then in theory every video has room to gain traffic.

Concretely, we train an I2U retrieval service and use it to dynamically retrieve an interested audience for each video. From these I2U results we build a U2I inverted index in reverse, and when a user request arrives in real time, the corresponding item list is returned as the cold-start recommendation list.
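The reverse construction of the U2I index can be sketched as a simple inversion: each video's retrieved audience is turned into user-to-video entries, sorted by retrieval score, and truncated. This is a minimal sketch assuming the I2U results arrive as a dict of `video -> [(user, score)]`; the names `build_u2i_index` and `serve` are hypothetical, and a Python dict stands in for the online key-value store.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def build_u2i_index(
    i2u_results: Dict[str, List[Tuple[str, float]]], max_items_per_user: int = 50
) -> Dict[str, List[str]]:
    """Invert per-video audiences (video -> [(user, score)]) into per-user video lists."""
    per_user: Dict[str, List[Tuple[float, str]]] = defaultdict(list)
    for video_id, audience in i2u_results.items():
        for user_id, score in audience:
            per_user[user_id].append((score, video_id))
    # Highest retrieval score first; truncate to bound the list served per request.
    return {
        user_id: [vid for _, vid in sorted(cands, key=lambda c: -c[0])[:max_items_per_user]]
        for user_id, cands in per_user.items()
    }


def serve(index: Dict[str, List[str]], user_id: str) -> List[str]:
    """At request time the lookup is a plain key fetch."""
    return index.get(user_id, [])


index = build_u2i_index({"v1": [("u1", 0.9), ("u2", 0.4)], "v2": [("u1", 0.7)]})
print(serve(index, "u1"), serve(index, "u3"))
```

Doing the expensive retrieval offline per video keeps the online path to a single lookup per user request.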

The key is training the I2U retrieval service. Our first version is a two-tower model. To avoid over-concentration on a few users, we drop the uid and instead use the user's action list with self-attention, which effectively alleviates user concentration. To avoid the exposure bias that item-id learning introduces, we also drop the item-id and use more generalized features such as semantic vectors, categories, tags and AuthorID, which alleviates the aggregation effect of item-ids. On the user side, we introduce a debias loss together with in-batch negative sampling to further avoid user concentration.
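The loss side of this two-tower setup can be sketched with an in-batch softmax: each video is paired with its own user as the positive, and the other users in the batch act as negatives. The text does not specify its debias loss; as an assumption, this sketch uses the common logQ correction, which subtracts each user's log sampling probability so that frequently sampled, highly active users do not dominate. The function name and parameters are hypothetical, and the towers themselves (action list plus self-attention, semantic and tag features) are not shown.

```python
import numpy as np


def in_batch_softmax_loss(item_emb, user_emb, user_log_q, temperature=0.05):
    """I2U two-tower loss with in-batch negatives.

    Row i of item_emb is paired with row i of user_emb (the positive); the other
    users in the batch serve as negatives. user_log_q holds the log sampling
    probability of each user; subtracting it (logQ correction, an assumed choice
    of debias loss) reduces the advantage of frequently sampled users.
    """
    logits = item_emb @ user_emb.T / temperature           # (B, B) item-to-user scores
    logits = logits - user_log_q[None, :]                  # correct for user sampling frequency
    logits = logits - logits.max(axis=1, keepdims=True)    # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-np.mean(np.diag(log_probs)))             # positives sit on the diagonal


items = np.eye(2)
users = np.eye(2)
loss = in_batch_softmax_loss(items, users, np.zeros(2), temperature=1.0)
print(loss)
```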

In practice, the two-tower I2U model revealed several problems. First, the two-tower structure limits user-item interaction, so retrieval accuracy is limited. Second, interests are over-concentrated: the content mounted on a user tends to cover a very narrow range of interests, while users' real interests follow a multimodal distribution. Third, users are over-concentrated: most cold-start videos are mounted on a small set of top users. This is unreasonable, because the amount of content even top users can consume each day is limited.

To solve these three problems, the new solution uses TDM (tree-based deep model) modeling with hierarchical retrieval. First, TDM supports more complex user-item interaction and breaks through the interaction limits of the two-tower model. Second, it uses a DIN-like attention pattern that reduces reliance on a single interest. Finally, TDM's hierarchical retrieval effectively alleviates the concentration of mounted users.
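The hierarchical retrieval step can be sketched as a level-by-level beam search over a tree whose leaves are users: at each level, the children of the current beam are scored against the video query and only the best few are expanded. This is a minimal sketch assuming a dict-based tree; a dot product stands in for TDM's learned DIN-like scorer, and `tdm_beam_search` and its parameters are hypothetical.

```python
from typing import Dict, List

import numpy as np


def tdm_beam_search(query: np.ndarray, node_emb: Dict[str, np.ndarray],
                    children: Dict[str, List[str]], root: str, beam_size: int = 2) -> List[str]:
    """Top-down, level-by-level retrieval over a user tree.

    At each level the children of the current beam are scored against the item
    query and only the best `beam_size` survive; nodes without children
    (leaves, i.e. users) are carried over unchanged.
    """
    beam = [root]
    while any(children.get(n) for n in beam):
        candidates: List[str] = []
        for n in beam:
            candidates.extend(children.get(n) or [n])
        scores = np.array([float(np.dot(query, node_emb[c])) for c in candidates])
        keep = np.argsort(-scores)[:beam_size]
        beam = [candidates[i] for i in keep]
    return beam


children = {"root": ["a", "b"], "a": ["u1", "u2"], "b": ["u3", "u4"]}
node_emb = {name: np.array(vec) for name, vec in {
    "a": [1.0, 0.0], "b": [0.0, 1.0],
    "u1": [1.0, 0.0], "u2": [0.5, 0.0], "u3": [0.0, 1.0], "u4": [0.0, 0.2],
}.items()}
audience = tdm_beam_search(np.array([1.0, 0.1]), node_emb, children, "root", beam_size=2)
print(audience)
```

Because only `beam_size` nodes are scored per level, the cost grows with tree depth rather than with the total number of users, which is what makes a richer per-node scorer affordable.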

Another effective optimization is to add an aggregated representation of descendant nodes to each parent node, which improves the parent node's feature generalization and discrimination accuracy. Child nodes are aggregated into their parent through an attention mechanism, and by passing this up layer by layer, intermediate nodes also acquire some semantic generalization ability.
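The bottom-up aggregation can be sketched as a post-order pass: each internal node attends over its already enriched children and adds the weighted summary to its own embedding. This is a minimal sketch; using the parent's own vector as the attention query and adding (rather than concatenating) the summary are assumptions, and `attend_children` and `propagate_up` are hypothetical names.

```python
from typing import Dict, List, Optional

import numpy as np


def attend_children(parent: np.ndarray, kids: np.ndarray, temperature: float = 1.0) -> np.ndarray:
    """Softmax attention over child vectors, queried by the parent's own vector;
    the weighted child summary is added to the parent embedding."""
    scores = kids @ parent / temperature
    weights = np.exp(scores - scores.max())
    weights /= weights.sum()
    return parent + weights @ kids


def propagate_up(node_emb: Dict[str, np.ndarray], children: Dict[str, List[str]], node: str,
                 out: Optional[Dict[str, np.ndarray]] = None) -> Dict[str, np.ndarray]:
    """Post-order pass: enrich every internal node with its (already enriched) children."""
    out = {} if out is None else out
    kids = children.get(node, [])
    for k in kids:
        propagate_up(node_emb, children, k, out)
    if kids:
        out[node] = attend_children(node_emb[node], np.stack([out[k] for k in kids]))
    else:
        out[node] = node_emb[node]
    return out


emb = {"r": np.zeros(2), "a": np.array([1.0, 0.0]), "b": np.array([0.0, 1.0])}
enriched = propagate_up(emb, {"r": ["a", "b"]}, "r")
print(enriched["r"])
```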

In the final system, in addition to the I2U model, we also introduced a U2U interest expansion module: if some users respond well to a cold-start video, the video is quickly spread to similar users.

The approach is similar to some existing industry methods, but this U2U interest expansion module has three advantages. First, the TDM tree structure is relatively rigid, and the U2U module stays closer to users' real-time preferences. Second, by spreading real-time interests, content can be promoted quickly through user collaboration, beyond the limits of the model, which increases diversity. Finally, it improves the overall coverage of Galaxy recall. These are the main optimizations we made in practice.
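The U2U expansion can be sketched as follows: for each user who engaged positively with a cold-start video, the k most similar other users (by cosine similarity of user embeddings, above a threshold) get the video appended to their real-time candidate list. This is a minimal sketch assuming in-memory user embeddings; the function `u2u_expand`, its threshold `min_sim` and the candidate-list format are hypothetical.

```python
from typing import Dict, List, Sequence

import numpy as np


def u2u_expand(video_id: str, engaged_users: Sequence[str], user_emb: Dict[str, Sequence[float]],
               candidate_lists: Dict[str, List[str]], k: int = 2, min_sim: float = 0.5):
    """Spread a cold-start video from users who engaged with it to similar users.

    For each seed user, the k most similar non-seed users (cosine similarity of
    user embeddings, at least `min_sim`) get the video appended to their
    real-time candidate list.
    """
    ids = list(user_emb)
    mat = np.stack([np.asarray(user_emb[u], dtype=float) for u in ids])
    mat = mat / np.maximum(np.linalg.norm(mat, axis=1, keepdims=True), 1e-12)
    position = {u: i for i, u in enumerate(ids)}
    seeds = set(engaged_users)
    for seed in engaged_users:
        sims = mat @ mat[position[seed]]
        picked = 0
        for j in np.argsort(-sims):
            # Stop after k picks, or once similarity falls below the threshold
            # (candidates are visited in descending order of similarity).
            if picked == k or sims[j] < min_sim:
                break
            if ids[j] in seeds:
                continue
            targets = candidate_lists.setdefault(ids[j], [])
            if video_id not in targets:
                targets.append(video_id)
            picked += 1
    return candidate_lists


users = {"u1": [1.0, 0.0], "u2": [0.9, 0.1], "u3": [0.0, 1.0], "u4": [0.8, 0.3]}
lists = u2u_expand("v9", ["u1"], users, {}, k=2)
print(lists)
```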

With these solutions, we can effectively address the mismatch between the sample space and the real solution space in content cold start, significantly improving cold-start reach and coverage.

2. Sparse cold-start samples: inaccurate learning and large bias

Next, we turn to the biggest challenge: sparse cold-start samples lead to inaccurate learning and large bias. At its core, this is a problem of sparse interaction behavior, which we break down into three aspects.

First, because cold-start samples receive little exposure, item ids are insufficiently learned, which hurts recommendation quality and efficiency. Second, because early distribution is inaccurate, the collected labels are highly uncertain and have low confidence. Third, the current training paradigm injects popularity information into item embeddings without correction, so cold-start videos are underestimated and cannot be fully distributed.

We tackle this problem from four directions: generalization, transfer, exploration and correction. Generalization upgrades the model through generalized features. Transfer treats unpopular and popular videos as two domains and transfers information from the popular-video domain, or the full-data domain, to help learn unpopular videos. Exploration brings in the exploration-exploitation idea: since early labels are inaccurate, we build exploration into the model to reduce the harm of unreliable labels in the cold-start stage. Popularity correction is currently a hot topic; we mainly constrain the use of popularity information through gating and regularization losses.

The following is a detailed introduction to our work.

First, generalization is a very common approach to cold start. In practice, we found that semantic embeddings are also very useful compared with tags and categories, but adding semantic features directly to the model brings only limited gains. Since the video semantic space and the behavior space differ, can we use shared semantic information to approximate a new video's position in the behavior space and thus aid generalization? Methods mentioned earlier, such as CB2CF, learn a mapping from generalized information into the real behavior space. Instead of following that approach, we use the video's semantic vector to find a list of items similar to the target item. These similar items share the behavior space with the user's long-term and short-term interest behaviors, and we aggregate them to simulate the candidate video's representation in the behavior space. This is similar to adding edges to similar candidates in the graph recall described earlier, and the effect is clear: offline AUC increased by 0.35 pp.
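The aggregation step can be sketched as a semantic nearest-neighbor lookup followed by a weighted average of the neighbors' item-id embeddings, which live in the same space as the user's behavior-sequence embeddings. This is a minimal sketch; the text does not say how the similar items are aggregated, so the similarity-weighted mean is an assumption, and `behavior_space_proxy` is a hypothetical name.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """L2 normalisation along the last axis with a guard against zero vectors."""
    return x / np.maximum(np.linalg.norm(x, axis=-1, keepdims=True), 1e-12)


def behavior_space_proxy(target_sem, item_sem, item_id_emb, k=3):
    """Approximate a new video's item-id (behaviour-space) embedding.

    1. Rank existing items by cosine similarity of semantic vectors.
    2. Take the k most similar items.
    3. Return the similarity-weighted mean of their item-id embeddings.
    """
    sims = l2_normalize(item_sem) @ l2_normalize(target_sem)
    top = np.argsort(-sims)[:k]
    weights = np.maximum(sims[top], 0.0)  # ignore negatively similar neighbours
    if weights.sum() == 0.0:
        return item_id_emb[top].mean(axis=0)
    return weights @ item_id_emb[top] / weights.sum()


proxy = behavior_space_proxy(
    np.array([1.0, 0.0]),
    np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]),
    np.array([[2.0, 0.0], [0.0, 2.0], [5.0, 5.0]]),
    k=2,
)
print(proxy)
```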

The second direction is exploration. Inaccurate early distribution of a new video leads to a low posterior CTR mean, and this low mean makes the model believe the video is of poor quality, which ultimately limits how much cold-start content can be explored. Can we model the uncertainty of pCTR and reduce how fully the model exploits and trusts labels in the cold-start stage? We convert each request's CTR estimate into a Beta-distributed estimate and use both its expectation and variance online. Concretely, the model estimates the α and β parameters of a Beta distribution. The loss is the expected mean squared error between the estimate and the true label. Expanding this expectation shows that we need the expectation of the squared estimate and the expectation of the estimate, both of which can be computed from the estimated α and β; this gives the loss used to train the Beta distribution. The estimated Beta distribution is then used online to balance exploration and exploitation. Using the Beta loss in the low-VV stage improves AUC somewhat, though not dramatically. Online, however, the Beta distribution increases the effective penetration rate of 0-VV content by 22% while the overall action rate stays flat.
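The expanded loss can be written out directly. For p ~ Beta(α, β), E[p] = α/(α+β) and E[p²] = α(α+1)/((α+β)(α+β+1)), so E[(p − y)²] = E[p²] − 2y·E[p] + y². The sketch below evaluates this in NumPy; in training, α and β would come from the network (for example through a softplus) and a framework's autodiff would supply gradients. The online score, mean plus c standard deviations, is only one assumed way of using both moments; the text does not give Kuaishou's exact formula, and all function names are hypothetical.

```python
import numpy as np


def beta_moments(alpha, beta):
    """First and second raw moments of p ~ Beta(alpha, beta)."""
    s = alpha + beta
    mean = alpha / s                                  # E[p]
    second = alpha * (alpha + 1) / (s * (s + 1))      # E[p^2] = Var[p] + E[p]^2
    return mean, second


def beta_mse_loss(alpha, beta, label):
    """Expected squared error E[(p - y)^2] = E[p^2] - 2*y*E[p] + y^2, batch-averaged."""
    mean, second = beta_moments(np.asarray(alpha, float), np.asarray(beta, float))
    y = np.asarray(label, float)
    return float(np.mean(second - 2.0 * y * mean + y ** 2))


def optimistic_score(alpha, beta, c=1.0):
    """Mean plus c standard deviations: one assumed way to use both moments online."""
    mean, second = beta_moments(np.asarray(alpha, float), np.asarray(beta, float))
    return mean + c * np.sqrt(np.maximum(second - mean ** 2, 0.0))


print(beta_mse_loss([2.0], [2.0], [1.0]))
print(optimistic_score(1.0, 1.0), optimistic_score(10.0, 10.0))
```

Both Beta(1, 1) and Beta(10, 10) have mean 0.5, but the first is far less certain, so it receives the higher optimistic score; this is how a new video with few observations keeps some chance of being shown despite a low early posterior mean.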

The next piece is the dual-domain transfer learning framework. Cold-start content typically follows a highly skewed long-tail distribution and is the disadvantaged group under popularity bias. Training only on cold-start samples alleviates popularity bias to some extent, but it loses a great deal of user-interest information and lowers overall accuracy.

Most current attempts focus on learning from cold-start samples through undersampling of popular samples, inverse-frequency weighting or generalized features, but they often overlook an inherent commonality in the behavior space between early cold-start behavior samples and popular videos.

In our design, we split the samples into two domains, shown in the figure above as the full domain and the cold-start domain. The full domain covers all samples, while the cold-start domain covers only samples that meet cold-start conditions. We then add a module that transfers knowledge between the hot and cold domains. Users and items are modeled separately, and a network maps from the full-sample tower to the cold-start tower, capturing implicit data augmentation at the model level and improving the representation of cold-start videos. On the item side, we keep all cold-start samples and additionally sample some popular videos according to exposure, so that the hot and cold domain distributions stay similar and knowledge transfers smoothly through the mapping.

We also added a dual popularity gating mechanism that takes popularity features as input and controls the fusion ratio between the hot and cold video domains. On the item side, it learns how much to rely on the cold-start representation for new videos at different life-cycle stages. On the user side, it learns how sensitive users with different activity levels are to cold-start videos. In practice, offline AUC improved to some extent both in the low-VV stage and at 4000 VV.
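The mapping and gating can be sketched for one side (item or user) as follows: the full-domain representation is mapped into the cold domain by a linear layer, and a sigmoid gate driven by popularity features decides the per-sample blend with the cold-tower output. This is a minimal sketch under assumptions: a single linear map stands in for the mapping network, the gate direction (weight on the cold tower) is a guess, and all names are hypothetical. The "dual" mechanism would apply one such gate on the item side (video age, VV) and another on the user side (activity level).

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def fuse_hot_cold(full_repr, cold_repr, w_map, pop_features, w_gate, b_gate=0.0):
    """Blend cold-domain and transferred full-domain representations.

    full_repr:    (B, d) output of the full-sample tower
    cold_repr:    (B, d) output of the cold-start tower
    w_map:        (d, d) mapping from the full domain into the cold domain
    pop_features: (B, f) popularity features driving the gate
    """
    transferred = full_repr @ w_map                    # knowledge mapped across domains
    gate = sigmoid(pop_features @ w_gate + b_gate)     # (B,) share given to the cold tower
    return gate[:, None] * cold_repr + (1.0 - gate[:, None]) * transferred


fused = fuse_hot_cold(np.eye(2), np.zeros((2, 2)), np.eye(2), np.zeros((2, 1)), np.zeros(1))
print(fused)
```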

Finally, a piece of work on correction: popularity correction. Recommendation systems commonly suffer from popularity bias and tend to be dominated by viral hits. Existing models are trained to fit the global CTR. Recommending popular items can lower the overall loss, but it also injects popularity information into item embeddings, causing highly popular videos to be overestimated.

Some existing methods pursue unbiased estimation too aggressively, which actually causes consumption losses. A more reasonable approach may be to decouple item embeddings into popularity information and true interest information, and then fuse the two effectively online. In practice, we drew on approaches used by peers in the industry.

There are two main modules. The first applies an orthogonality constraint between the popularity and interest parts of the content: features such as item id and author id are encoded into two representations, a popularity representation and a true-interest representation, with a regularization constraint between them during training. The second generates an embedding of each item's pure popularity information as the video's pure popularity representation, and a similarity constraint ties the popularity representation to it. This yields one representation that captures popularity and one that captures interest. Online, the biased and unbiased estimates derived from these two representations are combined through multiplicative fusion.
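The two constraints and the online fusion can be sketched as follows: a squared-cosine penalty pushes the popularity and interest representations toward orthogonality, a cosine-alignment term ties the popularity representation to the pure-popularity embedding, and the final score multiplies the interest estimate by the popularity estimate raised to a tunable exponent. This is a minimal sketch; the specific loss forms and the exponent `gamma` are assumptions rather than the exact formulation used, and all names are hypothetical.

```python
import numpy as np


def cosine(a, b):
    """Row-wise cosine similarity."""
    num = np.sum(a * b, axis=-1)
    den = np.maximum(np.linalg.norm(a, axis=-1) * np.linalg.norm(b, axis=-1), 1e-12)
    return num / den


def decoupling_losses(pop_repr, interest_repr, pure_pop_repr):
    """Regularisers for splitting an item embedding into popularity and interest parts.

    ortho: squared cosine between the two parts (0 when they are orthogonal)
    align: 1 - cosine between the popularity part and a pure-popularity embedding
    """
    ortho = float(np.mean(cosine(pop_repr, interest_repr) ** 2))
    align = float(np.mean(1.0 - cosine(pop_repr, pure_pop_repr)))
    return ortho, align


def fused_score(interest_score, popularity_score, gamma=0.5):
    """Multiplicative online fusion; gamma sets how strongly popularity is used
    (gamma = 0 ranks purely by interest)."""
    return interest_score * popularity_score ** gamma


pop = np.array([[1.0, 0.0]])
interest = np.array([[0.0, 1.0]])
print(decoupling_losses(pop, interest, pop))
print(fused_score(0.2, 0.25))
```

Keeping `gamma` as a separate online knob is what allows the popularity signal to be used partially instead of being either fully trusted or fully removed.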

3. Future Outlook

Finally, let me share my outlook on future work.

First, we want to model and apply crowd diffusion more precisely, especially in real time, including diffusion to similar crowds. We have already implemented some similar-crowd diffusion in the cold-start phase, such as the U2U diffusion application, and we hope to refine it further.

The second is correction. Causal models are being actively studied for cold-start correction, and we will keep researching this direction, especially exposure correction and popularity correction. The third is sample selection. High-popularity samples still carry significant value for cold-start recommendation; can we select the more valuable samples from the high-popularity sample space and weight them differently to improve the efficiency of the cold-start model?

The fourth is characterizing a video's long-term growth value. Every video goes through a cycle of cold start, growth, stability and decline. When modeling videos, how can we pay more attention to long-term benefits, that is, growth potential and especially leverage? Modeling how much each individual distribution contributes to future growth is also a very interesting task.

The last is data augmentation. Whether through sample-level augmentation or contrastive learning, we hope to bring work in this area into cold-start recommendation to improve its efficiency.

4. Question and Answer Session

Q1: Online requests are made at user granularity. How are the users retrieved by I2U put into the online vector engine?

A1: Offline, the I2U model continuously retrieves the most similar users for each item from the index, converts the results into user-item pairs, aggregates them into an item list per user, and stores the lists in Redis for online use.

Q2: The flip side of cold start is keeping head content from overheating: when its share of samples is too high, recommendations become more and more concentrated. Are there any methods for this?

A2: Several methods were covered in the talk; fundamentally, we address this through generalization, exploration and correction. For example, we initialize item ids so they start from a better point, and introduce generalized features that are mapped into the behavioral semantic space. We use the Beta distribution to improve explorability, and introduce a pure content tower that removes strongly memorizing features such as pid, giving a purely generalized estimate without popularity bias. The correction work learns and constrains popularity factors separately during training, provides a pure interest estimate and a popularity estimate, and sets how strongly the popularity estimate is used online. Beyond these, we also alleviate the sparsity of cold-start content through data augmentation, and use popular content to help learn cold-start content from a transfer-learning perspective.

Q3: How is the high-quality rate of the popular pool calculated?

A3: The high-quality rate relies heavily on human judgment; we cannot evaluate it for a video entirely with a model. For example, once a piece of content reaches a certain level, such as 50,000 impressions, it is sent to human reviewers, who judge which videos are high quality.

The above is the detailed content of Practical application of Kuaishou content cold start recommendation model. For more information, please follow other related articles on the PHP Chinese website!

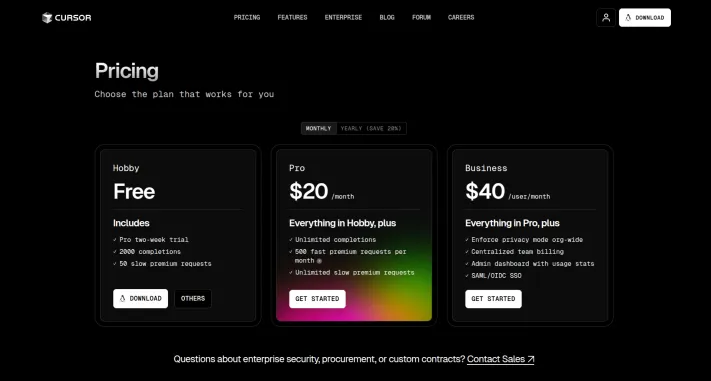

I Tried Vibe Coding with Cursor AI and It's Amazing!Mar 20, 2025 pm 03:34 PM

I Tried Vibe Coding with Cursor AI and It's Amazing!Mar 20, 2025 pm 03:34 PMVibe coding is reshaping the world of software development by letting us create applications using natural language instead of endless lines of code. Inspired by visionaries like Andrej Karpathy, this innovative approach lets dev

How to Use DALL-E 3: Tips, Examples, and FeaturesMar 09, 2025 pm 01:00 PM

How to Use DALL-E 3: Tips, Examples, and FeaturesMar 09, 2025 pm 01:00 PMDALL-E 3: A Generative AI Image Creation Tool Generative AI is revolutionizing content creation, and DALL-E 3, OpenAI's latest image generation model, is at the forefront. Released in October 2023, it builds upon its predecessors, DALL-E and DALL-E 2

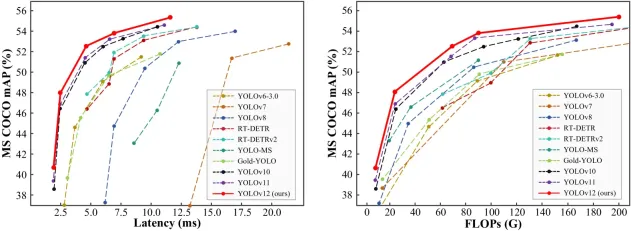

How to Use YOLO v12 for Object Detection?Mar 22, 2025 am 11:07 AM

How to Use YOLO v12 for Object Detection?Mar 22, 2025 am 11:07 AMYOLO (You Only Look Once) has been a leading real-time object detection framework, with each iteration improving upon the previous versions. The latest version YOLO v12 introduces advancements that significantly enhance accuracy

Top 5 GenAI Launches of February 2025: GPT-4.5, Grok-3 & More!Mar 22, 2025 am 10:58 AM

Top 5 GenAI Launches of February 2025: GPT-4.5, Grok-3 & More!Mar 22, 2025 am 10:58 AMFebruary 2025 has been yet another game-changing month for generative AI, bringing us some of the most anticipated model upgrades and groundbreaking new features. From xAI’s Grok 3 and Anthropic’s Claude 3.7 Sonnet, to OpenAI’s G

Elon Musk & Sam Altman Clash over $500 Billion Stargate ProjectMar 08, 2025 am 11:15 AM

Elon Musk & Sam Altman Clash over $500 Billion Stargate ProjectMar 08, 2025 am 11:15 AMThe $500 billion Stargate AI project, backed by tech giants like OpenAI, SoftBank, Oracle, and Nvidia, and supported by the U.S. government, aims to solidify American AI leadership. This ambitious undertaking promises a future shaped by AI advanceme

Sora vs Veo 2: Which One Creates More Realistic Videos?Mar 10, 2025 pm 12:22 PM

Sora vs Veo 2: Which One Creates More Realistic Videos?Mar 10, 2025 pm 12:22 PMGoogle's Veo 2 and OpenAI's Sora: Which AI video generator reigns supreme? Both platforms generate impressive AI videos, but their strengths lie in different areas. This comparison, using various prompts, reveals which tool best suits your needs. T

Google's GenCast: Weather Forecasting With GenCast Mini DemoMar 16, 2025 pm 01:46 PM

Google's GenCast: Weather Forecasting With GenCast Mini DemoMar 16, 2025 pm 01:46 PMGoogle DeepMind's GenCast: A Revolutionary AI for Weather Forecasting Weather forecasting has undergone a dramatic transformation, moving from rudimentary observations to sophisticated AI-powered predictions. Google DeepMind's GenCast, a groundbreak

Which AI is better than ChatGPT?Mar 18, 2025 pm 06:05 PM

Which AI is better than ChatGPT?Mar 18, 2025 pm 06:05 PMThe article discusses AI models surpassing ChatGPT, like LaMDA, LLaMA, and Grok, highlighting their advantages in accuracy, understanding, and industry impact.(159 characters)

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

WebStorm Mac version

Useful JavaScript development tools

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.