GPT-4's human-like reasoning gets a major boost! The Chinese Academy of Sciences proposes 'Thought Propagation': analogical thinking goes beyond CoT and works plug-and-play

- Reposted by PHPz

- 2023-10-11 13:49:01

Giant neural network models such as GPT-4 and PaLM have emerged in recent years, and they have demonstrated impressive few-shot learning capabilities.

Given only simple prompts, they can reason over text, write stories, answer questions, write code...

Researchers from the Chinese Academy of Sciences and Yale University have proposed a new framework called "Thought Propagation" (TP), which aims to enhance the reasoning ability of LLMs through "analogical thinking".

Paper address: https://arxiv.org/abs/2310.03965

Thought Propagation is inspired by human cognition: when we encounter a new problem, we often compare it to similar problems we have already solved in order to derive a strategy.

Therefore, the key to this approach is to explore "analogous" problems related to the input before solving the input problem itself.

The solutions to those analogous problems can then be reused directly, or insights can be extracted from them to form a useful plan.

Thought Propagation thus offers a new way around the inherent limitations of LLMs' logical capabilities, allowing large models to solve problems by analogy, as humans do.

LLM multi-step reasoning falls short of humans

LLMs are good at basic reasoning from prompts, but they still struggle with complex multi-step problems such as optimization and planning.

On the other hand, humans will draw on intuition from similar experiences to solve new problems.

Large models cannot do this because of their inherent limitations.

Because an LLM's knowledge comes entirely from patterns in its training data, it does not truly understand language or concepts. As statistical models, LLMs therefore struggle with complex compositional generalization.

Most importantly, LLMs lack systematic reasoning capabilities and cannot reason step by step as humans do to solve challenging problems.

In addition, because the reasoning of large models is local and short-sighted, it is difficult for an LLM to find the optimal solution and to keep its reasoning consistent over a long horizon.

To sum up, the problems existing in large models in mathematical proof, strategic planning and logical reasoning can be mainly attributed to two core factors:

- Inability to reuse insights from previous experience.

Humans accumulate reusable knowledge and intuition from practice to help solve new problems. In contrast, an LLM approaches each problem "from scratch" and does not borrow from previous solutions.

- Compounding errors in multi-step reasoning.

Humans monitor their own chain of reasoning and revise earlier steps when necessary. Mistakes that an LLM makes early in its reasoning, however, are amplified because they steer all subsequent reasoning in the wrong direction.
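A rough back-of-the-envelope sketch of why early mistakes matter so much. It assumes, purely for illustration and not as a claim from the paper, that every reasoning step is correct independently with the same probability `p`; under that simplification an `n`-step chain is fully correct with probability `p ** n`. The function name is hypothetical.

```python
def chain_success_probability(p: float, n: int) -> float:
    """Probability that all n steps are correct, assuming independent steps.

    Illustrative simplification only: real reasoning steps are not independent.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be in [0, 1]")
    if n < 0:
        raise ValueError("n must be non-negative")
    return p ** n


if __name__ == "__main__":
    # Show how quickly a 95%-reliable step degrades over longer chains.
    for steps in (1, 5, 10, 20):
        print(steps, round(chain_success_probability(0.95, steps), 3))
```

Under this toy model, a step that is right 95% of the time yields a fully correct 10-step chain only about 60% of the time.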

These weaknesses seriously hinder the application of LLMs to complex challenges that require global optimization or long-term planning.

To address this, the researchers propose a new solution: Thought Propagation.

TP framework

Through analogical thinking, LLMs can reason more like humans

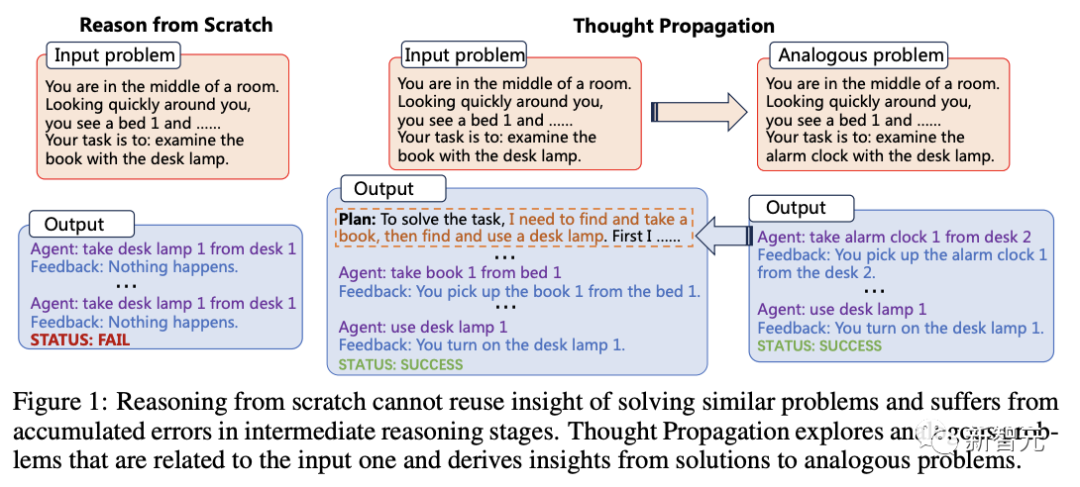

In the researchers' view, reasoning from scratch cannot reuse insights gained from solving similar problems, and errors accumulate during the intermediate reasoning stages.

Thought Propagation instead explores analogous problems related to the input problem and draws inspiration from their solutions.

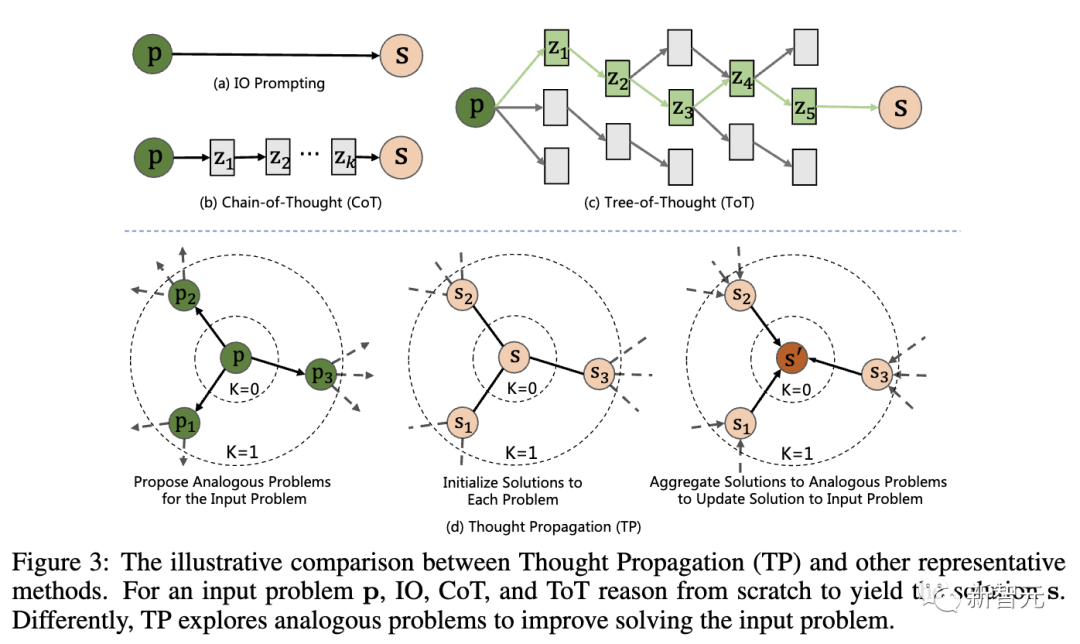

The figure compares Thought Propagation (TP) with other representative techniques. For an input problem p, IO, CoT and ToT all have to reason from scratch to arrive at the solution s.

Specifically, TP consists of three stages:

1. Propose analogous problems: the LLM is prompted to generate a set of problems that are analogous to the input problem. This guides the model to retrieve potentially relevant prior experience.

2. Solve analogous problems: the LLM solves each analogous problem with an existing prompting technique, such as CoT.

3. Aggregate solutions: there are two ways to do this. Either a new solution to the input problem is inferred directly from the analogous solutions, or a high-level plan or strategy is derived by comparing the analogous solutions with the input problem.

In this way, large models can leverage prior experience and heuristics, and their initial reasoning can be cross-checked against the analogous solutions and refined further.
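The three stages can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: the `LLM` callable, the function names and the prompt wording are all hypothetical stand-ins, and any real client that maps a prompt string to a completion string could be plugged in.

```python
from typing import Callable, List, Tuple

# Hypothetical interface: any function mapping a prompt string to a completion.
LLM = Callable[[str], str]


def propose_analogous_problems(llm: LLM, problem: str, k: int = 3) -> List[str]:
    """Stage 1: ask the model for up to k problems analogous to the input."""
    prompt = f"List {k} problems analogous to the problem below, one per line.\n{problem}"
    lines = [line.strip() for line in llm(prompt).splitlines()]
    return [line for line in lines if line][:k]


def solve(llm: LLM, problem: str) -> str:
    """Solve one problem with an existing prompting method (CoT-style here)."""
    return llm(f"Solve the following problem step by step.\n{problem}")


def thought_propagation(llm: LLM, problem: str, k: int = 3) -> str:
    """One round of TP: initial answer, analogous answers, then aggregation."""
    initial = solve(llm, problem)
    analogous = propose_analogous_problems(llm, problem, k)
    # Stage 2: solve every analogous problem independently.
    solved: List[Tuple[str, str]] = [(q, solve(llm, q)) for q in analogous]
    hints = "\n".join(f"Problem: {q}\nSolution: {s}" for q, s in solved)
    # Stage 3: cross-check the initial answer against the analogous solutions.
    prompt = (
        f"Input problem:\n{problem}\n"
        f"Initial solution:\n{initial}\n"
        f"Analogous problems and their solutions:\n{hints}\n"
        "Use these to give the best final solution to the input problem."
    )
    return llm(prompt)
```

In this sketch, `k` analogous problems cost `k + 3` model calls in total: one initial solve, one proposal call, `k` analogous solves and one aggregation call.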

It is worth mentioning that Thought Propagation is model-agnostic, and each individual problem-solving step can use any prompting method.

What makes the method distinctive is that it stimulates the LLM's analogical thinking to guide a complex reasoning process.

Whether Thought Propagation really makes LLMs reason more like humans is for the experimental results to show.

Researchers from the Chinese Academy of Sciences and Yale conducted the evaluation on 3 tasks:

- Shortest path reasoning: Finding the best path between nodes in a graph requires global planning and search. Even on simple graphs, standard techniques fail.

- Creative Writing: Generating a coherent, creative story is an open-ended challenge. When given high-level outline prompts, LLMs often lose consistency or logic.

- LLM agent planning: LLM agents that interact with textual environments struggle with long-term strategies. Their plans often "drift" or get stuck in cycles.

Shortest path reasoning

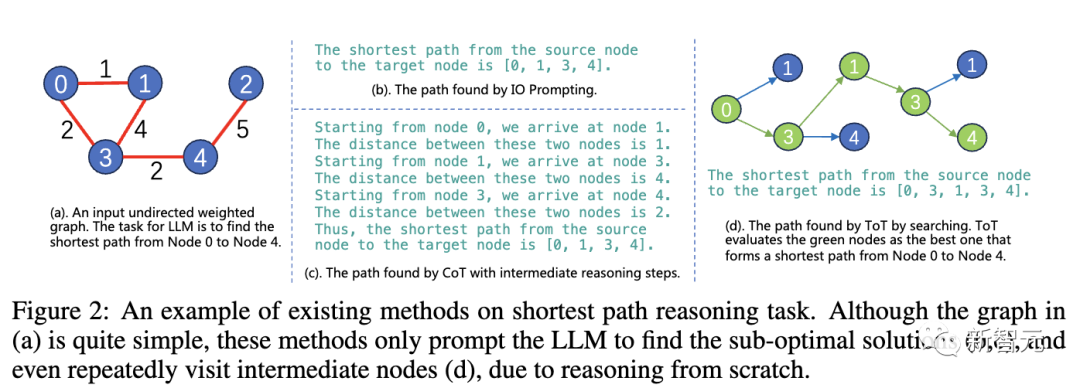

In the shortest path reasoning task, existing methods run into problems they cannot solve.

Although the graph in (a) is very simple, these methods reason from scratch, so the LLM only finds suboptimal solutions (b, c) or even repeatedly visits an intermediate node (d).
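To make these failure modes concrete, here is a small sketch that checks a path proposed by a model against a reference answer. It assumes an unweighted graph stored as an adjacency list and uses breadth-first search for the reference length; the graph, the labels and the function names are illustrative and are not taken from the paper.

```python
from collections import deque
from typing import Dict, List, Optional

Graph = Dict[int, List[int]]


def shortest_path_length(graph: Graph, source: int, target: int) -> Optional[int]:
    """Breadth-first search: number of edges on a shortest path, or None."""
    if source == target:
        return 0
    seen = {source}
    queue = deque([(source, 0)])
    while queue:
        node, dist = queue.popleft()
        for nxt in graph.get(node, []):
            if nxt == target:
                return dist + 1
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    return None


def classify_path(graph: Graph, path: List[int], source: int, target: int) -> str:
    """Label a proposed path as infeasible, revisiting, suboptimal or optimal."""
    if not path or path[0] != source or path[-1] != target:
        return "infeasible"
    if any(b not in graph.get(a, []) for a, b in zip(path, path[1:])):
        return "infeasible"  # uses an edge that does not exist
    if len(set(path)) < len(path):
        return "revisits a node"
    best = shortest_path_length(graph, source, target)
    return "optimal" if len(path) - 1 == best else "suboptimal"


# Hypothetical example graph (undirected, written as symmetric adjacency lists).
EXAMPLE_GRAPH: Graph = {0: [1, 2], 1: [0, 3], 2: [0, 3, 4], 3: [1, 2, 4], 4: [2, 3]}
```

On `EXAMPLE_GRAPH` with source 0 and target 4, the path `[0, 2, 4]` is classified as optimal, `[0, 1, 3, 4]` as suboptimal, and `[0, 2, 0, 2, 4]` as revisiting a node.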

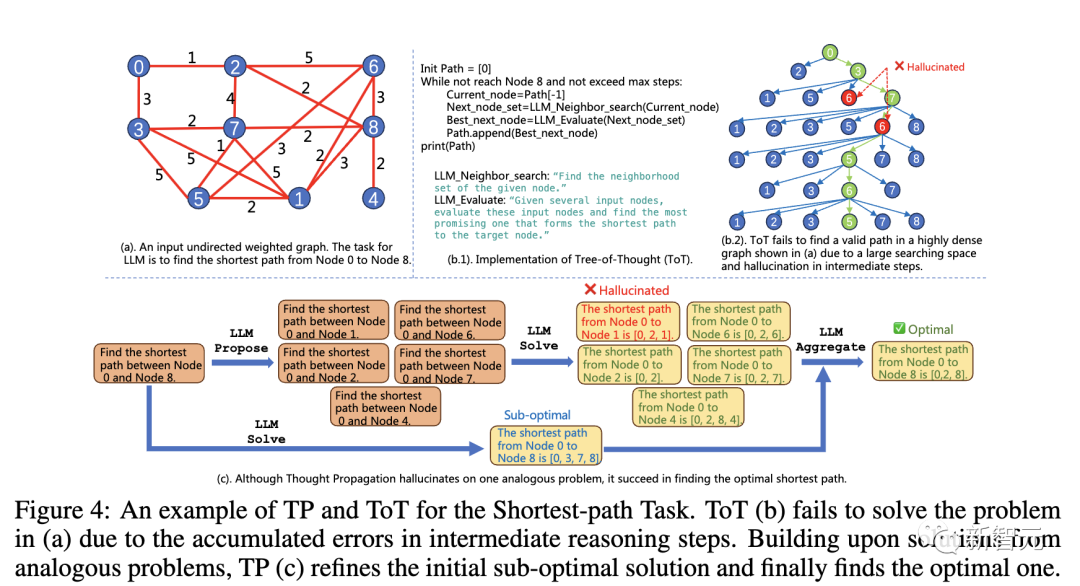

The following example combines TP with ToT.

Because errors accumulate in the intermediate reasoning steps, ToT (b) fails to solve the problem in (a). Drawing on solutions to analogous problems, TP (c) refines the initial suboptimal solution and eventually finds the optimal one.

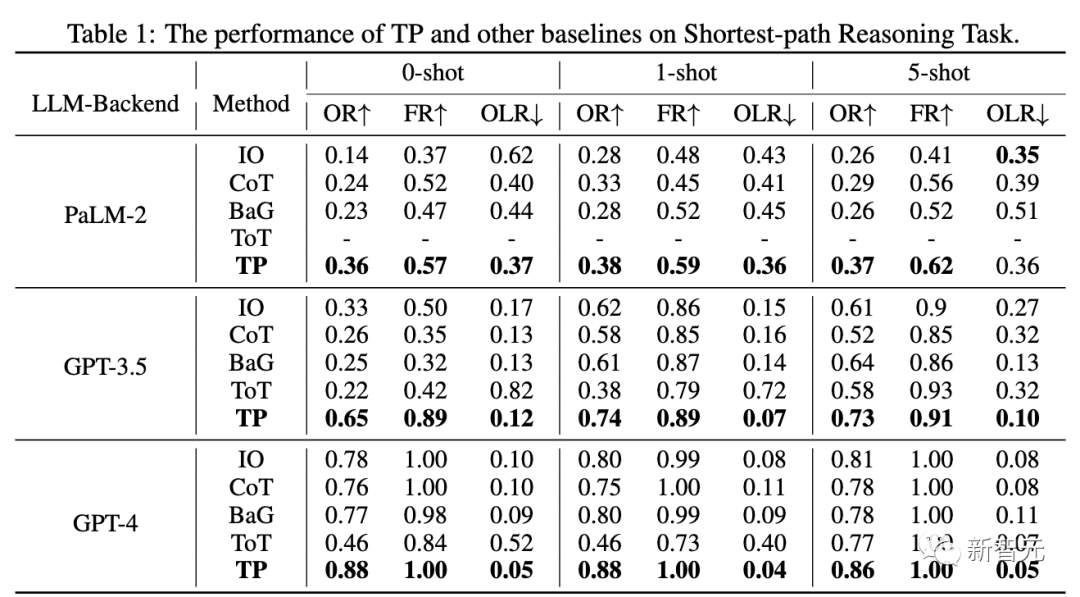

Compared with the baselines, TP improves performance on the shortest path task by a significant 12% in generating optimal and feasible shortest paths.

In addition, TP achieves the lowest over-length rate (OLR), which means the feasible paths it generates are closer to the optimal paths than those of the baselines.
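The sketch below shows one plausible way to compute such path-quality metrics. It rests on assumptions that should be checked against the paper: that OLR measures how much longer the feasible generated paths are than the optimal ones, averaged as a relative excess, and that the other two rates are the fractions of optimal and of feasible outputs. The function name and the input layout are hypothetical.

```python
from typing import Dict, List, Optional, Tuple


def shortest_path_metrics(results: List[Tuple[Optional[int], int]]) -> Dict[str, float]:
    """Summarise path quality over a batch of test cases.

    Each item is (generated_length, optimal_length); generated_length is None
    when the generated path is infeasible. Optimal lengths must be positive.
    """
    if not results:
        raise ValueError("results must not be empty")
    total = len(results)
    feasible = [(length, best) for length, best in results if length is not None]
    optimal_rate = sum(1 for length, best in feasible if length == best) / total
    feasible_rate = len(feasible) / total
    # Assumed definition: mean relative excess length over feasible paths only.
    if feasible:
        over_length = sum((length - best) / best for length, best in feasible) / len(feasible)
    else:
        over_length = 0.0
    return {
        "optimal_rate": optimal_rate,
        "feasible_rate": feasible_rate,
        "over_length_rate": over_length,
    }
```

Under this definition a lower over-length rate means the feasible paths sit closer to the optimum, which matches how the article reads the metric.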

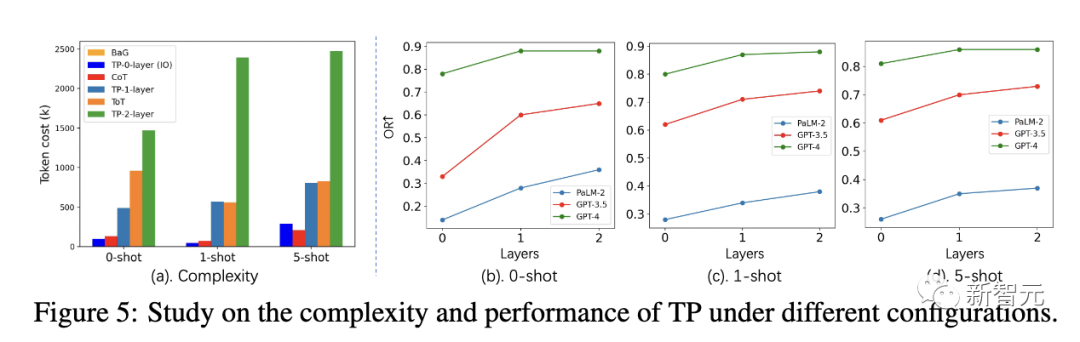

The researchers also studied how the number of TP layers affects complexity and performance on the shortest path task.

Across the different settings, the token cost of 1-layer TP is similar to that of ToT, yet 1-layer TP already achieves very competitive performance in finding the optimal shortest path.

Compared with 0-layer TP (IO), the performance gain of 1-layer TP is also substantial. Figure 5(a) shows the increase in token cost for 2-layer TP.
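A toy cost model helps show why adding layers gets expensive. This is an assumption-laden sketch rather than the paper's accounting: it counts model calls instead of tokens, treats 0-layer TP as a single IO call, and assumes each additional layer spends one call on an initial solve, one on proposing `k` analogous problems, one on aggregation, and solves each analogous problem with TP of one layer less.

```python
def llm_calls(layers: int, k: int) -> int:
    """Model calls needed by a hypothetical recursive TP with the given depth.

    layers == 0 is plain IO prompting (one call). Each extra layer adds an
    initial solve, a proposal call and an aggregation call, plus k recursive
    solves of the analogous problems.
    """
    if layers < 0:
        raise ValueError("layers must be non-negative")
    if k < 1:
        raise ValueError("k must be at least 1")
    if layers == 0:
        return 1
    return 3 + k * llm_calls(layers - 1, k)


if __name__ == "__main__":
    for depth in range(3):
        print(depth, llm_calls(depth, k=3))
```

With `k = 3` this model gives 1, 6 and 21 calls for 0, 1 and 2 layers, so cost grows roughly geometrically with depth while the article reports that most of the gain already arrives at 1 layer.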

Creative Writing

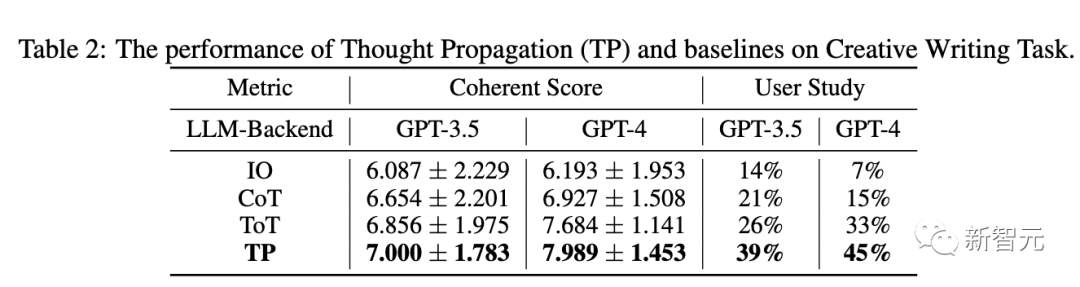

Table 2 shows the performance of TP and the baselines on GPT-3.5 and GPT-4. In terms of consistency, TP exceeds the baselines. Additionally, in user studies, TP increased human preference in creative writing by 13%.

LLM Agent Planning

In the third task evaluation, the researchers used the ALFWorld game suite, instantiating LLM agent planning tasks in 134 environments.

TP increases the task completion rate by 15% in LLM agent planning. This demonstrates the advantage of having TP reflect on successful planning from similar tasks.

According to the above experimental results, Thought Propagation can be applied to a variety of different reasoning tasks and performs well in all of them.

The key to enhancing LLM reasoning

Thought Propagation provides a new technique for complex LLM reasoning.

Analogical thinking is a hallmark of human problem solving, and it brings systematic advantages such as more efficient search and error correction.

Similarly, prompting an LLM to think analogically helps it overcome its own weaknesses, such as the lack of reusable knowledge and cascading local errors.

However, these findings have some limitations. Generating useful analogous problems and keeping reasoning paths concise is not easy. Longer chains of analogical reasoning can become lengthy and difficult to follow, and controlling and coordinating multi-step reasoning chains is also quite difficult.

Even so, Thought Propagation offers an interesting approach that creatively addresses the reasoning flaws of LLMs.

With further development, analogical thinking may make LLM reasoning even more powerful, and it points the way toward more human-like reasoning in large language models.

Author introduction

Ran He (赫然)

He is a professor at the National Laboratory of Pattern Recognition of the Institute of Automation, Chinese Academy of Sciences, and at the University of Chinese Academy of Sciences. He is also an IAPR Fellow and a senior member of IEEE.

He received his bachelor's and master's degrees from Dalian University of Technology and his PhD from the Institute of Automation, Chinese Academy of Sciences in 2009.

His research directions include biometric algorithms (face recognition and synthesis, iris recognition, person re-identification), representation learning (pre-training networks using weak/self-supervised or transfer learning), and generative learning (generative models, image generation, image translation).

He has published more than 200 papers in international journals and conferences, including well-known journals such as IEEE TPAMI, IEEE TIP, IEEE TIFS, IEEE TNN and IEEE TCSVT, as well as top conferences such as CVPR, ICCV, ECCV and NeurIPS.

He is a member of the editorial boards of IEEE TIP, IEEE TBIOM and Pattern Recognition, and has served as an area chair for international conferences including CVPR, ECCV, NeurIPS, ICML, ICPR and IJCAI.

Junchi Yu

Junchi Yu is a fourth-year doctoral student at the Institute of Automation, Chinese Academy of Sciences, supervised by Professor Ran He.

He previously interned at Tencent AI Lab, where he worked with Dr. Tingyang Xu, Dr. Yu Rong, Dr. Yatao Bian and Professor Junzhou Huang. He is now an exchange student in the Department of Computer Science at Yale University, studying under Professor Rex Ying.

His goal is to develop Trustworthy Graph Learning (TwGL) methods with good interpretability and portability, and to explore their applications in biochemistry.
