
Synthetic Amodal Perception Dataset AmodalSynthDrive: An Innovative Solution for Autonomous Driving

- 王林 (reposted)

- 2023-10-11 12:09:03

- Paper link: https://arxiv.org/pdf/2309.06547.pdf

- Dataset link: http://amodalsynthdrive.cs.uni-freiburg.de

Abstract

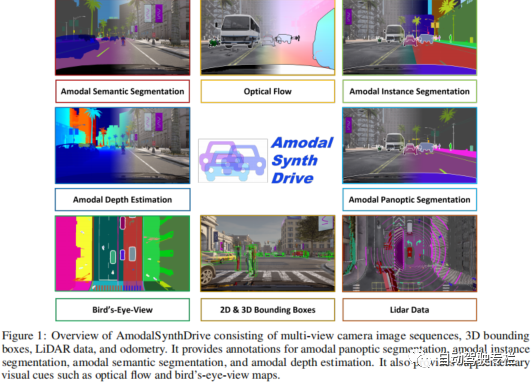

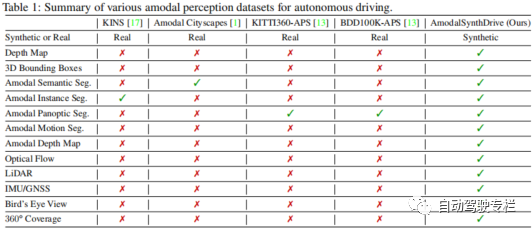

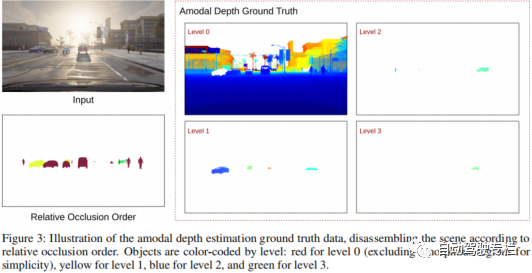

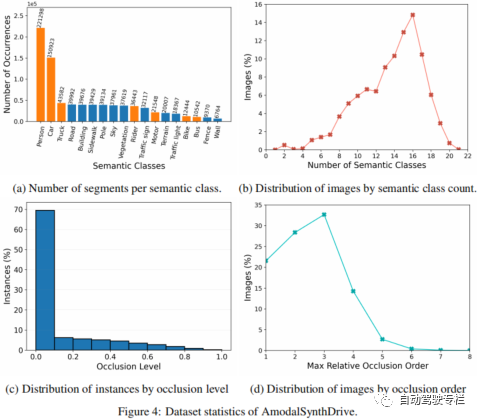

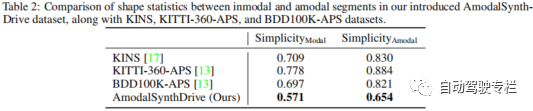

This article introduces AmodalSynthDrive, a synthetic amodal perception dataset for autonomous driving. Humans can effortlessly estimate the full extent of an object even when it is partially occluded, but modern computer vision algorithms still find this extremely challenging. Amodal perception for autonomous driving remains largely unexplored because suitable datasets are lacking. Building such datasets is hindered mainly by high annotation costs and by the need to limit annotator subjectivity when labeling occluded regions. To address these limitations, the paper introduces AmodalSynthDrive, a synthetic multi-task amodal perception dataset. The dataset provides multi-view camera images, 3D bounding boxes, lidar data, and odometry for 150 driving sequences, with over 1M object annotations under diverse traffic, weather, and lighting conditions. AmodalSynthDrive supports a variety of amodal scene understanding tasks, including a newly introduced amodal depth estimation task aimed at enhancing spatial understanding. The paper evaluates several baselines for each task to illustrate the challenges and sets up a public benchmark server.
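To make the distinction between visible and amodal annotations concrete, here is a minimal toy sketch. It is not the dataset's format or the paper's code: the pixel-set representation, the helper names (`rectangle`, `visible_masks`, `occlusion_ratio`), and the two-object scene are all assumptions chosen for illustration. An amodal mask covers the full extent of an object, while the visible mask is what remains after nearer objects are drawn on top.

```python
from typing import Dict, List, Set, Tuple

Pixel = Tuple[int, int]  # (row, col); hypothetical representation for this sketch


def rectangle(top: int, left: int, height: int, width: int) -> Set[Pixel]:
    """Return the set of pixels covered by an axis-aligned rectangle."""
    return {(r, c)
            for r in range(top, top + height)
            for c in range(left, left + width)}


def visible_masks(amodal: Dict[str, Set[Pixel]],
                  nearest_first: List[str]) -> Dict[str, Set[Pixel]]:
    """Derive visible masks from amodal masks and a front-to-back ordering."""
    covered: Set[Pixel] = set()
    visible: Dict[str, Set[Pixel]] = {}
    for name in nearest_first:
        # Whatever nearer objects already cover is hidden for this object.
        visible[name] = amodal[name] - covered
        covered |= amodal[name]
    return visible


def occlusion_ratio(amodal_mask: Set[Pixel], visible_mask: Set[Pixel]) -> float:
    """Fraction of the object's full extent that is hidden from view."""
    if not amodal_mask:
        return 0.0
    return 1.0 - len(visible_mask) / len(amodal_mask)


# Toy scene (assumed): a pedestrian standing in front of a parked car.
amodal = {
    "pedestrian": rectangle(top=2, left=4, height=4, width=2),
    "car": rectangle(top=3, left=1, height=3, width=6),
}
visible = visible_masks(amodal, nearest_first=["pedestrian", "car"])
car_occlusion = occlusion_ratio(amodal["car"], visible["car"])
print(f"car: {len(amodal['car'])} amodal px, "
      f"{len(visible['car'])} visible px, occlusion {car_occlusion:.2f}")
```

In a synthetic dataset the full extent of every object is known from the simulator, which is why amodal labels can be produced without the annotator guesswork the abstract describes.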

Main contributions

The contributions of this article are summarized as follows:

1) The paper proposes AmodalSynthDrive, a comprehensive synthetic amodal perception dataset for urban driving scenarios with multiple data sources;

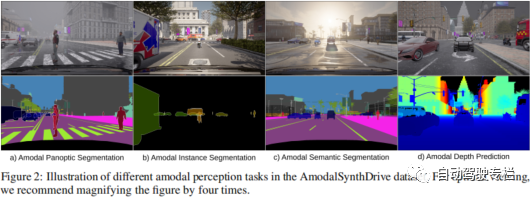

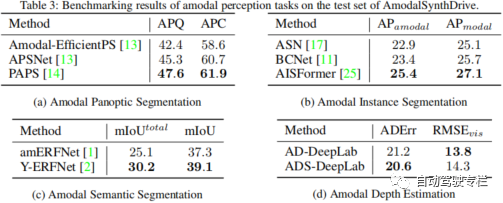

2) The paper proposes a benchmark for amodal perception tasks, including amodal semantic segmentation, amodal instance segmentation, and amodal panoptic segmentation;

3) A novel amodal depth estimation task is designed to facilitate enhanced spatial understanding. The paper demonstrates the feasibility of this new task through several baselines.
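The amodal depth task in the third contribution can be illustrated with a toy sketch. This is one plausible reading, not the paper's definition or code: the per-object depth layers, the helper names (`standard_depth`, `occluded_depth`), and the scene values are assumptions. A standard depth map stores only the nearest surface at each pixel, whereas an amodal view also keeps the depth of an object where it is hidden behind an occluder.

```python
from typing import Dict, Tuple

Pixel = Tuple[int, int]              # (row, col); hypothetical representation
DepthLayer = Dict[Pixel, float]      # depth in metres over an object's full extent


def standard_depth(layers: Dict[str, DepthLayer]) -> DepthLayer:
    """Conventional depth map: keep only the nearest surface per pixel."""
    depth: DepthLayer = {}
    for layer in layers.values():
        for pixel, d in layer.items():
            if pixel not in depth or d < depth[pixel]:
                depth[pixel] = d
    return depth


def occluded_depth(name: str, layers: Dict[str, DepthLayer]) -> DepthLayer:
    """Depth of one object at the pixels where something nearer hides it."""
    front = standard_depth(layers)
    return {p: d for p, d in layers[name].items() if front[p] < d}


# Toy scene (assumed): a car 12 m away, partly hidden by a pedestrian at 5 m.
layers = {
    "car": {(0, c): 12.0 for c in range(6)},
    "pedestrian": {(0, c): 5.0 for c in range(2, 4)},
}
depth = standard_depth(layers)
hidden = occluded_depth("car", layers)

# At pixel (0, 2) the standard map sees the pedestrian; the amodal layer
# still records how far away the car is behind it.
print(depth[(0, 2)], layers["car"][(0, 2)], sorted(hidden))
```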


Summary

Perception is a key task for autonomous vehicles, but current methods still lack the amodal understanding required to interpret complex traffic scenes. The paper therefore proposes AmodalSynthDrive, a multimodal synthetic amodal perception dataset for autonomous driving. Using synthetic images and lidar point clouds, it provides a comprehensive dataset with ground-truth annotations for the basic amodal perception tasks and introduces a new task, amodal depth estimation, to enhance spatial understanding. The dataset contains over 60,000 individual image sets, each with ground truth for amodal instance segmentation, amodal semantic segmentation, amodal panoptic segmentation, optical flow, 2D and 3D bounding boxes, amodal depth, and bird's-eye-view maps. The paper also provides various baselines, and the authors believe this work will pave the way for novel research on amodal scene understanding in dynamic urban environments.

Original link: https://mp.weixin.qq.com/s/7cXqFbMoljcs6dQOLU3SAQ
