Fears of out-of-control AI spark a sign-waving protest at Meta, while LeCun says the open-source AI community is booming

Opinion in the AI field has long been divided between open source and closed source. In the era of large models, however, open source has quietly become a powerful force. According to a previously leaked internal Google document, the open-source community is rapidly building models comparable to the large models of OpenAI and Google, including open-source models built around Meta's LLaMA.

There is no doubt that Meta sits at the core of the open-source world and has made continuous efforts to advance the cause of open source, most recently with the release of Llama 2. But as the saying goes, a tall tree catches the wind, and Meta has recently run into trouble precisely because of open source.

Outside Meta's San Francisco office, a group of protesters holding signs gathered to protest Meta's strategy of publicly releasing AI models, claiming that these releases cause the "irreversible proliferation" of potentially unsafe technology. Some protesters even compared the large models released by Meta to "weapons of mass destruction."

These protesters call themselves "concerned citizens" and are led by Holly Elmore. According to LinkedIn, she is an independent advocate for the AI Pause movement.

Image source: MISHA GUREVICH

She noted that when users can access a large model only through an API, as is the case with Google's and OpenAI's models, the API can be shut off if the model proves to be unsafe. In contrast, Meta's LLaMA series of open-source models makes the model weights available to the public, allowing anyone with the appropriate hardware and expertise to copy and fine-tune the model themselves. Once the weights are released, the publishing company no longer has any control over how the AI is used.

For Holly Elmore, releasing model weights is a dangerous strategy because anyone can modify the model, and those modifications cannot be undone. She believes that "the more powerful the model, the more dangerous this strategy is."

Compared with open-source models, large models accessed through APIs often come with various safety features, such as response filtering or specific training, to prevent dangerous or objectionable outputs.

With the model weights freely available, it becomes much easier to retrain the model to get around these "guardrails." This makes it more feasible to exploit open-source models to create phishing software and carry out cyberattacks.


She believes that part of the problem is "insufficient safety measures for model releases," and that a better way of ensuring model safety is needed.

Meta has not yet commented. However, Yann LeCun, Meta's chief AI scientist, appeared to respond to claims that "open-source AI must be outlawed" by highlighting the flourishing open-source AI startup community in Paris.

Many people disagree with Holly Elmore, arguing that an open strategy for AI development is the only way to ensure trust in the technology.

Some netizens said that open source has both pros and cons: it brings greater transparency and spurs innovation, but it also carries the risk of misuse (of the code, for example) by malicious actors.

As expected, OpenAI was once again mocked: "It should go back to being open source."

Many people are worried about open source

Peter S. Park, a postdoctoral fellow in AI safety at MIT, said that the widespread release of advanced AI models in the future will pose particular problems, because it is essentially impossible to prevent such models from being misused.

However, Stella Biderman, executive director of EleutherAI, a nonprofit AI research organization, said: "So far, there is little evidence that open-source models have caused any specific harm. It is unclear whether simply putting a model behind an API will solve the safety problem."

Biderman believes that "the basic ingredients for building an LLM have already been disclosed in freely available research papers, and anyone in the world can read them and develop their own model."

She also added: "Encouraging companies to keep model details secret may have serious adverse consequences for the transparency of research in the field, public awareness and scientific development, especially for independent researchers."

Although the impact of open source is widely debated, it is still unclear whether Meta's approach is truly open enough to reap the benefits of open source.

Stefano Maffulli, executive director of the Open Source Initiative (OSI), said: "The concept of open-source AI has not been properly defined. Different organizations use the term to mean different things, indicating varying degrees of 'publicly available stuff,' which can confuse people."

Maffulli pointed out that for open-source software, the key question is whether the source code is publicly available and can be reused for any purpose. Reproducing an AI model, however, may require sharing the training data, how that data was collected, the training software, the model weights, the inference code, and more. Above all, training data can raise privacy and copyright issues.

OSI has been working on a precise definition of "open-source AI" since last year and will very likely release an early draft in the coming weeks. Regardless, Maffulli believes open source is crucial to the development of AI. "We can't have trustworthy, responsible AI if AI is not open source," he said.

Going forward, the divide between open source and closed source will persist, but the rise of open source is unstoppable.

The above is the detailed content of The risk of out-of-control AI triggered a sign-raising protest at Meta, and LeCun said the open source AI community is booming. For more information, please follow other related articles on the PHP Chinese website!

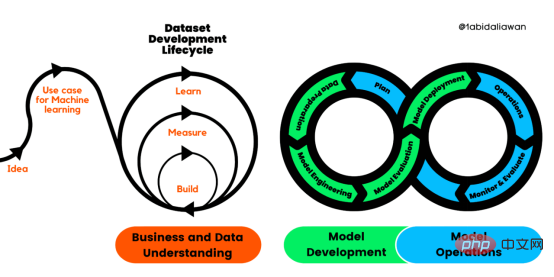

解读CRISP-ML(Q):机器学习生命周期流程Apr 08, 2023 pm 01:21 PM

解读CRISP-ML(Q):机器学习生命周期流程Apr 08, 2023 pm 01:21 PM译者 | 布加迪审校 | 孙淑娟目前,没有用于构建和管理机器学习(ML)应用程序的标准实践。机器学习项目组织得不好,缺乏可重复性,而且从长远来看容易彻底失败。因此,我们需要一套流程来帮助自己在整个机器学习生命周期中保持质量、可持续性、稳健性和成本管理。图1. 机器学习开发生命周期流程使用质量保证方法开发机器学习应用程序的跨行业标准流程(CRISP-ML(Q))是CRISP-DM的升级版,以确保机器学习产品的质量。CRISP-ML(Q)有六个单独的阶段:1. 业务和数据理解2. 数据准备3. 模型

人工智能的环境成本和承诺Apr 08, 2023 pm 04:31 PM

人工智能的环境成本和承诺Apr 08, 2023 pm 04:31 PM人工智能(AI)在流行文化和政治分析中经常以两种极端的形式出现。它要么代表着人类智慧与科技实力相结合的未来主义乌托邦的关键,要么是迈向反乌托邦式机器崛起的第一步。学者、企业家、甚至活动家在应用人工智能应对气候变化时都采用了同样的二元思维。科技行业对人工智能在创建一个新的技术乌托邦中所扮演的角色的单一关注,掩盖了人工智能可能加剧环境退化的方式,通常是直接伤害边缘人群的方式。为了在应对气候变化的过程中充分利用人工智能技术,同时承认其大量消耗能源,引领人工智能潮流的科技公司需要探索人工智能对环境影响的

找不到中文语音预训练模型?中文版 Wav2vec 2.0和HuBERT来了Apr 08, 2023 pm 06:21 PM

找不到中文语音预训练模型?中文版 Wav2vec 2.0和HuBERT来了Apr 08, 2023 pm 06:21 PMWav2vec 2.0 [1],HuBERT [2] 和 WavLM [3] 等语音预训练模型,通过在多达上万小时的无标注语音数据(如 Libri-light )上的自监督学习,显著提升了自动语音识别(Automatic Speech Recognition, ASR),语音合成(Text-to-speech, TTS)和语音转换(Voice Conversation,VC)等语音下游任务的性能。然而这些模型都没有公开的中文版本,不便于应用在中文语音研究场景。 WenetSpeech [4] 是

条形统计图用什么呈现数据Jan 20, 2021 pm 03:31 PM

条形统计图用什么呈现数据Jan 20, 2021 pm 03:31 PM条形统计图用“直条”呈现数据。条形统计图是用一个单位长度表示一定的数量,根据数量的多少画成长短不同的直条,然后把这些直条按一定的顺序排列起来;从条形统计图中很容易看出各种数量的多少。条形统计图分为:单式条形统计图和复式条形统计图,前者只表示1个项目的数据,后者可以同时表示多个项目的数据。

自动驾驶车道线检测分类的虚拟-真实域适应方法Apr 08, 2023 pm 02:31 PM

自动驾驶车道线检测分类的虚拟-真实域适应方法Apr 08, 2023 pm 02:31 PMarXiv论文“Sim-to-Real Domain Adaptation for Lane Detection and Classification in Autonomous Driving“,2022年5月,加拿大滑铁卢大学的工作。虽然自主驾驶的监督检测和分类框架需要大型标注数据集,但光照真实模拟环境生成的合成数据推动的无监督域适应(UDA,Unsupervised Domain Adaptation)方法则是低成本、耗时更少的解决方案。本文提出对抗性鉴别和生成(adversarial d

数据通信中的信道传输速率单位是bps,它表示什么Jan 18, 2021 pm 02:58 PM

数据通信中的信道传输速率单位是bps,它表示什么Jan 18, 2021 pm 02:58 PM数据通信中的信道传输速率单位是bps,它表示“位/秒”或“比特/秒”,即数据传输速率在数值上等于每秒钟传输构成数据代码的二进制比特数,也称“比特率”。比特率表示单位时间内传送比特的数目,用于衡量数字信息的传送速度;根据每帧图像存储时所占的比特数和传输比特率,可以计算数字图像信息传输的速度。

数据分析方法有哪几种Dec 15, 2020 am 09:48 AM

数据分析方法有哪几种Dec 15, 2020 am 09:48 AM数据分析方法有4种,分别是:1、趋势分析,趋势分析一般用于核心指标的长期跟踪;2、象限分析,可依据数据的不同,将各个比较主体划分到四个象限中;3、对比分析,分为横向对比和纵向对比;4、交叉分析,主要作用就是从多个维度细分数据。

聊一聊Python 实现数据的序列化操作Apr 12, 2023 am 09:31 AM

聊一聊Python 实现数据的序列化操作Apr 12, 2023 am 09:31 AM在日常开发中,对数据进行序列化和反序列化是常见的数据操作,Python提供了两个模块方便开发者实现数据的序列化操作,即 json 模块和 pickle 模块。这两个模块主要区别如下:json 是一个文本序列化格式,而 pickle 是一个二进制序列化格式;json 是我们可以直观阅读的,而 pickle 不可以;json 是可互操作的,在 Python 系统之外广泛使用,而 pickle 则是 Python 专用的;默认情况下,json 只能表示 Python 内置类型的子集,不能表示自定义的

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

WebStorm Mac version

Useful JavaScript development tools

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.

SublimeText3 Chinese version

Chinese version, very easy to use

Dreamweaver Mac version

Visual web development tools