Interpretability issues in machine learning models, with a code example

With the rapid development of machine learning and deep learning, black-box models such as deep neural networks and support vector machines are used in a growing number of applications. These models deliver strong predictive performance on a wide range of problems, but their internal decision-making processes are difficult to explain and understand. This raises the issue of the interpretability of machine learning models.

The interpretability of a machine learning model is the ability to explain, clearly and intuitively, the basis for the model's decisions and its reasoning process. In some applications we need not only the model's prediction but also the reason behind it. For example, in medical diagnosis, if a model predicts that a tumor is malignant, doctors need to know what that prediction is based on before planning further diagnosis and treatment.

However, the decision-making process of a black-box model is often highly complex and nonlinear, and its internal representations and parameters are hard to interpret. To address this problem, researchers have proposed a series of interpretable machine learning models and methods.

A common approach is to use inherently interpretable models such as linear models and decision trees. For example, a logistic regression model exposes how strongly each feature influences the result, and a decision tree explains a prediction through the path it takes down the tree. Although these models are interpretable, their limited expressive power makes them less capable on complex problems.
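To make the decision-tree point concrete, here is a minimal sketch using scikit-learn, the same library as the article's logistic regression example. It fits a shallow tree on the iris data, prints the learned rules with `export_text`, and traces the path one sample takes with `decision_path`. The depth limit of 3 and the random seed are illustrative assumptions, not settings from the article.

```python
# Sketch: a shallow decision tree on iris, shown as rules and as one sample's path.
# max_depth=3 and random_state=0 are illustrative choices, not from the article.
import numpy as np
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier, export_text

data = load_iris()
X, y = data.data, data.target

# Keep the tree shallow so its rule set stays small enough to read.
tree = DecisionTreeClassifier(max_depth=3, random_state=0).fit(X, y)

# The whole model rendered as nested if/else rules.
rules = export_text(tree, feature_names=list(data.feature_names))
print(rules)

# The decision path of one sample: node ids from the root down to a leaf.
sample = np.array([[5.1, 3.5, 1.4, 0.2]])
visited = tree.decision_path(sample).indices
leaf_id = tree.apply(sample)[0]
feature = tree.tree_.feature
threshold = tree.tree_.threshold

for node in visited:
    if node == leaf_id:
        continue  # leaves have no split test
    name = data.feature_names[feature[node]]
    value = sample[0, feature[node]]
    op = "<=" if value <= threshold[node] else ">"
    print(f"node {node}: {name} = {value} {op} {threshold[node]:.2f}")

predicted = data.target_names[tree.predict(sample)[0]]
print(f"reached leaf {leaf_id}, predicted class: {predicted}")
```

Each printed line is one test the sample passed on its way to the leaf, which is exactly the "decision path" explanation a tree offers.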

Another approach is to use heuristic rules or expert knowledge to interpret the model. For example, in image classification, visualization methods such as Gradient-weighted Class Activation Mapping (Grad-CAM) highlight the image regions the model relies on for a given class, which helps us understand its decision-making process. Although these methods provide useful explanations, they have limitations, and it is difficult for them to give a comprehensive and accurate explanation.
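Grad-CAM's core computation is small: each channel of a chosen convolutional layer is weighted by the spatial average of the class score's gradient with respect to that channel, and the ReLU of the weighted sum of feature maps gives the heatmap. The NumPy sketch below shows only that combination step. It assumes the activations and gradients have already been captured from a trained network (for example with framework hooks, not shown), and it omits upsampling the map to the input image size; the toy arrays are placeholder values.

```python
import numpy as np

def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Combine conv-layer feature maps and their gradients into a Grad-CAM map.

    activations: feature maps A^k of the chosen layer, shape (K, H, W)
    gradients:   d(score of target class) / dA^k, same shape
    Returns an (H, W) map scaled to [0, 1].
    """
    if activations.shape != gradients.shape:
        raise ValueError("activations and gradients must have the same shape")
    # Channel weights alpha_k: global average of the gradients over H and W.
    weights = gradients.mean(axis=(1, 2))  # shape (K,)
    # Weighted sum over channels, then ReLU keeps only positive evidence.
    cam = np.maximum(np.tensordot(weights, activations, axes=1), 0.0)  # (H, W)
    # Scale to [0, 1] for display; an all-zero map is returned unchanged.
    peak = cam.max()
    return cam / peak if peak > 0 else cam

# Toy example: two 2x2 feature maps (placeholder values, not from a real network).
activations = np.array([[[1.0, 0.0], [0.0, 0.0]],
                        [[0.0, 0.0], [0.0, 1.0]]])
gradients = np.array([[[1.0, 1.0], [1.0, 1.0]],        # channel 0 supports the class
                      [[-1.0, -1.0], [-1.0, -1.0]]])   # channel 1 counts against it
heatmap = grad_cam(activations, gradients)
print(heatmap)
```

In the toy case only the top-left cell survives: it is active in the channel whose gradients support the class, while the bottom-right cell is removed by the ReLU because its channel argues against the class.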

Beyond the methods above, several interpretability techniques have been proposed in recent years. For example, local interpretability methods explain individual predictions, using techniques such as local feature importance analysis and class discrimination analysis. Generative adversarial networks (GANs) can also be used to generate adversarial examples that probe a model's robustness and vulnerabilities, which in turn sheds light on how the model behaves.
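One simple form of local feature importance analysis is occlusion: replace one feature of a single input with a baseline value and measure how much the probability of the predicted class changes. The NumPy sketch below implements that idea for any callable that follows the shape contract of scikit-learn's `predict_proba`. It is a plain perturbation method, not a specific published technique such as LIME or SHAP, and the toy logistic model, its weights, and the all-zero baseline are hypothetical values chosen so the effect is easy to follow.

```python
import numpy as np

def local_importance(predict_proba, x, baseline):
    """Occlusion-style local importance for one input.

    For each feature j, x[j] is replaced by baseline[j] and the drop in the
    probability of the originally predicted class is recorded. Positive scores
    mean the feature supported the prediction; negative scores mean it opposed it.
    """
    x = np.asarray(x, dtype=float)
    p = predict_proba(x[None, :])[0]
    target = int(np.argmax(p))
    scores = np.empty(x.shape[0])
    for j in range(x.shape[0]):
        x_pert = x.copy()
        x_pert[j] = baseline[j]
        scores[j] = p[target] - predict_proba(x_pert[None, :])[0, target]
    return target, scores

# Hypothetical binary logistic model with hand-picked weights, for illustration only.
W = np.array([2.0, 0.0, -1.0])
B = 0.0

def toy_predict_proba(X):
    p1 = 1.0 / (1.0 + np.exp(-(X @ W + B)))
    return np.column_stack([1.0 - p1, p1])

target, scores = local_importance(toy_predict_proba, [1.0, 1.0, 1.0], np.zeros(3))
print("predicted class:", target)
print("local importance:", np.round(scores, 3))
```

For this input the model predicts class 1, and the scores come out at roughly 0.46 for feature 0, 0 for feature 1 (its weight is zero), and about -0.15 for feature 2, whose negative weight was pulling against the prediction. The choice of baseline matters: a training-set mean is a common alternative to zeros.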

The following code example shows an interpretable model in practice:

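```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression

# Load the iris dataset
data = load_iris()
X = data.data
y = data.target

# Train a logistic regression model
# (max_iter raised from the default of 100 to avoid lbfgs convergence warnings on unscaled iris data)
model = LogisticRegression(max_iter=1000)
model.fit(X, y)

# Output the feature weights: one row per class, one column per feature
feature_weights = model.coef_
print("Feature weights:", feature_weights)

# Output the model's class probabilities for one sample
sample = np.array([[5.1, 3.5, 1.4, 0.2]])
decision_prob = model.predict_proba(sample)
print("Sample decision probabilities:", decision_prob)
```

In this example, we train a logistic regression model on the iris dataset and output the feature weights and the model's class probabilities for one sample. Logistic regression is a highly interpretable model that classifies data with a linear function of the features. Because iris has three classes, `coef_` holds one weight vector per class, and each weight shows how strongly a feature pushes the score toward that class. All four features are measured in centimetres, so the weight magnitudes give a rough sense of relative influence, although standardizing the features first makes that comparison more reliable. The predicted probabilities show how confident the model is in each class for the given sample.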
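The class probabilities come directly from the weights. The sketch below, assuming scikit-learn 0.22 or later (where multiclass `LogisticRegression` with the default lbfgs solver fits a multinomial, i.e. softmax, model), splits each class score into per-feature contributions of the form weight × feature value, adds the intercept, and checks that a softmax over the class scores reproduces `predict_proba`. The setting `max_iter=1000` is an illustrative choice.

```python
# Sketch: decomposing LogisticRegression's iris prediction into per-feature terms.
# Assumes scikit-learn >= 0.22 (multinomial softmax model for multiclass lbfgs).
import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression

data = load_iris()
model = LogisticRegression(max_iter=1000).fit(data.data, data.target)
sample = np.array([[5.1, 3.5, 1.4, 0.2]])

# contributions[c, j] = weight of feature j for class c * value of feature j
contributions = model.coef_ * sample                   # shape (3, 4)
scores = contributions.sum(axis=1) + model.intercept_  # one linear score per class

# Softmax over the class scores (shifted by the max for numerical stability).
exp_scores = np.exp(scores - scores.max())
manual_prob = exp_scores / exp_scores.sum()

for cls, row in zip(data.target_names, contributions):
    terms = {name: round(float(v), 3) for name, v in zip(data.feature_names, row)}
    print(cls, terms)
print("manual softmax:     ", np.round(manual_prob, 4))
print("model.predict_proba:", np.round(model.predict_proba(sample)[0], 4))
```

Because the iris features are not standardized, a large contribution can reflect a large feature value as much as a large weight; scaling the features first (for example with `StandardScaler`) makes the per-feature terms easier to compare.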

This example shows how an interpretable model lets us see the basis for its decisions and analyze the importance of individual features. Such insight helps us understand how a model works internally and can guide efforts to make it more robust and reliable.

In summary, the interpretability of machine learning models is an important research field, and a range of interpretable models and methods already exists. In practice, we can choose a method suited to the problem at hand and make a model more transparent and trustworthy by explaining its decision-making process and the basis for its predictions. This helps us better understand and use the predictive capabilities of machine learning models and supports the broader development and adoption of artificial intelligence.

The above is the detailed content of Interpretability issues in machine learning models. For more information, please follow other related articles on the PHP Chinese website!

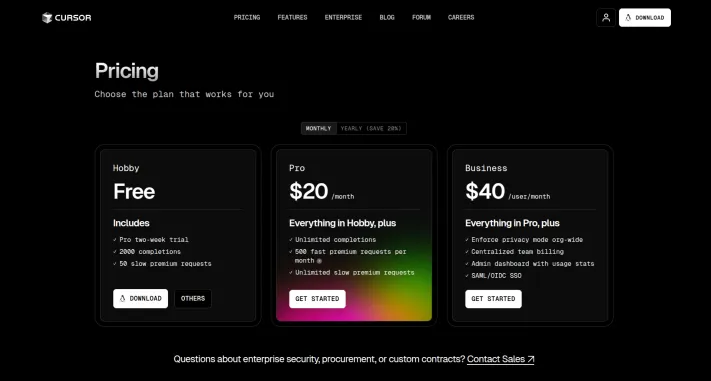

I Tried Vibe Coding with Cursor AI and It's Amazing!Mar 20, 2025 pm 03:34 PM

I Tried Vibe Coding with Cursor AI and It's Amazing!Mar 20, 2025 pm 03:34 PMVibe coding is reshaping the world of software development by letting us create applications using natural language instead of endless lines of code. Inspired by visionaries like Andrej Karpathy, this innovative approach lets dev

Replit Agent: A Guide With Practical ExamplesMar 04, 2025 am 10:52 AM

Replit Agent: A Guide With Practical ExamplesMar 04, 2025 am 10:52 AMRevolutionizing App Development: A Deep Dive into Replit Agent Tired of wrestling with complex development environments and obscure configuration files? Replit Agent aims to simplify the process of transforming ideas into functional apps. This AI-p

Top 5 GenAI Launches of February 2025: GPT-4.5, Grok-3 & More!Mar 22, 2025 am 10:58 AM

Top 5 GenAI Launches of February 2025: GPT-4.5, Grok-3 & More!Mar 22, 2025 am 10:58 AMFebruary 2025 has been yet another game-changing month for generative AI, bringing us some of the most anticipated model upgrades and groundbreaking new features. From xAI’s Grok 3 and Anthropic’s Claude 3.7 Sonnet, to OpenAI’s G

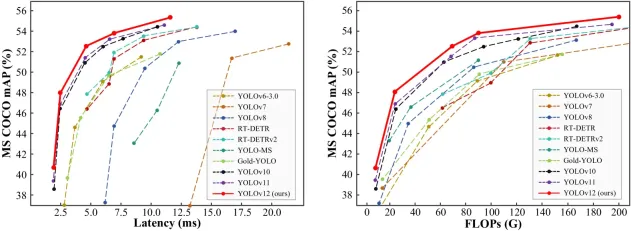

How to Use YOLO v12 for Object Detection?Mar 22, 2025 am 11:07 AM

How to Use YOLO v12 for Object Detection?Mar 22, 2025 am 11:07 AMYOLO (You Only Look Once) has been a leading real-time object detection framework, with each iteration improving upon the previous versions. The latest version YOLO v12 introduces advancements that significantly enhance accuracy

How to Use DALL-E 3: Tips, Examples, and FeaturesMar 09, 2025 pm 01:00 PM

How to Use DALL-E 3: Tips, Examples, and FeaturesMar 09, 2025 pm 01:00 PMDALL-E 3: A Generative AI Image Creation Tool Generative AI is revolutionizing content creation, and DALL-E 3, OpenAI's latest image generation model, is at the forefront. Released in October 2023, it builds upon its predecessors, DALL-E and DALL-E 2

Elon Musk & Sam Altman Clash over $500 Billion Stargate ProjectMar 08, 2025 am 11:15 AM

Elon Musk & Sam Altman Clash over $500 Billion Stargate ProjectMar 08, 2025 am 11:15 AMThe $500 billion Stargate AI project, backed by tech giants like OpenAI, SoftBank, Oracle, and Nvidia, and supported by the U.S. government, aims to solidify American AI leadership. This ambitious undertaking promises a future shaped by AI advanceme

5 Grok 3 Prompts that Can Make Your Work EasyMar 04, 2025 am 10:54 AM

5 Grok 3 Prompts that Can Make Your Work EasyMar 04, 2025 am 10:54 AMGrok 3 – Elon Musk and xAi’s latest AI model is the talk of the town these days. From Andrej Karpathy to tech influencers, everyone is talking about the capabilities of this new model. Initially, access was limited to

Google's GenCast: Weather Forecasting With GenCast Mini DemoMar 16, 2025 pm 01:46 PM

Google's GenCast: Weather Forecasting With GenCast Mini DemoMar 16, 2025 pm 01:46 PMGoogle DeepMind's GenCast: A Revolutionary AI for Weather Forecasting Weather forecasting has undergone a dramatic transformation, moving from rudimentary observations to sophisticated AI-powered predictions. Google DeepMind's GenCast, a groundbreak

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

Dreamweaver Mac version

Visual web development tools

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment

Atom editor mac version download

The most popular open source editor

SublimeText3 Linux new version

SublimeText3 Linux latest version