Reward design issues in reinforcement learning (with code examples)

Reinforcement learning is a machine learning method in which an agent learns, through interaction with its environment, how to take actions that maximize cumulative reward. The reward plays a crucial role: it is the signal that guides the agent's behavior during learning. Designing it is a challenging problem, however, and the way the reward is designed can greatly affect the performance of a reinforcement learning algorithm.

In reinforcement learning, the reward acts as a communication channel between the agent and the environment: it tells the agent how good or bad its current action was. Rewards are commonly divided into two types, sparse and dense. A sparse reward is given only at a few specific points in the task (for example, only when the goal is reached), while a dense reward provides a signal at every time step. Dense rewards usually make it easier for the agent to learn a correct policy, because they provide more feedback. However, sparse rewards are more common in real-world tasks, which makes reward design challenging.
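As a minimal sketch of the difference, consider a hypothetical one-dimensional corridor in which the agent starts at state 0 and must reach a goal state. The corridor, `GOAL`, and both reward functions are assumptions made purely for illustration:

```python
# Hypothetical 1-D corridor: states 0..GOAL, the agent starts at state 0.
GOAL = 5


def sparse_reward(state: int) -> float:
    """Sparse reward: a signal only when the goal is reached, zero elsewhere."""
    return 1.0 if state == GOAL else 0.0


def dense_reward(state: int) -> float:
    """Dense reward: a signal at every step, higher the closer we are to the goal."""
    return float(-abs(GOAL - state))


# Rewards observed along a trajectory that walks straight to the goal
trajectory = [0, 1, 2, 3, 4, 5]
print("sparse:", [sparse_reward(s) for s in trajectory])
print("dense: ", [dense_reward(s) for s in trajectory])
```

Along the same trajectory, the sparse reward stays at zero until the final step, while the dense reward changes at every step, so the agent can tell it is getting closer to the goal long before it arrives.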

The goal of reward design is to give the agent as accurate a feedback signal as possible, so that it can learn a good policy quickly and reliably. In most cases we want a reward function that gives a high reward when the agent reaches a predetermined goal, and a low reward or a penalty when it makes a wrong decision. Designing such a reward function well, however, is not an easy task.
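A reward function of the kind described above might look like the following sketch for a hypothetical grid world. The constants `GOAL_REWARD`, `WALL_PENALTY`, and `STEP_COST`, and treating a wall collision as the "wrong decision", are illustrative assumptions rather than recommended values:

```python
# Hypothetical grid-world reward; all names and values are illustrative.
GOAL_REWARD = 10.0   # high reward for reaching the predetermined goal
WALL_PENALTY = -1.0  # penalty for a "wrong decision", here walking into a wall
STEP_COST = -0.01    # small per-step cost that favors shorter paths


def reward(next_state, goal, hit_wall):
    """Return the reward for a single transition into next_state."""
    if next_state == goal:
        return GOAL_REWARD
    if hit_wall:
        return WALL_PENALTY
    return STEP_COST


goal = (3, 3)
print(reward((3, 3), goal, hit_wall=False))  # reached the goal
print(reward((0, 1), goal, hit_wall=True))   # bumped into a wall
print(reward((1, 1), goal, hit_wall=False))  # ordinary step
```

Most of the difficulty lies in choosing these magnitudes and in deciding which behaviors count as wrong; the numbers here are arbitrary.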

To address the reward design problem, a common approach is to use demonstrations from human experts to guide the agent's learning. The expert provides a set of example action sequences together with the rewards they received. The agent learns from these samples to become familiar with the task, and then keeps improving its policy through its own subsequent interactions. This method can work well, but it increases labor costs, and the expert's demonstrations may not be completely correct.
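One simple way to use such demonstrations, sketched below, is to estimate initial action values from the rewards the expert actually collected and then let the agent refine them through its own interaction (for example with Q-learning). The demonstration format (lists of `(state, action, reward)` tuples), the discount factor, and the helper name `q_from_demonstrations` are hypothetical:

```python
from collections import defaultdict

GAMMA = 0.9  # discount factor (assumed)


def q_from_demonstrations(demos, gamma=GAMMA):
    """Estimate Q(s, a) as the average discounted return observed after
    taking action a in state s across all expert demonstrations.

    Each demonstration is a list of (state, action, reward) tuples.
    """
    totals = defaultdict(float)
    counts = defaultdict(int)
    for demo in demos:
        g = 0.0
        # Walk the trajectory backwards to accumulate the discounted return
        for state, action, reward in reversed(demo):
            g = reward + gamma * g
            totals[(state, action)] += g
            counts[(state, action)] += 1
    return {sa: totals[sa] / counts[sa] for sa in totals}


# Two hypothetical demonstrations in a small corridor (reward 1 at the goal)
demos = [
    [(0, "right", 0.0), (1, "right", 0.0), (2, "right", 1.0)],
    [(1, "right", 0.0), (2, "right", 1.0)],
]
q_init = q_from_demonstrations(demos)
print(q_init)
```

Because these initial values come straight from the expert's trajectories, any mistakes in the demonstrations carry over into them, which is one reason the agent still needs to keep learning from its own experience.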

Another approach is to use inverse reinforcement learning (IRL) to address the reward design problem. IRL derives a reward function from observed behavior. It assumes that the demonstrator (for example, a human expert) is acting to maximize some unknown reward function. By recovering this latent reward function from the observed behavior, IRL can provide the agent with a more accurate reward signal. The core idea is to treat the observed behavior as an optimal policy, infer the reward function under which that policy would be optimal, and then use this reward to guide the agent's learning.
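The "treat observed behavior as optimal" idea can be made concrete for the common case of a reward that is linear in state features, r(s) = w · φ(s), as in apprenticeship-learning formulations of IRL. The sketch below only checks whether a candidate weight vector `w` makes the expert's trajectory score at least as well as a few alternative trajectories; a real IRL algorithm would search for such a `w` rather than test one by hand. The features, trajectories, and function names are hypothetical:

```python
import numpy as np

GAMMA = 0.9  # discount factor (assumed)


def feature_expectations(trajectory, gamma=GAMMA):
    """Discounted sum of the state feature vectors along one trajectory."""
    phis = np.asarray(trajectory, dtype=float)
    discounts = gamma ** np.arange(len(phis))
    return discounts @ phis


def expert_looks_optimal(w, expert_traj, other_trajs, gamma=GAMMA):
    """True if, under the linear reward r(s) = w . phi(s), the expert's
    trajectory earns at least as much discounted reward as every alternative."""
    expert_value = w @ feature_expectations(expert_traj, gamma)
    return all(expert_value >= w @ feature_expectations(t, gamma)
               for t in other_trajs)


# Hypothetical 2-D state features: [at_goal, in_hazard]
expert = [[0, 0], [0, 0], [1, 0]]
alternatives = [
    [[0, 0], [0, 1], [0, 1]],  # wanders into the hazard
    [[0, 0], [0, 0], [0, 0]],  # never reaches the goal
]

print(expert_looks_optimal(np.array([1.0, -1.0]), expert, alternatives))  # consistent reward
print(expert_looks_optimal(np.array([-1.0, 1.0]), expert, alternatives))  # inconsistent reward
```

A weight vector that rewards the goal and penalizes the hazard explains the expert's behavior as optimal, while the reversed weights do not; IRL looks for rewards of the first kind.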

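The following is a simple code example in the spirit of inverse reinforcement learning, showing how a reward function can be inferred from observed behavior. It is a deliberately simplified illustration rather than a complete IRL algorithm: it assumes the reward is linear in the state features and uses least squares so that states visited by the expert receive a higher reward than states visited by a non-expert policy. The non-expert states (`other_features`) are an assumption added for illustration:

```python
import numpy as np


def inverse_reinforcement_learning(expert_features, other_features):
    # Simplified linear-reward fit r(s) = w . phi(s) + b (not a full IRL algorithm):
    # states visited by the expert get target reward 1, other states get target 0.
    features = np.vstack([expert_features, other_features]).astype(float)
    targets = np.concatenate([np.ones(len(expert_features)),
                              np.zeros(len(other_features))])

    # Append a constant column so the fit can also learn a bias term b
    design = np.hstack([features, np.ones((len(features), 1))])

    # Solve for [w, b] with the least squares method
    solution, *_ = np.linalg.lstsq(design, targets, rcond=None)
    return solution[:-1], solution[-1]


# Example data: state feature vectors visited by the expert...
expert_features = np.array([[1, 1], [1, 2], [2, 1], [2, 2]])
# ...and state feature vectors visited by a non-expert (e.g. random) policy
other_features = np.array([[0, 0], [0, 1], [1, 0], [0, 2]])

# Use the fit to obtain the weight vector of the reward function
weights, bias = inverse_reinforcement_learning(expert_features, other_features)
print("Reward function weight vector:", weights, "bias:", bias)

# The learned reward can score any state feature vector
print("Reward of state [2, 2]:", np.array([2, 2]) @ weights + bias)
```

The code above uses the least squares method to fit the weight vector (and a bias) of a linear reward function, which can then be used to compute the reward of any state feature vector. Because it only contrasts expert states with non-expert states, it is a rough stand-in for IRL; full IRL methods also take the environment's dynamics and whole trajectories into account. It still shows the basic idea: a reasonable reward function can be learned from sample data and then used to guide the agent's learning process.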

In summary, reward design is an important and challenging issue in reinforcement learning, and it can greatly affect the performance of reinforcement learning algorithms. Methods such as learning from human expert demonstrations or inverse reinforcement learning can help address the reward design problem by providing the agent with more accurate reward signals to guide its learning process.

The above is the detailed content of Reward design issues in reinforcement learning. For more information, please follow other related articles on the PHP Chinese website!

强化学习中的奖励函数设计问题Oct 09, 2023 am 11:58 AM

强化学习中的奖励函数设计问题Oct 09, 2023 am 11:58 AM强化学习中的奖励函数设计问题引言强化学习是一种通过智能体与环境的交互来学习最优策略的方法。在强化学习中,奖励函数的设计对于智能体的学习效果至关重要。本文将探讨强化学习中的奖励函数设计问题,并提供具体代码示例。奖励函数的作用及目标奖励函数是强化学习中的重要组成部分,用于评估智能体在某一状态下所获得的奖励值。它的设计有助于引导智能体通过选择最优行动来最大化长期累

C++中的深度强化学习技术Aug 21, 2023 pm 11:33 PM

C++中的深度强化学习技术Aug 21, 2023 pm 11:33 PM深度强化学习技术是人工智能领域备受关注的一个分支,目前在赢得多个国际竞赛的同时也被广泛应用于个人助手、自动驾驶、游戏智能等领域。而在实现深度强化学习的过程中,C++作为一种高效、优秀的编程语言,在硬件资源有限的情况下尤其重要。深度强化学习,顾名思义,结合了深度学习和强化学习两个领域的技术。简单理解,深度学习是指通过构建多层神经网络,从数据中学习特征并进行决策

使用Panda-Gym的机器臂模拟实现Deep Q-learning强化学习Oct 31, 2023 pm 05:57 PM

使用Panda-Gym的机器臂模拟实现Deep Q-learning强化学习Oct 31, 2023 pm 05:57 PM强化学习(RL)是一种机器学习方法,它允许代理通过试错来学习如何在环境中表现。行为主体会因为采取行动导致预期结果而获得奖励或受到惩罚。随着时间的推移,代理会学会采取行动,以使得其预期回报最大化RL代理通常使用马尔可夫决策过程(MDP)进行训练,MDP是为顺序决策问题建模的数学框架。MDP由四个部分组成:状态:环境的可能状态的集合。动作:代理可以采取的一组动作。转换函数:在给定当前状态和动作的情况下,预测转换到新状态的概率的函数。奖励函数:为每次转换分配奖励给代理的函数。代理的目标是学习策略函数,

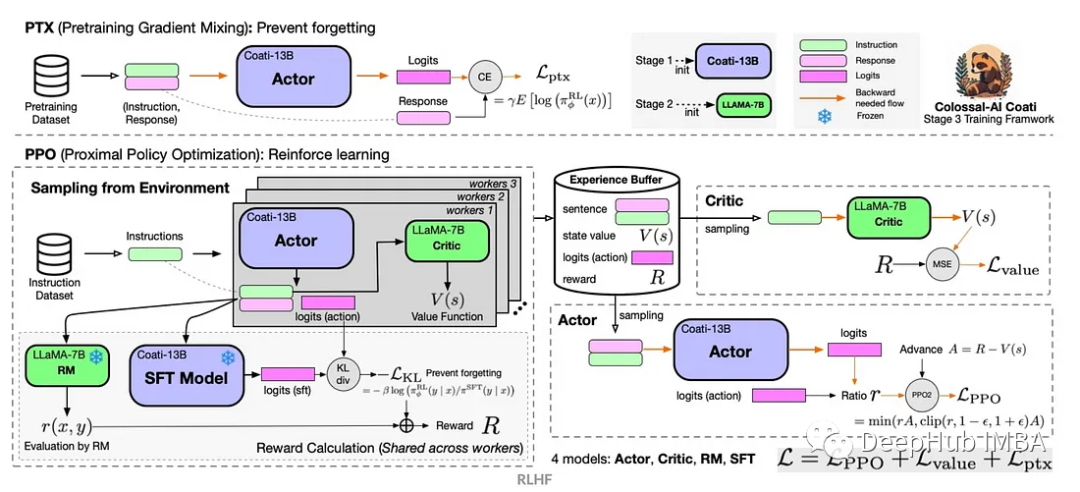

再掀强化学习变革!DeepMind提出「算法蒸馏」:可探索的预训练强化学习TransformerApr 12, 2023 pm 06:58 PM

再掀强化学习变革!DeepMind提出「算法蒸馏」:可探索的预训练强化学习TransformerApr 12, 2023 pm 06:58 PM在当下的序列建模任务上,Transformer可谓是最强大的神经网络架构,并且经过预训练的Transformer模型可以将prompt作为条件或上下文学习(in-context learning)适应不同的下游任务。大型预训练Transformer模型的泛化能力已经在多个领域得到验证,如文本补全、语言理解、图像生成等等。从去年开始,已经有相关工作证明,通过将离线强化学习(offline RL)视为一个序列预测问题,那么模型就可以从离线数据中学习策略。但目前的方法要么是从不包含学习的数据中学习策略

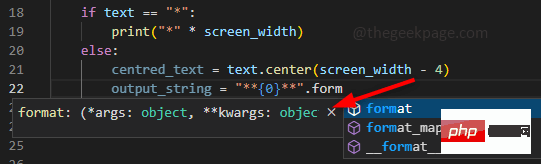

如何解决 VS Code 中 IntelliSense 不起作用的问题Apr 21, 2023 pm 07:31 PM

如何解决 VS Code 中 IntelliSense 不起作用的问题Apr 21, 2023 pm 07:31 PM最常称为VSCode的VisualStudioCode是开发人员用于编码的工具之一。Intellisense是VSCode中包含的一项功能,可让编码人员的生活变得轻松。它提供了编写代码的建议或工具提示。这是开发人员更喜欢的一种扩展。当IntelliSense不起作用时,习惯了它的人会发现很难编码。你是其中之一吗?如果是这样,请通过本文找到不同的解决方案来解决IntelliSense在VS代码中不起作用的问题。Intellisense如下所示。它在您编码时提供建议。首先检

如何使用 Go 语言进行深度强化学习研究?Jun 10, 2023 pm 02:15 PM

如何使用 Go 语言进行深度强化学习研究?Jun 10, 2023 pm 02:15 PM深度强化学习(DeepReinforcementLearning)是一种结合了深度学习和强化学习的先进技术,被广泛应用于语音识别、图像识别、自然语言处理等领域。Go语言作为一门快速、高效、可靠的编程语言,可以为深度强化学习研究提供帮助。本文将介绍如何使用Go语言进行深度强化学习研究。一、安装Go语言和相关库在开始使用Go语言进行深度强化学习

使用Actor-Critic的DDPG强化学习算法控制双关节机械臂May 12, 2023 pm 09:55 PM

使用Actor-Critic的DDPG强化学习算法控制双关节机械臂May 12, 2023 pm 09:55 PM在本文中,我们将介绍在Reacher环境中训练智能代理控制双关节机械臂,这是一种使用UnityML-Agents工具包开发的基于Unity的模拟程序。我们的目标是高精度的到达目标位置,所以这里我们可以使用专为连续状态和动作空间设计的最先进的DeepDeterministicPolicyGradient(DDPG)算法。现实世界的应用程序机械臂在制造业、生产设施、空间探索和搜救行动中发挥着关键作用。控制机械臂的高精度和灵活性是非常重要的。通过采用强化学习技术,可以使这些机器人系统实时学习和调整其行

如何运用强化学习来提升快手用户留存?May 07, 2023 pm 06:31 PM

如何运用强化学习来提升快手用户留存?May 07, 2023 pm 06:31 PM短视频推荐系统的核心目标是通过提升用户留存,牵引DAU增长。因此留存是各APP的核心业务优化指标之一。然而留存是用户和系统多次交互后的长期反馈,很难分解到单个item或者单个list,因此传统的point-wise和list-wise模型难以直接优化留存。强化学习(RL)方法通过和环境交互的方式优化长期奖励,适合直接优化用户留存。该工作将留存优化问题建模成一个无穷视野请求粒度的马尔科夫决策过程(MDP),用户每次请求推荐系统决策一个动作(action),用于聚合多个不同的短期反馈预估(观看时长、

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Dreamweaver Mac version

Visual web development tools

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.

Notepad++7.3.1

Easy-to-use and free code editor

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

SublimeText3 Mac version

God-level code editing software (SublimeText3)