
When submitting your paper to Nature, ask GPT-4 first! Stanford actually tested 5,000 articles, and half of the opinions were the same as those of human reviewers

Is GPT-4 capable of reviewing papers?

Researchers from Stanford and other universities actually put it to the test.

They fed thousands of papers from Nature, ICLR and other top venues to GPT-4, had it generate review comments (including suggestions for revision), and then compared them with the comments given by human reviewers.

After the investigation, they found that:

More than 50% of the comments raised by GPT-4 were consistent with at least one human reviewer;

And 82.4% of the authors found the feedback provided by GPT-4 more helpful than that of at least some human reviewers.

What can we take away from this research?

The conclusion is:

There is still no substitute for high-quality human feedback; but GPT-4 can help authors improve first drafts before formal peer review.

To run the test, the researchers built an automatic pipeline.

It can analyze the entire paper in PDF format, extract the title, abstract, figure and table captions and other content to build a prompt, and then have GPT-4 provide review comments. The feedback follows the review format of the top venues and has four parts: the significance and novelty of the research, potential reasons for acceptance, potential reasons for rejection, and suggestions for improvement.
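The prompt-building step described above can be sketched roughly as follows. This is a minimal illustration, not the authors' code: the `ParsedPaper` class, the `build_review_prompt` function, the prompt wording, and the `max_chars` truncation limit are all hypothetical, and the PDF parsing and the GPT-4 call itself are left out.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ParsedPaper:
    """Fields assumed to have been extracted from the PDF already (hypothetical)."""
    title: str
    abstract: str
    figure_captions: List[str] = field(default_factory=list)
    table_captions: List[str] = field(default_factory=list)
    main_text: str = ""


# The four feedback parts named in the article.
REVIEW_SECTIONS = [
    "Significance and novelty",
    "Potential reasons for acceptance",
    "Potential reasons for rejection",
    "Suggestions for improvement",
]


def build_review_prompt(paper: ParsedPaper, max_chars: int = 6000) -> str:
    """Assemble one review prompt; max_chars is an assumed truncation limit."""
    captions = paper.figure_captions + paper.table_captions
    caption_block = "\n".join(f"- {c}" for c in captions) or "(none extracted)"
    outline = "\n".join(
        f"{i}. {name}" for i, name in enumerate(REVIEW_SECTIONS, start=1)
    )
    return (
        "You are reviewing a scientific paper. "
        "Write feedback with these sections:\n"
        f"{outline}\n\n"
        f"Title: {paper.title}\n\n"
        f"Abstract: {paper.abstract}\n\n"
        f"Figure and table captions:\n{caption_block}\n\n"
        f"Main text (truncated):\n{paper.main_text[:max_chars]}"
    )
```

The returned string would then be sent to the model through whatever client is in use; truncating the main text is just one simple way to stay inside a context limit.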

The researchers evaluated the results from two aspects.

First comes the quantitative experiment:

GPT-4 reads existing papers and generates feedback, which is then systematically compared with real human reviews to identify where the two overlap.

Here, the team selected 3,096 papers from Nature and its major sub-journals, and 1,709 papers from the ICLR machine learning conference (covering last year and this year), for a total of 4,805 papers.

Of these, the Nature papers came with a total of 8,745 human review comments, and the ICLR papers with 6,506.

1. GPT-4 opinions significantly overlap with the real opinions of human reviewers

Overall, for the Nature papers, 57.55% of GPT-4's comments were consistent with at least one human reviewer; for ICLR, the figure was as high as 77.18%.
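To make the "consistent with at least one human reviewer" figure concrete, here is a minimal sketch of how such a rate could be computed. It assumes the matching between GPT-4 comments and human comments has already been done (the article does not describe how); the `overlap_rate` function and the reviewer IDs are hypothetical.

```python
from typing import List, Set


def overlap_rate(matched_reviewers: List[Set[str]]) -> float:
    """Fraction of GPT-4 comments matched by at least one human reviewer.

    matched_reviewers[i] holds the IDs of the human reviewers whose comments
    were judged to match GPT-4 comment i (an empty set means no match).
    """
    if not matched_reviewers:
        return 0.0
    hits = sum(1 for reviewers in matched_reviewers if reviewers)
    return hits / len(matched_reviewers)


# Four GPT-4 comments on one paper; two of them match a human reviewer.
example = [{"R1"}, set(), {"R1", "R2"}, set()]
print(f"{overlap_rate(example):.2%}")  # prints 50.00%
```

A comment matched by several reviewers still counts once, which is why the rate is read as "at least one human reviewer".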

Breaking the papers down by decision (oral, spotlight, or directly rejected), the team found that:

For weaker papers, the overlap between GPT-4 and human reviewers is higher, rising from just over 30% to close to 50%.

This suggests that GPT-4 has good discriminating ability and can identify papers of poorer quality.

The authors also said that papers requiring more substantial revisions before they can be accepted stand to benefit: their authors can try the revision suggestions given by GPT-4 before officially submitting.

2. GPT-4 can provide non-generic feedback

Non-generic feedback means that GPT-4 does not give boilerplate review comments that would apply to many papers. Here, the authors measured a "pairwise overlap rate" metric and found that it dropped sharply, to 0.43% on Nature and 3.91% on ICLR. This shows that GPT-4's feedback is specific to each paper.

3. GPT-4 can reach agreement with human opinions on major and common issues

Generally speaking, comments that appear earliest and are raised by multiple reviewers tend to represent important and common problems.

Here, the team also found that the LLM is more likely to identify common problems or flaws that multiple reviewers agree on.

The overall performance of GPT-4 is acceptable.

4. The opinions given by GPT-4 emphasize some aspects that are different from humans

The study found that GPT-4 was 7.27 times more likely than humans to comment on the meaning of the research itself, and 10.69 times more likely to comment on the novelty of the research.

Both GPT-4 and humans often recommend additional experiments, but humans focus more on ablation experiments, and GPT-4 recommends trying them on more data sets.

The authors stated that these findings indicate that GPT-4 and human reviewers place different emphasis on various aspects, and that cooperation between the two may bring potential advantages.

Beyond the quantitative experiment, there is a user study.

A total of 308 researchers in AI and computational biology from different institutions participated. They uploaded their papers to GPT-4 for review.

The research team collected their genuine opinions on the feedback from the GPT-4 reviewer.

Overall, more than half (57.4%) of participants found the feedback generated by GPT-4 helpful, including points that human reviewers had not thought of.

And 82.4% of those surveyed found it more beneficial than feedback from at least some human reviewers.

In addition, more than half of the people (50.5%) expressed their willingness to further use large models such as GPT-4 to improve the paper.

One of them said that it only takes 5 minutes for GPT-4 to give the results. This feedback is really fast and is very helpful for researchers to improve their papers.

Of course, the authors emphasize:

The capabilities of GPT-4 also have some limitations.

The most obvious one is that it focuses more on the "overall layout" and lacks in-depth advice on specific technical areas (e.g. model architecture).

Therefore, as the authors' final conclusion states:

High-quality feedback from human reviewers remains very important, but authors can test the waters with GPT-4 before formal review to catch experimental and construction details that may have been missed.

Of course, they also remind:

In the formal review, the reviewer should still participate independently and not rely on any LLM.

The first authors are all Chinese

This study has three first authors, all of whom are Chinese, and all of whom come from the School of Computer Science at Stanford University.

They are:

- Weixin Liang, a doctoral student at the school and a member of the Stanford AI Laboratory (SAIL). He holds a master's degree in electrical engineering from Stanford University and a bachelor's degree in computer science from Zhejiang University.

- Yuhui Zhang, also a doctoral student, who researches multi-modal AI systems. He holds a bachelor's degree from Tsinghua University and a master's degree from Stanford.

- Hancheng Cao, a fifth-year doctoral candidate at the school, majoring in management science and engineering. He has also joined the NLP and HCI groups at Stanford University, and previously graduated from the Department of Electronic Engineering of Tsinghua University with a bachelor's degree.

Paper link: https://arxiv.org/abs/2310.01783

The above is the detailed content of When submitting your paper to Nature, ask about GPT-4 first! Stanford actually tested 5,000 articles, and half of the opinions were the same as those of human reviewers. For more information, please follow other related articles on the PHP Chinese website!

ai合并图层的快捷键是什么Jan 07, 2021 am 10:59 AM

ai合并图层的快捷键是什么Jan 07, 2021 am 10:59 AMai合并图层的快捷键是“Ctrl+Shift+E”,它的作用是把目前所有处在显示状态的图层合并,在隐藏状态的图层则不作变动。也可以选中要合并的图层,在菜单栏中依次点击“窗口”-“路径查找器”,点击“合并”按钮。

ai橡皮擦擦不掉东西怎么办Jan 13, 2021 am 10:23 AM

ai橡皮擦擦不掉东西怎么办Jan 13, 2021 am 10:23 AMai橡皮擦擦不掉东西是因为AI是矢量图软件,用橡皮擦不能擦位图的,其解决办法就是用蒙板工具以及钢笔勾好路径再建立蒙板即可实现擦掉东西。

谷歌超强AI超算碾压英伟达A100!TPU v4性能提升10倍,细节首次公开Apr 07, 2023 pm 02:54 PM

谷歌超强AI超算碾压英伟达A100!TPU v4性能提升10倍,细节首次公开Apr 07, 2023 pm 02:54 PM虽然谷歌早在2020年,就在自家的数据中心上部署了当时最强的AI芯片——TPU v4。但直到今年的4月4日,谷歌才首次公布了这台AI超算的技术细节。论文地址:https://arxiv.org/abs/2304.01433相比于TPU v3,TPU v4的性能要高出2.1倍,而在整合4096个芯片之后,超算的性能更是提升了10倍。另外,谷歌还声称,自家芯片要比英伟达A100更快、更节能。与A100对打,速度快1.7倍论文中,谷歌表示,对于规模相当的系统,TPU v4可以提供比英伟达A100强1.

ai可以转成psd格式吗Feb 22, 2023 pm 05:56 PM

ai可以转成psd格式吗Feb 22, 2023 pm 05:56 PMai可以转成psd格式。转换方法:1、打开Adobe Illustrator软件,依次点击顶部菜单栏的“文件”-“打开”,选择所需的ai文件;2、点击右侧功能面板中的“图层”,点击三杠图标,在弹出的选项中选择“释放到图层(顺序)”;3、依次点击顶部菜单栏的“文件”-“导出”-“导出为”;4、在弹出的“导出”对话框中,将“保存类型”设置为“PSD格式”,点击“导出”即可;

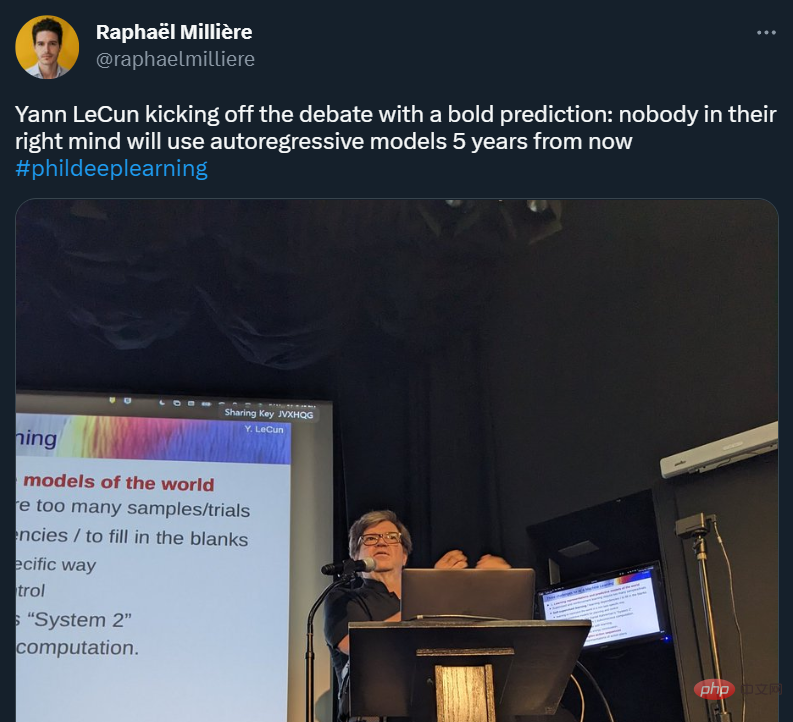

GPT-4的研究路径没有前途?Yann LeCun给自回归判了死刑Apr 04, 2023 am 11:55 AM

GPT-4的研究路径没有前途?Yann LeCun给自回归判了死刑Apr 04, 2023 am 11:55 AMYann LeCun 这个观点的确有些大胆。 「从现在起 5 年内,没有哪个头脑正常的人会使用自回归模型。」最近,图灵奖得主 Yann LeCun 给一场辩论做了个特别的开场。而他口中的自回归,正是当前爆红的 GPT 家族模型所依赖的学习范式。当然,被 Yann LeCun 指出问题的不只是自回归模型。在他看来,当前整个的机器学习领域都面临巨大挑战。这场辩论的主题为「Do large language models need sensory grounding for meaning and u

ai顶部属性栏不见了怎么办Feb 22, 2023 pm 05:27 PM

ai顶部属性栏不见了怎么办Feb 22, 2023 pm 05:27 PMai顶部属性栏不见了的解决办法:1、开启Ai新建画布,进入绘图页面;2、在Ai顶部菜单栏中点击“窗口”;3、在系统弹出的窗口菜单页面中点击“控制”,然后开启“控制”窗口即可显示出属性栏。

ai移动不了东西了怎么办Mar 07, 2023 am 10:03 AM

ai移动不了东西了怎么办Mar 07, 2023 am 10:03 AMai移动不了东西的解决办法:1、打开ai软件,打开空白文档;2、选择矩形工具,在文档中绘制矩形;3、点击选择工具,移动文档中的矩形;4、点击图层按钮,弹出图层面板对话框,解锁图层;5、点击选择工具,移动矩形即可。

强化学习再登Nature封面,自动驾驶安全验证新范式大幅减少测试里程Mar 31, 2023 pm 10:38 PM

强化学习再登Nature封面,自动驾驶安全验证新范式大幅减少测试里程Mar 31, 2023 pm 10:38 PM引入密集强化学习,用 AI 验证 AI。 自动驾驶汽车 (AV) 技术的快速发展,使得我们正处于交通革命的风口浪尖,其规模是自一个世纪前汽车问世以来从未见过的。自动驾驶技术具有显着提高交通安全性、机动性和可持续性的潜力,因此引起了工业界、政府机构、专业组织和学术机构的共同关注。过去 20 年里,自动驾驶汽车的发展取得了长足的进步,尤其是随着深度学习的出现更是如此。到 2015 年,开始有公司宣布他们将在 2020 之前量产 AV。不过到目前为止,并且没有 level 4 级别的 AV 可以在市场

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

Notepad++7.3.1

Easy-to-use and free code editor

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

Atom editor mac version download

The most popular open source editor

SublimeText3 Linux new version

SublimeText3 Linux latest version