Original title: Cross-Dataset Experimental Study of Radar-Camera Fusion in Bird's-Eye View

Paper link: https://arxiv.org/pdf/2309.15465.pdf

Author affiliation: Opel Automobile GmbH Rheinland -Pfalzische Technische Universitat Kaiserslautern-Landau German Research Center for Artificial Intelligence

Thesis idea:

By leveraging complementary sensor information, Millimeter wave radar and camera fusion systems have the potential to provide highly robust and reliable perception systems for advanced driver assistance systems and autonomous driving functions. Recent advances in camera-based object detection provide new possibilities for the fusion of millimeter-wave radar and cameras, which can exploit bird's-eye feature maps for fusion. This study proposes a novel and flexible fusion network and evaluates its performance on two datasets (nuScenes and View-of-Delft). Experimental results show that although the camera branch requires large and diverse training data, the millimeter-wave radar branch benefits more from high-performance millimeter-wave radar. Through transfer learning, this study improves camera performance on smaller datasets. The research results further show that the fusion method of millimeter wave radar and camera is significantly better than the baseline method using only camera or only millimeter wave radar

Network Design:

Recently ,A trend in 3D object detection is to convert the ,features of images into a common Bird’s Eye View (BEV) ,representation. This representation provides a flexible fusion architecture that can be fused between multiple cameras or using ranging sensors. In this work, we extend the BEVFusion method originally used for laser camera fusion for millimeter-wave radar camera fusion. We trained and evaluated our proposed fusion method using a selected millimeter wave radar dataset. In several experiments, we discuss the advantages and disadvantages of each dataset. Finally, we apply transfer learning to achieve further improvements

Here's what needs to be rewritten: Figure 1 shows the BEV millimeter wave radar-camera fusion flow chart based on BEVFusion. In the generated camera image, we include the detection results of the projected millimeter wave radar and the real bounding box

This article follows the fusion architecture of BEVFusion. Figure 1 shows the network overview of millimeter wave radar-camera fusion in BEV in this article. Note that fusion occurs when the camera and millimeter wave radar signatures are connected at the BEV. Below, this article provides further details for each block.

The content that needs to be rewritten is: A. Camera encoder and camera to BEV view transformation

The camera encoder and view transformation adopt the idea of [15], which is a flexible The framework can extract image BEV features of any camera external and internal parameters. First, features are extracted from each image using a tiny-Swin Transformer network. Next, this paper uses the Lift and Splat steps of [14] to convert the features of the image to the BEV plane. To this end, dense depth prediction is followed by a rule-based block where features are converted into pseudo point clouds, rasterized and accumulated into a BEV grid.

Radar Pillar Feature Encoder

The purpose of this block is to encode the millimeter wave radar point cloud into BEV features on the same grid as the image BEV features. To this end, this paper uses the pillar feature encoding technology of [16] to rasterize the point cloud into infinitely high voxels, the so-called pillar.

The content that needs to be rewritten is: C. BEV encoder

Similar to [5], the BEV features of millimeter wave radar and cameras are achieved through cascade fusion. The fused features are processed by a joint convolutional BEV encoder so that the network can consider spatial misalignment and exploit the synergy between different modalities

D. Detection Head

This article uses CenterPoint detection head to predict the heatmap of the object center for each class. Further regression heads predict the size, rotation and height of objects, as well as the velocity and class properties of nuScenes. The heat map is trained using Gaussian focus loss, and the rest of the detection heads are trained using L1 loss

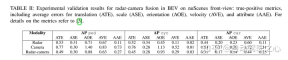

Experimental results:

##

##

Citation:

Stäcker, L., Heidenreich, P., Rambach, J., & Stricker, D. (2023). "Radar-camera fusion from a bird's-eye view" Cross-dataset experimental research". ArXiv. /abs/2309.15465

The content that needs to be rewritten is: Original link; https://mp.weixin.qq.com/ s/5mA5up5a4KJO2PBwUcuIdQ

The above is the detailed content of Experimental study on Radar-Camera fusion across data sets under BEV. For more information, please follow other related articles on the PHP Chinese website!

解读CRISP-ML(Q):机器学习生命周期流程Apr 08, 2023 pm 01:21 PM

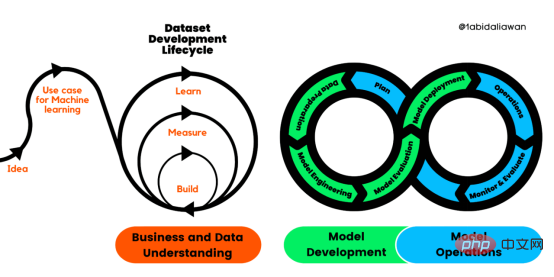

解读CRISP-ML(Q):机器学习生命周期流程Apr 08, 2023 pm 01:21 PM译者 | 布加迪审校 | 孙淑娟目前,没有用于构建和管理机器学习(ML)应用程序的标准实践。机器学习项目组织得不好,缺乏可重复性,而且从长远来看容易彻底失败。因此,我们需要一套流程来帮助自己在整个机器学习生命周期中保持质量、可持续性、稳健性和成本管理。图1. 机器学习开发生命周期流程使用质量保证方法开发机器学习应用程序的跨行业标准流程(CRISP-ML(Q))是CRISP-DM的升级版,以确保机器学习产品的质量。CRISP-ML(Q)有六个单独的阶段:1. 业务和数据理解2. 数据准备3. 模型

人工智能的环境成本和承诺Apr 08, 2023 pm 04:31 PM

人工智能的环境成本和承诺Apr 08, 2023 pm 04:31 PM人工智能(AI)在流行文化和政治分析中经常以两种极端的形式出现。它要么代表着人类智慧与科技实力相结合的未来主义乌托邦的关键,要么是迈向反乌托邦式机器崛起的第一步。学者、企业家、甚至活动家在应用人工智能应对气候变化时都采用了同样的二元思维。科技行业对人工智能在创建一个新的技术乌托邦中所扮演的角色的单一关注,掩盖了人工智能可能加剧环境退化的方式,通常是直接伤害边缘人群的方式。为了在应对气候变化的过程中充分利用人工智能技术,同时承认其大量消耗能源,引领人工智能潮流的科技公司需要探索人工智能对环境影响的

找不到中文语音预训练模型?中文版 Wav2vec 2.0和HuBERT来了Apr 08, 2023 pm 06:21 PM

找不到中文语音预训练模型?中文版 Wav2vec 2.0和HuBERT来了Apr 08, 2023 pm 06:21 PMWav2vec 2.0 [1],HuBERT [2] 和 WavLM [3] 等语音预训练模型,通过在多达上万小时的无标注语音数据(如 Libri-light )上的自监督学习,显著提升了自动语音识别(Automatic Speech Recognition, ASR),语音合成(Text-to-speech, TTS)和语音转换(Voice Conversation,VC)等语音下游任务的性能。然而这些模型都没有公开的中文版本,不便于应用在中文语音研究场景。 WenetSpeech [4] 是

条形统计图用什么呈现数据Jan 20, 2021 pm 03:31 PM

条形统计图用什么呈现数据Jan 20, 2021 pm 03:31 PM条形统计图用“直条”呈现数据。条形统计图是用一个单位长度表示一定的数量,根据数量的多少画成长短不同的直条,然后把这些直条按一定的顺序排列起来;从条形统计图中很容易看出各种数量的多少。条形统计图分为:单式条形统计图和复式条形统计图,前者只表示1个项目的数据,后者可以同时表示多个项目的数据。

自动驾驶车道线检测分类的虚拟-真实域适应方法Apr 08, 2023 pm 02:31 PM

自动驾驶车道线检测分类的虚拟-真实域适应方法Apr 08, 2023 pm 02:31 PMarXiv论文“Sim-to-Real Domain Adaptation for Lane Detection and Classification in Autonomous Driving“,2022年5月,加拿大滑铁卢大学的工作。虽然自主驾驶的监督检测和分类框架需要大型标注数据集,但光照真实模拟环境生成的合成数据推动的无监督域适应(UDA,Unsupervised Domain Adaptation)方法则是低成本、耗时更少的解决方案。本文提出对抗性鉴别和生成(adversarial d

数据通信中的信道传输速率单位是bps,它表示什么Jan 18, 2021 pm 02:58 PM

数据通信中的信道传输速率单位是bps,它表示什么Jan 18, 2021 pm 02:58 PM数据通信中的信道传输速率单位是bps,它表示“位/秒”或“比特/秒”,即数据传输速率在数值上等于每秒钟传输构成数据代码的二进制比特数,也称“比特率”。比特率表示单位时间内传送比特的数目,用于衡量数字信息的传送速度;根据每帧图像存储时所占的比特数和传输比特率,可以计算数字图像信息传输的速度。

数据分析方法有哪几种Dec 15, 2020 am 09:48 AM

数据分析方法有哪几种Dec 15, 2020 am 09:48 AM数据分析方法有4种,分别是:1、趋势分析,趋势分析一般用于核心指标的长期跟踪;2、象限分析,可依据数据的不同,将各个比较主体划分到四个象限中;3、对比分析,分为横向对比和纵向对比;4、交叉分析,主要作用就是从多个维度细分数据。

聊一聊Python 实现数据的序列化操作Apr 12, 2023 am 09:31 AM

聊一聊Python 实现数据的序列化操作Apr 12, 2023 am 09:31 AM在日常开发中,对数据进行序列化和反序列化是常见的数据操作,Python提供了两个模块方便开发者实现数据的序列化操作,即 json 模块和 pickle 模块。这两个模块主要区别如下:json 是一个文本序列化格式,而 pickle 是一个二进制序列化格式;json 是我们可以直观阅读的,而 pickle 不可以;json 是可互操作的,在 Python 系统之外广泛使用,而 pickle 则是 Python 专用的;默认情况下,json 只能表示 Python 内置类型的子集,不能表示自定义的

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Zend Studio 13.0.1

Powerful PHP integrated development environment

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

Notepad++7.3.1

Easy-to-use and free code editor

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft