How to use React and Apache Kafka to build real-time data processing applications

Introduction:

With the rise of big data and real-time data processing, building real-time data processing applications has become a goal for many developers. Combining React, a popular front-end library, with Apache Kafka, a high-performance distributed messaging system, is one way to build such applications. This article shows how to use React and Apache Kafka together and provides concrete code examples.

1. Introduction to React Framework

React is an open-source JavaScript library from Facebook (now Meta) for building user interfaces. It uses a component-based approach that splits the UI into independent, reusable components, which makes the code easier to maintain and test. React keeps a virtual DOM, an in-memory representation of the UI, and applies only the necessary changes to the real DOM, so updates and re-renders are efficient.

2. Introduction to Apache Kafka

Apache Kafka is a distributed, high-performance messaging system. It is designed to handle large volumes of streaming data with high throughput, fault tolerance, and scalability. Its core concept is the publish-subscribe model: producers publish messages to named topics, and consumers receive those messages by subscribing to the topics.
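
The publish-subscribe model can be illustrated with a toy, in-memory sketch. It is written for illustration only and is not the Kafka API: the `ToyBroker` class and its `publish` and `poll` methods are hypothetical. It mimics two Kafka ideas, a topic stored as an append-only log and a per-consumer-group read offset, so two groups can read the same messages independently.

```javascript
// Toy, in-memory illustration of Kafka-style publish-subscribe.
// NOT the Kafka API: a real broker persists, partitions, and replicates the log.
class ToyBroker {
  constructor() {
    this.topics = new Map();  // topic name -> array of messages (append-only log)
    this.offsets = new Map(); // "group|topic" -> next offset that group will read
  }

  // Producer side: append a message to the end of the topic's log.
  publish(topic, message) {
    if (!this.topics.has(topic)) this.topics.set(topic, []);
    const log = this.topics.get(topic);
    log.push(message);
    return log.length - 1; // offset of the new message
  }

  // Consumer side: return every message this group has not read yet,
  // then advance (commit) the group's offset past them.
  poll(group, topic) {
    const log = this.topics.get(topic) || [];
    const key = `${group}|${topic}`;
    const from = this.offsets.get(key) || 0;
    this.offsets.set(key, log.length);
    return log.slice(from);
  }
}

// Example: two consumer groups read the same topic independently.
const broker = new ToyBroker();
broker.publish("my-topic", "a");
broker.publish("my-topic", "b");
console.log(broker.poll("dashboard", "my-topic")); // [ 'a', 'b' ]
console.log(broker.poll("dashboard", "my-topic")); // []
console.log(broker.poll("audit", "my-topic"));     // [ 'a', 'b' ]
```

Because each group keeps its own offset, adding a new subscriber never removes messages from another subscriber's view, which is the property Kafka's topics provide at much larger scale.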

3. Steps to build real-time data processing applications using React and Kafka

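Install React and Kafka

First, set up the runtime environments for React and Kafka on your machine. React projects are created and managed with npm, which is bundled with Node.js, so Node.js needs to be installed. Kafka has to be downloaded and configured separately: older Kafka releases require a ZooKeeper instance alongside the Kafka server, while newer releases can run in KRaft mode without ZooKeeper (Kafka 4.0 removed ZooKeeper support entirely).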

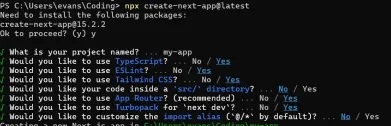

Create React Project

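Create a new React project with the scaffolding tool create-react-app by running the following commands:

```shell
npx create-react-app my-app
cd my-app
```

Note that the React team has since deprecated create-react-app for new projects. If you scaffold the project with another tool such as Vite instead, adjust the start command in the final step accordingly.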

Install Kafka Library

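Install a Kafka client library with npm so the application can communicate with the Kafka server. This tutorial uses kafka-node:

```shell
npm install kafka-node
```

kafka-node is a Node.js client that connects to the Kafka brokers over raw TCP sockets, so the code in the next steps runs in a Node.js process, not in the browser.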
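
Depending on the broker setting `auto.create.topics.enable`, the `my-topic` topic used in the following steps may have to be created before messages are sent to it. kafka-node's `KafkaClient` has a `createTopics(topics, callback)` method for this; the sketch below wraps it in a Promise. The helper name `ensureTopic` and the defaults of one partition and replication factor 1 are assumptions that suit a single local broker.

```javascript
// Hypothetical helper: make sure a topic exists before producing to it.
// `client` is expected to be a kafka-node KafkaClient, e.g.
//   new (require("kafka-node").KafkaClient)({ kafkaHost: "localhost:9092" })
// Defaults (1 partition, replication factor 1) assume a single local broker.
function ensureTopic(client, topic, partitions = 1, replicationFactor = 1) {
  return new Promise((resolve, reject) => {
    client.createTopics([{ topic, partitions, replicationFactor }], (error, result) => {
      if (error) return reject(error);
      // kafka-node reports per-topic failures (e.g. "already exists") in `result`.
      resolve(result);
    });
  });
}

module.exports = ensureTopic;
```

For example, `ensureTopic(new kafka.KafkaClient({ kafkaHost: 'localhost:9092' }), 'my-topic')` resolves with the result array that, according to the kafka-node README, lists any topics that could not be created.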

Create Kafka producer

Create a kafkaProducer.js file in the project that creates a Kafka producer and sends data to a specified topic. A simple example:

```javascript
const kafka = require('kafka-node');

const Producer = kafka.Producer;
// Connect to a local broker (localhost:9092 is also kafka-node's default)
const client = new kafka.KafkaClient({ kafkaHost: 'localhost:9092' });
const producer = new Producer(client);

producer.on('ready', () => {
  console.log('Kafka Producer is ready');
});

producer.on('error', (err) => {
  console.error('Kafka Producer Error:', err);
});

// Send a message to the given topic and report failures instead of ignoring them
const sendMessage = (topic, message) => {
  const payload = [{ topic: topic, messages: message }];
  producer.send(payload, (err, data) => {
    if (err) {
      console.error('Kafka Producer send failed:', err);
      return;
    }
    console.log('Kafka Producer sent:', data);
  });
};

module.exports = sendMessage;
```
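
The producer above only logs results: callers cannot wait for a send to finish or react to a failure, and nothing prevents a send from being attempted before the producer is ready. A minimal sketch of a Promise-based wrapper, assuming JSON-encoded message values (the `createJsonSender` name and the JSON encoding are assumptions, not part of kafka-node):

```javascript
// Hypothetical Promise-based wrapper around a kafka-node Producer.
// Assumes `producer` behaves like kafka-node's: an EventEmitter that emits
// 'ready' / 'error' and exposes send(payloads, callback).
const { once } = require("node:events");

function createJsonSender(producer) {
  // Call this right after constructing the producer so the 'ready' event is not missed.
  const ready = once(producer, "ready");
  ready.catch(() => {}); // avoid an unhandled rejection if 'error' fires before any send

  return async function sendJson(topic, value) {
    await ready; // rejects if the producer emitted 'error' before becoming ready
    const payloads = [{ topic, messages: JSON.stringify(value) }];
    return new Promise((resolve, reject) => {
      producer.send(payloads, (err, data) => (err ? reject(err) : resolve(data)));
    });
  };
}

module.exports = createJsonSender;
```

Inside kafkaProducer.js this could be used as `const sendJson = createJsonSender(producer);` directly after `new Producer(client)`, followed by `await sendJson('my-topic', { temperature: 21.5 })` wherever a send must complete before continuing.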

Create Kafka Consumer

Create a kafkaConsumer.js file in the project that creates a Kafka consumer and receives data from the specified topic. A simple example:

```javascript
const kafka = require('kafka-node');

const Consumer = kafka.Consumer;
const client = new kafka.KafkaClient({ kafkaHost: 'localhost:9092' });

// Subscribe to 'my-topic'; autoCommit periodically commits the offsets
// of consumed messages for this consumer group
const consumer = new Consumer(client, [{ topic: 'my-topic' }], { autoCommit: true });

consumer.on('message', (message) => {
  console.log('Kafka Consumer received:', message);
});

consumer.on('error', (err) => {
  console.error('Kafka Consumer Error:', err);
});

module.exports = consumer;
```
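
Since kafka-node cannot run in the browser, one common way to get consumer data to a React page is a small Node.js process that relays each Kafka message to connected browsers. The sketch below uses Server-Sent Events over Node's built-in `http` module. The function name `createSseBridge`, the `/events` path, and the permissive CORS header are assumptions for local development; `consumer` can be the object exported from kafkaConsumer.js.

```javascript
// Minimal Kafka-to-browser relay using Server-Sent Events (sketch).
// `consumer` is assumed to be an EventEmitter emitting kafka-node style
// 'message' events ({ topic, offset, value, ... }), such as kafkaConsumer.js exports.
const http = require("node:http");

function createSseBridge(consumer, path = "/events") {
  const clients = new Set(); // open SSE responses, one per connected browser tab

  // Relay every Kafka message to all connected browsers as one SSE event.
  consumer.on("message", (message) => {
    const data = JSON.stringify({
      topic: message.topic,
      offset: message.offset,
      value: String(message.value), // kafka-node delivers value as a string or Buffer
    });
    for (const res of clients) res.write(`data: ${data}\n\n`);
  });

  return http.createServer((req, res) => {
    if (req.url !== path) {
      res.writeHead(404).end();
      return;
    }
    res.writeHead(200, {
      "Content-Type": "text/event-stream",
      "Cache-Control": "no-cache",
      Connection: "keep-alive",
      // Assumption: the React dev server runs on another origin during development.
      "Access-Control-Allow-Origin": "*",
    });
    res.write(": connected\n\n"); // SSE comment line; sends the headers right away
    clients.add(res);
    res.on("close", () => clients.delete(res)); // forget browsers that disconnect
  });
}

module.exports = createSseBridge;
```

For example, `createSseBridge(require('./kafkaConsumer')).listen(4000)` would serve the stream at `http://localhost:4000/events` (port 4000 is an arbitrary choice). SSE is used here because the data flows one way, from Kafka to the page, and browsers reconnect `EventSource` streams automatically; WebSockets would be the alternative if the page also needs to send data back through the same connection.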

Using Kafka in React components

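Use the producer and consumer above in a React component. The producer can be called from a lifecycle method to send data to the Kafka server, and the consumer can push incoming data into the component's state so that it is rendered. A simple example:

```jsx
import React, { Component } from 'react';
import sendMessage from './kafkaProducer';
import consumer from './kafkaConsumer';

class KafkaExample extends Component {
  state = { messages: [] };

  // Store each received message so it is rendered
  handleMessage = (message) => {
    console.log('Received Kafka message:', message);
    this.setState(({ messages }) => ({ messages: [...messages, String(message.value)] }));
  };

  componentDidMount() {
    // Send data to Kafka
    sendMessage('my-topic', 'Hello Kafka!');
    // Receive data from Kafka
    consumer.on('message', this.handleMessage);
  }

  componentWillUnmount() {
    // Stop listening once the component is removed
    consumer.removeListener('message', this.handleMessage);
  }

  render() {
    return (
      <div>
        <h1>Kafka Example</h1>
        <ul>
          {this.state.messages.map((text, i) => (
            <li key={i}>{text}</li>
          ))}
        </ul>
      </div>
    );
  }
}

export default KafkaExample;
```

React calls componentDidMount once the component has been inserted into the DOM. Here the component sends a message and registers a listener for incoming messages, and componentWillUnmount removes that listener. Keep in mind that the browser cannot open the raw TCP connections kafka-node needs, so in a browser build these two imports must be replaced by a connection to a server process that runs the producer and consumer and relays messages, for example over WebSockets or Server-Sent Events.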
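
In a browser build, the page cannot import kafka-node; instead it can subscribe to a server endpoint that relays Kafka messages. A minimal sketch, assuming a hypothetical Server-Sent Events endpoint at `http://localhost:4000/events` that sends one JSON object per event. `subscribeToKafkaStream` and `appendBounded` are illustrative names; `EventSource` is the standard browser API and can be replaced by a stand-in for testing.

```javascript
// Hypothetical browser-side helper: subscribe to an SSE endpoint that relays
// Kafka messages. `EventSourceImpl` defaults to the browser's EventSource.
function subscribeToKafkaStream(url, onEvent, EventSourceImpl = globalThis.EventSource) {
  const source = new EventSourceImpl(url);
  source.onmessage = (event) => {
    let payload;
    try {
      payload = JSON.parse(event.data);
    } catch {
      console.error("Ignoring non-JSON event:", event.data);
      return;
    }
    onEvent(payload);
  };
  // Return an unsubscribe function for componentWillUnmount or effect cleanup.
  return () => source.close();
}

// Keep only the newest `limit` messages so component state stays bounded.
function appendBounded(list, item, limit = 50) {
  const next = [...list, item];
  return next.length > limit ? next.slice(next.length - limit) : next;
}

// Usage inside a class component whose state starts as { messages: [] } (sketch):
//   componentDidMount() {
//     this.unsubscribe = subscribeToKafkaStream("http://localhost:4000/events", (msg) =>
//       this.setState(({ messages }) => ({ messages: appendBounded(messages, msg) })));
//   }
//   componentWillUnmount() { this.unsubscribe(); }
```

Capping the list is a design choice for long-running real-time views: without it, a busy topic would make the component's state grow for as long as the page stays open.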

Run the React application

Finally, start the React application locally by running the following command:

```shell
npm start
```

4. Summary

This article introduced how to use React and Apache Kafka to build real-time data processing applications. We first briefly described the characteristics of React and Kafka, then walked through creating a React project and creating a producer and a consumer with a Kafka client library, and finally showed how to use them from a React component to send and receive data in real time. These examples give readers a starting point for combining React and Kafka in more capable real-time data processing applications.
