AI technology grows exponentially: computing power increased 680 million times in 70 years, across 3 historical stages

Electronic computers were born in the 1940s, and within 10 years of their emergence, the first AI application in human history appeared.

AI models have been in development for more than 70 years. Today they can not only write poetry but also generate images from text prompts, and even help humans discover previously unknown protein structures.

In such a short period of time, AI technology has achieved exponential growth. What is the reason for this?

A long infographic from Our World in Data traces the history of AI development, using the computing power required to train each AI model as its scale.

High-resolution image: https://www.visualcapitalist.com/wp-content/uploads/2023/09/01.-CP_AI-Computation-History_Full-Sized.html

The data comes from a paper published by researchers from MIT and other universities.

Paper link: https://arxiv.org/pdf/2202.05924.pdf

In addition to the paper, a research team also produced an interactive chart based on the paper's data. Users can zoom in and out of the chart freely to view more detailed data.

Chart address: https://epochai.org/blog/compute-trends#compute-trends-are-slower-than-previously-reported

The chart's authors estimate the training compute of each model mainly in two ways: by counting the number of operations the model performs, or from the GPU time used to train it. To decide which models to include as representative milestone systems, they mainly apply three criteria:

Significance: the system had a major historical impact, significantly improved the state of the art (SOTA), or has been cited more than 1,000 times.

Relevance: the authors include only papers that report experimental results, contain a key machine learning component, and aim to advance the existing SOTA.

Uniqueness: if a more influential paper describes the same system, the less influential paper is excluded from the authors' dataset.
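The two ways of estimating training compute mentioned above, counting operations and using GPU time, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the chart authors' code: the operation-counting function uses the common rule of thumb of about 6 FLOPs per parameter per training token for dense models, and the GPU-time function assumes a hypothetical 30% hardware utilization. The function names and sample inputs are hypothetical.

```python
def compute_from_operations(n_params: float, n_tokens: float) -> float:
    """Estimate training FLOPs by counting operations (hypothetical helper).

    Assumption: a dense model needs roughly 2 FLOPs per parameter per token
    in the forward pass and about 4 in the backward pass, i.e. ~6 in total.
    """
    return 6.0 * n_params * n_tokens


def compute_from_gpu_time(
    n_gpus: int,
    hours: float,
    peak_flops_per_gpu: float,
    utilization: float = 0.3,  # assumed fraction of peak throughput actually achieved
) -> float:
    """Estimate training FLOPs as GPUs x seconds x peak FLOP/s x utilization (hypothetical helper)."""
    seconds = hours * 3600.0
    return n_gpus * seconds * peak_flops_per_gpu * utilization


if __name__ == "__main__":
    # Hypothetical model: 1 billion parameters trained on 20 billion tokens.
    print(f"{compute_from_operations(1e9, 2e10):.2e} FLOPs")
    # Hypothetical run: 8 GPUs for 24 hours at a peak of 1e14 FLOP/s each.
    print(f"{compute_from_gpu_time(8, 24, 1e14):.2e} FLOPs")
```

When both kinds of information are available for the same real model, the two estimates can serve as a rough cross-check on each other.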

Three eras of AI development

In the 1950s, American mathematician Claude Shannon trained a robotic mouse named Theseus to navigate a maze and remember its path. This is the first instance of artificial learning.

Theseus was built on 40 floating-point operations (FLOPs). A FLOP count measures the total amount of computation performed, and the related rate, FLOP/s, is commonly used to measure the performance of computer hardware. The higher the FLOP count, the more computing power a system uses and the more powerful it is.
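To make the idea of a FLOP count concrete, the sketch below (an illustration of our own, not from the source) multiplies two matrices naively and counts one multiplication and one addition per inner-loop step. This gives the familiar total of 2·m·k·n FLOPs for an m×k by k×n product; a model's training compute is, roughly speaking, this kind of tally summed over every operation performed during training. The function name is hypothetical.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def matmul_with_flop_count(a: Matrix, b: Matrix) -> Tuple[Matrix, int]:
    """Multiply a (m x k) by b (k x n) and count the floating-point operations used."""
    m, k = len(a), len(a[0])
    if len(b) != k:
        raise ValueError("inner dimensions must match")
    n = len(b[0])
    out = [[0.0] * n for _ in range(m)]
    flops = 0
    for i in range(m):
        for j in range(n):
            acc = 0.0
            for p in range(k):
                acc += a[i][p] * b[p][j]
                flops += 2  # one multiplication plus one addition
            out[i][j] = acc
    return out, flops


if __name__ == "__main__":
    product, flops = matmul_with_flop_count([[1, 2, 3], [4, 5, 6]], [[7, 8], [9, 10], [11, 12]])
    print(product)  # [[58.0, 64.0], [139.0, 154.0]]
    print(flops)    # 24, i.e. 2 * 2 * 3 * 2
```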

The advancement of AI relies on three key elements: computing power, available training data, and algorithms. In the early decades of AI development, the demand for computing power grew in line with Moore's Law, with computing power doubling approximately every 20 months.

However, the emergence of AlexNet (an image recognition AI) in 2012 marked the beginning of the deep learning era, and this doubling time shortened dramatically to about six months as researchers invested more in computing and processors.
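The difference between the two doubling times compounds quickly. A minimal sketch, assuming smooth exponential growth (a simplification of the real, noisy trend), compares the growth factor implied over one decade by a 20-month doubling time and by a 6-month doubling time. The function name is hypothetical.

```python
def growth_factor(months: float, doubling_months: float) -> float:
    """Growth factor after `months` if the quantity doubles every `doubling_months`."""
    return 2.0 ** (months / doubling_months)


if __name__ == "__main__":
    decade = 120  # months
    print(growth_factor(decade, 20))  # 64.0 (Moore's-law-like pace)
    print(growth_factor(decade, 6))   # 1048576.0 (deep-learning-era pace)
```

Over the same ten years, the faster pace yields roughly a million-fold increase instead of a 64-fold one.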

With the emergence in 2015 of AlphaGo, a computer program that defeated professional human Go players, researchers identified a third era: the era of large-scale AI models, whose computational requirements exceed those of all previous AI systems.

The future progress of AI technology

Looking back over the past ten years, the growth in computing power has been simply incredible.

For example, the computing power used to train Minerva, an AI that can solve complex mathematical problems, was almost 6 million times that used to train AlexNet a decade ago.
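The Minerva figure can be turned back into an implied doubling time. Assuming the gap between AlexNet and Minerva is exactly ten years (the text's "a decade") and that growth was smoothly exponential, both simplifications, a roughly 6-million-fold increase corresponds to a doubling time of about 5.3 months, in line with the six-month figure for the deep learning era. The function name is hypothetical.

```python
import math


def implied_doubling_months(growth_ratio: float, months: float) -> float:
    """Doubling time (in months) implied by growing `growth_ratio`-fold over `months`."""
    if growth_ratio <= 1:
        raise ValueError("growth_ratio must be greater than 1")
    return months / math.log2(growth_ratio)


if __name__ == "__main__":
    # ~6 million-fold growth over an assumed 120 months (one decade).
    print(f"{implied_doubling_months(6e6, 120):.1f} months")  # 5.3 months
```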

This growth in computing, combined with the vast datasets now available and better algorithms, has allowed AI to make enormous progress in an extremely short period of time. Today, AI can not only match human performance but even surpass humans in many fields.

AI capabilities will continue to surpass humans in all aspects

As the figure above clearly shows, artificial intelligence has already surpassed human performance in many areas and will soon do so in others.

The following figure shows the year in which AI reached or exceeded human-level performance in common abilities that people use in daily work and life.

AI technology still has ample room to develop

It is difficult to determine whether growth can continue at the same rate. Training large-scale models requires ever more computing power, and if the supply of computing power cannot keep growing, the development of AI technology may slow down.

Similarly, exhausting all the data currently available for training AI models could also hinder the development and deployment of new models.

In 2023, the AI industry saw an influx of capital, especially into generative AI represented by large language models. This may indicate that more breakthroughs are on the way, and it seems that the three elements driving AI development mentioned above will be further optimized and developed in the future.

In the first half of 2023, AI startups raised $14 billion in funding, even more than the total they received over the previous four years.

A large share (78%) of generative AI startups are still in the very early stages of development, and 27% have not yet raised any capital.

Of more than 360 generative AI companies, 27% have not yet raised funds, and more than half are at the first funding round or earlier, indicating that the generative AI industry as a whole is still at a very early stage.

Because developing large language models is capital-intensive, the generative AI infrastructure category has captured over 70% of funding since Q3 2022 while accounting for only 10% of all generative AI deals. Much of this funding reflects investor interest in emerging infrastructure such as foundation models and APIs, MLOps (machine learning operations), and vector database technology.
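The gap between the infrastructure category's share of funding (over 70%) and its share of generative AI deals (10%) implies much larger average deal sizes. A minimal sketch, taking the two shares as exactly 70% and 10% (an assumption, since the text says "over 70%"): an average infrastructure deal would then be roughly 21 times the size of an average deal in the other categories combined. The function name is hypothetical.

```python
def relative_avg_deal_size(funding_share: float, deal_share: float) -> float:
    """Average deal size of one category relative to all other categories combined.

    funding_share: fraction of total funding captured by the category.
    deal_share: fraction of the total number of deals belonging to the category.
    """
    if not (0.0 <= funding_share < 1.0 and 0.0 < deal_share < 1.0):
        raise ValueError("shares must be fractions strictly below 1")
    category_avg = funding_share / deal_share
    others_avg = (1.0 - funding_share) / (1.0 - deal_share)
    return category_avg / others_avg


if __name__ == "__main__":
    print(round(relative_avg_deal_size(0.70, 0.10), 1))  # 21.0
```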

The above is the detailed content of AI technology explodes exponentially: computing power increased 680 million times in 70 years, witnessed in 3 historical stages. For more information, please follow other related articles on the PHP Chinese website!

A Business Leader's Guide To Generative Engine Optimization (GEO)May 03, 2025 am 11:14 AM

A Business Leader's Guide To Generative Engine Optimization (GEO)May 03, 2025 am 11:14 AMGoogle is leading this shift. Its "AI Overviews" feature already serves more than one billion users, providing complete answers before anyone clicks a link.[^2] Other players are also gaining ground fast. ChatGPT, Microsoft Copilot, and Pe

This Startup Is Using AI Agents To Fight Malicious Ads And Impersonator AccountsMay 03, 2025 am 11:13 AM

This Startup Is Using AI Agents To Fight Malicious Ads And Impersonator AccountsMay 03, 2025 am 11:13 AMIn 2022, he founded social engineering defense startup Doppel to do just that. And as cybercriminals harness ever more advanced AI models to turbocharge their attacks, Doppel’s AI systems have helped businesses combat them at scale— more quickly and

How World Models Are Radically Reshaping The Future Of Generative AI And LLMsMay 03, 2025 am 11:12 AM

How World Models Are Radically Reshaping The Future Of Generative AI And LLMsMay 03, 2025 am 11:12 AMVoila, via interacting with suitable world models, generative AI and LLMs can be substantively boosted. Let’s talk about it. This analysis of an innovative AI breakthrough is part of my ongoing Forbes column coverage on the latest in AI, including

May Day 2050: What Have We Left To Celebrate?May 03, 2025 am 11:11 AM

May Day 2050: What Have We Left To Celebrate?May 03, 2025 am 11:11 AMLabor Day 2050. Parks across the nation fill with families enjoying traditional barbecues while nostalgic parades wind through city streets. Yet the celebration now carries a museum-like quality — historical reenactment rather than commemoration of c

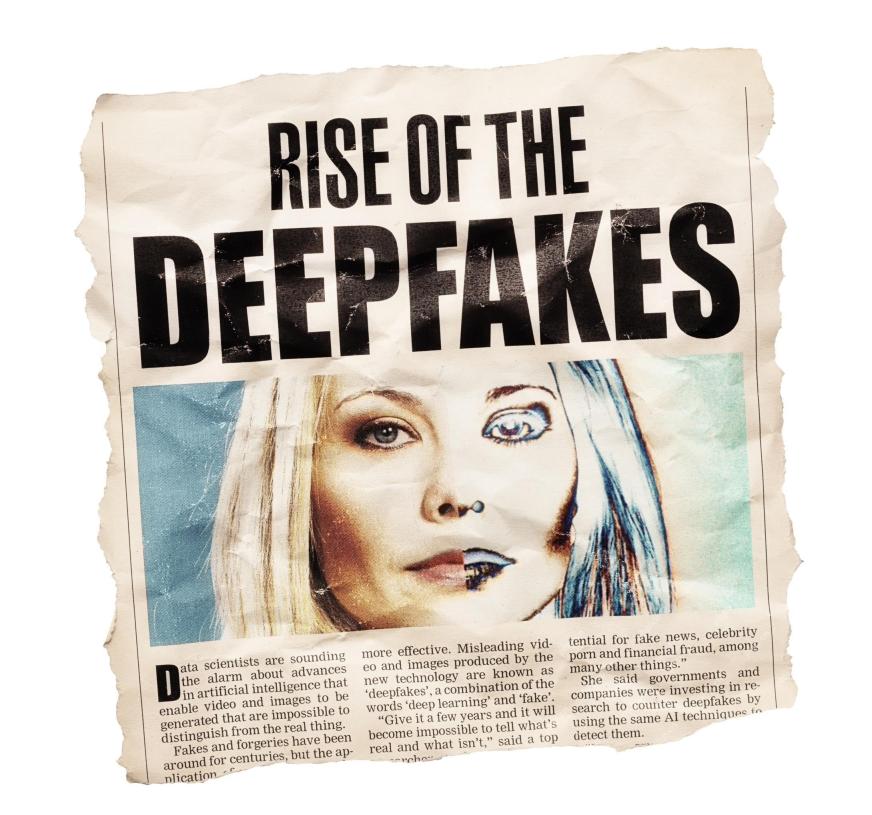

The Deepfake Detector You've Never Heard Of That's 98% AccurateMay 03, 2025 am 11:10 AM

The Deepfake Detector You've Never Heard Of That's 98% AccurateMay 03, 2025 am 11:10 AMTo help address this urgent and unsettling trend, a peer-reviewed article in the February 2025 edition of TEM Journal provides one of the clearest, data-driven assessments as to where that technological deepfake face off currently stands. Researcher

Quantum Talent Wars: The Hidden Crisis Threatening Tech's Next FrontierMay 03, 2025 am 11:09 AM

Quantum Talent Wars: The Hidden Crisis Threatening Tech's Next FrontierMay 03, 2025 am 11:09 AMFrom vastly decreasing the time it takes to formulate new drugs to creating greener energy, there will be huge opportunities for businesses to break new ground. There’s a big problem, though: there’s a severe shortage of people with the skills busi

The Prototype: These Bacteria Can Generate ElectricityMay 03, 2025 am 11:08 AM

The Prototype: These Bacteria Can Generate ElectricityMay 03, 2025 am 11:08 AMYears ago, scientists found that certain kinds of bacteria appear to breathe by generating electricity, rather than taking in oxygen, but how they did so was a mystery. A new study published in the journal Cell identifies how this happens: the microb

AI And Cybersecurity: The New Administration's 100-Day ReckoningMay 03, 2025 am 11:07 AM

AI And Cybersecurity: The New Administration's 100-Day ReckoningMay 03, 2025 am 11:07 AMAt the RSAC 2025 conference this week, Snyk hosted a timely panel titled “The First 100 Days: How AI, Policy & Cybersecurity Collide,” featuring an all-star lineup: Jen Easterly, former CISA Director; Nicole Perlroth, former journalist and partne

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

WebStorm Mac version

Useful JavaScript development tools

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment

Zend Studio 13.0.1

Powerful PHP integrated development environment

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function