Technology peripherals

Technology peripherals AI

AI The fatal flaw of large models: the correct answer rate is almost zero, neither GPT nor Llama is immune

The fatal flaw of large models: the correct answer rate is almost zero, neither GPT nor Llama is immuneI asked GPT-3 and Llama to learn a simple knowledge: A is B, and then asked in turn what B is. It turned out that the accuracy of the AI's answer was zero.

What does this mean?

Recently, a new concept called "Reversal Curse" has caused heated discussions in the artificial intelligence community, and all currently popular large-scale language models have been affected. Faced with extremely simple problems, their accuracy is not only close to zero, but there seems to be no possibility of improving the accuracy

In addition, the researchers also found that this major vulnerability is not related to the model It has nothing to do with the scale and the questions raised

We said that artificial intelligence has developed to the stage of pre-training large models, and it finally seems to have mastered a little logical thinking. However, this time it seems that it has been Back to the original shape

Figure 1: Knowledge inconsistency in GPT-4. GPT-4 correctly gave Tom Cruise's mother's name (left). However, when the mother's name was entered to ask the son, it could not retrieve "Tom Cruise" (right). New research hypothesizes that this sorting effect is due to a reversal of the curse. A model trained on "A is B" does not automatically infer "B is A".

Research shows that the autoregressive language model, which is currently hotly discussed in the field of artificial intelligence, cannot be generalized in this way. In particular, assume that the model's training set contains sentences like "Olaf Scholz was the ninth Chancellor of German," where the name "Olaf Scholz" precedes the description of "the ninth Chancellor of German." The large model might then learn to correctly answer "Who is Olaf Scholz?" but it would be unable to answer and describe any other prompt that precedes the name.

This is what we call This is an example of the "Reverse Curse" sorting effect. If Model 1 is trained with sentences of the form "

So, the reasoning of large models does not actually exist? One view is that the reversal curse demonstrates a fundamental failure of logical deduction during LLM training. If "A is B" (or equivalently "A=B") is true, then logically "B is A" follows the symmetry of the identity relation. Traditional knowledge graphs respect this symmetry (Speer et al., 2017). Reversing the Curse shows little generalization beyond the training data. Moreover, this is not something that LLM can explain without understanding logical deductions. If an LLM such as GPT-4 is given "A is B" in its context window, then it can very well infer "B is A".

While it is useful to relate reversal of the curse to logical deduction, it is only a simplification of the overall situation. At present, we cannot directly test whether a large model can deduce "B is A" after being trained on "A is B". Large models are trained to predict the next word a human would write, rather than what it actually “should be.” Therefore, even if LLM infers "B is A", it may not "tell us" when prompted.

However, reversing the curse indicates a failure of meta-learning. Sentences of the form "

Reversing the curse has attracted the attention of many artificial intelligence researchers. Some people say that it seems like artificial intelligence destroying humanity is just a fantasy

In some people’s eyes, this means that your training data and contextual content Plays a vital role in the generalization process of knowledge

Famous scientist Andrej Karpathy said that the knowledge learned by LLM seems to be more fragmented than we imagined. I don't have a good intuition about this. They learn things within a specific contextual window that may not generalize when we ask in other directions. This is an odd partial generalization, and I think "reversing the curse" is a special case

The controversial research comes from Vanderbilt University, New York University , Oxford University and other institutions. Paper "The Reversal Curse: LLMs trained on “A is B" fail to learn “B is A” 》:

- Paper link: https://arxiv.org/abs/2309.12288

- ##GitHub link: https://github .com/lukasberglund/reversal_curse

If the name and description are reversed, the large model will be confused

This article is passed by A series of fine-tuning experiments on synthetic data demonstrate that LLM suffers from the reversal curse. As shown in Figure 2, the researcher first fine-tuned the model based on the sentence pattern is

In fact, as shown in Figure 4 (experimental part), the model gives the correct name and randomly gives a name. The probabilities are almost the same. Furthermore, when the test order changes from is

How to avoid reversing the curse, researchers have tried the following methods:

- Try different series and different sizes of models;

- The fine-tuning data set contains both the is

sentence pattern and the is sentence pattern; - pairs Each is is subject to multiple interpretations, which aids generalization;

- changes the data from is to

? .

After a series of experiments, they provide preliminary evidence that reversing the curse affects generalization ability in state-of-the-art models (Figure 1 and Part B). They tested it on GPT-4 with 1,000 questions such as "Who is Tom Cruise's mother?" and "Who is Mary Lee Pfeiffer's son?" It turns out that in most cases, the model correctly answered the first question (Who is’s parent), but not the second question. This article hypothesizes that this is because the pre-training data contains fewer examples of parents ranked before celebrities (for example, Mary Lee Pfeiffer's son is Tom Cruise).

Experiments and results

The purpose of the test is to verify that the autoregressive language model (LLM) that learned "A is B" during training Can it be generalized to the opposite form "B is A"

In the first experiment, this article created a document of the form is (or the opposite) Composed of data sets whose names and descriptions are fictitious. Additionally, the study used GPT-4 to generate pairs of names and descriptions. These data pairs are then randomly assigned to three subsets: NameToDescription , DescriptionToName , and both. The first two subsets are shown in Figure 3.

result. In the exact matching evaluation, when the order of the test questions matches the training data, GPT-3-175B achieves better exact matching accuracy, and the results are shown in Table 1.

Specifically, for DescriptionToName (e.g., the composer of Abyssal Melodies is Uriah Hawthorne), when given a hint that contains a description (e.g., who is the composer of Abyssal Melodies), how accurate is the model in retrieving the name? The rate reaches 96.7%. For the facts in NameToDescription, the accuracy is lower at 50.0%. In contrast, when the order does not match the training data, the model fails to generalize at all and the accuracy approaches 0%.

Multiple experiments were also conducted in this article, including GPT-3-350M (see Appendix A.2) and Llama-7B (see Appendix A.4), experimental results show that these models are affected by the reversal curse

Logarithmic probability assigned to the correct name versus a random name in the increased likelihood evaluation There is no detectable difference between them. The average log probability of the GPT-3 model is shown in Figure 4. Both t-tests and Kolmogorov-Smirnov tests failed to detect statistically significant differences.

Figure 4: Experiment 1, when the order is reversed, the model fails to increase the probability of the correct name. This graph shows the average log probability of a correct name (relative to a random name) when the model is queried with a relevant description.

Next, the study conducted a second experiment.

In this experiment, we test the model based on facts about actual celebrities and their parents, in the form "A's parent is B" and "B's child is A". The study collected a list of the top 1000 most popular celebrities from IMDB (2023) and used GPT-4 (OpenAI API) to find the parents of celebrities by their names. GPT-4 was able to identify the parents of celebrities 79% of the time.

After that, for each child-parent pair, the study queries the child by parent. Here, GPT-4’s success rate is only 33%. Figure 1 illustrates this phenomenon. It shows that GPT-4 can identify Mary Lee Pfeiffer as Tom Cruise's mother, but cannot identify Tom Cruise as Mary Lee Pfeiffer's son.

Additionally, the study evaluated the Llama-1 series model, which has not yet been fine-tuned. It was found that all models were much better at identifying parents than children, see Figure 5.

Figure 5: Order reversal effects for parent versus child questions in Experiment 2. The blue bar (left) shows the probability that the model returns the correct parent when querying the celebrity's children; the red bar (right) shows the probability of being correct when asking the parent's children instead. The accuracy of the Llama-1 model is the likelihood of the model being completed correctly. The accuracy of GPT-3.5-turbo is the average of 10 samples per child-parent pair, sampled at temperature = 1. Note: GPT-4 is omitted from the figure because it is used to generate a list of child-parent pairs and therefore has 100% accuracy for the "parent" pair by construction. GPT-4 scores 28% on "sub".

Future Outlook

How to explain the reverse curse in LLM? This may need to await further research in the future. For now, researchers can only offer a brief sketch of an explanation. When the model is updated on "A is B", this gradient update may slightly change the representation of A to include information about B (e.g., in an intermediate MLP layer). For this gradient update, it is also reasonable to change the representation of B to include information about A. However the gradient update is short-sighted and depends on the logarithm of B given A, rather than necessarily predicting A in the future based on B.

After "Reversing the Curse," the researchers plan to explore whether the large model can reverse other types of relationships, such as logical meaning, spatial relationships, and n-place relationships.

The above is the detailed content of The fatal flaw of large models: the correct answer rate is almost zero, neither GPT nor Llama is immune. For more information, please follow other related articles on the PHP Chinese website!

从VAE到扩散模型:一文解读以文生图新范式Apr 08, 2023 pm 08:41 PM

从VAE到扩散模型:一文解读以文生图新范式Apr 08, 2023 pm 08:41 PM1 前言在发布DALL·E的15个月后,OpenAI在今年春天带了续作DALL·E 2,以其更加惊艳的效果和丰富的可玩性迅速占领了各大AI社区的头条。近年来,随着生成对抗网络(GAN)、变分自编码器(VAE)、扩散模型(Diffusion models)的出现,深度学习已向世人展现其强大的图像生成能力;加上GPT-3、BERT等NLP模型的成功,人类正逐步打破文本和图像的信息界限。在DALL·E 2中,只需输入简单的文本(prompt),它就可以生成多张1024*1024的高清图像。这些图像甚至

找不到中文语音预训练模型?中文版 Wav2vec 2.0和HuBERT来了Apr 08, 2023 pm 06:21 PM

找不到中文语音预训练模型?中文版 Wav2vec 2.0和HuBERT来了Apr 08, 2023 pm 06:21 PMWav2vec 2.0 [1],HuBERT [2] 和 WavLM [3] 等语音预训练模型,通过在多达上万小时的无标注语音数据(如 Libri-light )上的自监督学习,显著提升了自动语音识别(Automatic Speech Recognition, ASR),语音合成(Text-to-speech, TTS)和语音转换(Voice Conversation,VC)等语音下游任务的性能。然而这些模型都没有公开的中文版本,不便于应用在中文语音研究场景。 WenetSpeech [4] 是

普林斯顿陈丹琦:如何让「大模型」变小Apr 08, 2023 pm 04:01 PM

普林斯顿陈丹琦:如何让「大模型」变小Apr 08, 2023 pm 04:01 PM“Making large models smaller”这是很多语言模型研究人员的学术追求,针对大模型昂贵的环境和训练成本,陈丹琦在智源大会青源学术年会上做了题为“Making large models smaller”的特邀报告。报告中重点提及了基于记忆增强的TRIME算法和基于粗细粒度联合剪枝和逐层蒸馏的CofiPruning算法。前者能够在不改变模型结构的基础上兼顾语言模型困惑度和检索速度方面的优势;而后者可以在保证下游任务准确度的同时实现更快的处理速度,具有更小的模型结构。陈丹琦 普

解锁CNN和Transformer正确结合方法,字节跳动提出有效的下一代视觉TransformerApr 09, 2023 pm 02:01 PM

解锁CNN和Transformer正确结合方法,字节跳动提出有效的下一代视觉TransformerApr 09, 2023 pm 02:01 PM由于复杂的注意力机制和模型设计,大多数现有的视觉 Transformer(ViT)在现实的工业部署场景中不能像卷积神经网络(CNN)那样高效地执行。这就带来了一个问题:视觉神经网络能否像 CNN 一样快速推断并像 ViT 一样强大?近期一些工作试图设计 CNN-Transformer 混合架构来解决这个问题,但这些工作的整体性能远不能令人满意。基于此,来自字节跳动的研究者提出了一种能在现实工业场景中有效部署的下一代视觉 Transformer——Next-ViT。从延迟 / 准确性权衡的角度看,

Stable Diffusion XL 现已推出—有什么新功能,你知道吗?Apr 07, 2023 pm 11:21 PM

Stable Diffusion XL 现已推出—有什么新功能,你知道吗?Apr 07, 2023 pm 11:21 PM3月27号,Stability AI的创始人兼首席执行官Emad Mostaque在一条推文中宣布,Stable Diffusion XL 现已可用于公开测试。以下是一些事项:“XL”不是这个新的AI模型的官方名称。一旦发布稳定性AI公司的官方公告,名称将会更改。与先前版本相比,图像质量有所提高与先前版本相比,图像生成速度大大加快。示例图像让我们看看新旧AI模型在结果上的差异。Prompt: Luxury sports car with aerodynamic curves, shot in a

五年后AI所需算力超100万倍!十二家机构联合发表88页长文:「智能计算」是解药Apr 09, 2023 pm 07:01 PM

五年后AI所需算力超100万倍!十二家机构联合发表88页长文:「智能计算」是解药Apr 09, 2023 pm 07:01 PM人工智能就是一个「拼财力」的行业,如果没有高性能计算设备,别说开发基础模型,就连微调模型都做不到。但如果只靠拼硬件,单靠当前计算性能的发展速度,迟早有一天无法满足日益膨胀的需求,所以还需要配套的软件来协调统筹计算能力,这时候就需要用到「智能计算」技术。最近,来自之江实验室、中国工程院、国防科技大学、浙江大学等多达十二个国内外研究机构共同发表了一篇论文,首次对智能计算领域进行了全面的调研,涵盖了理论基础、智能与计算的技术融合、重要应用、挑战和未来前景。论文链接:https://spj.scien

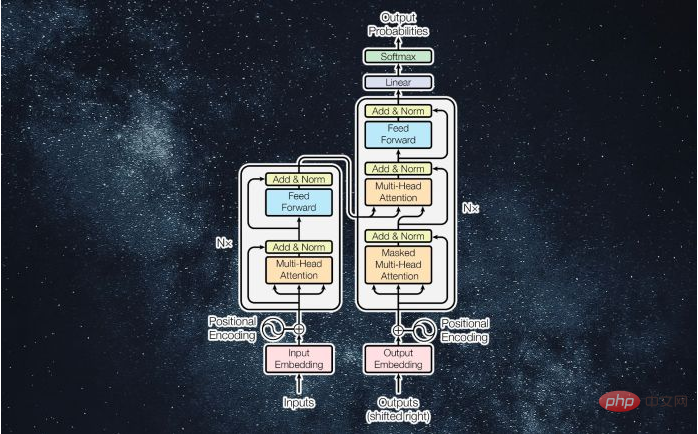

什么是Transformer机器学习模型?Apr 08, 2023 pm 06:31 PM

什么是Transformer机器学习模型?Apr 08, 2023 pm 06:31 PM译者 | 李睿审校 | 孙淑娟近年来, Transformer 机器学习模型已经成为深度学习和深度神经网络技术进步的主要亮点之一。它主要用于自然语言处理中的高级应用。谷歌正在使用它来增强其搜索引擎结果。OpenAI 使用 Transformer 创建了著名的 GPT-2和 GPT-3模型。自从2017年首次亮相以来,Transformer 架构不断发展并扩展到多种不同的变体,从语言任务扩展到其他领域。它们已被用于时间序列预测。它们是 DeepMind 的蛋白质结构预测模型 AlphaFold

AI模型告诉你,为啥巴西最可能在今年夺冠!曾精准预测前两届冠军Apr 09, 2023 pm 01:51 PM

AI模型告诉你,为啥巴西最可能在今年夺冠!曾精准预测前两届冠军Apr 09, 2023 pm 01:51 PM说起2010年南非世界杯的最大网红,一定非「章鱼保罗」莫属!这只位于德国海洋生物中心的神奇章鱼,不仅成功预测了德国队全部七场比赛的结果,还顺利地选出了最终的总冠军西班牙队。不幸的是,保罗已经永远地离开了我们,但它的「遗产」却在人们预测足球比赛结果的尝试中持续存在。在艾伦图灵研究所(The Alan Turing Institute),随着2022年卡塔尔世界杯的持续进行,三位研究员Nick Barlow、Jack Roberts和Ryan Chan决定用一种AI算法预测今年的冠军归属。预测模型图

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Dreamweaver Mac version

Visual web development tools

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

Atom editor mac version download

The most popular open source editor

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft

SublimeText3 Chinese version

Chinese version, very easy to use