Microsoft AI researchers accidentally leaked 38TB of internal data, including private keys and passwords

Source: IT Home

Wiz Research announced today that it had discovered a data leak in a Microsoft AI GitHub repository, caused by a misconfigured SAS token (IT Home note: Shared Access Signature).

Microsoft's artificial intelligence research team published open-source training data on GitHub but accidentally exposed 38TB of additional internal data, including disk backups of several Microsoft employees' personal computers. The backups contained confidential information, private keys, passwords, and more than 30,000 internal Microsoft Teams messages from hundreds of employees.

The GitHub repository provides open-source code and AI models for image recognition, and visitors were instructed to download the models from an Azure Storage URL. However, Wiz found that the permissions on that URL were misconfigured: instead of covering only the intended files, the SAS token granted access to the entire storage account, which exposed other private data.

According to the report, the URL had been exposing the data since 2020. In addition, the token was configured with "full control" rather than "read-only" permissions, which means anyone who had the URL could have deleted or replaced files, or injected malicious content into them.
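The three problems described above (account-wide scope, write access, and a very long lifetime) are all visible in the query string of a SAS URL. The following is a minimal sketch, not Wiz's or Microsoft's tooling, that parses a SAS URL and flags them. It relies on the documented Azure SAS query parameters `ss`, `srt`, `sr`, `sp` and `se`. The URL, the account name `exampleaccount`, the seven-day lifetime threshold, and the function name `audit_sas_url` are all hypothetical and chosen only for illustration.

```python
from datetime import datetime, timedelta, timezone
from urllib.parse import parse_qs, urlsplit

# Permissions treated as harmless for a public download link (read, list).
READ_ONLY = {"r", "l"}


def audit_sas_url(url, now, max_lifetime=timedelta(days=7)):
    """Return a list of warnings for a SAS URL; an empty list means no findings."""
    params = {k: v[0] for k, v in parse_qs(urlsplit(url).query).items()}
    warnings = []

    # Account SAS tokens carry `ss` (services) and `srt` (resource types);
    # service SAS tokens carry `sr` (a single container or blob) instead.
    if "ss" in params and "srt" in params:
        warnings.append("account-level SAS: scope is the whole storage account")

    # Any permission letter other than read/list allows modification.
    extra = set(params.get("sp", "")) - READ_ONLY
    if extra:
        warnings.append("permissions beyond read/list: " + "".join(sorted(extra)))

    expiry = params.get("se")
    if expiry is None:
        warnings.append("no expiry (se) found")
    else:
        expires_at = datetime.fromisoformat(expiry.replace("Z", "+00:00"))
        if expires_at.tzinfo is None:  # date-only expiry values are taken as UTC
            expires_at = expires_at.replace(tzinfo=timezone.utc)
        if expires_at - now > max_lifetime:
            warnings.append("expiry is far in the future: " + expiry)
    return warnings


# Hypothetical URL shaped like the misconfiguration described in the article.
risky_url = (
    "https://exampleaccount.blob.core.windows.net/models"
    "?sv=2020-08-04&ss=b&srt=sco&sp=rwdlacup"
    "&se=2051-10-06T00%3A00%3A00Z&sig=PLACEHOLDER"
)
now = datetime(2023, 6, 22, tzinfo=timezone.utc)

for warning in audit_sas_url(risky_url, now):
    print(warning)
```

A link scoped to a single blob (`sr=b`), with `sp=r` and an expiry a day or two away, would produce no warnings under these assumptions.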

Wiz said it reported the issue to Microsoft on June 22, 2023, and Microsoft revoked the SAS token two days later, on June 24. Microsoft said it completed its investigation into the potential organizational impact on August 16.

The following is the specific timeline of the entire incident:

July 20, 2020 - SAS token first committed to GitHub, with an expiration date of October 5, 2021

October 6, 2021 - SAS token expiration date updated to October 6, 2051

June 22, 2023 - Wiz Research discovers the issue and reports it to Microsoft

June 24, 2023 - Microsoft invalidates the SAS token

July 7, 2023 - SAS token replaced on GitHub

August 16, 2023 - Microsoft completes its internal investigation into the potential impact

September 18, 2023 - Wiz Research publicly discloses the incident
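To put the timeline in numbers, the short sketch below computes a few intervals from the dates listed above. The variable names are illustrative only. Note that the token was valid from the first commit until it was revoked, so the exposure window is measured between those two dates.

```python
from datetime import date

# Dates taken from the timeline above.
token_committed = date(2020, 7, 20)
extended_expiry = date(2051, 10, 6)
reported = date(2023, 6, 22)
revoked = date(2023, 6, 24)
disclosed = date(2023, 9, 18)

# How long the token was usable by anyone who found the URL.
exposure_days = (revoked - token_committed).days
# How quickly the token was revoked after the report.
response_days = (revoked - reported).days
# How long Wiz waited between reporting and public disclosure.
disclosure_delay_days = (disclosed - reported).days
# How much longer the token would have stayed valid without revocation.
remaining_validity_days = (extended_expiry - revoked).days

print(f"exposed for {exposure_days} days")
print(f"revoked {response_days} days after the report")
print(f"disclosed {disclosure_delay_days} days after the report")
print(f"would have stayed valid for another {remaining_validity_days} days")
```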


An easy-to-understand explanation of how to make inventory management more efficient using ChatGPT!May 14, 2025 am 03:44 AM

An easy-to-understand explanation of how to make inventory management more efficient using ChatGPT!May 14, 2025 am 03:44 AMEasy to implement even for small and medium-sized businesses! Smart inventory management with ChatGPT and Excel Inventory management is the lifeblood of your business. Overstocking and out-of-stock items have a serious impact on cash flow and customer satisfaction. However, the current situation is that introducing a full-scale inventory management system is high in terms of cost. What you'd like to focus on is the combination of ChatGPT and Excel. In this article, we will explain step by step how to streamline inventory management using this simple method. Automate tasks such as data analysis, demand forecasting, and reporting to dramatically improve operational efficiency. moreover,

An easy-to-understand explanation of how to check and switch versions of ChatGPT!May 14, 2025 am 03:43 AM

An easy-to-understand explanation of how to check and switch versions of ChatGPT!May 14, 2025 am 03:43 AMUse AI wisely by choosing a ChatGPT version! A thorough explanation of the latest information and how to check ChatGPT is an ever-evolving AI tool, but its features and performance vary greatly depending on the version. In this article, we will explain in an easy-to-understand manner the features of each version of ChatGPT, how to check the latest version, and the differences between the free version and the paid version. Choose the best version and make the most of your AI potential. Click here for more information about OpenAI's latest AI agent, OpenAI Deep Research ⬇️ [ChatGPT] OpenAI D

Explaining the reasons why you cannot use your credit card with ChatGPT's paid plan and how to deal with itMay 14, 2025 am 03:32 AM

Explaining the reasons why you cannot use your credit card with ChatGPT's paid plan and how to deal with itMay 14, 2025 am 03:32 AMTroubleshooting Guide for Credit Card Payment with ChatGPT Paid Subscriptions Credit card payments may be problematic when using ChatGPT paid subscription. This article will discuss the reasons for credit card rejection and the corresponding solutions, from problems solved by users themselves to the situation where they need to contact a credit card company, and provide detailed guides to help you successfully use ChatGPT paid subscription. OpenAI's latest AI agent, please click ⬇️ for details of "OpenAI Deep Research" 【ChatGPT】Detailed explanation of OpenAI Deep Research: How to use and charging standards Table of contents Causes of failure in ChatGPT credit card payment Reason 1: Incorrect input of credit card information Original

An easy-to-understand explanation of how to create a VBA macro in ChatGPT!May 14, 2025 am 02:40 AM

An easy-to-understand explanation of how to create a VBA macro in ChatGPT!May 14, 2025 am 02:40 AMFor beginners and those interested in business automation, writing VBA scripts, an extension to Microsoft Office, may find it difficult. However, ChatGPT makes it easy to streamline and automate business processes. This article explains in an easy-to-understand manner how to develop VBA scripts using ChatGPT. We will introduce in detail specific examples, from the basics of VBA to script implementation using ChatGPT integration, testing and debugging, and benefits and points to note. With the aim of improving programming skills and improving business efficiency,

I can't use the ChatGPT plugin function! Explaining what to do in case of an errorMay 14, 2025 am 01:56 AM

I can't use the ChatGPT plugin function! Explaining what to do in case of an errorMay 14, 2025 am 01:56 AMChatGPT plugin cannot be used? This guide will help you solve your problem! Have you ever encountered a situation where the ChatGPT plugin is unavailable or suddenly fails? The ChatGPT plugin is a powerful tool to enhance the user experience, but sometimes it can fail. This article will analyze in detail the reasons why the ChatGPT plug-in cannot work properly and provide corresponding solutions. From user setup checks to server troubleshooting, we cover a variety of troubleshooting solutions to help you efficiently use plug-ins to complete daily tasks. OpenAI Deep Research, the latest AI agent released by OpenAI. For details, please click ⬇️ [ChatGPT] OpenAI Deep Research Detailed explanation:

Does ChatGPT not follow the character count specification? A thorough explanation of how to deal with this!May 14, 2025 am 01:54 AM

Does ChatGPT not follow the character count specification? A thorough explanation of how to deal with this!May 14, 2025 am 01:54 AMWhen writing a sentence using ChatGPT, there are times when you want to specify the number of characters. However, it is difficult to accurately predict the length of sentences generated by AI, and it is not easy to match the specified number of characters. In this article, we will explain how to create a sentence with the number of characters in ChatGPT. We will introduce effective prompt writing, techniques for getting answers that suit your purpose, and teach you tips for dealing with character limits. In addition, we will explain why ChatGPT is not good at specifying the number of characters and how it works, as well as points to be careful about and countermeasures. This article

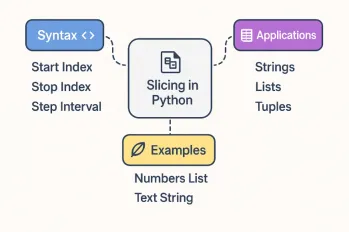

All About Slicing Operations in PythonMay 14, 2025 am 01:48 AM

All About Slicing Operations in PythonMay 14, 2025 am 01:48 AMFor every Python programmer, whether in the domain of data science and machine learning or software development, Python slicing operations are one of the most efficient, versatile, and powerful operations. Python slicing syntax a

An easy-to-understand explanation of how to use ChatGPT to create quotes!May 14, 2025 am 01:44 AM

An easy-to-understand explanation of how to use ChatGPT to create quotes!May 14, 2025 am 01:44 AMThe evolution of AI technology has accelerated business efficiency. What's particularly attracting attention is the creation of estimates using AI. OpenAI's AI assistant, ChatGPT, contributes to improving the estimate creation process and improving accuracy. This article explains how to create a quote using ChatGPT. We will introduce efficiency improvements through collaboration with Excel VBA, specific examples of application to system development projects, benefits of AI implementation, and future prospects. Learn how to improve operational efficiency and productivity with ChatGPT. Op
