Java development: How to collect and analyze distributed logs

As Internet applications grow and data volumes increase, collecting and analyzing logs becomes more and more important. Distributed log collection and analysis helps developers monitor the running status of applications, locate problems quickly, and optimize application performance. This article introduces how to use Java to build a distributed log collection and analysis system and provides specific code examples.

- Select a log collection tool

Before conducting distributed log collection and analysis, we need to choose a suitable log collection tool. The open source ELK stack (Elasticsearch, Logstash, Kibana) is a very popular set of log collection and analysis tools that provides real-time log collection, indexing, and visual analysis. We can implement distributed log collection and analysis by combining Logstash plug-ins with the Elasticsearch Java API.

- Configure Logstash plug-in

Logstash is an open source data collection engine that can collect data from multiple sources and transmit it to the target system. In order to implement distributed log collection, we need to specify the input plug-in and output plug-in in the Logstash configuration file.

    input {
      file {
        path => "/path/to/log/file.log"
        type => "java"
        codec => json
      }
    }

    output {
      elasticsearch {
        hosts => "localhost:9200"
        index => "java_logs"
        template => "/path/to/elasticsearch/template.json"
        template_name => "java_logs"
      }
    }

In this example, we use the file plug-in as the input plug-in, specify the path of the log file to collect, and set the log type to "java". The elasticsearch plug-in then serves as the output plug-in and sends the collected logs to Elasticsearch.
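The `codec => json` setting suggests that each line of the collected file is expected to be a JSON object. As a rough illustration of the producing side, the sketch below formats one log event as a single JSON line using only the JDK. The class name `JsonLogLine`, its methods, and the choice of `timestamp` and `message` as field names (borrowed from the mapping defined later in this article) are assumptions for illustration, not part of Logstash; a real application would more likely rely on a logging library's JSON encoder.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;

/** Hypothetical helper: formats one log event as a single JSON line. */
final class JsonLogLine {
    // Same pattern the article uses for the "timestamp" field mapping.
    private static final DateTimeFormatter TS =
            DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");

    /** Escapes the characters that must not appear raw inside a JSON string. */
    static String escape(String s) {
        StringBuilder sb = new StringBuilder(s.length() + 8);
        for (int i = 0; i < s.length(); i++) {
            char c = s.charAt(i);
            switch (c) {
                case '"':  sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:
                    if (c < 0x20) {
                        // Remaining control characters become a 4-digit hex escape.
                        sb.append(String.format("\\u%04x", (int) c));
                    } else {
                        sb.append(c);
                    }
            }
        }
        return sb.toString();
    }

    /** Returns one JSON object on one line, without a trailing newline. */
    static String format(LocalDateTime time, String message) {
        return "{\"timestamp\":\"" + TS.format(time)
                + "\",\"message\":\"" + escape(message) + "\"}";
    }

    public static void main(String[] args) {
        System.out.println(format(LocalDateTime.now(), "order service started"));
    }
}
```

Appending each returned line, followed by a newline, to the file named in `path` would give Logstash one event per line.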

- Configure Elasticsearch

Elasticsearch is a distributed real-time search and analysis engine that can store and retrieve massive amounts of data in real time. Before proceeding with distributed log collection and analysis, we need to create indexes and mappings in Elasticsearch.

First, create the index with the Elasticsearch Java API:

    // Connect to a single local node over HTTP.
    RestHighLevelClient client = new RestHighLevelClient(
            RestClient.builder(new HttpHost("localhost", 9200, "http")));
    // Create an empty index named "java_logs".
    CreateIndexRequest request = new CreateIndexRequest("java_logs");
    CreateIndexResponse response = client.indices().create(request, RequestOptions.DEFAULT);
    // Close the client only when no further requests will be sent with it.
    client.close();

Then, create the mapping with the Java API:

    // "client" is an open RestHighLevelClient, created as in the index example.
    PutMappingRequest request = new PutMappingRequest("java_logs");
    request.source("{\n" +
            "  \"properties\" : {\n" +
            "    \"timestamp\" : {\n" +
            "      \"type\" : \"date\",\n" +
            "      \"format\" : \"yyyy-MM-dd HH:mm:ss\"\n" +
            "    },\n" +
            "    \"message\" : {\n" +
            "      \"type\" : \"text\"\n" +
            "    }\n" +
            "  }\n" +
            "}", XContentType.JSON);
    AcknowledgedResponse response = client.indices().putMapping(request, RequestOptions.DEFAULT);
    client.close();

In this example, we define the mapping for the "java_logs" index and specify two fields: a timestamp field of type date with the format "yyyy-MM-dd HH:mm:ss", and a message field of type text.
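Once the index and mapping exist, documents matching those two fields can be written to the index. The article itself uses the high-level REST client; the sketch below swaps in the JDK's own `java.net.http` API (Java 11 or later) purely to stay dependency-free, and targets Elasticsearch's `POST /<index>/_doc` endpoint. The class name `IndexLogRequest`, the `build` helper, and the sample document are hypothetical. The request is only constructed here, not sent.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.nio.charset.StandardCharsets;

/** Hypothetical helper: builds a request that would index one log document. */
final class IndexLogRequest {

    /** Builds (but does not send) a POST to {baseUrl}/{index}/_doc. */
    static HttpRequest build(String baseUrl, String index, String jsonDocument) {
        return HttpRequest.newBuilder()
                .uri(URI.create(baseUrl + "/" + index + "/_doc"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(jsonDocument, StandardCharsets.UTF_8))
                .build();
    }

    public static void main(String[] args) {
        // The timestamp follows the "yyyy-MM-dd HH:mm:ss" format from the mapping.
        String doc = "{\"timestamp\":\"2023-09-01 12:30:05\","
                + "\"message\":\"order service started\"}";
        HttpRequest request = build("http://localhost:9200", "java_logs", doc);
        System.out.println(request.method() + " " + request.uri());
        // Sending it requires a reachable cluster, for example:
        // HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString());
    }
}
```

In the pipeline described in this article, Logstash normally performs this write; the sketch is mainly useful for checking by hand that a document is accepted by the mapping.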

- Use Kibana for analysis

Kibana is an open source analysis and visualization platform based on Elasticsearch, which can display data analysis results in the form of various charts and dashboards. We can use Kibana to perform real-time query and visual analysis of distributed logs and quickly locate problems.

The method of creating visual charts and dashboards in Kibana is relatively complicated and will not be introduced here.
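The index that Kibana visualizes can also be queried directly, which is sometimes handy when locating a problem from code or a script. The sketch below builds an Elasticsearch Query DSL body that combines a full-text `match` on `message` with a `range` filter on `timestamp`, and wraps it in a request to the `_search` endpoint. The class name `LogSearchRequest`, its helpers, and the sample keyword and date are hypothetical; the JDK `java.net.http` API (Java 11 or later) is used only to avoid extra dependencies, and the request is built without being sent.

```java
import java.net.URI;
import java.net.http.HttpRequest;

/** Hypothetical helper: builds a search over the "message" and "timestamp" fields. */
final class LogSearchRequest {

    /**
     * Query DSL body: full-text match on "message", lower bound on "timestamp".
     * Assumes keyword and since contain no characters that need JSON escaping.
     */
    static String queryBody(String keyword, String since) {
        return "{\"query\":{\"bool\":{"
                + "\"must\":[{\"match\":{\"message\":\"" + keyword + "\"}}],"
                + "\"filter\":[{\"range\":{\"timestamp\":{\"gte\":\"" + since + "\"}}}]"
                + "}}}";
    }

    /** Builds (but does not send) a POST to {baseUrl}/{index}/_search. */
    static HttpRequest build(String baseUrl, String index, String body) {
        return HttpRequest.newBuilder()
                .uri(URI.create(baseUrl + "/" + index + "/_search"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(body))
                .build();
    }

    public static void main(String[] args) {
        String body = queryBody("ERROR", "2023-09-01 00:00:00");
        HttpRequest request = build("http://localhost:9200", "java_logs", body);
        System.out.println(request.method() + " " + request.uri());
        System.out.println(body);
    }
}
```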

Summary:

Through the above steps, we can build a simple distributed log collection and analysis system. First use Logstash for log collection and transmission, then use Elasticsearch for data storage and retrieval, and finally use Kibana for data analysis and visualization. In this way, we can better monitor the running status of the application, quickly locate problems, and optimize application performance.

It should be noted that the configuration and code in the above examples are for reference only, and the specific implementation methods and functions need to be adjusted and expanded according to actual needs. At the same time, distributed log collection and analysis is a complex technology that requires certain Java development and system management experience.

The above is the detailed content of Java development: How to perform distributed log collection and analysis. For more information, please follow other related articles on the PHP Chinese website!

PHP实现开源SeaweedFS分布式文件系统Jun 18, 2023 pm 03:56 PM

PHP实现开源SeaweedFS分布式文件系统Jun 18, 2023 pm 03:56 PM在分布式系统的架构中,文件管理和存储是非常重要的一部分。然而,传统的文件系统在应对大规模的文件存储和管理时遇到了一些问题。为了解决这些问题,SeaweedFS分布式文件系统被开发出来。在本文中,我们将介绍如何使用PHP来实现开源SeaweedFS分布式文件系统。什么是SeaweedFS?SeaweedFS是一个开源的分布式文件系统,它用于解决大规模文件存储和

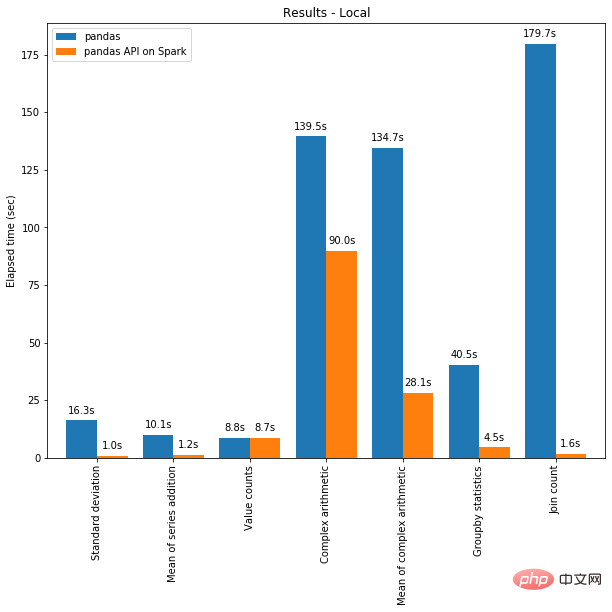

Pandas 与 PySpark 强强联手,功能与速度齐飞!May 01, 2023 pm 09:19 PM

Pandas 与 PySpark 强强联手,功能与速度齐飞!May 01, 2023 pm 09:19 PM使用Python做数据处理的数据科学家或数据从业者,对数据科学包pandas并不陌生,也不乏像云朵君一样的pandas重度使用者,项目开始写的第一行代码,大多是importpandasaspd。pandas做数据处理可以说是yyds!而他的缺点也是非常明显,pandas只能单机处理,它不能随数据量线性伸缩。例如,如果pandas试图读取的数据集大于一台机器的可用内存,则会因内存不足而失败。另外pandas在处理大型数据方面非常慢,虽然有像Dask或Vaex等其他库来优化提升数

PHP中的分布式数据中心May 23, 2023 pm 11:40 PM

PHP中的分布式数据中心May 23, 2023 pm 11:40 PM随着互联网的快速发展,网站的访问量也在不断增长。为了满足这一需求,我们需要构建高可用性的系统。分布式数据中心就是这样一个系统,它将各个数据中心的负载分散到不同的服务器上,增加系统的稳定性和可扩展性。在PHP开发中,我们也可以通过一些技术实现分布式数据中心。分布式缓存分布式缓存是互联网分布式应用中最常用的技术之一。它将数据缓存在多个节点上,提高数据的访问速度和

使用Redis实现分布式计数器May 11, 2023 am 08:06 AM

使用Redis实现分布式计数器May 11, 2023 am 08:06 AM什么是分布式计数器?在分布式系统中,多个节点之间需要对共同的状态进行更新和读取,而计数器是其中一种应用最广泛的状态之一。通俗地讲,计数器就是一个变量,每次被访问时其值就会加1或减1,用于跟踪某个系统进展的指标。而分布式计数器则指的是在分布式环境下对计数器进行操作和管理。为什么要使用Redis实现分布式计数器?随着分布式计算的普及,分布式系统中的许多细节问题也

分布式系统必须知道的一个共识算法:RaftApr 07, 2023 pm 05:54 PM

分布式系统必须知道的一个共识算法:RaftApr 07, 2023 pm 05:54 PM一、Raft 概述Raft 算法是分布式系统开发首选的共识算法。比如现在流行 Etcd、Consul。如果掌握了这个算法,就可以较容易地处理绝大部分场景的容错和一致性需求。比如分布式配置系统、分布式 NoSQL 存储等等,轻松突破系统的单机限制。Raft 算法是通过一切以领导者为准的方式,实现一系列值的共识和各节点日志的一致。二、Raft 角色2.1 角色跟随者(Follower):普通群众,默默接收和来自领导者的消息,当领导者心跳信息超时的

Redis实现分布式配置管理的方法与应用实例May 11, 2023 pm 04:22 PM

Redis实现分布式配置管理的方法与应用实例May 11, 2023 pm 04:22 PMRedis实现分布式配置管理的方法与应用实例随着业务的发展,配置管理对于一个系统而言变得越来越重要。一些通用的应用配置(如数据库连接信息,缓存配置等),以及一些需要动态控制的开关配置,都需要进行统一管理和更新。在传统架构中,通常是通过在每台服务器上通过单独的配置文件进行管理,但这种方式会导致配置文件的管理和同步变得十分复杂。因此,在分布式架构下,采用一个可靠

Redis实现分布式对象存储的方法与应用实例May 10, 2023 pm 08:48 PM

Redis实现分布式对象存储的方法与应用实例May 10, 2023 pm 08:48 PMRedis实现分布式对象存储的方法与应用实例随着互联网的快速发展和数据量的快速增长,传统的单机存储已经无法满足业务的需求,因此分布式存储成为了当前业界的热门话题。Redis是一个高性能的键值对数据库,它不仅支持丰富的数据结构,而且支持分布式存储,因此具有极高的应用价值。本文将介绍Redis实现分布式对象存储的方法,并结合应用实例进行说明。一、Redis实现分

PHP与数据库分布式的集成May 15, 2023 pm 09:40 PM

PHP与数据库分布式的集成May 15, 2023 pm 09:40 PM随着互联网技术的发展,对于一个网络应用而言,对数据库的操作非常频繁。特别是对于动态网站,甚至有可能出现每秒数百次的数据库请求,当数据库处理能力不能满足需求时,我们可以考虑使用数据库分布式。而分布式数据库的实现离不开与编程语言的集成。PHP作为一门非常流行的编程语言,具有较好的适用性和灵活性,这篇文章将着重介绍PHP与数据库分布式集成的实践。分布式的概念分布式

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

SublimeText3 Linux new version

SublimeText3 Linux latest version

Notepad++7.3.1

Easy-to-use and free code editor

Atom editor mac version download

The most popular open source editor

WebStorm Mac version

Useful JavaScript development tools

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment