Now Available Online! The First Heart of Machine AI Technology Forum Concludes Successfully: Large Model Talks Worth Watching Again and Again

- Reposted by PHPz

- 2023-09-14 09:49:02

Artificial intelligence has entered the era of large models. This will fundamentally change how AI is deployed across industries, and it places new AI skill demands on R&D staff and technical practitioners in every sector.

To help practitioners upgrade their large model skills, we held the AI technology forum "Llama 2 Large Model Algorithm and Application Practice", which has now concluded successfully. On the day of the event, the multi-function hall on the second floor of Tower B, Wangjing Pohang Center, Beijing, was packed with 200 participants from more than ten cities across the country. Guided by four large model experts, participants systematically studied the technology underlying Llama 2, built their own large models hands-on, and together efficiently upgraded their large model skills.

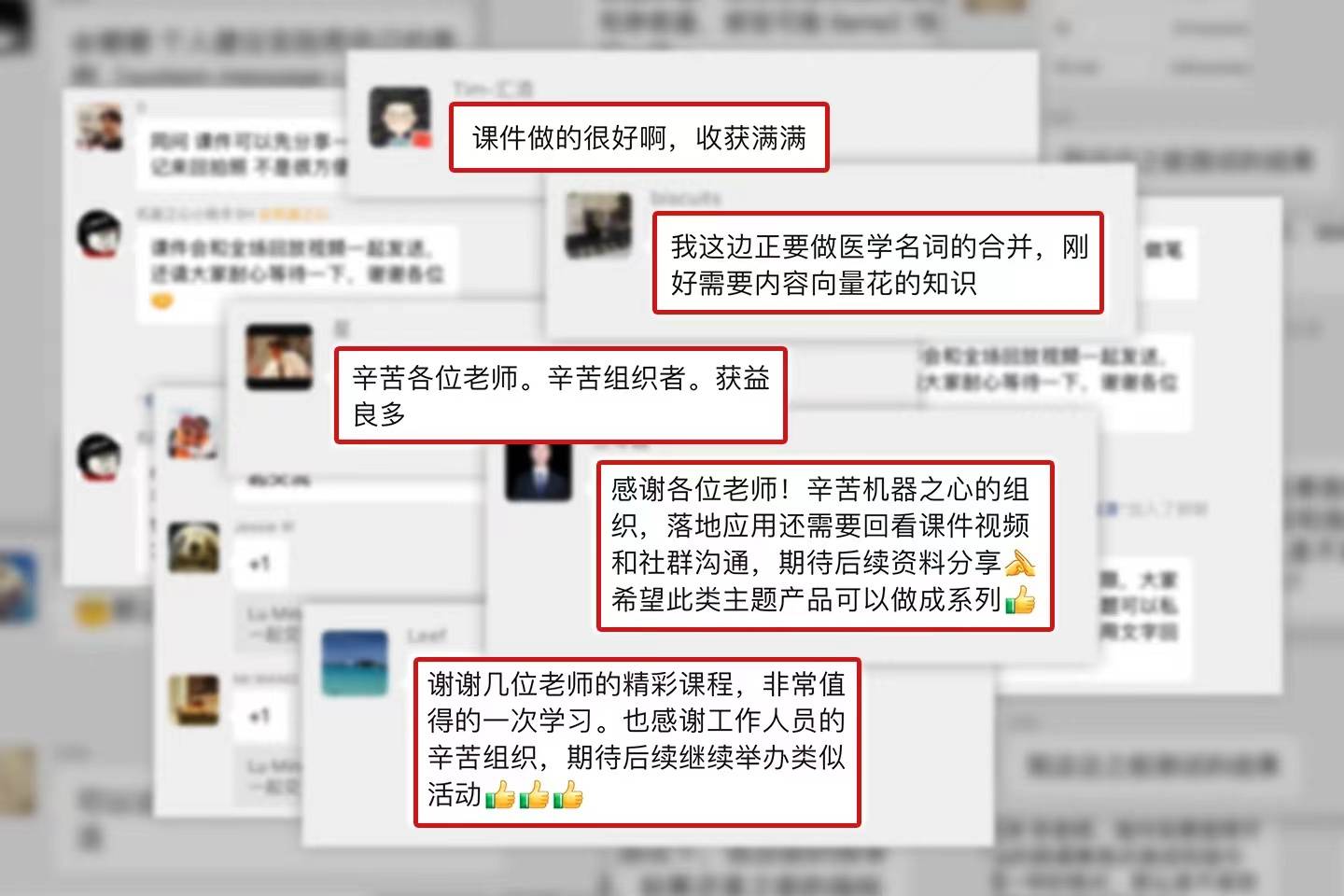

Participants praised the event widely: the content was high quality and exceeded expectations, the talks were engaging and in-depth, the on-site Q&A was enlightening, and the service was thorough and thoughtful. Many expressed hope for more technical events with this range and depth.

In addition, many people interested in the event could not attend in person because of distance or scheduling conflicts. To meet their learning and practice needs, the live recordings of this forum are now available on this site's official knowledge station (https://vtizr.xet.tech/s/1GOWfs). The full video set is priced at 699 yuan; after purchasing, add this site's assistant ID, 13661489516, to receive the accompanying pre- and post-event learning materials package. Buy the course now and start upgrading your large model skills!

Systematic explanations: the latest technical progress and cutting-edge application cases

Liu Pengfei, tenure-track associate professor at the Qingyuan Research Institute of Shanghai Jiao Tong University and head of the Generative Artificial Intelligence Research Group (GAIR)

"If a model is trained thoroughly enough, a relatively small model can achieve very good performance," said Liu Pengfei, the first speaker, whose research focuses on pre-training, generation, and evaluation of natural language.

Among pre-trained language models on the market, Llama 2 stands out for its originality, the transparency of its published training details, and its strong reputation, which give it very high research value. Speaking on "Technical Interpretation of the Llama 2 Large Model", Liu Pengfei covered the background that led to LLaMA, key concepts, and Llama 2's training and alignment techniques, and explained in depth the technology stack behind Llama 2 and its Chat version. He also shared practical optimization experience, including technical details such as data-source weighting and upsampling, and discussed the difficulties that must be overcome for large models to keep advancing.
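
The talk only named data-source weighting and upsampling, so the sketch below is a generic illustration under stated assumptions, not the Llama 2 recipe. It assumes three hypothetical corpora (`web`, `books`, `code`) and hypothetical mixture weights, draws each training document by first picking a source according to its weight, and reports how many times more often each source is seen than its natural share of the data. A factor above 1 means the source is upsampled (its documents are repeated more often).

```python
import random
from collections import Counter

# Hypothetical corpora (placeholders): source name -> list of documents.
SOURCES = {
    "web": [f"web_doc_{i}" for i in range(1000)],
    "books": [f"book_doc_{i}" for i in range(100)],
    "code": [f"code_doc_{i}" for i in range(50)],
}

# Hypothetical mixture weights, assumed for illustration only.
WEIGHTS = {"web": 0.6, "books": 0.25, "code": 0.15}


def natural_share(sources):
    """Fraction of documents each source contributes without reweighting."""
    total = sum(len(docs) for docs in sources.values())
    return {name: len(docs) / total for name, docs in sources.items()}


def upsampling_factor(sources, weights):
    """How many times more often a source is drawn than its natural share."""
    share = natural_share(sources)
    return {name: weights[name] / share[name] for name in sources}


def sample_batch(sources, weights, n, seed=0):
    """Draw n documents: pick a source by weight, then a document uniformly within it."""
    rng = random.Random(seed)
    names = list(sources)
    probs = [weights[name] for name in names]
    batch = []
    for _ in range(n):
        name = rng.choices(names, weights=probs, k=1)[0]
        batch.append((name, rng.choice(sources[name])))
    return batch


if __name__ == "__main__":
    print(upsampling_factor(SOURCES, WEIGHTS))
    counts = Counter(name for name, _ in sample_batch(SOURCES, WEIGHTS, 10_000))
    print(counts)
```

With these made-up numbers, `books` and `code` are upsampled by 2.875x and 3.45x while `web` is downsampled to 0.69x. Choosing the weights themselves is the data-dependent part that this sketch does not address.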

Liu Huanyong, author of the "Lao Liu Says NLP" technical public account and open-source enthusiast

Foundation models place very high demands on compute and algorithms, so most industry applications instead build an industry model on top of a foundation model and then refine it further for sub-domains. As speaker Liu Huanyong put it: "Foundation models contain relatively little vertical-domain data, so they are prone to hallucinations and the results are unsatisfactory." Although Llama is not the best choice for the Chinese market, it was the first to be open-sourced and usable commercially, so the rich body of industry deployment cases built around it is very valuable.

In his talk, "Interpretation of Practical Paradigms and Cases for Industry Implementation Based on the Llama Series Open Source Model", he compared the Llama series with other open-source models and explained their Chinese-language adaptation mechanisms. He then presented cases of domain fine-tuned models built on the Llama series and cases of knowledge base enhancement built on Llama series models. He summarized the prerequisites for fine-tuning models in industry settings: compute, data quantity and quality, ROI, and suitable scenarios. He stressed that industry deployment of large models is likely to take forms such as handling multi-source heterogeneous data, intelligent document analysis, and knowledge base standardization.

Shi Yemin, CEO of LinkSoul.AI and initiator of the Chinese Llama 2 7B project

As large model technology develops, multimodal large models are increasingly becoming the driving force behind embodied intelligence and the key to better user experiences, and they are set to become a core component of the next generation of artificial intelligence. Adapting an existing LLM to new modalities is currently one of the best approaches to building multimodal models and has great research and practical value.

Under the theme "Technology and Practice of Multimodal Large Models Based on Llama 2", Shi Yemin explained the modalities and principles of multimodal models and discussed in depth how to improve visual and speech multimodal large models. He covered how an existing model can be extended to support new modalities and what to watch for when adding new capabilities. He also introduced several approaches to multimodal improvement and highlighted training details that need attention. In his view, the biggest challenge for multimodal models lies in data, and he shared methods and paths for using models to generate image or image-text data.

Su Yang, large model evangelist in China and contributor to Llama 2 7B

In the pre-event survey, the industry application topic participants cared about most was "quantization and fine-tuning". Su Yang's session, "Llama 2 Open Source Model Quantization and Low-Cost Fine-Tuning Practice", covered the current state of the open-source model ecosystem and the Llama ecosystem, mainstream quantization and fine-tuning approaches, and how easy each is to use in practice.

"For fine-tuning, use the SOTA solutions shared by the open-source community rather than blindly pursuing originality," he advised. He stressed that quantization and fine-tuning are quite practical as long as certain principles are followed. Real-world model quantization is not a simple one-size-fits-all process: there are many dimensions and parameters, and the most reasonable quantization or transformation has to be chosen for each layer and each column. Fine-tuning likewise has to account for the specific training environment, hardware, and underlying system environment. Su Yang explained these points in depth.

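The point that quantization has to be decided per layer and per column can be illustrated with a toy comparison. The sketch below assumes plain symmetric absmax int8 quantization, which is not necessarily any scheme presented at the forum. It quantizes a small hypothetical weight matrix twice, once with a single scale for the whole tensor and once with one scale per column, then measures the reconstruction error in each column.

```python
"""Per-tensor vs per-column symmetric int8 quantization (illustrative sketch)."""


def quantize_per_tensor(w, bits=8):
    """One scale for the whole matrix, set by its largest absolute value."""
    qmax = 2 ** (bits - 1) - 1  # 127 for 8 bits
    absmax = max(abs(x) for row in w for x in row)
    scale = absmax / qmax if absmax > 0 else 1.0
    q = [[round(x / scale) for x in row] for row in w]
    return q, [scale] * len(w[0])  # same scale repeated for every column


def quantize_per_column(w, bits=8):
    """One scale per column, so small-valued columns keep their resolution."""
    qmax = 2 ** (bits - 1) - 1
    scales = []
    for j in range(len(w[0])):
        absmax = max(abs(row[j]) for row in w)
        scales.append(absmax / qmax if absmax > 0 else 1.0)
    q = [[round(x / scales[j]) for j, x in enumerate(row)] for row in w]
    return q, scales


def dequantize(q, scales):
    """Map integer codes back to floats using each column's scale."""
    return [[v * scales[j] for j, v in enumerate(row)] for row in q]


def column_errors(w, w_hat):
    """Largest absolute reconstruction error in each column."""
    return [max(abs(a[j] - b[j]) for a, b in zip(w, w_hat)) for j in range(len(w[0]))]


if __name__ == "__main__":
    # Hypothetical toy weights: column 0 is tiny, column 1 holds large values.
    W = [[0.01, 50.0], [-0.02, -40.0], [0.015, 30.0]]
    for name, fn in [("per-tensor", quantize_per_tensor), ("per-column", quantize_per_column)]:
        q, s = fn(W)
        print(name, column_errors(W, dequantize(q, s)))
```

Because column 1 contains values up to 50, a single shared scale rounds every entry of column 0 to zero (a maximum error of 0.02, so that column is lost entirely), while per-column scales keep its error below half a quantization step. Practical methods add refinements such as group-wise scales or outlier handling, which this sketch leaves out.
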
After the in-depth technical talks and case analyses, Shi Yemin and Su Yang led participants through hands-on practice in large model training, quantization, and fine-tuning.

Shi Yemin focused on base model selection and on training techniques and experience. He covered two paths, training from scratch on a base model and vocabulary-expansion training on an open-source model, along with the corresponding selection criteria: architecture, model size, whether commercial use is permitted, and whether to expand the vocabulary. He also answered questions about SFT datasets and related topics.
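
Vocabulary-expansion training adds tokens (for example, frequent Chinese words) to an open-source model's tokenizer, so the model's embedding table has to grow as well. The sketch below assumes one common heuristic, initializing each new embedding row to the mean of the existing rows before continued training; the session may have recommended a different initialization. The function name `expand_embeddings` and the toy vocabulary are hypothetical.

```python
def expand_embeddings(embeddings, vocab, new_tokens):
    """Append a row for each token not already in vocab.

    Each new row is the mean of the existing rows (an assumed heuristic).
    Returns new copies; the caller's embeddings and vocab are not modified.
    """
    dim = len(embeddings[0])
    mean = [sum(row[k] for row in embeddings) / len(embeddings) for k in range(dim)]
    vocab = dict(vocab)
    embeddings = [list(row) for row in embeddings]
    for tok in new_tokens:
        if tok in vocab:
            continue  # the base vocabulary already covers this token
        vocab[tok] = len(embeddings)  # new id = next free row index
        embeddings.append(list(mean))
    return embeddings, vocab


if __name__ == "__main__":
    # Hypothetical toy vocabulary with 2-dimensional embeddings.
    base_vocab = {"<s>": 0, "a": 1, "b": 2}
    base_emb = [[0.0, 0.0], [1.0, 2.0], [2.0, 4.0]]
    emb, voc = expand_embeddings(base_emb, base_vocab, ["你好", "a", "模型"])
    print(voc)  # {'<s>': 0, 'a': 1, 'b': 2, '你好': 3, '模型': 4}
    print(emb[3:])  # [[1.0, 2.0], [1.0, 2.0]]
```

In a real model the output projection (LM head) has to be resized the same way, unless its weights are tied to the input embeddings.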

Su Yang led participants through a series of steps: deploying the environment image, loading the model, loading the dataset, setting QLoRA parameters, configuring SFT parameters, and using the transformers library. Through fine-tuning, each participant successfully ran their own private large model, which helped them absorb the event's content.
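
QLoRA fine-tuning keeps the base model's weights frozen (stored in 4-bit precision) and trains only small low-rank adapter matrices, which is why it suits low-cost setups. The sketch below leaves out the 4-bit storage and shows only the LoRA part in plain Python. It computes a parameter count for one 4096 x 4096 projection (4096 is Llama 2 7B's hidden size) and runs a toy forward pass computing y = Wx + (alpha / r) * B(Ax). The rank `r=8`, `alpha=16`, and the class name `LoRALinear` are illustrative assumptions, not the settings used in the hands-on session.

```python
import random


def lora_param_counts(d_in, d_out, r):
    """Trainable parameters: full fine-tuning of W vs LoRA factors A (r x d_in) and B (d_out x r)."""
    full = d_in * d_out
    lora = r * d_in + d_out * r
    return full, lora


def matvec(m, x):
    """Multiply matrix m (list of rows) by vector x."""
    return [sum(a * b for a, b in zip(row, x)) for row in m]


class LoRALinear:
    """y = W x + (alpha / r) * B (A x); W stays frozen, only A and B would be trained."""

    def __init__(self, w, r, alpha, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(w), len(w[0])
        self.w = w
        self.scaling = alpha / r
        # A starts small and random, B starts at zero, so the adapter is a no-op at step 0.
        self.a = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(r)]
        self.b = [[0.0] * r for _ in range(d_out)]

    def forward(self, x):
        base = matvec(self.w, x)
        delta = matvec(self.b, matvec(self.a, x))
        return [y + self.scaling * d for y, d in zip(base, delta)]


if __name__ == "__main__":
    full, lora = lora_param_counts(4096, 4096, r=8)
    print(full, lora, f"{lora / full:.2%}")  # 16777216 65536 0.39%
    layer = LoRALinear([[1.0, 2.0], [3.0, 4.0]], r=1, alpha=16)
    print(layer.forward([1.0, 1.0]))  # [3.0, 7.0]
```

At rank 8 the adapter trains 65,536 parameters instead of 16,777,216 for that one matrix (about 0.39%). Because `B` starts at zero, the adapted layer initially reproduces the frozen base output exactly, so fine-tuning starts from the original model's behavior.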

Going forward, we will keep tracking the latest industry developments and developer needs, and continue to hold high-quality large model technical events that help developers quickly improve their engineering practice and their ability to build innovative applications, so they are ready for the era of large models. Stay tuned for our upcoming AI technology forum events.
