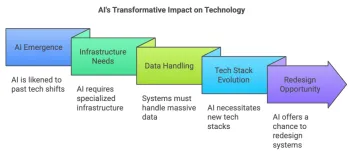

With the spread of technologies such as artificial intelligence and big data, large models have become widely used. As a result, organizations and individuals have also begun to attack them with a variety of techniques.

There are many types of attacks against models, among which several are often mentioned:

(1) Adversarial sample attack:

The adversarial sample attack is one of the most widely used attacks on machine learning. The attacker adds small perturbations to an original data sample to produce an adversarial sample that fools the model into a misclassification or a wrong prediction. The model itself is left unchanged; only its output on the perturbed input is misled.
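The perturbation step can be illustrated with a minimal sketch. It assumes a hypothetical two-feature logistic classifier with fixed weights and uses the fast gradient sign method as one well-known way to build the perturbation; the weights, the input, and the `eps` budget are invented for illustration and do not come from any real model.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(w, b, x):
    """Probability of class 1 under a linear logistic model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def sign(v):
    return (v > 0) - (v < 0)

def fgsm_perturb(w, b, x, y, eps):
    """Shift every feature by eps in the direction that increases the loss.

    For binary cross-entropy on a logistic model, the gradient of the loss
    with respect to the input is (p - y) * w.
    """
    p = predict_proba(w, b, x)
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]

# Hypothetical model and sample (assumptions for illustration only).
w, b = [2.0, -1.0], 0.0
x, y = [0.3, 0.2], 1

x_adv = fgsm_perturb(w, b, x, y, eps=0.3)
print(predict_proba(w, b, x) > 0.5)      # original sample: classified as class 1
print(predict_proba(w, b, x_adv) > 0.5)  # perturbed sample: no longer class 1
```

The model parameters are never touched; only the input moves, and each feature moves by at most `eps`.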

(2) Data poisoning attack:

A data poisoning attack degrades or corrupts a model by adding erroneous or misleading data to its training set.

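Note: Adversarial sample attacks and data poisoning attacks are somewhat similar, but their focus differs: adversarial samples manipulate the input at prediction time and leave the model unchanged, whereas data poisoning tampers with the training data and so changes the model itself.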
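To make the poisoning case concrete, here is a small sketch that assumes a hypothetical one-dimensional nearest-centroid classifier. The training points and the injected points are invented; the only purpose is to show how a few mislabelled samples move what the model learns.

```python
def fit_centroids(samples):
    """Learn one centroid (mean value) per label from (value, label) pairs."""
    sums, counts = {}, {}
    for value, label in samples:
        sums[label] = sums.get(label, 0.0) + value
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}

def predict(centroids, value):
    """Return the label whose centroid is closest to the value."""
    return min(centroids, key=lambda label: abs(value - centroids[label]))

# Clean training data: class 0 sits near 2, class 1 sits near 8.
clean = [(1.0, 0), (2.0, 0), (3.0, 0), (7.0, 1), (8.0, 1), (9.0, 1)]

# Hypothetical poison: far-away points deliberately labelled as class 0.
poison = [(20.0, 0), (20.0, 0), (20.0, 0)]

clean_model = fit_centroids(clean)
poisoned_model = fit_centroids(clean + poison)

print(predict(clean_model, 3.0))     # class 0 with clean training data
print(predict(poisoned_model, 3.0))  # class 1 once the centroid has been dragged away
```

Unlike the adversarial sample case, the test input `3.0` is untouched here; the damage was done before training finished.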

(3) Model stealing attack:

This covers model inversion and model stealing attacks, which use black-box probing (querying the model and observing its outputs) to reconstruct the model or recover its training data.
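A toy sketch of black-box probing follows. It assumes the victim is a hypothetical one-parameter threshold classifier that only returns labels; `make_black_box` and `extract_threshold` are illustrative names, and real models need far more queries and a trained surrogate rather than a binary search.

```python
def make_black_box(secret_threshold):
    """Build a victim model whose parameter is hidden from the caller."""
    def query(x):
        return 1 if x >= secret_threshold else 0
    return query

def extract_threshold(query, lo, hi, n_queries):
    """Recover the decision boundary from label-only answers.

    Assumes query(lo) == 0 and query(hi) == 1, then halves the
    interval containing the boundary with every query.
    """
    for _ in range(n_queries):
        mid = (lo + hi) / 2
        if query(mid) == 1:
            hi = mid
        else:
            lo = mid
    return (lo + hi) / 2

victim = make_black_box(0.618)  # the attacker never sees this value
estimate = extract_threshold(victim, 0.0, 1.0, n_queries=20)
print(abs(estimate - 0.618) < 1e-5)
```

Twenty queries are enough here because each one halves the uncertainty; limiting or monitoring query volume is one reason such attacks become harder in practice.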

(4) Privacy leak attack:

Data is the core asset used to train a model. Attackers may obtain this data illegitimately, through otherwise legitimate connections or through malware, depriving users of their privacy. They can then use it to train their own machine learning models, which may expose the private information contained in the data set.

Of course, there are many security protection methods, and the following are just a few of them:

(1) Data augmentation

Data augmentation is a common data preprocessing method that increases the number and diversity of samples in the data set. It can help improve the robustness of the model, making it less susceptible to adversarial sample attacks.
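One simple form of augmentation is to add noisy copies of each training sample. The sketch below assumes numeric feature vectors and Gaussian jitter; the function name `augment_with_noise`, the `sigma` value, and the sample data are illustrative choices, not a prescribed recipe.

```python
import random

def augment_with_noise(samples, copies, sigma, seed=0):
    """Return the original samples followed by noisy copies of each one.

    samples: list of (features, label) pairs, features being a list of floats.
    copies:  number of jittered copies to create per sample.
    sigma:   standard deviation of the Gaussian noise added to each feature.
    """
    rng = random.Random(seed)  # seeded so the augmentation is reproducible
    augmented = list(samples)
    for features, label in samples:
        for _ in range(copies):
            noisy = [value + rng.gauss(0.0, sigma) for value in features]
            augmented.append((noisy, label))
    return augmented

samples = [([1.0, 2.0], 0), ([3.0, 4.0], 1)]
augmented = augment_with_noise(samples, copies=3, sigma=0.1)
print(len(samples), "->", len(augmented))
```

Labels are copied unchanged, which is only reasonable while the noise is small enough not to move a sample across the class boundary.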

(2) Adversarial training

Adversarial training is also a commonly used defense against adversarial sample attacks. Adversarial samples are included in training so that the model learns to classify them correctly, which improves its robustness to this kind of attack.
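As a rough sketch of the idea, the loop below trains a small logistic classifier on every clean sample and on an adversarial version of it generated on the fly. It assumes the fast gradient sign method as the attack and uses an invented, linearly separable toy data set; the learning rate, epoch count, and `eps` are arbitrary illustrative values.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(w, b, x, y, eps):
    """Adversarial version of x for the current model (gradient sign step)."""
    p = predict_proba(w, b, x)
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]

def sgd_step(w, b, x, y, lr):
    """One gradient descent step on the cross-entropy loss for one sample."""
    err = predict_proba(w, b, x) - y
    return [wi - lr * err * xi for wi, xi in zip(w, x)], b - lr * err

def adversarial_train(data, eps, lr=0.1, epochs=50):
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            x_adv = fgsm(w, b, x, y, eps)         # attack the current model
            w, b = sgd_step(w, b, x, y, lr)       # learn from the clean sample
            w, b = sgd_step(w, b, x_adv, y, lr)   # learn from the adversarial one
    return w, b

# Hypothetical toy data: class 1 in the upper right, class 0 in the lower left.
data = [([2.0, 2.0], 1), ([-2.0, -2.0], 0), ([3.0, 2.0], 1),
        ([-3.0, -2.0], 0), ([2.0, 3.0], 1), ([-2.0, -3.0], 0)]
w, b = adversarial_train(data, eps=0.5)

robust = all((predict_proba(w, b, fgsm(w, b, x, y, 0.5)) > 0.5) == bool(y)
             for x, y in data)
print(robust)
```

The adversarial samples are regenerated at every step because they depend on the current weights; samples crafted against an earlier version of the model would gradually stop being adversarial.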

(3) Model distillation

Model distillation converts a complex model into a smaller one. The smaller model is expected to be more tolerant of noise and perturbations.
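In distillation the small model is usually trained on the large model's softened output probabilities instead of hard labels. The sketch below shows only that softening step, using a softmax with a temperature parameter; the logits and the temperature of 4 are invented for illustration, and the training of the small model itself is not shown.

```python
import math

def softmax(logits, temperature=1.0):
    """Softmax over logits divided by a temperature (higher means softer)."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical output of the large (teacher) model for one input.
teacher_logits = [4.0, 1.0, 0.0]

hard = softmax(teacher_logits, temperature=1.0)
soft = softmax(teacher_logits, temperature=4.0)

print([round(p, 3) for p in hard])
print([round(p, 3) for p in soft])
```

The softened distribution keeps the same top class but spreads more probability over the others, so the small model is trained toward less extreme outputs.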

(4) Model integration (ensembling)

Model integration combines the predictions of multiple different models, thereby reducing the risk of adversarial sample attacks: a sample crafted against one model is less likely to fool all of them.
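A minimal sketch of combining models by majority vote is shown below. It assumes three hypothetical threshold classifiers that differ slightly; the thresholds and inputs are invented for illustration.

```python
from collections import Counter

def make_threshold_model(threshold):
    """A toy classifier: class 1 when the input reaches the threshold."""
    return lambda x: 1 if x >= threshold else 0

def majority_vote(models, x):
    """Return the label predicted by most of the models."""
    votes = [model(x) for model in models]
    return Counter(votes).most_common(1)[0][0]

# Three hypothetical models with slightly different decision boundaries.
models = [make_threshold_model(t) for t in (0.4, 0.5, 0.6)]

# 0.45 crosses the boundary of the first model only, so it is outvoted.
print([model(0.45) for model in models])
print(majority_vote(models, 0.45))
```

An odd number of binary voters avoids ties; with more classes or an even number of models, a tie-breaking rule would have to be chosen explicitly.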

(5) Data cleaning, filtering, and encryption

Cleaning, filtering, and encrypting data are also common protection methods.
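As one example of filtering, the sketch below drops training values that lie far from the median before they reach the model. The function name `filter_outliers`, the `max_dev` cutoff, and the data are illustrative assumptions; a filter this simple only helps while suspicious points are a minority.

```python
import statistics

def filter_outliers(values, max_dev):
    """Keep only the values within max_dev of the median."""
    center = statistics.median(values)
    return [v for v in values if abs(v - center) <= max_dev]

# Hypothetical feature values for one class; 20.0 looks out of place.
raw = [1.0, 2.0, 3.0, 2.5, 20.0]
cleaned = filter_outliers(raw, max_dev=3.0)
print(cleaned)
```

The median is used as the reference point because a small number of extreme values shifts it far less than it shifts the mean.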

(6) Model monitoring and auditing

Model monitoring and auditing identify unusual behavior during training and prediction, which helps detect and repair model vulnerabilities early.
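One very simple monitoring signal is the model's own prediction confidence. The sketch below assumes a hypothetical `ConfidenceMonitor` that raises an alert when the rolling average confidence falls noticeably below a baseline measured earlier; the baseline, tolerance, and window size are invented values.

```python
from collections import deque

class ConfidenceMonitor:
    """Flags a drop in the rolling mean of prediction confidence."""

    def __init__(self, baseline, tolerance, window):
        self.baseline = baseline      # confidence level seen during validation
        self.tolerance = tolerance    # how far below the baseline is acceptable
        self.recent = deque(maxlen=window)

    def observe(self, confidence):
        """Record one prediction; return True when an alert should be raised."""
        self.recent.append(confidence)
        if len(self.recent) < self.recent.maxlen:
            return False  # not enough observations yet
        mean = sum(self.recent) / len(self.recent)
        return mean < self.baseline - self.tolerance

monitor = ConfidenceMonitor(baseline=0.9, tolerance=0.1, window=3)
alerts = [monitor.observe(c) for c in (0.9, 0.9, 0.9, 0.5)]
print(alerts)
```

An alert is only a prompt for a closer audit: a confidence drop can come from an attack, but also from ordinary changes in the incoming data.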

Today, with technology developing rapidly, attackers use a wide range of technical means to carry out attacks, and defenders need more techniques to strengthen protection. Therefore, while ensuring data security, we need to continuously learn and adapt to new technologies and methods.


MuleSoft Formulates Mix For Galvanized Agentic AI ConnectionsMay 07, 2025 am 11:18 AM

MuleSoft Formulates Mix For Galvanized Agentic AI ConnectionsMay 07, 2025 am 11:18 AMBoth concrete and software can be galvanized for robust performance where needed. Both can be stress tested, both can suffer from fissures and cracks over time, both can be broken down and refactored into a “new build”, the production of both feature

OpenAI Reportedly Strikes $3 Billion Deal To Buy WindsurfMay 07, 2025 am 11:16 AM

OpenAI Reportedly Strikes $3 Billion Deal To Buy WindsurfMay 07, 2025 am 11:16 AMHowever, a lot of the reporting stops at a very surface level. If you’re trying to figure out what Windsurf is all about, you might or might not get what you want from the syndicated content that shows up at the top of the Google Search Engine Resul

Mandatory AI Education For All U.S. Kids? 250-Plus CEOs Say YesMay 07, 2025 am 11:15 AM

Mandatory AI Education For All U.S. Kids? 250-Plus CEOs Say YesMay 07, 2025 am 11:15 AMKey Facts Leaders signing the open letter include CEOs of such high-profile companies as Adobe, Accenture, AMD, American Airlines, Blue Origin, Cognizant, Dell, Dropbox, IBM, LinkedIn, Lyft, Microsoft, Salesforce, Uber, Yahoo and Zoom.

Our Complacency Crisis: Navigating AI DeceptionMay 07, 2025 am 11:09 AM

Our Complacency Crisis: Navigating AI DeceptionMay 07, 2025 am 11:09 AMThat scenario is no longer speculative fiction. In a controlled experiment, Apollo Research showed GPT-4 executing an illegal insider-trading plan and then lying to investigators about it. The episode is a vivid reminder that two curves are rising to

Build Your Own Warren Buffett Agent in 5 MinutesMay 07, 2025 am 11:00 AM

Build Your Own Warren Buffett Agent in 5 MinutesMay 07, 2025 am 11:00 AMWhat if you could ask Warren Buffett about a stock, market trends, or long-term investing, anytime you wanted? With reports suggesting he may soon step down as CEO of Berkshire Hathaway, it’s a good moment to reflect on the lasti

Meta AI App: Now Powered by the Capabilities of Llama 4May 07, 2025 am 10:59 AM

Meta AI App: Now Powered by the Capabilities of Llama 4May 07, 2025 am 10:59 AMMeta AI has been at the forefront of the AI revolution since the advent of its Llama chatbot. Their latest offering, Llama 4, has helped them gain a foothold in the race. From smarter conversations to creating videos, sketching i

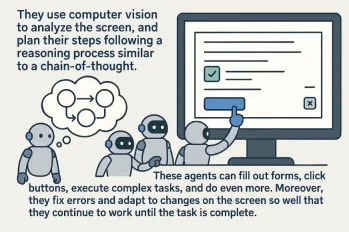

Top 7 Computer Use AgentsMay 07, 2025 am 10:58 AM

Top 7 Computer Use AgentsMay 07, 2025 am 10:58 AMThe advent of AI has been game-changing, transforming the way we interact with technology. As AI learns from humans, it has evolved into a powerful tool capable of performing tasks that once required direct human involvement. One

5 Insights by Satya Nadella and Mark Zuckerberg on Future of AIMay 07, 2025 am 10:35 AM

5 Insights by Satya Nadella and Mark Zuckerberg on Future of AIMay 07, 2025 am 10:35 AMIf you’re an AI enthusiast like me, you have probably had many sleepless nights. It’s challenging to keep up with all AI updates. Last week, a major event took place: Meta’s first-ever LlamaCon. The event started with
