More granular background and foreground control, faster editing: BEVControl's two-stage approach

This article introduces a method for accurately generating multi-view street view images from a BEV sketch layout.

In autonomous driving, image synthesis is widely used to improve the performance of downstream perception tasks.

In computer vision, synthesizing images to improve the performance of perception models is a long-standing research problem. In vision-centric autonomous driving systems that use multi-view cameras, the problem becomes more prominent because some long-tail scenes can never be collected.

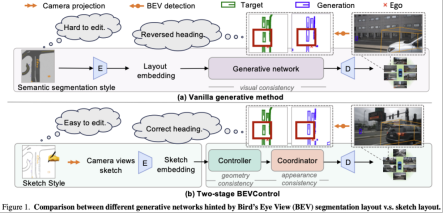

As shown in Figure 1(a), existing generation methods feed a semantic-segmentation-style BEV structure into the generation network and output plausible multi-view images. Evaluated solely on scene-level metrics, these methods appear capable of synthesizing photorealistic street view images. Zooming in, however, we found that they fail to produce accurate object-level detail. The figure demonstrates a common mistake of state-of-the-art generation algorithms: the generated vehicle faces the opposite direction from the target 3D bounding box. Furthermore, editing semantic-segmentation-style BEV structures is difficult and labor-intensive. We therefore propose a two-stage method called BEVControl that provides finer control over background and foreground geometry, as shown in Figure 1(b). BEVControl supports sketch-style BEV structure input, which allows quick and easy editing. In addition, BEVControl decomposes visual consistency into two sub-goals: geometric consistency between the street views and the bird's-eye view, handled by the Controller, and appearance consistency across street views, handled by the Coordinator.

##Paper link:

BEVControl is a generative network with a UNet structure, built from a series of modules. Each module has two elements: a Controller and a Coordinator.

- Input: a BEV sketch, multi-view noise images, and a text prompt for easy editing;

- Output: the generated multi-view images.

- Method details

Camera projection of the BEV sketch into camera conditions: the input is a BEV sketch, and the output is the multi-view foreground and background conditions.
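To make the projection step concrete, here is a minimal sketch of how one box from a BEV sketch could be projected into a single camera view. It is not the paper's implementation: it assumes a pinhole camera, an ego frame with x forward, y left and z up, and a box lying on the ground plane. The names `bev_box_corners`, `project_to_camera`, `EGO_TO_CAM` and `K`, and all numeric values, are hypothetical.

```python
import numpy as np


def bev_box_corners(cx, cy, length, width, yaw):
    """Return the 4 ground-plane corners (x, y) of a BEV box in the ego frame."""
    # Corner offsets in the box's own frame (x forward, y left).
    local = np.array([
        [length / 2, width / 2],
        [length / 2, -width / 2],
        [-length / 2, -width / 2],
        [-length / 2, width / 2],
    ])
    c, s = np.cos(yaw), np.sin(yaw)
    rot = np.array([[c, -s], [s, c]])
    # Rotate by yaw, then translate to the box centre.
    return local @ rot.T + np.array([cx, cy])


def project_to_camera(points_ego, ego_to_cam, intrinsics):
    """Project Nx3 ego-frame points to pixels.

    Returns (uv, in_front): pixel coordinates and a mask that is True for
    points with positive depth. uv is only meaningful where the mask is True.
    """
    homo = np.hstack([points_ego, np.ones((len(points_ego), 1))])
    cam = (ego_to_cam @ homo.T).T[:, :3]
    in_front = cam[:, 2] > 1e-6
    pix = (intrinsics @ cam.T).T
    depth = np.where(in_front, pix[:, 2], 1.0)  # avoid dividing by depth <= 0
    return pix[:, :2] / depth[:, None], in_front


# Hypothetical front camera: 1.5 m above the ego origin, looking along +x.
# Camera axes: x right, y down, z forward.
EGO_TO_CAM = np.array([
    [0.0, -1.0, 0.0, 0.0],
    [0.0, 0.0, -1.0, 1.5],
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
])
# Hypothetical intrinsics for a 1600x900 image.
K = np.array([
    [1000.0, 0.0, 800.0],
    [0.0, 1000.0, 450.0],
    [0.0, 0.0, 1.0],
])

# A 4 m x 2 m vehicle, 10 m ahead of the ego car, heading straight on.
corners_xy = bev_box_corners(10.0, 0.0, 4.0, 2.0, 0.0)
corners_xyz = np.hstack([corners_xy, np.zeros((4, 1))])  # on the ground
corner_pixels, visible = project_to_camera(corners_xyz, EGO_TO_CAM, K)
```

Repeating this for every camera and every sketch element would give per-view foreground conditions; background elements such as road outlines could be handled the same way by projecting sampled points along each line.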

Controller: Receives the foreground and background information of the camera-view sketch through self-attention, and outputs street view features that are geometrically consistent with the BEV sketch.

- Coordinator: Utilizes a novel cross-view and cross-element attention mechanism to achieve cross-view contextual interaction and output street view features with appearance consistency.
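The two elements can be illustrated with a simplified attention sketch. This is an assumption-laden toy, not BEVControl's actual layers: it omits the learned query/key/value projections, the Controller step simply runs self-attention over image tokens concatenated with condition tokens, and the Coordinator step lets each view attend to its left and right neighbours in a ring of cameras. The function names `controller_step` and `coordinator_step` are hypothetical, and the article's cross-element attention is not modeled.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attend(q, k, v):
    """Scaled dot-product attention without learned projections."""
    scores = q @ k.T / np.sqrt(q.shape[-1])
    return softmax(scores, axis=-1) @ v


def controller_step(image_tokens, cond_tokens):
    """Self-attention over image tokens joined with sketch-condition tokens.

    image_tokens: (N, D) features of one camera view.
    cond_tokens:  (M, D) embedded foreground/background conditions.
    Returns updated image tokens of shape (N, D).
    """
    joint = np.concatenate([image_tokens, cond_tokens], axis=0)
    updated = joint + attend(joint, joint, joint)  # residual connection
    return updated[: len(image_tokens)]  # keep only the image tokens


def coordinator_step(view_feats):
    """Cross-view attention: each view queries its two neighbouring views.

    view_feats: (V, N, D) tokens for V surround-view cameras.
    Assumes the cameras form a ring, so view 0 neighbours view V-1.
    """
    num_views = view_feats.shape[0]
    out = np.empty_like(view_feats)
    for v in range(num_views):
        left = view_feats[(v - 1) % num_views]
        right = view_feats[(v + 1) % num_views]
        context = np.concatenate([left, right], axis=0)  # (2N, D)
        out[v] = view_feats[v] + attend(view_feats[v], context, context)
    return out


rng = np.random.default_rng(0)
feats = rng.normal(size=(6, 4, 8))   # 6 cameras, 4 tokens each, 8 channels
conds = rng.normal(size=(6, 3, 8))   # 3 condition tokens per camera
controlled = np.stack([controller_step(f, c) for f, c in zip(feats, conds)])
coordinated = coordinator_step(controlled)
```

The split mirrors the two sub-goals described earlier: the first step ties each view to its own sketch condition, and the second step shares context between views so overlapping regions can agree in appearance.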

- Proposed evaluation metrics

Recent street view image generation work evaluates generation quality only with scene-level metrics (such as FID and road mIoU).

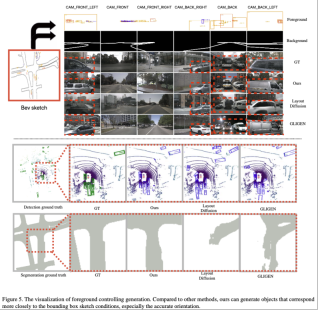

- We found that these metrics alone cannot reveal the true generative ability of a generation network, as the accompanying figure shows. In the reported qualitative and quantitative results, two groups of generated street view images have similar FID scores but very different degrees of fine-grained control over the foreground and background.

- Therefore, we propose a set of evaluation metrics for finely measuring the control capabilities of the generation network.
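The article does not list the proposed metrics, so the following is only a hypothetical example of what an object-level check could look like, motivated by the flipped-vehicle failure described earlier. It compares the heading of each target box with the heading a detector recovers from the generated image. The function names and the sample values are assumptions for illustration, not results or definitions from the paper.

```python
import math


def yaw_error(target_yaw, predicted_yaw):
    """Smallest absolute angle between two headings, in radians (0 to pi)."""
    diff = (predicted_yaw - target_yaw + math.pi) % (2 * math.pi) - math.pi
    return abs(diff)


def mean_orientation_error(target_yaws, predicted_yaws):
    """Average heading error over matched target/predicted box pairs."""
    if len(target_yaws) != len(predicted_yaws) or not target_yaws:
        raise ValueError("need two non-empty lists of equal length")
    errors = [yaw_error(t, p) for t, p in zip(target_yaws, predicted_yaws)]
    return sum(errors) / len(errors)


# Made-up headings for three target boxes.
targets = [0.0, 1.0, -2.0]
# One generator reproduces them closely; another flips the first vehicle.
faithful = [0.05, 1.0, -2.05]
flipped = [math.pi, 1.0, -2.05]

faithful_error = mean_orientation_error(targets, faithful)
flipped_error = mean_orientation_error(targets, flipped)
```

A scene-level score averages over the whole image and can stay similar in both cases, while a per-object measure like this one separates them clearly.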

Quantitative results

Comparison of BEVControl and state-of-the-art methods on the proposed evaluation metrics.

- Applying BEVControl for data augmentation to improve object detection.

Qualitative results

- Comparison of BEVControl and state-of-the-art methods on the NuScenes validation set.

##Demo effect
