How to deal with data sampling issues in C++ big data development?

In big data development, we often need to sample from massive data sets. Processing every record directly can take too long and consume a large amount of computing resources. Sampling a well-chosen subset is therefore a common approach: it reduces computing and storage costs while keeping results acceptably accurate.

The following introduces how to handle data sampling in big data development using C++, with corresponding code examples.

- Random sampling method

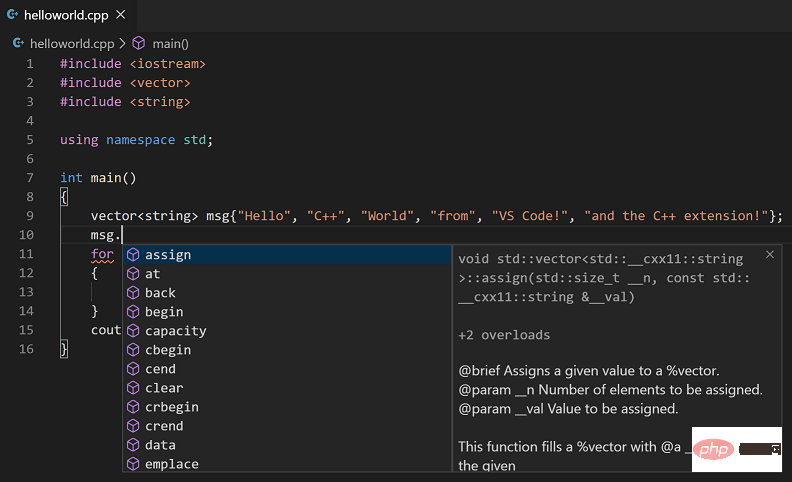

Random sampling is a simple and effective sampling method. The idea is to select a subset of the data set at random to serve as the sample. In C++, you can use the rand() function to generate random numbers and keep each element with a probability equal to the chosen sampling ratio.
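As a variation on the idea above, the following is a minimal sketch that uses the C++11 `<random>` library instead of rand(). The function name `bernoulliSample` and the `seed` parameter are assumptions made for illustration; they are not part of the original example. Because each element is kept independently, the sample size is not fixed: for n elements it is about n × sampleRate on average.

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

// Keep each element independently with probability sampleRate.
// The seed parameter is a hypothetical addition that makes runs reproducible.
std::vector<int> bernoulliSample(const std::vector<int>& data,
                                 double sampleRate,
                                 unsigned seed) {
    assert(sampleRate >= 0.0 && sampleRate <= 1.0);
    std::mt19937 gen(seed);                        // Mersenne Twister engine
    std::bernoulli_distribution keep(sampleRate);  // true with probability sampleRate

    std::vector<int> sampled;
    // Reserve roughly the expected number of sampled elements.
    sampled.reserve(static_cast<std::size_t>(data.size() * sampleRate));
    for (int value : data) {
        if (keep(gen)) {
            sampled.push_back(value);
        }
    }
    return sampled;
}
```

Calling `bernoulliSample(data, 0.1, 42u)` on 100,000 elements should return roughly 10,000 of them, and the same seed yields the same sample on a given standard library implementation.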

Sample code:

    #include <cstddef>
    #include <cstdlib>
    #include <ctime>
    #include <iostream>
    #include <vector>

    // Keep each element with probability sampleRate (expected to lie in [0, 1]).
    std::vector<int> randomSampling(const std::vector<int>& data, double sampleRate) {
        std::vector<int> sampledData;
        for (std::size_t i = 0; i < data.size(); ++i) {
            // Dividing by RAND_MAX + 1.0 puts the draw in [0, 1), so a rate
            // of 0 keeps nothing and a rate of 1 keeps everything.
            if (std::rand() / (RAND_MAX + 1.0) < sampleRate) {
                sampledData.push_back(data[i]);
            }
        }
        return sampledData;
    }

    int main() {
        // Seed the random number generator once, at program start
        std::srand(static_cast<unsigned>(std::time(nullptr)));

        std::vector<int> data = {1, 2, 3, 4, 5, 6, 7, 8, 9, 10};
        double sampleRate = 0.5;
        std::vector<int> sampledData = randomSampling(data, sampleRate);

        std::cout << "Sampled Data: ";
        for (std::size_t i = 0; i < sampledData.size(); ++i) {
            std::cout << sampledData[i] << " ";
        }
        std::cout << '\n';
        return 0;
    }

- Systematic sampling method

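Systematic sampling selects elements from an ordered data set at a fixed interval: with an interval of k, every k-th element is taken. Unlike stratified sampling, it does not first divide the data into groups. In C++, this method can be implemented with a loop that advances by a fixed step (a modulo test on the index works equally well).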
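A common refinement of interval-based selection is to begin at a random offset within the first interval, so that the first element is not always included. The following is a minimal sketch of that idea; the names `systematicSample` and `randomStart`, and the `seed` parameter, are assumptions made for illustration only.

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

// Take every interval-th element, beginning at index start (start < interval).
std::vector<int> systematicSample(const std::vector<int>& data,
                                  std::size_t interval,
                                  std::size_t start) {
    assert(interval > 0 && start < interval);
    std::vector<int> sampled;
    for (std::size_t i = start; i < data.size(); i += interval) {
        sampled.push_back(data[i]);
    }
    return sampled;
}

// Pick a starting offset uniformly from [0, interval).
// The seed parameter is a hypothetical addition that makes runs reproducible.
std::size_t randomStart(std::size_t interval, unsigned seed) {
    assert(interval > 0);
    std::mt19937 gen(seed);
    std::uniform_int_distribution<std::size_t> offset(0, interval - 1);
    return offset(gen);
}
```

For the data 1 through 10 with an interval of 3 and a start of 1, `systematicSample` returns the elements at indices 1, 4, and 7, that is 2, 5, and 8. In practice the start would come from `randomStart(interval, seed)`.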

Sample code:

    #include <cstddef>
    #include <iostream>
    #include <vector>

    // Keep every k-th element, where k is about 1 / sampleRate
    // (sampleRate is expected to lie in (0, 1]).
    std::vector<int> systematicSampling(const std::vector<int>& data, double sampleRate) {
        std::vector<int> sampledData;
        if (sampleRate <= 0.0) {
            return sampledData; // nothing to sample; also avoids dividing by zero
        }
        // Clamp to at least 1 so the loop always advances, even if sampleRate > 1
        std::size_t interval = static_cast<std::size_t>(1.0 / sampleRate);
        if (interval == 0) {
            interval = 1;
        }
        for (std::size_t i = 0; i < data.size(); i += interval) {
            sampledData.push_back(data[i]);
        }
        return sampledData;
    }

    int main() {
        std::vector<int> data = {1, 2, 3, 4, 5, 6, 7, 8, 9, 10};
        double sampleRate = 0.5;
        std::vector<int> sampledData = systematicSampling(data, sampleRate);

        std::cout << "Sampled Data: ";
        for (std::size_t i = 0; i < sampledData.size(); ++i) {
            std::cout << sampledData[i] << " ";
        }
        std::cout << '\n';
        return 0;
    }

In summary, random sampling and systematic sampling are two common ways to handle data sampling in C++ big data development. Developers can choose the method that fits their specific needs to balance program efficiency and accuracy. With reasonable data sampling, the computing and storage bottlenecks in big data development can be eased and data processing becomes more efficient.
