How to optimize the data loading speed in C big data development?

Introduction:

In modern big data applications, data loading is a crucial link. The efficiency of data loading directly affects the performance and response time of the entire program. However, for loading large-scale data sets, performance optimization becomes increasingly important. In this article, we'll explore how to use C to optimize data loading speed in big data development and provide you with some practical code examples.

- Using buffers

Using buffers is a common optimization method when facing the loading of large-scale data sets. Buffers can reduce the number of disk accesses, thereby improving the efficiency of data loading. The following is a sample code for loading data using a buffer:

#include <iostream>

#include <fstream>

#include <vector>

int main() {

std::ifstream input("data.txt", std::ios::binary);

// 使用缓冲区提高数据加载效率

const int buffer_size = 8192; // 8KB

std::vector<char> buffer(buffer_size);

while (!input.eof()) {

input.read(buffer.data(), buffer_size);

// 处理数据

}

input.close();

return 0;

}In the above example, we used a buffer of size 8KB to read the data. This buffer size will not occupy too much memory, but also can reduce the number of disk accesses and improve the efficiency of data loading.

- Multi-threaded loading

When processing large-scale data sets, using multi-threaded loading can further improve the speed of data loading. By loading data in parallel through multiple threads, the computing power of multi-core processors can be fully utilized to speed up data loading and processing. The following is a sample code that uses multi-threading to load data:

#include <iostream>

#include <fstream>

#include <vector>

#include <thread>

void load_data(const std::string& filename, std::vector<int>& data, int start, int end) {

std::ifstream input(filename, std::ios::binary);

input.seekg(start * sizeof(int));

input.read(reinterpret_cast<char*>(&data[start]), (end - start) * sizeof(int));

input.close();

}

int main() {

const int data_size = 1000000;

std::vector<int> data(data_size);

const int num_threads = 4;

std::vector<std::thread> threads(num_threads);

const int chunk_size = data_size / num_threads;

for (int i = 0; i < num_threads; ++i) {

int start = i * chunk_size;

int end = (i == num_threads - 1) ? data_size : (i + 1) * chunk_size;

threads[i] = std::thread(load_data, "data.txt", std::ref(data), start, end);

}

for (int i = 0; i < num_threads; ++i) {

threads[i].join();

}

return 0;

}In the above example, we used 4 threads to load data in parallel. Each thread is responsible for reading a piece of data and then saving it to a shared data container. Through multi-threaded loading, we can read multiple data fragments at the same time, thus increasing the speed of data loading.

- Using memory mapped files

Memory mapped files are an effective way to load data. By mapping files into memory, direct access to file data can be achieved, thereby improving the efficiency of data loading. The following is a sample code for loading data using a memory mapped file:

#include <iostream>

#include <fstream>

#include <vector>

#include <sys/mman.h>

int main() {

int fd = open("data.txt", O_RDONLY);

off_t file_size = lseek(fd, 0, SEEK_END);

void* data = mmap(NULL, file_size, PROT_READ, MAP_SHARED, fd, 0);

close(fd);

// 处理数据

// ...

munmap(data, file_size);

return 0;

}In the above example, we used the mmap() function to map the file into memory. By accessing mapped memory, we can directly read file data, thereby increasing the speed of data loading.

Conclusion:

Optimizing data loading speed is an important and common task when facing the loading of large-scale data sets. By using technologies such as buffers, multi-threaded loading, and memory-mapped files, we can effectively improve the efficiency of data loading. In actual development, we should choose appropriate optimization strategies based on specific needs and data characteristics to give full play to the advantages of C language in big data development and improve program performance and response time.

Reference:

- C Reference: https://en.cppreference.com/

- C Concurrency in Action by Anthony Williams

The above is the detailed content of How to optimize data loading speed in C++ big data development?. For more information, please follow other related articles on the PHP Chinese website!

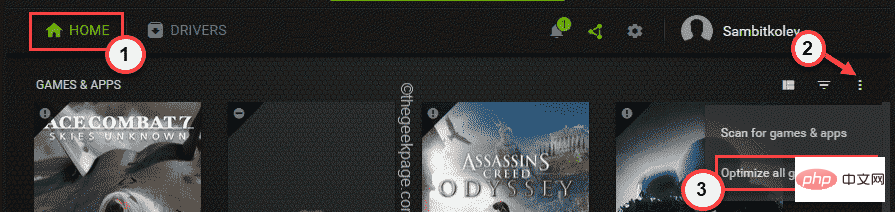

修复:Windows 11 无法优化游戏的问题Apr 30, 2023 pm 01:28 PM

修复:Windows 11 无法优化游戏的问题Apr 30, 2023 pm 01:28 PMGeforceExperience不仅为您下载最新版本的游戏驱动程序,它还提供更多!最酷的事情之一是它可以根据您的系统规格优化您安装的所有游戏,为您提供最佳的游戏体验。但是一些游戏玩家报告了一个问题,即GeForceExperience没有优化他们系统上的游戏。只需执行这些简单的步骤即可在您的系统上解决此问题。修复1–为所有游戏使用最佳设置您可以设置为所有游戏使用最佳设置。1.在您的系统上打开GeForceExperience应用程序。2.GeForceExperience面

Nginx性能优化与安全设置Jun 10, 2023 am 09:18 AM

Nginx性能优化与安全设置Jun 10, 2023 am 09:18 AMNginx是一种常用的Web服务器,代理服务器和负载均衡器,性能优越,安全可靠,可以用于高负载的Web应用程序。在本文中,我们将探讨Nginx的性能优化和安全设置。一、性能优化调整worker_processes参数worker_processes是Nginx的一个重要参数。它指定了可以使用的worker进程数。这个值需要根据服务器硬件、网络带宽、负载类型等

Windows 11 Insiders 现在对在窗口模式下运行的传统游戏进行了优化Apr 25, 2023 pm 04:28 PM

Windows 11 Insiders 现在对在窗口模式下运行的传统游戏进行了优化Apr 25, 2023 pm 04:28 PM如果您在Windows机器上玩旧版游戏,您会很高兴知道Microsoft为它们计划了某些优化,特别是如果您在窗口模式下运行它们。该公司宣布,最近开发频道版本的内部人员现在可以利用这些功能。本质上,许多旧游戏使用“legacy-blt”演示模型在您的显示器上渲染帧。尽管DirectX12(DX12)已经利用了一种称为“翻转模型”的新演示模式,但Microsoft现在也正在向DX10和DX11游戏推出这一增强功能。迁移将改善延迟,还将为自动HDR和可变刷新率(VRR)等进一步增强打

如何使用缓存优化PHP和MySQLMay 11, 2023 am 08:52 AM

如何使用缓存优化PHP和MySQLMay 11, 2023 am 08:52 AM随着互联网的不断发展和应用的扩展,越来越多的网站和应用需要处理海量的数据和实现高流量的访问。在这种背景下,对于PHP和MySQL这样的常用技术,缓存优化成为了非常必要的优化手段。本文将在介绍缓存的概念及作用的基础上,从两个方面的PHP和MySQL进行缓存优化的实现,希望能够为广大开发者提供一些帮助。一、缓存的概念及作用缓存是指将计算结果或读取数据的结果缓存到

如何通过优化查询中的LIKE操作来提高MySQL性能May 11, 2023 am 08:11 AM

如何通过优化查询中的LIKE操作来提高MySQL性能May 11, 2023 am 08:11 AMMySQL是目前最流行的关系型数据库之一,但是在处理大量数据时,MySQL的性能可能会受到影响。其中,一种常见的性能瓶颈是查询中的LIKE操作。在MySQL中,LIKE操作是用来模糊匹配字符串的,它可以在查询数据表时用来查找包含指定字符或者模式的数据记录。但是,在大型数据表中,如果使用LIKE操作,它会对数据库的性能造成影响。为了解决这个问题,我们可

Go语言中的优化和重构的方法Jun 02, 2023 am 10:40 AM

Go语言中的优化和重构的方法Jun 02, 2023 am 10:40 AMGo语言是一门相对年轻的编程语言,虽然从语言本身的设计来看,其已经考虑到了很多优化点,使得其具备高效的性能和良好的可维护性,但是这并不代表着我们在开发Go应用时不需要优化和重构,特别是在长期的代码积累过程中,原来的代码架构可能已经开始失去优势,需要通过优化和重构来提高系统的性能和可维护性。本文将分享一些在Go语言中优化和重构的方法,希望能够对Go开发者有所帮

Snapchat优化指甲追踪效果,与OPI合推AR指甲油滤镜May 30, 2023 am 09:19 AM

Snapchat优化指甲追踪效果,与OPI合推AR指甲油滤镜May 30, 2023 am 09:19 AM5月26日消息,SnapchatAR试穿滤镜技术升级,并与OPI品牌合作,推出指甲油AR试用滤镜。据悉,为了优化AR滤镜对手指甲的追踪定位,Snap在LensStudio中推出手部和指甲分割功能,允许开发者将AR图像叠加在指甲这种细节部分。据青亭网了解,指甲分割功能在识别到人手后,会给手部和指甲分别设置掩膜,用于渲染2D纹理。此外,还会识别用户个人指甲的底色,来模拟指甲油真实上手的效果。从演示效果来看,新的AR指甲油滤镜可以很好的模拟浅蓝磨砂质地。实际上,此前Snapchat曾推出AR指甲油试用

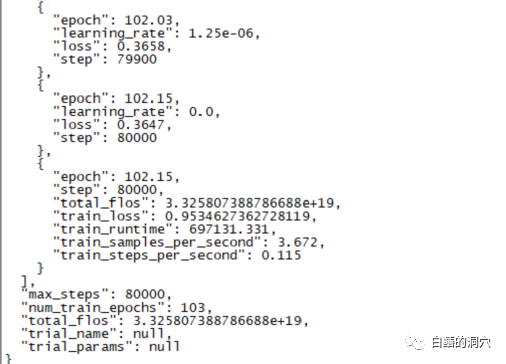

一篇学会本地知识库对LLM的性能优化Jun 12, 2023 am 09:23 AM

一篇学会本地知识库对LLM的性能优化Jun 12, 2023 am 09:23 AM昨天一个跑了220个小时的微调训练完成了,主要任务是想在CHATGLM-6B上微调出一个能够较为精确的诊断数据库错误信息的对话模型来。不过这个等了将近十天的训练最后的结果令人失望,比起我之前做的一个样本覆盖更小的训练来,差的还是挺大的。这样的结果还是有点令人失望的,这个模型基本上是没有实用价值的。看样子需要重新调整参数与训练集,再做一次训练。大语言模型的训练是一场军备竞赛,没有好的装备是玩不起来的。看样子我们也必须要升级一下实验室的装备了,否则没有几个十天可以浪费。从最近的几次失败的微调训练来看

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function

Dreamweaver CS6

Visual web development tools

SublimeText3 Chinese version

Chinese version, very easy to use

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.