Home >Backend Development >Python Tutorial >Comparison of four commonly used methods of locating elements in Python crawlers, which one do you prefer?

Comparison of four commonly used methods of locating elements in Python crawlers, which one do you prefer?

- Python当打之年forward

- 2023-08-15 14:42:311180browse

Extracting data from the requested web page, and correctly locating the desired data is the first step.

This article will compare severalcommonly used methods of locating web page elements in Python crawlers for everyone to learn

"The reference webpage is”

- Traditional

BeautifulSoup Operation- CSS selector based on

BeautifulSoup (similar toPyQuery)XPath - Regular Expression

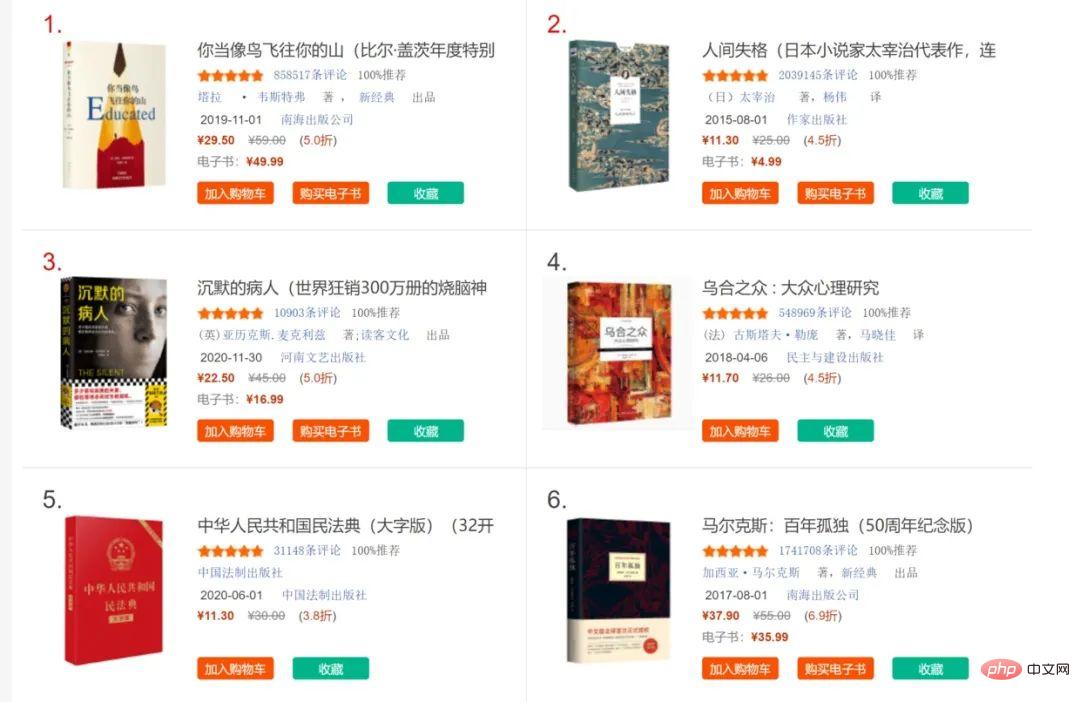

Dangdang.com best-selling book list:

http://bang.dangdang.com/books/bestsellers/01.00.00.00.00.00-24hours-0-0-1-1

We take the title of the first 20 books as an example. First make sure that the website does not have anti-crawling measures set up, and whether it can directly return the content to be parsed:

import requests url = 'http://bang.dangdang.com/books/bestsellers/01.00.00.00.00.00-24hours-0-0-1-1' response = requests.get(url).text print(response)

After careful inspection, it is found that the required data is all in the returned content, indicating that it is not required Special consideration is given to anti-crawling measures

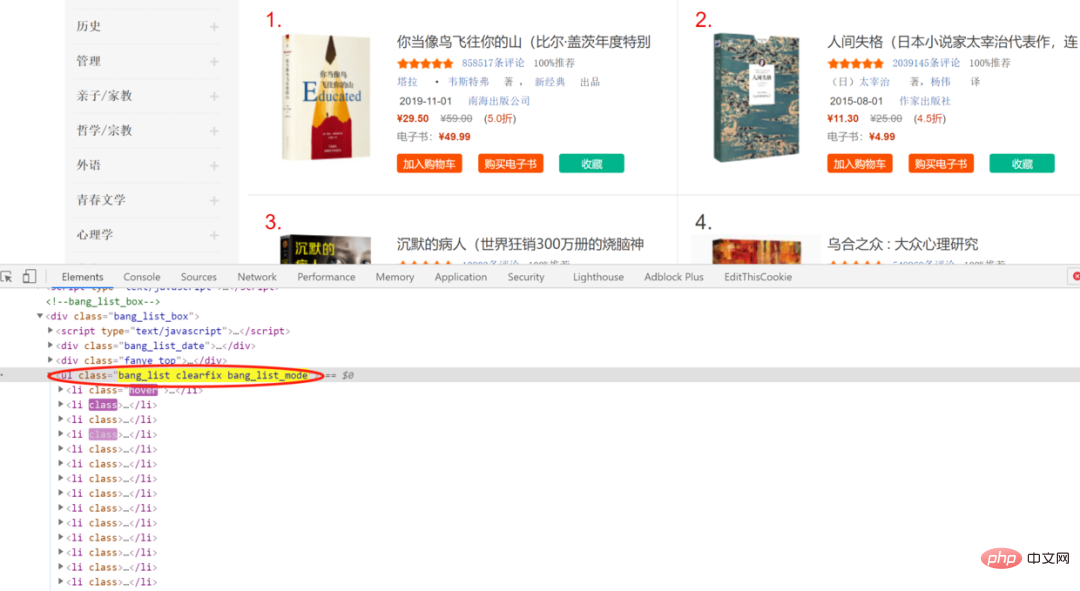

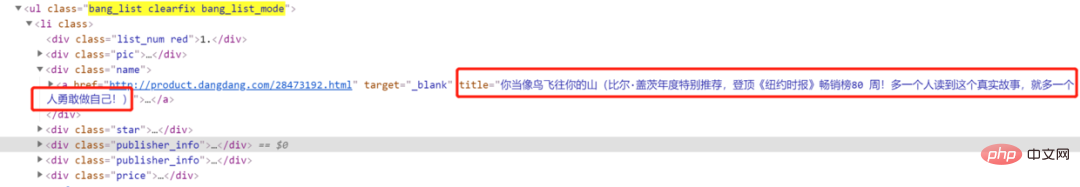

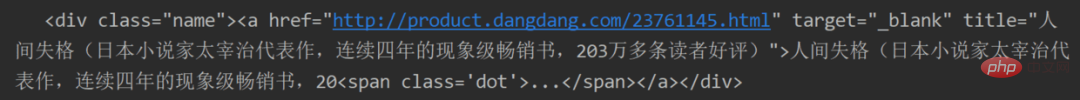

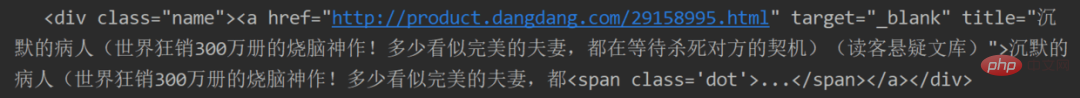

After reviewing the web page elements, it can be found that the bibliographic information is included in li, which belongs to class and is bang_list clearfix bang_list_modeul in

Further inspection can also reveal the corresponding position of the book title, which is an important basis for various analysis methods

1. Traditional BeautifulSoup operation

The classic BeautifulSoup method uses from bs4 import BeautifulSoup, and then uses soup = BeautifulSoup(html, " lxml") Convert the text into a specific standardized structure and use the find series of methods to parse it. The code is as follows:

import requests

from bs4 import BeautifulSoup

url = 'http://bang.dangdang.com/books/bestsellers/01.00.00.00.00.00-24hours-0-0-1-1'

response = requests.get(url).text

def bs_for_parse(response):

soup = BeautifulSoup(response, "lxml")

li_list = soup.find('ul', class_='bang_list clearfix bang_list_mode').find_all('li') # 锁定ul后获取20个li

for li in li_list:

title = li.find('div', class_='name').find('a')['title'] # 逐个解析获取书名

print(title)

if __name__ == '__main__':

bs_for_parse(response)

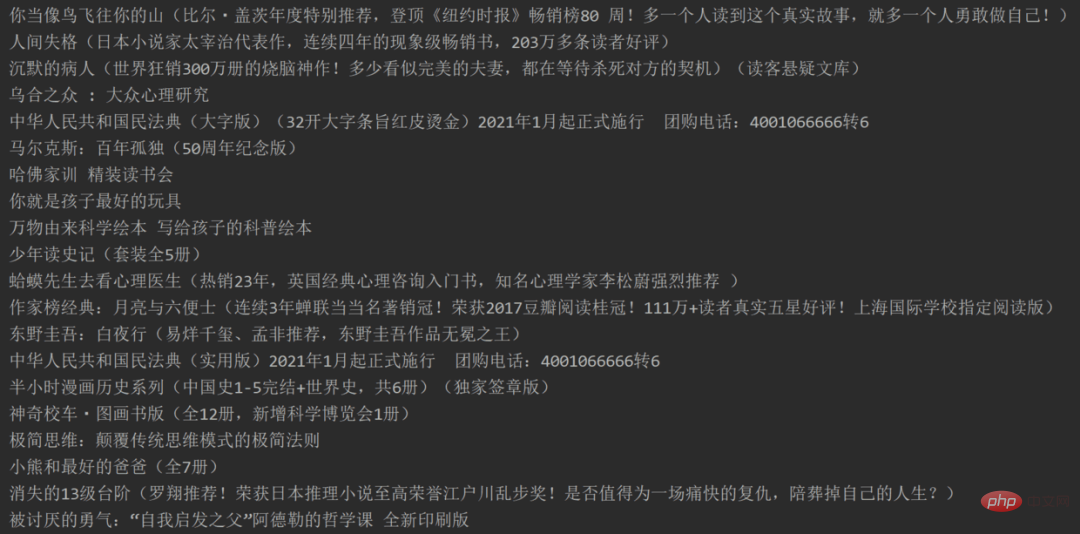

Successfully obtained 20 book titles, some of which appear lengthy in writing can be processed through regular expressions or other string methods. This article will not introduce them in detail

2. 基于 BeautifulSoup 的 CSS 选择器

这种方法实际上就是 PyQuery 中 CSS 选择器在其他模块的迁移使用,用法是类似的。关于 CSS 选择器详细语法可以参考:http://www.w3school.com.cn/cssref/css_selectors.asp由于是基于 BeautifulSoup 所以导入的模块以及文本结构转换都是一致的:

import requests

from bs4 import BeautifulSoup

url = 'http://bang.dangdang.com/books/bestsellers/01.00.00.00.00.00-24hours-0-0-1-1'

response = requests.get(url).text

def css_for_parse(response):

soup = BeautifulSoup(response, "lxml")

print(soup)

if __name__ == '__main__':

css_for_parse(response)然后就是通过 soup.select 辅以特定的 CSS 语法获取特定内容,基础依旧是对元素的认真审查分析:

import requests

from bs4 import BeautifulSoup

from lxml import html

url = 'http://bang.dangdang.com/books/bestsellers/01.00.00.00.00.00-24hours-0-0-1-1'

response = requests.get(url).text

def css_for_parse(response):

soup = BeautifulSoup(response, "lxml")

li_list = soup.select('ul.bang_list.clearfix.bang_list_mode > li')

for li in li_list:

title = li.select('div.name > a')[0]['title']

print(title)

if __name__ == '__main__':

css_for_parse(response)3. XPath

XPath 即为 XML 路径语言,它是一种用来确定 XML 文档中某部分位置的计算机语言,如果使用 Chrome 浏览器建议安装 XPath Helper 插件,会大大提高写 XPath 的效率。

之前的爬虫文章基本都是基于 XPath,大家相对比较熟悉因此代码直接给出:

import requests

from lxml import html

url = 'http://bang.dangdang.com/books/bestsellers/01.00.00.00.00.00-24hours-0-0-1-1'

response = requests.get(url).text

def xpath_for_parse(response):

selector = html.fromstring(response)

books = selector.xpath("//ul[@class='bang_list clearfix bang_list_mode']/li")

for book in books:

title = book.xpath('div[@class="name"]/a/@title')[0]

print(title)

if __name__ == '__main__':

xpath_for_parse(response)4. 正则表达式

如果对 HTML 语言不熟悉,那么之前的几种解析方法都会比较吃力。这里也提供一种万能解析大法:正则表达式,只需要关注文本本身有什么特殊构造文法,即可用特定规则获取相应内容。依赖的模块是 re

首先重新观察直接返回的内容中,需要的文字前后有什么特殊:

import requests import re url = 'http://bang.dangdang.com/books/bestsellers/01.00.00.00.00.00-24hours-0-0-1-1' response = requests.get(url).text print(response)

观察几个数目相信就有答案了:

观察几个数目相信就有答案了:a7758aac1f23c7126c7b5ea3ba480710eab402ff055639ed6d70ede803486abf 书名就藏在上面的字符串中,蕴含的网址链接中末尾的数字会随着书名而改变。

分析到这里正则表达式就可以写出来了:

import requests

import re

url = 'http://bang.dangdang.com/books/bestsellers/01.00.00.00.00.00-24hours-0-0-1-1'

response = requests.get(url).text

def re_for_parse(response):

reg = '<div class="name"><a href="http://product.dangdang.com/\d+.html" target="_blank" title="(.*?)">'

for title in re.findall(reg, response):

print(title)

if __name__ == '__main__':

re_for_parse(response)可以发现正则写法是最简单的,但是需要对于正则规则非常熟练。所谓正则大法好!

当然,不论哪种方法都有它所适用的场景,在真实操作中我们也需要在分析网页结构来判断如何高效的定位元素,最后附上本文介绍的四种方法的完整代码,大家可以自行操作一下来加深体会

import requests

from bs4 import BeautifulSoup

from lxml import html

import re

url = 'http://bang.dangdang.com/books/bestsellers/01.00.00.00.00.00-24hours-0-0-1-1'

response = requests.get(url).text

def bs_for_parse(response):

soup = BeautifulSoup(response, "lxml")

li_list = soup.find('ul', class_='bang_list clearfix bang_list_mode').find_all('li')

for li in li_list:

title = li.find('div', class_='name').find('a')['title']

print(title)

def css_for_parse(response):

soup = BeautifulSoup(response, "lxml")

li_list = soup.select('ul.bang_list.clearfix.bang_list_mode > li')

for li in li_list:

title = li.select('div.name > a')[0]['title']

print(title)

def xpath_for_parse(response):

selector = html.fromstring(response)

books = selector.xpath("//ul[@class='bang_list clearfix bang_list_mode']/li")

for book in books:

title = book.xpath('div[@class="name"]/a/@title')[0]

print(title)

def re_for_parse(response):

reg = '<div class="name"><a href="http://product.dangdang.com/\d+.html" target="_blank" title="(.*?)">'

for title in re.findall(reg, response):

print(title)

if __name__ == '__main__':

# bs_for_parse(response)

# css_for_parse(response)

# xpath_for_parse(response)

re_for_parse(response)The above is the detailed content of Comparison of four commonly used methods of locating elements in Python crawlers, which one do you prefer?. For more information, please follow other related articles on the PHP Chinese website!