Technology peripherals

Technology peripherals AI

AI GPT-4 model architecture leaked: contains 1.8 trillion parameters, using hybrid expert model

GPT-4 model architecture leaked: contains 1.8 trillion parameters, using hybrid expert model

According to news on July 13, foreign media Semianalysis recently revealed the GPT-4 large model released by OpenAI in March this year, including GPT-4 model architecture, training And specific parameters and information such as inference infrastructure, parameter amount, training data set, token number, cost, Mixture of Experts model.

▲ Picture source Semianalysis

Foreign media stated that GPT-4 contains a total of 1.8 trillion parameters in 120 layers, while GPT- 3 There are only about 175 billion parameters. In order to keep costs reasonable, OpenAI uses a hybrid expert model to build .

IT Home Note: Mixture of Experts is a kind of neural network. The system separates and trains multiple models based on the data. After the output of each model, the system integrates these models and outputs them into one separate tasks.

▲ Picture source Semianalysis

It is reported that GPT-4 uses 16 mixed expert models (mixture of experts), each with 1110 100 million parameters, each forward pass route passes through two expert models.

In addition, it has 55 billion shared attention parameters and was trained using a dataset containing 13 trillion tokens. The tokens are not unique and are calculated as more tokens according to the number of iterations.

The context length of GPT-4 pre-training phase is 8k, and the 32k version is the result of fine-tuning 8k. The training cost is quite high. Foreign media said that 8x H100 cannot achieve 33.33 Tokens per second. The speed provides the required dense parameter model, so training this model requires extremely high inference costs. Calculated at US$1 per hour for the H100 physical machine, the cost of one training is as high as US$63 million (approximately 451 million yuan) ).

In this regard, OpenAI chose to use the A100 GPU in the cloud to train the model, reducing the final training cost to about US$21.5 million (approximately 154 million yuan), using a slightly longer time and reducing the training cost. cost.

The above is the detailed content of GPT-4 model architecture leaked: contains 1.8 trillion parameters, using hybrid expert model. For more information, please follow other related articles on the PHP Chinese website!

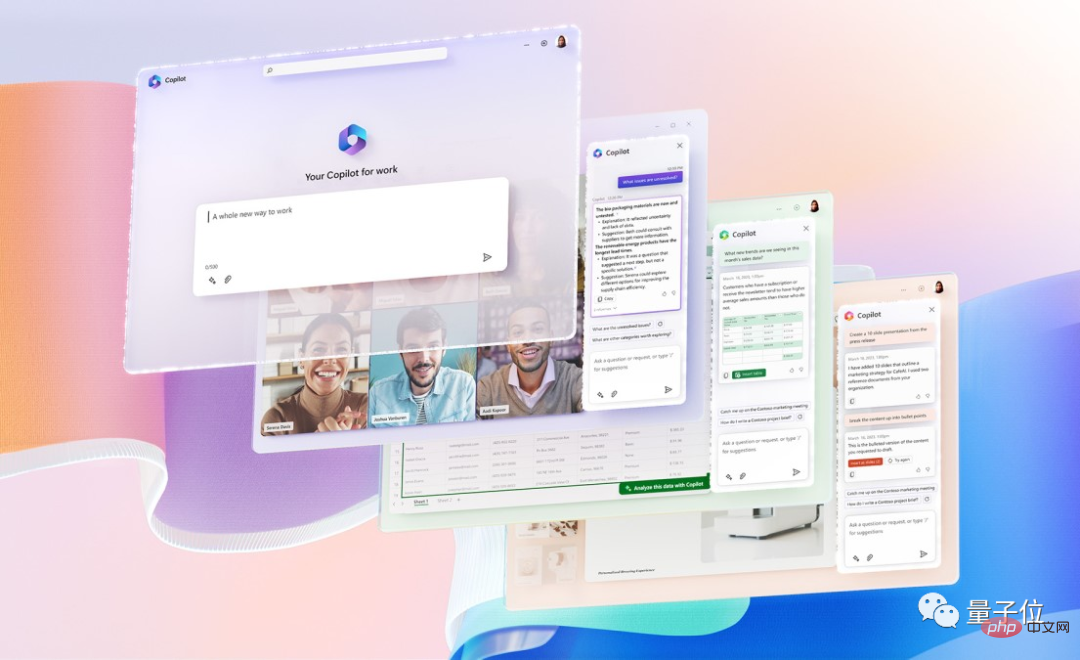

GPT-4接入Office全家桶!Excel到PPT动嘴就能做,微软:重新发明生产力Apr 12, 2023 pm 02:40 PM

GPT-4接入Office全家桶!Excel到PPT动嘴就能做,微软:重新发明生产力Apr 12, 2023 pm 02:40 PM一觉醒来,工作的方式被彻底改变。微软把AI神器GPT-4全面接入Office,这下ChatPPT、ChatWord、ChatExcel一家整整齐齐。CEO纳德拉在发布会上直接放话:今天,进入人机交互的新时代,重新发明生产力。新功能名叫Microsoft 365 Copilot(副驾驶),与改变了程序员的代码助手GitHub Copilot成为一个系列,继续改变更多人。现在AI不光能自动做PPT,而且能根据Word文档的内容一键做出精美排版。甚至连上台时对着每一页PPT应该讲什么话,都给一起安排

集成GPT-4的Cursor让编写代码和聊天一样简单,用自然语言编写代码的新时代已来Apr 04, 2023 pm 12:15 PM

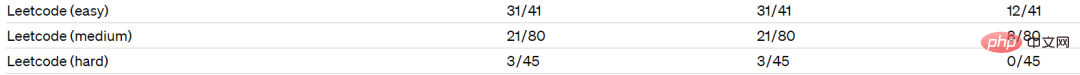

集成GPT-4的Cursor让编写代码和聊天一样简单,用自然语言编写代码的新时代已来Apr 04, 2023 pm 12:15 PM集成GPT-4的Github Copilot X还在小范围内测中,而集成GPT-4的Cursor已公开发行。Cursor是一个集成GPT-4的IDE,可以用自然语言编写代码,让编写代码和聊天一样简单。 GPT-4和GPT-3.5在处理和编写代码的能力上差别还是很大的。官网的一份测试报告。前两个是GPT-4,一个采用文本输入,一个采用图像输入;第三个是GPT3.5,可以看出GPT-4的代码能力相较于GPT-3.5有较大能力的提升。集成GPT-4的Github Copilot X还在小范围内测中,而

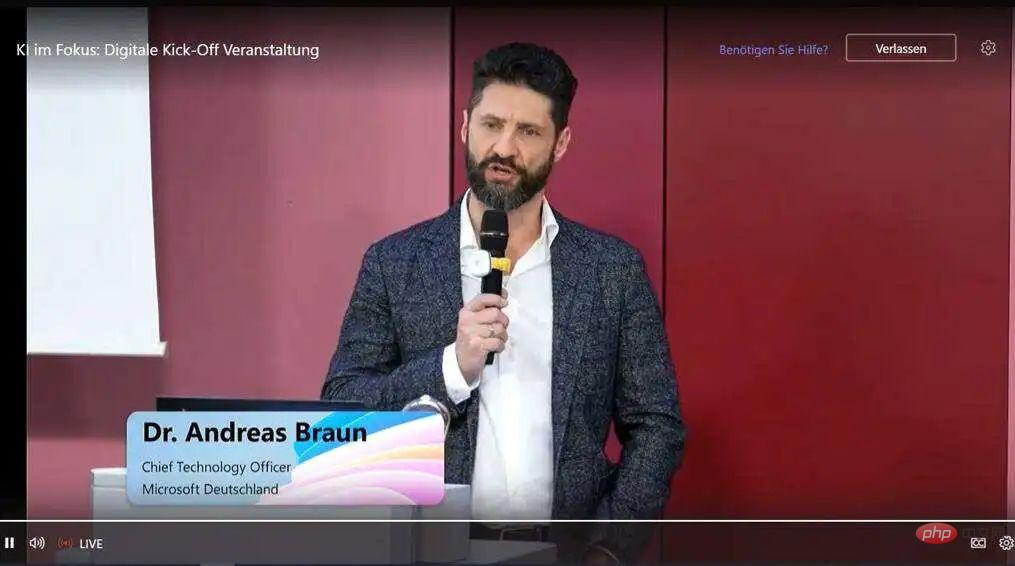

GPT-4的两个谣言和最新预测!Apr 11, 2023 pm 06:07 PM

GPT-4的两个谣言和最新预测!Apr 11, 2023 pm 06:07 PM作者 | 云昭3月9日,微软德国CTO Andreas Braun在AI kickoff会议上带来了一个期待已久的消息:“我们将于下周推出GPT-4,届时我们将推出多模式模式,提供完全不同的可能性——例如视频。”言语之中,他将大型语言模型(LLM)比作“游戏改变者”,因为他们教机器理解自然语言,然后机器以统计的方式理解以前只能由人类阅读和理解的东西。与此同时,这项技术已经发展到“适用于所有语言”:你可以用德语提问,也可以用意大利语回答。借助多模态,微软(-OpenAI)将“使模型变得全面”。那

再一次改变“AI”世界 GPT-4千呼万唤始出来Apr 10, 2023 pm 02:40 PM

再一次改变“AI”世界 GPT-4千呼万唤始出来Apr 10, 2023 pm 02:40 PM近段时间,人工智能聊天机器人ChatGPT刷爆网络,网友们争先恐后去领略它的超高情商和巨大威力。参加高考、修改代码、构思小说……它在广大网友的“鞭策”下不断突破自我,甚至可以用一整段程序,为你拼接出一只小狗。而这些技能只是基于GPT-3.5开发而来,在3月15日,AI世界再次更新,最新版本的GPT-4也被OpenAI发布了出来。与之前相比,GPT-4不仅展现了更加强大的语言理解能力,还能够处理图像内容,在考试中的得分甚至能超越90%的人类。那么,如此“逆天”的GPT-4还具有哪些能力?它又是如何

GPT-4帮助企业实现数字化转型的五种方法May 05, 2023 pm 12:19 PM

GPT-4帮助企业实现数字化转型的五种方法May 05, 2023 pm 12:19 PM人工智能在过去几十年里发展势头强劲,像GPT-4这样的大型语言模型引起了用户的更多兴趣,他们想知道GPT-4如何支持数字化转型。根据行业媒体的预测,到2024年,GPT-4所基于的ChatGPT深度学习堆栈将产生10亿美元的收入。GPT-4的普及是由于人工智能技术的力量,以及高用户可访问性和广泛的通用性。科技行业的许多不同领域都可以利用GPT-4来自动化和个性化许多任务,使企业员工能够专注于更复杂的任务。以下是GPT-4在几个不同领域促进数字化转型的一些例子。1、个性化员工培训像GPT-4这样的

GPT-4救了我狗的命Apr 04, 2023 pm 12:25 PM

GPT-4救了我狗的命Apr 04, 2023 pm 12:25 PMGPT-4在发布之时公布了一项医学知识测试结果,该测试由美国医师学会开发,最终它答对了75%的问题,相比GPT3.5的53%有很大的飞跃。 这两天,一篇关于“GPT-4救了我狗的命”的帖子属实有点火:短短一两天就有数千人转发,上万人点赞,网友在评论区讨论得热火朝天。△ 是真狗命,not人的“狗命”(Doge)乍一听,大家想必很纳闷:这俩能扯上什么关系?GPT-4还能长眼睛发现狗有什么危险吗?真实的经过是这样子的:当兽医说无能为力时,他问了GPT-4发帖人名叫Cooper。他自述自己养的一条狗子,

微软 Bing Chat 聊天机器人已升级使用最新 OpenAI GPT-4 技术Apr 12, 2023 pm 10:58 PM

微软 Bing Chat 聊天机器人已升级使用最新 OpenAI GPT-4 技术Apr 12, 2023 pm 10:58 PM3 月 15 日消息,今天 OpenAI 发布了全新的 GPT-4 大型语言模型,随后微软官方宣布,Bing Chat 此前已经升级使用 OpenAI 的 GPT-4 技术。微软公司副总裁兼消费者首席营销官 Yusuf Mehdi 确认 Bing Chat 聊天机器人 AI 已经在 GPT-4 上运行,ChatGPT 基于最新版本 GPT-4,由 OpenAI 开发 。微软 Bing 博客网站上的一篇帖子进一步证实了这一消息。微软表示,如果用户在过去五周内的任何时间使用过新的 Bing 预览版,

当GPT-4反思自己错了:性能提升近30%,编程能力提升21%Apr 04, 2023 am 11:55 AM

当GPT-4反思自己错了:性能提升近30%,编程能力提升21%Apr 04, 2023 am 11:55 AMGPT-4 的思考方式,越来越像人了。 人类在做错事时,会反思自己的行为,避免再次出错,如果让 GPT-4 这类大型语言模型也具备反思能力,性能不知道要提高多少了。众所周知,大型语言模型 (LLM) 在各种任务上已经表现出前所未有的性能。然而,这些 SOTA 方法通常需要对已定义的状态空间进行模型微调、策略优化等操作。由于缺乏高质量的训练数据、定义良好的状态空间,优化模型实现起来还是比较难的。此外,模型还不具备人类决策过程所固有的某些品质,特别是从错误中学习的能力。不过现在好了,在最近的一篇论文

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

SublimeText3 English version

Recommended: Win version, supports code prompts!

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

WebStorm Mac version

Useful JavaScript development tools

SublimeText3 Linux new version

SublimeText3 Linux latest version

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.