How Scrapy improves crawling stability and crawling efficiency

Scrapy is a powerful web crawler framework written in Python that helps users crawl the information they need from the Internet quickly and efficiently. In practice, however, Scrapy crawls often run into problems such as failed requests, incomplete data, or slow crawling speed, all of which hurt the efficiency and stability of the crawler. This article explores how to improve crawling stability and crawling efficiency in Scrapy.

- Set request headers and User-Agent

When crawling the web, if we do not provide any identifying information, the website server may regard our request as unsafe or malicious and refuse to return data. In that case, we can set the request headers and User-Agent through Scrapy to simulate a normal user request, thereby improving the stability of crawling.

You can set the request headers by defining the DEFAULT_REQUEST_HEADERS setting in the settings.py file:

    DEFAULT_REQUEST_HEADERS = {
        'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
        'Accept-Language': 'en',
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36 Edge/16.16299'
    }

Three headers, Accept, Accept-Language and User-Agent, are set here to simulate a common browser request. Among them, the User-Agent field is the most important because it tells the server which browser and operating system we claim to be using. Different browsers and operating systems send different User-Agent strings, so we need to set it according to the actual situation.

- Adjust concurrency and download delay

In Scrapy, we can adjust the concurrency and the delay between requests through the DOWNLOAD_DELAY and CONCURRENT_REQUESTS_PER_DOMAIN settings to achieve the best crawling efficiency.

The DOWNLOAD_DELAY setting controls the interval, in seconds, between consecutive requests to the same site. It avoids placing an excessive burden on the server and also makes it less likely that the website blocks our IP address. DOWNLOAD_DELAY should be large enough not to put excessive pressure on the server, which also reduces the failed or rejected requests that leave data incomplete.

The CONCURRENT_REQUESTS_PER_DOMAIN setting controls the number of requests made to the same domain name at the same time. The higher the value, the faster the crawl, but the greater the pressure on the server. We therefore need to tune this value for each target site to balance speed against stability.
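As a rough starting point, the two settings described above might be combined in settings.py as follows. The values are illustrative assumptions rather than recommendations, and the AutoThrottle lines are an optional addition not covered above: they enable Scrapy's built-in AutoThrottle extension, which adapts the delay to the observed server latency.

```python
# settings.py fragment (illustrative values; tune them for each target site)

# Wait about 1 second between consecutive requests to the same site.
DOWNLOAD_DELAY = 1.0

# When enabled (Scrapy's default), the actual wait is a random value between
# 0.5x and 1.5x DOWNLOAD_DELAY, which makes the traffic look less mechanical.
RANDOMIZE_DOWNLOAD_DELAY = True

# Allow at most 4 simultaneous requests to any single domain.
CONCURRENT_REQUESTS_PER_DOMAIN = 4

# Optional: let the AutoThrottle extension adjust the delay dynamically.
AUTOTHROTTLE_ENABLED = True
AUTOTHROTTLE_START_DELAY = 1.0   # initial delay in seconds
AUTOTHROTTLE_MAX_DELAY = 10.0    # upper bound when the server is slow
```

A reasonable way to tune these is to start conservatively, watch the error and retry counts in the crawl statistics, and only then raise the concurrency or lower the delay.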

- Use proxy IP

Some websites restrict access from a single IP address, for example by presenting a verification code (CAPTCHA) or by banning the IP address outright. In that case, we can use proxy IPs to work around the problem.

To use proxy IPs, write a custom downloader middleware and register it in the DOWNLOADER_MIDDLEWARES setting. Before each request is sent, the middleware obtains an available proxy IP from a proxy pool and routes the request to the target website through it. This spreads requests across several IP addresses, which makes IP-based blocking less likely and improves the stability and efficiency of crawling.
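The middleware described above could be sketched roughly as follows. This is a minimal sketch, not a complete proxy manager: the class name `RandomProxyMiddleware`, the `PROXY_POOL` list and its addresses, and the custom `PROXY_POOL` setting name are all hypothetical. It relies on the fact that Scrapy routes a request through whatever proxy URL is stored in `request.meta["proxy"]`.

```python
import random

# Hypothetical proxy pool; replace with proxies you are permitted to use.
PROXY_POOL = [
    "http://127.0.0.1:8001",
    "http://127.0.0.1:8002",
]


class RandomProxyMiddleware:
    """Downloader middleware that assigns a random proxy to each request."""

    def __init__(self, proxies=None):
        # Fall back to the module-level pool when no list is supplied.
        self.proxies = list(proxies or PROXY_POOL)

    @classmethod
    def from_crawler(cls, crawler):
        # Assumption: the project defines a custom PROXY_POOL list setting.
        return cls(crawler.settings.getlist("PROXY_POOL"))

    def process_request(self, request, spider):
        # Scrapy sends the request through the proxy stored in meta["proxy"].
        request.meta["proxy"] = random.choice(self.proxies)
        # Returning None tells Scrapy to continue handling the request.
        return None
```

To enable it, the class would be listed in DOWNLOADER_MIDDLEWARES under its import path (for example a hypothetical `myproject.middlewares.RandomProxyMiddleware`) with an order value such as 350. A production version would also need to drop proxies that fail repeatedly, which this sketch does not attempt.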

- Dealing with anti-crawler strategies

Many websites today deploy anti-crawler strategies, such as verification codes (CAPTCHAs) and limits on access frequency. These strategies cause a lot of trouble for crawlers, so we need effective measures to deal with them.

One solution is to crawl with a random User-Agent and proxy IP on each request so that the website cannot easily tie the traffic to a single client. Another is to recognize verification codes automatically with tools such as Tesseract (for optical character recognition) and Pillow (for image preprocessing), then submit the recognized answer.
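The random User-Agent idea can be sketched as another small downloader middleware. This is an illustration under stated assumptions: the class name `RandomUserAgentMiddleware` and the `USER_AGENTS` list are hypothetical, and the sample strings are only placeholders that would need to be kept current in practice.

```python
import random

# Illustrative User-Agent strings (placeholders, not a maintained list).
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36",
    "Mozilla/5.0 (X11; Linux x86_64; rv:109.0) Gecko/20100101 Firefox/115.0",
]


class RandomUserAgentMiddleware:
    """Downloader middleware that picks a User-Agent for each request."""

    def __init__(self, user_agents=None):
        self.user_agents = list(user_agents or USER_AGENTS)

    def process_request(self, request, spider):
        # Overwrite any User-Agent set earlier with a randomly chosen one.
        request.headers["User-Agent"] = random.choice(self.user_agents)
        # Returning None tells Scrapy to continue handling the request.
        return None
```

Like any custom downloader middleware, it takes effect only after being registered in DOWNLOADER_MIDDLEWARES. It can be combined with a proxy middleware so that both the IP address and the User-Agent vary between requests.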

- Use distributed crawling

When crawling large websites, a single-machine crawler often hits bottlenecks such as limited performance or IP bans. In that case, we can use distributed crawling to spread the crawl tasks across multiple crawler nodes, thereby improving the efficiency and stability of crawling.

The Scrapy ecosystem offers third-party plug-ins that help here, such as Scrapy-Redis, which lets multiple crawler nodes share one request queue through Redis, and Scrapy-Crawlera, which routes requests through a hosted proxy service. They can help users quickly build a reliable distributed crawler platform.
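For Scrapy-Redis, the switch to a shared queue is mostly a matter of configuration. The fragment below is a sketch that assumes the scrapy-redis package is installed and that a Redis server is reachable at the hypothetical address shown; every node running the same spider with these settings pulls requests from the same queue.

```python
# settings.py fragment for scrapy-redis
# (assumes `pip install scrapy-redis` and a reachable Redis server)

# Store the request queue in Redis so that all crawler nodes share it.
SCHEDULER = "scrapy_redis.scheduler.Scheduler"

# Deduplicate requests across nodes with a Redis-backed fingerprint filter.
DUPEFILTER_CLASS = "scrapy_redis.dupefilter.RFPDupeFilter"

# Keep the queue in Redis between runs so a crawl can be paused and resumed.
SCHEDULER_PERSIST = True

# Hypothetical Redis location; point this at your own server.
REDIS_URL = "redis://localhost:6379"
```

Because the queue and the duplicate filter live in Redis, nodes can be added or removed while the crawl is running without losing pending requests.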

Summary

With the five methods above, we can effectively improve the stability and efficiency of crawling with Scrapy. These are only basic strategies, and different sites and situations may require different approaches. In practice, we need to choose the most appropriate measures for each case so that the crawler works more efficiently and more stably.

The above is the detailed content of How Scrapy improves crawling stability and crawling efficiency. For more information, please follow other related articles on the PHP Chinese website!

如何在 Windows 11 中为应用程序或进程打开或关闭效率模式Apr 14, 2023 pm 09:46 PM

如何在 Windows 11 中为应用程序或进程打开或关闭效率模式Apr 14, 2023 pm 09:46 PMWindows 11 22H2中的新任务管理器对高级用户来说是一个福音。现在,它通过附加数据提供更好的 UI 体验,以密切关注您正在运行的流程、任务、服务和硬件组件。如果您一直在使用新的任务管理器,那么您可能已经注意到新的效率模式。它是什么?它是否有助于提高 Windows 11 系统的性能?让我们来了解一下!Windows 11 中的效率模式是什么?效率模式是任务管理器中的一

两小时就能超过人类!DeepMind最新AI速通26款雅达利游戏Jul 03, 2023 pm 08:57 PM

两小时就能超过人类!DeepMind最新AI速通26款雅达利游戏Jul 03, 2023 pm 08:57 PMDeepMind的AI智能体,又来卷自己了!注意看,这个名叫BBF的家伙,只用2个小时,就掌握了26款雅达利游戏,效率和人类相当,超越了自己一众前辈。要知道,AI智能体通过强化学习解决问题的效果一直都不错,但最大的问题就在于这种方式效率很低,需要很长时间摸索。图片而BBF带来的突破正是在效率方面。怪不得它的全名可以叫Bigger、Better、Faster。而且它还能只在单卡上完成训练,算力要求也降低许多。BBF由谷歌DeepMind和蒙特利尔大学共同提出,目前数据和代码均已开源。最高可取得人类

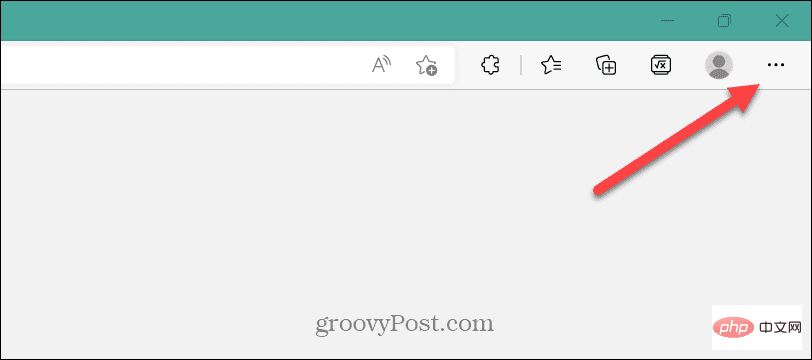

如何在 Microsoft Edge 中开启节能模式?Apr 20, 2023 pm 08:22 PM

如何在 Microsoft Edge 中开启节能模式?Apr 20, 2023 pm 08:22 PMEdge等基于Chromium的浏览器会占用很多资源,但您可以在MicrosoftEdge中启用效率模式以提高性能。MicrosoftEdge网络浏览器自其不起眼的开始以来已经走过了漫长的道路。最近,微软为浏览器添加了一种新的效率模式,旨在提高浏览器在PC上的整体性能。效率模式有助于延长电池寿命并减少系统资源使用。例如,使用Chromium构建的浏览器(如GoogleChrome和MicrosoftEdge)因占用RAM和CPU周期而臭名昭著。因此,为了

掌握Python,提高工作效率和生活品质Feb 18, 2024 pm 05:57 PM

掌握Python,提高工作效率和生活品质Feb 18, 2024 pm 05:57 PM标题:Python让生活更便捷:掌握这门语言,提升工作效率和生活品质Python作为一种强大而简单易学的编程语言,在当今的数字化时代越来越受到人们的青睐。不仅仅用于编写程序和进行数据分析,Python还可以在我们的日常生活中发挥巨大的作用。掌握这门语言,不仅能提升工作效率,还能提高生活品质。本文将通过具体的代码示例,展示Python在生活中的广泛应用,帮助读

Java开发技巧大揭秘:优化数据库事务处理效率Nov 20, 2023 pm 03:13 PM

Java开发技巧大揭秘:优化数据库事务处理效率Nov 20, 2023 pm 03:13 PM随着互联网的快速发展,数据库的重要性日益凸显。作为一名Java开发者,我们经常会涉及到数据库操作,数据库事务处理的效率直接关系到整个系统的性能和稳定性。本文将介绍一些Java开发中常用的优化数据库事务处理效率的技巧,帮助开发者提高系统的性能和响应速度。批量插入/更新操作通常情况下,一次向数据库中插入或更新单条记录的效率远低于批量操作。因此,在进行批量插入/更

学会利用sessionstorage,提高前端开发效率Jan 13, 2024 am 11:56 AM

学会利用sessionstorage,提高前端开发效率Jan 13, 2024 am 11:56 AM掌握sessionStorage的作用,提升前端开发效率,需要具体代码示例随着互联网的快速发展,前端开发领域也日新月异。在进行前端开发时,我们经常需要处理大量的数据,并将其存储在浏览器中以便后续使用。而sessionStorage就是一种非常重要的前端开发工具,可以为我们提供临时的本地存储解决方案,提高开发效率。本文将介绍sessionStorage的作用,

子网掩码:作用与网络通信效率的影响Dec 26, 2023 pm 04:28 PM

子网掩码:作用与网络通信效率的影响Dec 26, 2023 pm 04:28 PM子网掩码的作用及其对网络通信效率的影响引言:随着互联网的普及,网络通信成为现代社会中不可或缺的一部分。与此同时,网络通信的效率也成为了人们关注的焦点之一。在构建和管理网络的过程中,子网掩码是一项重要而且基础的配置选项,它在网络通信中起着关键的作用。本文将介绍子网掩码的作用,以及它对网络通信效率的影响。一、子网掩码的定义及作用子网掩码(subnetmask)

Scrapy如何提高爬取稳定性和抓取效率Jun 23, 2023 am 08:38 AM

Scrapy如何提高爬取稳定性和抓取效率Jun 23, 2023 am 08:38 AMScrapy是一款Python编写的强大的网络爬虫框架,它可以帮助用户从互联网上快速、高效地抓取所需的信息。然而,在使用Scrapy进行爬取的过程中,往往会遇到一些问题,例如抓取失败、数据不完整或爬取速度慢等情况,这些问题都会影响到爬虫的效率和稳定性。因此,本文将探讨Scrapy如何提高爬取稳定性和抓取效率。设置请求头和User-Agent在进行网络爬取时,

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

Dreamweaver CS6

Visual web development tools

WebStorm Mac version

Useful JavaScript development tools