Scrapy optimization tips: How to reduce crawling of duplicate URLs and improve efficiency

Scrapy is a powerful Python crawler framework for collecting large amounts of data from the web. A common problem when developing with Scrapy is that the same URLs get crawled more than once, which wastes time and resources and lowers throughput. This article introduces several techniques for reducing duplicate crawling and improving the efficiency of Scrapy crawlers.

1. Use the start_urls and allowed_domains attributes

In a Scrapy spider, the start_urls attribute lists the URLs from which crawling begins, and the allowed_domains attribute lists the domains the spider is allowed to crawl. start_urls only seeds the crawl; the filtering is done by allowed_domains. With Scrapy's default offsite filtering enabled, requests to hosts outside the allowed domains and their subdomains are dropped before they are downloaded, so the crawler does not spend time and resources on pages it does not need.
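The domain check can be illustrated with a few lines of plain Python. This is a simplified sketch of the idea behind offsite filtering, not Scrapy's actual implementation; the function name is_allowed is hypothetical and example.com is a placeholder domain.

```python
from urllib.parse import urlsplit


def is_allowed(url, allowed_domains):
    """Return True if the URL's host is an allowed domain or one of its subdomains."""
    host = (urlsplit(url).hostname or "").lower()
    return any(
        host == domain or host.endswith("." + domain)
        for domain in allowed_domains
    )


allowed = ["example.com"]  # same shape as a spider's allowed_domains list
for candidate in (
    "https://example.com/page",
    "https://blog.example.com/post",
    "https://other.org/page",
):
    print(candidate, is_allowed(candidate, allowed))
```

In a real spider you would only set allowed_domains and let Scrapy apply the check to every request the spider yields.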

2. Use Scrapy-Redis to implement distributed crawling

When a large number of URLs need to be crawled, a single machine can become the bottleneck, so distributed crawling is worth considering. Scrapy-Redis is a Scrapy extension that keeps the request queue and the duplicate filter in a Redis database, so several crawler processes can share one queue and avoid re-crawling URLs that another process has already handled. Set the REDIS_HOST and REDIS_PORT parameters in settings.py to specify the address and port of the Redis server that Scrapy-Redis connects to.
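A minimal settings.py fragment for this setup might look like the following. The setting names are the ones documented by the Scrapy-Redis project; the host and port values are placeholders and assume a Redis server running locally on its default port.

```python
# settings.py (fragment)

# Let Scrapy-Redis schedule requests through a queue stored in Redis.
SCHEDULER = "scrapy_redis.scheduler.Scheduler"

# Share the duplicate filter across all crawler processes via Redis.
DUPEFILTER_CLASS = "scrapy_redis.dupefilter.RFPDupeFilter"

# Keep the queue and the seen-request set in Redis when the spider closes.
SCHEDULER_PERSIST = True

# Placeholder connection details: adjust to your own Redis server.
REDIS_HOST = "localhost"
REDIS_PORT = 6379
```

Every process started with these settings reads from and writes to the same Redis instance, which is what makes the deduplication global rather than per process.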

3. Use incremental crawling technology

When a crawler runs repeatedly against the same site, it tends to fetch URLs that an earlier run has already processed, which wastes time and resources. Incremental crawling reduces this repetition. The basic idea is to record every crawled URL in persistent storage and, on the next run, check each candidate URL against that record. If the URL has already been crawled, it is skipped; otherwise it is crawled and added to the record.
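The record-and-check step can be sketched with Python's built-in sqlite3 module. This is one possible way to persist the record, not a Scrapy API; the class name SeenUrlStore, the table layout and the file name seen_urls.db are assumptions made for illustration.

```python
import sqlite3


class SeenUrlStore:
    """Persists crawled URLs so that later runs can skip them."""

    def __init__(self, path="seen_urls.db"):
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS seen (url TEXT PRIMARY KEY)"
        )
        self.conn.commit()

    def mark_if_new(self, url):
        """Record the URL and return True only if it was not recorded before."""
        cursor = self.conn.execute(
            "INSERT OR IGNORE INTO seen (url) VALUES (?)", (url,)
        )
        self.conn.commit()
        # rowcount is 1 when the row was inserted, 0 when it already existed.
        return cursor.rowcount == 1

    def close(self):
        self.conn.close()
```

A spider could call mark_if_new(url) before yielding a request and skip the URL when it returns False. Scrapy can also persist its own duplicate-filter state between runs when a crawl is started with the JOBDIR setting, which may be enough if you only need to resume an interrupted crawl.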

4. Use middleware to filter duplicate URLs

Besides incremental crawling, you can filter duplicate URLs in middleware. Scrapy middleware are custom hook classes that process requests and responses while the crawler is running, so a custom middleware can inspect each outgoing request and drop it if its URL has already been crawled. The most common approach is to record crawled URLs in a Redis database (a Redis set suits membership checks well) and query that record to decide whether a URL has been crawled.
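The check such a middleware would perform can be sketched as follows. This is not a complete Scrapy middleware: the class names and the key crawler:seen_urls are hypothetical, and FakeRedis is an in-memory stand-in that mimics only the sadd call so the sketch runs without a Redis server. With the real redis-py client, sadd likewise returns the number of members that were newly added.

```python
class RedisUrlFilter:
    """Tracks crawled URLs in a Redis set (the key name is hypothetical)."""

    def __init__(self, client, key="crawler:seen_urls"):
        self.client = client  # e.g. a redis.Redis instance in a real project
        self.key = key

    def is_duplicate(self, url):
        # SADD returns 1 if the URL was newly added, 0 if it was already present.
        return self.client.sadd(self.key, url) == 0


class FakeRedis:
    """In-memory stand-in that mimics only the sadd command."""

    def __init__(self):
        self.sets = {}

    def sadd(self, key, member):
        members = self.sets.setdefault(key, set())
        if member in members:
            return 0
        members.add(member)
        return 1


url_filter = RedisUrlFilter(FakeRedis())
print(url_filter.is_duplicate("https://example.com/page"))  # False: first time seen
print(url_filter.is_duplicate("https://example.com/page"))  # True: already recorded
```

Inside a downloader middleware, the process_request method would call is_duplicate(request.url) and raise scrapy.exceptions.IgnoreRequest for duplicates. Adding and checking in a single sadd call avoids a race between separate "check" and "insert" steps when several processes share the set.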

5. Use DupeFilter to filter duplicate URLs

In addition to custom middleware, Scrapy ships with a built-in duplicate filter, DupeFilter, which is enabled by default (the RFPDupeFilter class, selected through the DUPEFILTER_CLASS setting). It computes a fingerprint, that is, a hash, for each request and keeps the fingerprints it has seen in memory. During a crawl, a request whose fingerprint has already been seen is dropped unless it was created with dont_filter=True. DupeFilter needs no Redis server, which makes it a lightweight way to filter duplicate URLs within a single crawler process.
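The fingerprint idea can be illustrated with the standard library alone. The sketch below is a simplified stand-in, not Scrapy's exact fingerprint algorithm; the helper names canonicalize and fingerprint and the class InMemoryDupeFilter are hypothetical.

```python
import hashlib
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def canonicalize(url):
    """Sort query parameters and drop the fragment so equivalent URLs match."""
    parts = urlsplit(url)
    query = urlencode(sorted(parse_qsl(parts.query, keep_blank_values=True)))
    return urlunsplit((parts.scheme, parts.netloc, parts.path, query, ""))


def fingerprint(method, url, body=b""):
    """Hash the method, canonical URL and body into one hex digest."""
    digest = hashlib.sha1()
    for part in (method.upper().encode(), canonicalize(url).encode(), body):
        digest.update(part)
        digest.update(b"\n")  # separator between the hashed parts
    return digest.hexdigest()


class InMemoryDupeFilter:
    """Remembers fingerprints in a set, as an in-memory filter would."""

    def __init__(self):
        self.fingerprints = set()

    def request_seen(self, method, url, body=b""):
        fp = fingerprint(method, url, body)
        if fp in self.fingerprints:
            return True
        self.fingerprints.add(fp)
        return False
```

Because the URL is canonicalized before hashing, https://example.com/?b=2&a=1 and https://example.com/?a=1&b=2 produce the same fingerprint, while a POST to the same URL is treated as a different request.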

Summary:

In Scrapy crawler development, crawling duplicate URLs is a common problem, and several techniques can be combined to reduce it and improve crawler efficiency. This article covered five of them: using the start_urls and allowed_domains attributes, using Scrapy-Redis for distributed crawling, using incremental crawling, filtering duplicate URLs with custom middleware, and filtering duplicate URLs with the built-in DupeFilter. Choose the methods that fit your own crawl size and infrastructure.

The above is the detailed content of Scrapy optimization tips: How to reduce crawling of duplicate URLs and improve efficiency. For more information, please follow other related articles on the PHP Chinese website!

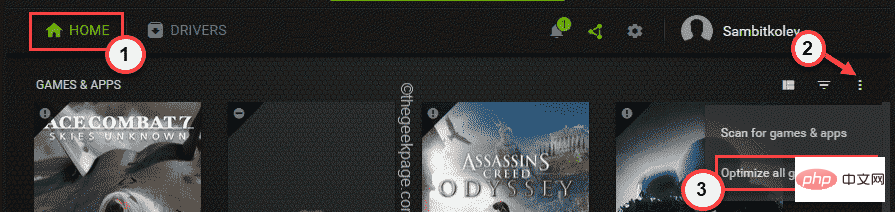

修复:Windows 11 无法优化游戏的问题Apr 30, 2023 pm 01:28 PM

修复:Windows 11 无法优化游戏的问题Apr 30, 2023 pm 01:28 PMGeforceExperience不仅为您下载最新版本的游戏驱动程序,它还提供更多!最酷的事情之一是它可以根据您的系统规格优化您安装的所有游戏,为您提供最佳的游戏体验。但是一些游戏玩家报告了一个问题,即GeForceExperience没有优化他们系统上的游戏。只需执行这些简单的步骤即可在您的系统上解决此问题。修复1–为所有游戏使用最佳设置您可以设置为所有游戏使用最佳设置。1.在您的系统上打开GeForceExperience应用程序。2.GeForceExperience面

Nginx性能优化与安全设置Jun 10, 2023 am 09:18 AM

Nginx性能优化与安全设置Jun 10, 2023 am 09:18 AMNginx是一种常用的Web服务器,代理服务器和负载均衡器,性能优越,安全可靠,可以用于高负载的Web应用程序。在本文中,我们将探讨Nginx的性能优化和安全设置。一、性能优化调整worker_processes参数worker_processes是Nginx的一个重要参数。它指定了可以使用的worker进程数。这个值需要根据服务器硬件、网络带宽、负载类型等

Windows 11 Insiders 现在对在窗口模式下运行的传统游戏进行了优化Apr 25, 2023 pm 04:28 PM

Windows 11 Insiders 现在对在窗口模式下运行的传统游戏进行了优化Apr 25, 2023 pm 04:28 PM如果您在Windows机器上玩旧版游戏,您会很高兴知道Microsoft为它们计划了某些优化,特别是如果您在窗口模式下运行它们。该公司宣布,最近开发频道版本的内部人员现在可以利用这些功能。本质上,许多旧游戏使用“legacy-blt”演示模型在您的显示器上渲染帧。尽管DirectX12(DX12)已经利用了一种称为“翻转模型”的新演示模式,但Microsoft现在也正在向DX10和DX11游戏推出这一增强功能。迁移将改善延迟,还将为自动HDR和可变刷新率(VRR)等进一步增强打

如何使用缓存优化PHP和MySQLMay 11, 2023 am 08:52 AM

如何使用缓存优化PHP和MySQLMay 11, 2023 am 08:52 AM随着互联网的不断发展和应用的扩展,越来越多的网站和应用需要处理海量的数据和实现高流量的访问。在这种背景下,对于PHP和MySQL这样的常用技术,缓存优化成为了非常必要的优化手段。本文将在介绍缓存的概念及作用的基础上,从两个方面的PHP和MySQL进行缓存优化的实现,希望能够为广大开发者提供一些帮助。一、缓存的概念及作用缓存是指将计算结果或读取数据的结果缓存到

如何通过优化查询中的LIKE操作来提高MySQL性能May 11, 2023 am 08:11 AM

如何通过优化查询中的LIKE操作来提高MySQL性能May 11, 2023 am 08:11 AMMySQL是目前最流行的关系型数据库之一,但是在处理大量数据时,MySQL的性能可能会受到影响。其中,一种常见的性能瓶颈是查询中的LIKE操作。在MySQL中,LIKE操作是用来模糊匹配字符串的,它可以在查询数据表时用来查找包含指定字符或者模式的数据记录。但是,在大型数据表中,如果使用LIKE操作,它会对数据库的性能造成影响。为了解决这个问题,我们可

Go语言中的优化和重构的方法Jun 02, 2023 am 10:40 AM

Go语言中的优化和重构的方法Jun 02, 2023 am 10:40 AMGo语言是一门相对年轻的编程语言,虽然从语言本身的设计来看,其已经考虑到了很多优化点,使得其具备高效的性能和良好的可维护性,但是这并不代表着我们在开发Go应用时不需要优化和重构,特别是在长期的代码积累过程中,原来的代码架构可能已经开始失去优势,需要通过优化和重构来提高系统的性能和可维护性。本文将分享一些在Go语言中优化和重构的方法,希望能够对Go开发者有所帮

Snapchat优化指甲追踪效果,与OPI合推AR指甲油滤镜May 30, 2023 am 09:19 AM

Snapchat优化指甲追踪效果,与OPI合推AR指甲油滤镜May 30, 2023 am 09:19 AM5月26日消息,SnapchatAR试穿滤镜技术升级,并与OPI品牌合作,推出指甲油AR试用滤镜。据悉,为了优化AR滤镜对手指甲的追踪定位,Snap在LensStudio中推出手部和指甲分割功能,允许开发者将AR图像叠加在指甲这种细节部分。据青亭网了解,指甲分割功能在识别到人手后,会给手部和指甲分别设置掩膜,用于渲染2D纹理。此外,还会识别用户个人指甲的底色,来模拟指甲油真实上手的效果。从演示效果来看,新的AR指甲油滤镜可以很好的模拟浅蓝磨砂质地。实际上,此前Snapchat曾推出AR指甲油试用

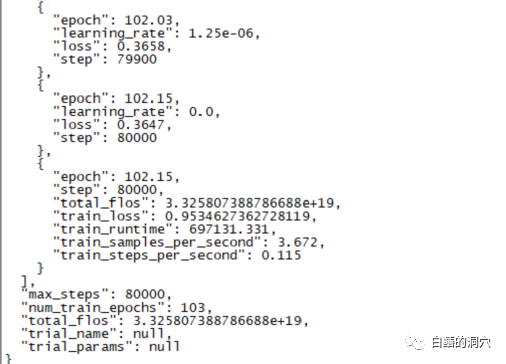

一篇学会本地知识库对LLM的性能优化Jun 12, 2023 am 09:23 AM

一篇学会本地知识库对LLM的性能优化Jun 12, 2023 am 09:23 AM昨天一个跑了220个小时的微调训练完成了,主要任务是想在CHATGLM-6B上微调出一个能够较为精确的诊断数据库错误信息的对话模型来。不过这个等了将近十天的训练最后的结果令人失望,比起我之前做的一个样本覆盖更小的训练来,差的还是挺大的。这样的结果还是有点令人失望的,这个模型基本上是没有实用价值的。看样子需要重新调整参数与训练集,再做一次训练。大语言模型的训练是一场军备竞赛,没有好的装备是玩不起来的。看样子我们也必须要升级一下实验室的装备了,否则没有几个十天可以浪费。从最近的几次失败的微调训练来看

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

SublimeText3 Chinese version

Chinese version, very easy to use

PhpStorm Mac version

The latest (2018.2.1) professional PHP integrated development tool

SublimeText3 Linux new version

SublimeText3 Linux latest version