Caching plays an important role in Java applications and can substantially improve performance and response time. Under high concurrency, however, many threads read and write the cache at the same time, so developers must control that concurrent access to keep the application correct and stable.

The following are some commonly used ways to control concurrent access to a cache in Java:

1. Synchronization lock

A synchronization lock is the most basic concurrency control technique in Java. By locking a critical resource, it ensures that only one thread can access that resource at a time. For a cache, you can control concurrent data operations by locking the underlying data structure.

For example, when using a HashMap as a cache, you can guard it with a synchronized block. The code example is as follows:

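// Assumes key (String) and loadData() are defined elsewhere.
Map<String, Object> cacheMap = Collections.synchronizedMap(new HashMap<>());

Object value;
// The wrapper synchronizes each get/put, but the check-then-put sequence
// must also be atomic, so hold the map's own lock around the whole sequence.
synchronized (cacheMap) {
    value = cacheMap.get(key);
    if (value == null) {
        value = loadData();
        cacheMap.put(key, value);
    }
}

However, synchronized locks also have obvious drawbacks: every access is serialized, so the lock can become a performance bottleneck, and holding it while calling code that acquires other locks (for example inside loadData()) can lead to deadlock.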
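To make the pattern above concrete, here is a minimal, self-contained sketch. It assumes a hypothetical loadData(String) that stands in for an expensive lookup and counts its own calls; the class name and the key "user:42" are likewise made up. Eight threads request the same key at once, and because the check and the insert happen inside one synchronized block, the loader runs only once. A plain HashMap is enough here because every access goes through that block.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.atomic.AtomicInteger;

public class SynchronizedCache {
    private final Map<String, Object> cacheMap = new HashMap<>();
    // Counts how often the hypothetical loader runs.
    final AtomicInteger loads = new AtomicInteger();

    // Hypothetical stand-in for an expensive lookup such as a database query.
    private Object loadData(String key) {
        loads.incrementAndGet();
        return "value-for-" + key;
    }

    public Object get(String key) {
        synchronized (cacheMap) { // only one thread at a time checks and fills the cache
            Object value = cacheMap.get(key);
            if (value == null) {
                value = loadData(key);
                cacheMap.put(key, value);
            }
            return value;
        }
    }

    // Starts the given number of threads that all request the same key at once
    // and returns how many times the loader ran.
    static int demo(int threads) throws InterruptedException {
        SynchronizedCache cache = new SynchronizedCache();
        CountDownLatch start = new CountDownLatch(1);
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                try {
                    start.await();
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    return;
                }
                cache.get("user:42");
            });
            workers[i].start();
        }
        start.countDown(); // release all threads together
        for (Thread t : workers) {
            t.join();
        }
        return cache.loads.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("loader calls: " + demo(8)); // prints "loader calls: 1"
    }
}
```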

2. ConcurrentHashMap

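ConcurrentHashMap is an efficient concurrent hash table. In Java 7 and earlier it divided the table into multiple segments, each with its own lock; since Java 8 it instead uses CAS operations and per-bin locking. Either way, threads working on different parts of the table rarely block one another. Because its individual operations are already thread-safe, a cache built on ConcurrentHashMap needs no external lock, which improves performance. Compound check-then-act sequences, however, should use its atomic methods such as putIfAbsent() or computeIfAbsent().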
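As an illustration of that built-in concurrency control, the following sketch submits 100 lookups over three keys to a thread pool; the class name, keys and loader are hypothetical. ConcurrentHashMap.computeIfAbsent() performs the check and the insert as one atomic step and applies the mapping function at most once per key, so the loader runs exactly three times. A separate get() followed by put() would not give that guarantee.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

public class ConcurrentCacheDemo {
    // Runs the given number of lookups over three keys and returns how often the loader ran.
    static int countLoads(int tasks) throws InterruptedException {
        ConcurrentMap<String, Object> cacheMap = new ConcurrentHashMap<>();
        AtomicInteger loads = new AtomicInteger();
        ExecutorService pool = Executors.newFixedThreadPool(4);
        for (int i = 0; i < tasks; i++) {
            String key = "key-" + (i % 3); // only three distinct keys
            // computeIfAbsent is atomic: the mapping function runs at most once per
            // absent key, and other threads asking for that key wait for its result.
            pool.execute(() -> cacheMap.computeIfAbsent(key, k -> {
                loads.incrementAndGet();
                return "value-for-" + k;
            }));
        }
        pool.shutdown();
        pool.awaitTermination(10, TimeUnit.SECONDS);
        return loads.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("loader calls: " + countLoads(100)); // prints "loader calls: 3"
    }
}
```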

For example, a cache built on ConcurrentHashMap can look like this:

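// Assumes key (String) and loadData() are defined elsewhere.
ConcurrentMap<String, Object> cacheMap = new ConcurrentHashMap<>();

// A separate get() followed by put() lets two threads both miss and both call
// loadData(); computeIfAbsent() performs the check and the insert atomically.
// ConcurrentHashMap does not store nulls: if loadData() returns null, nothing is cached.
Object value = cacheMap.computeIfAbsent(key, k -> loadData());

3. Read-write lock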

A read-write lock is a special kind of synchronization lock: it allows multiple threads to read a shared resource at the same time, while guaranteeing that no other thread reads or writes the resource during a write. Since reads usually far outnumber writes in a cache, this lets concurrent reads proceed without blocking each other.
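The sharing rules described above can be observed directly with tryLock(), which returns immediately instead of blocking. In this sketch (class and method names are illustrative), the calling thread holds the read lock of a ReentrantReadWriteLock while a second thread probes it: the second thread can also acquire the read lock, but its attempt to take the write lock fails.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.locks.ReentrantReadWriteLock;

public class ReadWriteLockDemo {
    // Returns {second reader acquired the read lock, writer acquired the write lock},
    // both probed from another thread while the calling thread holds a read lock.
    static boolean[] probe() throws Exception {
        ReentrantReadWriteLock lock = new ReentrantReadWriteLock();
        ExecutorService other = Executors.newSingleThreadExecutor();
        lock.readLock().lock(); // the calling thread is now a reader
        try {
            boolean secondReader = other.submit(() -> {
                boolean ok = lock.readLock().tryLock(); // readers may share the lock
                if (ok) {
                    lock.readLock().unlock();
                }
                return ok;
            }).get();
            boolean writer = other.submit(() -> {
                boolean ok = lock.writeLock().tryLock(); // refused while a reader holds the lock
                if (ok) {
                    lock.writeLock().unlock();
                }
                return ok;
            }).get();
            return new boolean[] {secondReader, writer};
        } finally {
            lock.readLock().unlock();
            other.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        boolean[] r = probe();
        System.out.println("second reader acquired: " + r[0]); // prints true
        System.out.println("writer acquired: " + r[1]);        // prints false
    }
}
```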

For example, when using a LinkedHashMap as a cache, you can control access with a ReentrantReadWriteLock. The code example is as follows:

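// Assumes key (String), loadData() and CACHE_MAX_SIZE are defined elsewhere.
// accessOrder is false (insertion order): with accessOrder = true, get() reorders
// the entries, a structural change that is unsafe under a shared read lock.
Map<String, Object> cacheMap = new LinkedHashMap<String, Object>(16, 0.75f, false) {
    @Override
    protected boolean removeEldestEntry(Map.Entry<String, Object> eldest) {
        return size() > CACHE_MAX_SIZE; // evict the oldest entry once the cache is full
    }
};

ReentrantReadWriteLock lock = new ReentrantReadWriteLock();

Object value;
// Fast path: many threads may hold the read lock at once.
lock.readLock().lock();
try {
    value = cacheMap.get(key);
} finally {
    lock.readLock().unlock();
}

if (value == null) {
    // A read lock cannot be upgraded, so it was released above before taking the write lock.
    lock.writeLock().lock();
    try {
        // Re-check: another thread may have loaded the value in the meantime.
        value = cacheMap.get(key);
        if (value == null) {
            value = loadData();
            cacheMap.put(key, value);
        }
    } finally {
        lock.writeLock().unlock();
    }
}

4. Memory model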

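In Java, the volatile keyword guarantees visibility and ordering for a variable in a multithreaded environment: a write to a volatile field happens-before every subsequent read of that field, so other threads see the new value and everything written before it. Unlike a synchronization lock, however, volatile provides no mutual exclusion and does not make compound operations atomic. In caching, you can rely on these memory-model guarantees to publish cached data safely.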
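The following sketch shows that visibility guarantee in a cache-like setting; the class, field and keys are hypothetical. A writer fully populates a HashMap and only then assigns it to a volatile field, while a reader thread waits for the reference to become non-null. Because the volatile write happens-before the read that observes it, the reader is guaranteed to see all three entries. This holds only if the map is never modified after it is published; volatile does not make later updates to the HashMap thread-safe.

```java
import java.util.HashMap;
import java.util.Map;

public class VolatilePublishDemo {
    // The volatile write of this reference happens-before any read that sees it,
    // so a reader that sees the map also sees the entries put into it beforehand.
    private static volatile Map<String, Object> snapshot;

    static int readPublishedSize() throws InterruptedException {
        snapshot = null;
        int[] seen = new int[1];
        Thread reader = new Thread(() -> {
            Map<String, Object> m;
            while ((m = snapshot) == null) {
                Thread.yield(); // wait until the writer publishes the map
            }
            seen[0] = m.size();
        });
        reader.start();

        Map<String, Object> fresh = new HashMap<>();
        fresh.put("a", 1);
        fresh.put("b", 2);
        fresh.put("c", 3);
        snapshot = fresh; // publish only after the map is fully populated

        reader.join(); // join() makes seen[0] visible to this thread
        return seen[0];
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("reader saw entries: " + readPublishedSize()); // prints "reader saw entries: 3"
    }
}
```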

For example, with double-checked locking, declaring the cache reference volatile ensures that threads always see a fully built, up-to-date map. The code example is as follows:

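// Assumes key (String) and loadData() are defined elsewhere.
// volatile covers only the reference, not the map's contents, so a published map
// is never modified: writers copy it, add the entry and publish the copy.
// Each write copies the whole map, which suits read-heavy caches with few writes.
volatile Map<String, Object> cacheMap = Collections.emptyMap();

Object value = cacheMap.get(key);         // first check, without locking
if (value == null) {
    synchronized (this) {
        value = cacheMap.get(key);        // second check, under the lock
        if (value == null) {
            value = loadData();
            Map<String, Object> copy = new HashMap<>(cacheMap);
            copy.put(key, value);
            cacheMap = copy;              // volatile write publishes the complete copy
        }
    }
}

These are common ways to control concurrency in a Java cache. Different scenarios call for different caching technologies and concurrency control methods, so choose flexibly according to your needs, and keep evaluating and tuning to ensure the program's performance and stability.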

The above is the detailed content of How to control concurrency with Java caching technology. For more information, please follow other related articles on the PHP Chinese website!

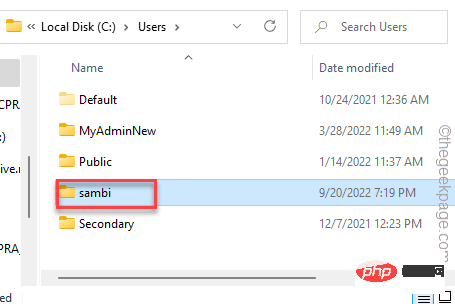

如何修复 Outlook 中缺少的 Microsoft Teams 插件May 11, 2023 am 11:01 AM

如何修复 Outlook 中缺少的 Microsoft Teams 插件May 11, 2023 am 11:01 AM团队在Outlook中有一个非常有用的加载项,当您在使用Outlook2013或更高版本的应用程序时安装以前的应用程序时,它会自动安装。安装这两个应用程序后,只需打开Outlook,您就可以找到预装的加载项。但是,一些用户报告了在Outlook中找不到Team插件的异常情况。修复1–重新注册DLL文件有时需要重新注册特定的Teams加载项dll文件。第1步-找到MICROSOFT.TEAMS.ADDINLOADER.DLL文件1.首先,您必须确保

如何在 Windows 10 中清除地址解析协议 (ARP) 缓存Apr 13, 2023 pm 07:43 PM

如何在 Windows 10 中清除地址解析协议 (ARP) 缓存Apr 13, 2023 pm 07:43 PM地址解析协议 (ARP) 用于将 MAC 地址映射到 IP 地址。网络上的所有主机都有自己的 IP 地址,但网络接口卡 (NIC) 将有 MAC 地址而不是 IP 地址。ARP 是用于将 IP 地址与 MAC 地址相关联的协议。所有这些条目都被收集并放置在 ARP 缓存中。映射的地址存储在缓存中,它们通常不会造成任何损害。但是,如果条目不正确或 ARP 缓存损坏,则会出现连接问题、加载问题或错误。因此,您需要清除 ARP 缓存并修复错误。在本文中,我们将研究如何清除 ARP 缓存的不同方法。方法

0x80070246 Windows更新错误:6修复方法May 20, 2023 pm 06:28 PM

0x80070246 Windows更新错误:6修复方法May 20, 2023 pm 06:28 PM根据几位Windows10和Windows11用户的说法,他们在尝试安装Windows更新时遇到了错误0x80070246。此错误阻止他们升级PC并享受最新功能。值得庆幸的是,在本指南中,我们列出了一些最佳解决方案,可帮助您解决Windows0PC上80070246x11的Windows更新安装错误。我们还将首先讨论可能引发问题的原因。让我们直接进入它。为什么我会收到Windows更新安装错误0x80070246?您可能有多种原因导致您在PC上收到Windows11安装错误0x80070246。

如何在Mac上清除图标缓存?Apr 22, 2023 pm 07:49 PM

如何在Mac上清除图标缓存?Apr 22, 2023 pm 07:49 PM如何在Mac上清除和重置图标缓存警告:因为您将使用终端和rm命令,所以在继续执行任何操作之前,最好使用TimeMachine或您选择的备份方法备份您的Mac。输入错误的命令可能会导致永久性数据丢失,因此请务必使用准确的语法。如果您对命令行不满意,最好完全避免这种情况。启动终端并输入以下命令并按回车键:sudorm-rfv/Library/Caches/com.apple.iconservices.store接下来,输入以下命令并按回车键:sudofind/private/var

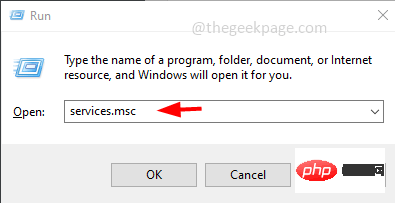

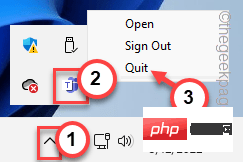

如何修复 Microsoft Teams 错误代码 caa70004 问题Apr 14, 2023 am 09:25 AM

如何修复 Microsoft Teams 错误代码 caa70004 问题Apr 14, 2023 am 09:25 AM尝试在其设备上启动 Microsoft Teams 桌面客户端的用户在空白应用页面中报告了错误代码 caa70004。错误代码说:“我们很抱歉——我们遇到了问题。”以及重新启动 Microsoft Teams 以解决问题的选项。您可以尝试实施许多解决方案并再次加入会议。解决方法——1. 您应该尝试的第一件事是重新启动 Teams 应用程序。只需在错误页面上点击“重新启动”即可。

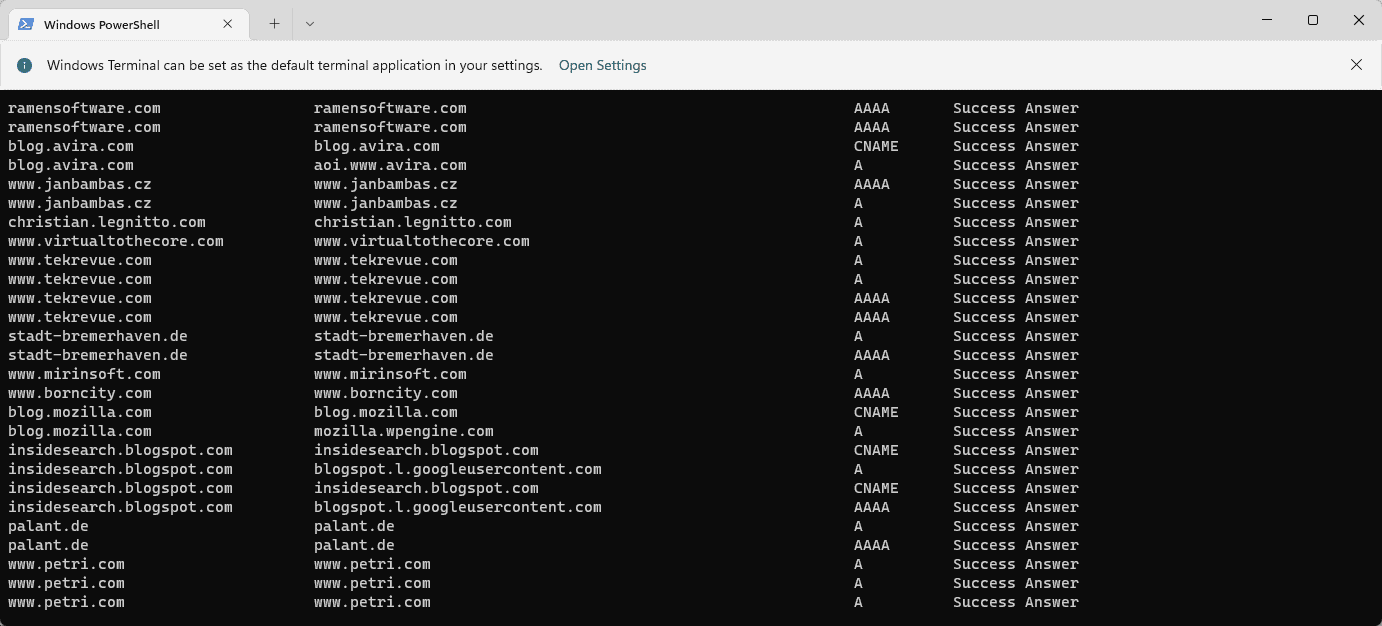

如何在 Windows 11上显示所有缓存的 DNS 条目May 21, 2023 pm 01:01 PM

如何在 Windows 11上显示所有缓存的 DNS 条目May 21, 2023 pm 01:01 PMWindows操作系统使用缓存来存储DNS条目。DNS(域名系统)是用于通信的互联网核心技术。特别是用于查找域名的IP地址。当用户在浏览器中键入域名时,加载站点时执行的首要任务之一是查找其IP地址。该过程需要访问DNS服务器。通常,互联网服务提供商的DNS服务器会自动使用,但管理员可能会切换到其他DNS服务器,因为这些服务器可能更快或提供更好的隐私。如果DNS用于阻止对某些站点的访问,则切换DNS提供商也可能有助于绕过Internet审查。Windows使用DNS解

如何在 Windows 11 上清理缓存:详细的带图片教程Apr 24, 2023 pm 09:37 PM

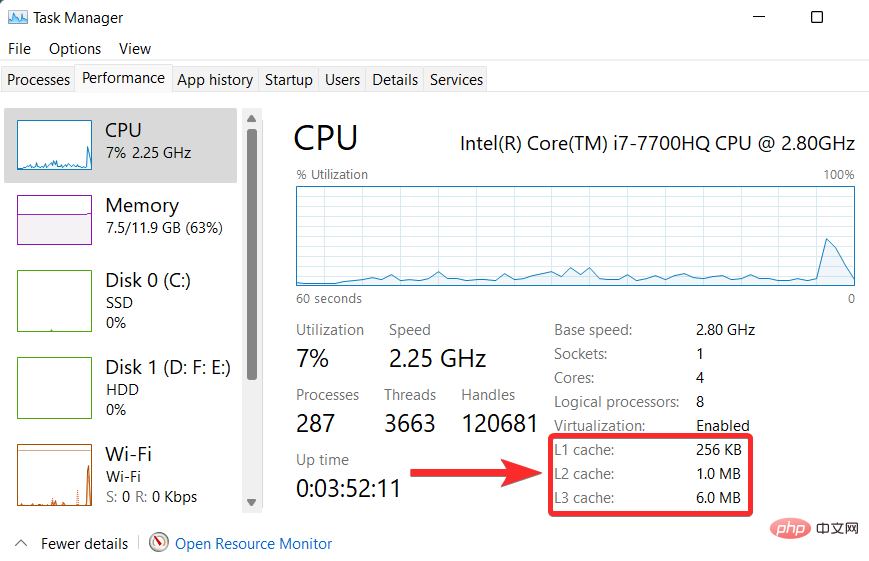

如何在 Windows 11 上清理缓存:详细的带图片教程Apr 24, 2023 pm 09:37 PM什么是缓存?缓存(发音为ka·shay)是一种专门的高速硬件或软件组件,用于存储经常请求的数据和指令,这些数据和指令又可用于更快地加载网站、应用程序、服务和系统的其他部分。缓存使最常访问的数据随时可用。缓存文件与缓存内存不同。缓存文件是指经常需要的文件,如PNG、图标、徽标、着色器等,多个程序可能需要这些文件。这些文件存储在您的物理驱动器空间中,通常是隐藏的。另一方面,高速缓存内存是一种比主内存和/或RAM更快的内存类型。它极大地减少了数据访问时间,因为与RAM相比,它更靠近CPU并且速度

vue的缓存有几种实现方式Dec 22, 2021 pm 06:00 PM

vue的缓存有几种实现方式Dec 22, 2021 pm 06:00 PMvue缓存数据有4种方式:1、利用localStorage,语法“localStorage.setItem(key,value)”;2、利用sessionStorage,语法“sessionStorage.setItem(key,value)”;3、安装并引用storage.js插件,利用该插件进行缓存;4、利用vuex,它是一个专为Vue.js应用程序开发的状态管理模式。

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

Atom editor mac version download

The most popular open source editor

Dreamweaver Mac version

Visual web development tools

Dreamweaver CS6

Visual web development tools

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software