AMD’s “ultimate weapon” is here!

On June 13 (Eastern Time), at its "AMD Data Center and Artificial Intelligence Technology Premiere", AMD announced the MI300X, its most advanced artificial intelligence GPU to date. The chip is designed to speed up generative AI workloads such as ChatGPT and other chatbots, and it can use up to 192GB of memory.

By comparison, Nvidia's H100 chip supports only 120GB of memory, which is widely taken to mean that Nvidia's dominant position in this emerging market may be challenged. The MI300X will begin shipping to select customers later this year.
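To give a rough sense of what the two memory figures quoted above mean for model size, here is a minimal back-of-the-envelope sketch. It assumes weights are stored at 16 bits (2 bytes) per parameter, treats 1 GB as 10^9 bytes, and counts weights only, ignoring activations, caches, and optimizer state. The function name `max_params_billions` is hypothetical and used only for illustration.

```python
def max_params_billions(memory_gb: float, bytes_per_param: int = 2) -> float:
    """Largest weight count, in billions, that fits in memory_gb of memory.

    Assumption: 1 GB = 10**9 bytes and only the weights are counted.
    """
    if memory_gb < 0 or bytes_per_param <= 0:
        raise ValueError("memory_gb must be >= 0 and bytes_per_param > 0")
    # (memory_gb * 1e9 bytes) / bytes_per_param / 1e9 simplifies to this.
    return memory_gb / bytes_per_param


# Memory figures as quoted in the article.
for name, gb in (("MI300X", 192), ("H100 (figure quoted above)", 120)):
    print(f"{name}: about {max_params_billions(gb):.0f}B 16-bit parameters")
```

Real deployments need headroom beyond the weights, so these numbers are upper bounds rather than practical limits.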

Although investors expect AMD to compete with Nvidia in AI chips, analysts have not joined the hype around this powerful new weapon. Instead, they point out soberly that AMD still has a long way to go before it can challenge Nvidia's industry leadership in AI chips, and that this chip alone will not be enough.

Citi chip analyst Chris Danely said in his latest report that AMD's MI300 chip appears to have scored a major design win, but that given its performance limitations and history of failures, he is skeptical about the sustainability of graphics/CPU ICs. "While we expect AMD to continue to gain market share from Intel, its new Genoa products appear to be growing slower than expected," he said.

On the same day, Karl Freund, founder and chief analyst of Cambrian-AI Research LLC, wrote in Forbes that although AMD's newly launched chip has attracted huge interest across the market, the MI300X faces several challenges compared with Nvidia's H100, mainly in the following four areas:

First, Nvidia began full shipping of the H100 today, and the company has by far the largest ecosystem of software and researchers in the AI industry.

Second, although the MI300X offers 192GB of memory, Nvidia will soon catch up on this point and may even overtake it in the same time frame, so this is not a big advantage. The MI300X will also be very expensive and will not have a significant cost advantage over Nvidia's H100.

Third, and this is the real key: the MI300 does not have a Transformer Engine (a library for accelerating Transformer models on Nvidia GPUs) like the H100, which can double the performance of large language models (LLMs). If training a new model takes a year with thousands of Nvidia GPUs, then training on AMD hardware may take another 2-3 years, or require 3 times as many GPUs.
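The trade-off in this point can be sketched with simple scaling arithmetic. The sketch below assumes idealized linear scaling, where throughput is proportional to GPU count, which real clusters only approximate. The function name `scaled_training`, the 1,000-GPU baseline, and the slowdown factor of 3 are illustrative assumptions, not figures from Freund.

```python
import math


def scaled_training(baseline_years: float, baseline_gpus: int, slowdown: float):
    """Return (years at the same GPU count, GPUs needed for the same duration).

    Assumption: perfect linear scaling, so a per-GPU slowdown can be paid
    for either with proportionally more time or proportionally more GPUs.
    """
    if baseline_years <= 0 or baseline_gpus <= 0 or slowdown <= 0:
        raise ValueError("all arguments must be positive")
    years_same_gpus = baseline_years * slowdown
    gpus_same_time = math.ceil(baseline_gpus * slowdown)
    return years_same_gpus, gpus_same_time


# Hypothetical baseline: 1 year on 1,000 GPUs, with hardware 3x slower per GPU.
years, gpus = scaled_training(1.0, 1000, 3.0)
print(f"Same GPU count: {years:.0f} years total ({years - 1.0:.0f} extra)")
print(f"Same duration: {gpus} GPUs")
```

Under these assumptions, a 3x per-GPU slowdown means either two additional years on the same cluster or a cluster three times as large.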

Finally, AMD has not yet disclosed any benchmarks. Performance when training and running LLMs depends on the system design as well as the GPU, so Freund looks forward to seeing some comparisons with industry competitors later this year.

However, Freund added that the MI300X may become an alternative to Nvidia's GH200 Grace Hopper superchip. Companies like OpenAI and Microsoft need such alternatives, and while he suspects AMD will make these companies an offer they can't refuse, he does not expect AMD to take much market share away from Nvidia.

AMD's share price has risen 94% since the beginning of this year. Yesterday, AMD shares closed down 3.61% in U.S. trading, while its peer Nvidia closed up 3.90%, with its market value closing above the US$1 trillion mark for the first time.

In fact, Nvidia is ahead not only because of its chips, but also because of the software tools it has provided to artificial intelligence researchers for more than a decade. Moor Insights & Strategy analyst Anshel Sag said: "Even if AMD is competitive in terms of hardware performance, people are still not convinced that its software solutions can compete with Nvidia."

Expected to drive healthy competition among technology companies

American technology commentator Billy Duberstein pointed out on the 12th that with the popularity of ChatGPT, AI seems to have opened up a new field, and leading companies across many industries have begun competing to capture market share. Whether Nvidia or AMD wins this race, every technology company will benefit.

Duberstein said potential customers are very interested in the MI300. According to a June 8 article in Digital Times that he cited, data center customers are desperately looking for alternatives to Nvidia products. Nvidia currently dominates the high-margin, high-growth market for artificial intelligence GPUs, with 60% to 70% of the AI server market.

Duberstein went on to point out that, given the current high price of Nvidia H100 servers, data center operators would like to see Nvidia face a real competitor, which would help bring down the price of AI chips. This is therefore a huge advantage for AMD and a challenge for Nvidia, and it can lead to good profitability for every market participant.

Last month, Morgan Stanley analyst Joseph Moore adjusted his forecast for AMD's artificial intelligence revenue, saying it could be "several times higher" than initially expected.

Chinese companies scramble for GPUs; the AI industry chain is expected to continue to benefit

Major technology companies around the world are currently competing for a ticket into the AI race. Compared with overseas giants, large Chinese technology companies are buying GPUs with even greater urgency.

According to "LatePost", since the Spring Festival this year, major Chinese Internet companies with cloud computing businesses have placed large orders with Nvidia. ByteDance has ordered more than $1 billion in GPUs from Nvidia this year, and another large company's order has exceeded 1 billion yuan. ByteDance's orders this year alone may be close to Nvidia's total commercial GPU sales in China last year.

Guojin Securities remains optimistic about AMD's new moves and about the AI industry chain. The firm believes that the continued popularity of generative AI has driven strong demand for AI chips. As the best evidence, it cites Nvidia's second-quarter data center guidance, which exceeded expectations, and the significant rise in capacity utilization at TSMC's 4nm, 5nm, and 7nm nodes.

Guojin Securities pointed out that, according to its industry chain research, a leading maker of DSP chips for optical modules said its AI-related business is expected to grow rapidly; a leading CPU/GPU heat sink company reported significant quarter-on-quarter growth in AI GPU business for the second and third quarters; and leading companies said that demand for server PCIe Retimer chips will pick up and gradually increase in the next quarter. Between 2024 and 2025, new AI products are expected to promote the development of the AI industry chain and bring sustained economic benefits.

The above is the detailed content of For big AI models who can't get NVIDIA GPUs, AMD chips are here!. For more information, please follow other related articles on the PHP Chinese website!

修复:Windows 11 中未安装 AMD 显卡驱动程序错误May 03, 2023 am 09:13 AM

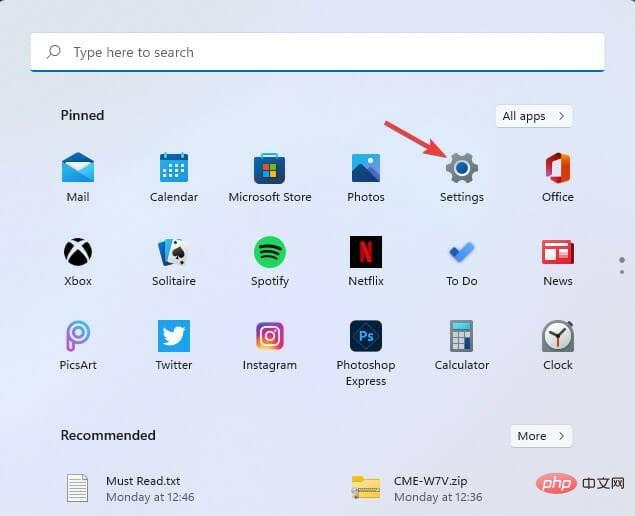

修复:Windows 11 中未安装 AMD 显卡驱动程序错误May 03, 2023 am 09:13 AM在配备AMDGPU的Windows11PC上出现未安装AMD显卡驱动程序错误。这是某些用户在选择AMDRadeon设置上下文菜单选项时发生的错误。弹出此错误消息,而不是打开AMDRadeon设置:未安装AMD显卡驱动程序,或AMD驱动程序无法正常运行。请安装适合您的AMD硬件的AMD驱动程序。该错误消息突出显示了AMD图形驱动程序的问题。这通常意味着PC具有过时、不兼容或损坏的AMD驱动程序。这是您可以修复Windows11中未安装AMD

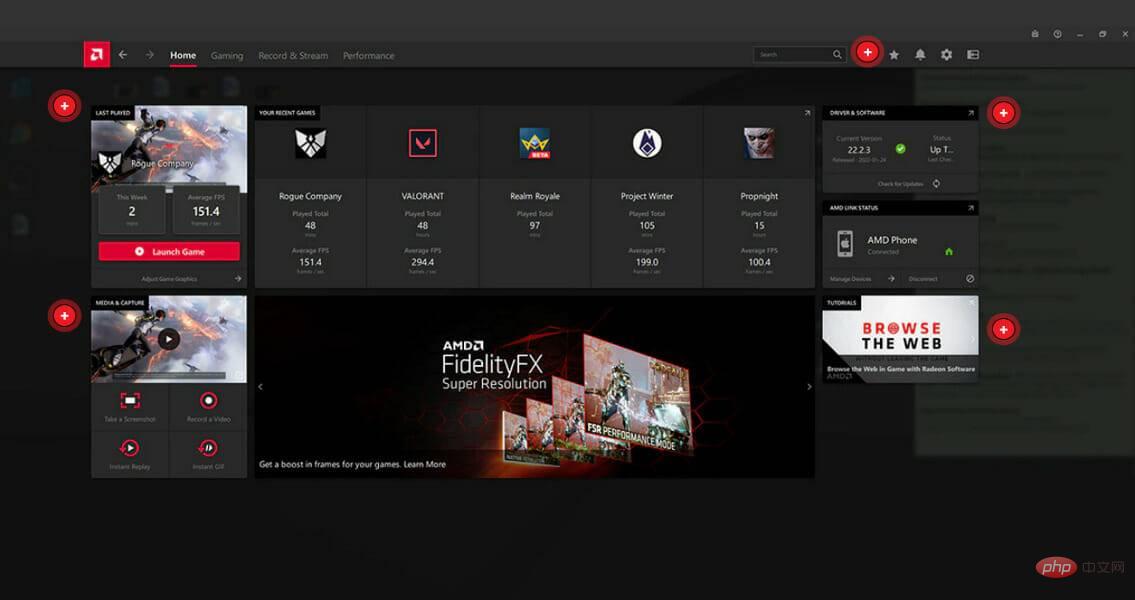

amd radeon software是什么Feb 21, 2023 am 11:58 AM

amd radeon software是什么Feb 21, 2023 am 11:58 AMamd radeon software是显卡的驱动程序,是用于Advanced Micro Devices的显卡和APU的设备驱动程序和实用程序软件包。Radeon Software驱动不只是包含显卡驱动程序,实际上还包含设置及问题报告向导、HDMI音频驱动等,如果不想对显卡做各种设置。除了识别显卡信息,Radeon Software还能识别显示器并提供丰富的显示器设置功能。

AMD RX 7800M XT在3DMark测试中获得高分,可与桌面端RTX 4070媲美Jun 07, 2023 pm 11:45 PM

AMD RX 7800M XT在3DMark测试中获得高分,可与桌面端RTX 4070媲美Jun 07, 2023 pm 11:45 PM6月7日消息,根据最新报道,AMD公司的移动显卡AMDRadeonRX7800MXT首次公布了其在3DMark跑分测试中的成绩,显示该显卡性能将超越上一代的RX6800。据可靠消息源透露,还有其他基于Navi32核心的AMD移动显卡规格被曝光,这也是用于AMDRadeonRX7700/7800系列的Navi32芯片首次亮相。从跑分结果来看,在TimeSpy测试中,AMDRX7800MXT获得了17842的分数,与桌面端的RTX4070显卡的表现相当(IT之家注:RTX4070分数为17736,而

怎样从 Windows 11 下载 AMD 显卡驱动程序May 09, 2023 pm 09:52 PM

怎样从 Windows 11 下载 AMD 显卡驱动程序May 09, 2023 pm 09:52 PM使用图形处理单元(GPU)或显卡在Windows11计算机上显示图形。因此,今天我们将了解如何在Windows11上下载AMD显卡驱动程序。内置的任务管理器软件是确定您的计算机正在使用哪个显卡以及当前使用了多少电量的最简单、最准确的方法。跟随我们将首先看看如果您从PC中删除AMD软件会发生什么,然后跳转到您可以在Windows11设备上下载图形驱动程序的方法列表。如果我删除AMD软件会怎样?AMDRadeon设置是主要的驱动程序界面,允许用户更改图形驱动程序的许多

由于 fTPM 错误,AMD PC 在 Windows 10 和 11 上出现卡顿,临时解决方法May 13, 2023 pm 07:04 PM

由于 fTPM 错误,AMD PC 在 Windows 10 和 11 上出现卡顿,临时解决方法May 13, 2023 pm 07:04 PM一两个月以来,AMD的Ryzen处理器被发现在启用固件TPM(fTPM)的Windows10和11系统上卡顿(通过Reddit)或冻结。今天,AMD在确定导致这些问题的原因后发布了一份公告。据该公司称,挂起和卡顿是由串行外设接口(SPI)闪存ROM内的扩展内存事务操作引起的。AMD已确定,选定的AMDRyzen™系统配置可能会间歇性地在位于主板上的SPI闪存(“SPIROM”)中执行与fTPM相关的扩展内存事务,这可能导致系统交互性或响应性暂时暂

AMD发布Ryzen 7000系列新成员:Ryzen 5 7500F处理器Jul 20, 2023 pm 12:01 PM

AMD发布Ryzen 7000系列新成员:Ryzen 5 7500F处理器Jul 20, 2023 pm 12:01 PM7月11日消息,据知情人士透露,AMD公司计划在中国市场推出一款全新处理器型号,名为AMDRyzen57500F。该处理器被认为是Ryzen7000系列中的一员,相较于Ryzen57600,7500F不包含集成的核显。这一消息显示,AMD将首先在中国发布Ryzen57500F处理器,并且暂无计划将其引入美国市场。AMDRyzen57500F处理器采用了Ryzen7000系列的制造工艺,而非像Ryzen57600一样采用了APU芯片。据了解,该处理器将于本月底面向中国市场的零售商和电商平台发布,并

突破性的AMD原型处理器亮相:锐龙9 5900X3D震撼亮相Jun 20, 2023 pm 03:55 PM

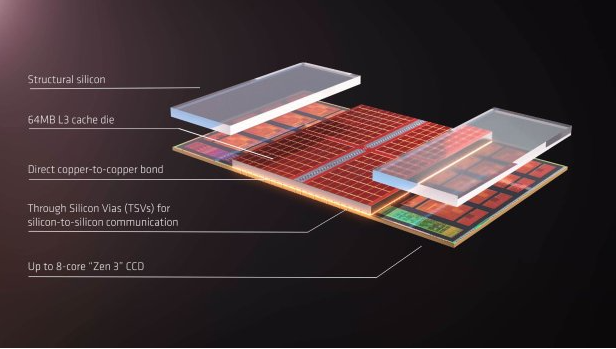

突破性的AMD原型处理器亮相:锐龙9 5900X3D震撼亮相Jun 20, 2023 pm 03:55 PM6月20日消息,近期,AMD在其奥斯汀总部向少数媒体展示了一款令人惊叹的原型产品,名为“锐龙95900X3D”。这款处理器采用了最新的3DV-Cache缓存技术,为AMD的锐龙产品线带来了显著的性能提升。据了解,这款“锐龙95900X3D”处理器集成了12个Zen4核心和128MB的3D缓存。在Windows任务管理器中,该处理器显示出192MB的三级缓存(128MB3D缓存+64MB原生缓存),以及6MB的二级缓存和768KB的一级缓存,总共达到了198.75M

2022 年 12 月星期二补丁日触发 AMD 锐龙 PC 上的冻结问题Apr 13, 2023 pm 07:28 PM

2022 年 12 月星期二补丁日触发 AMD 锐龙 PC 上的冻结问题Apr 13, 2023 pm 07:28 PM以防万一您还不知道,11 月的周二补丁日更新修复了与大多数 AMD 处理器上的 Spectre 变体 2 相关的安全漏洞。话虽如此,12 月的下一次跟进,即 2022 年的最后一次跟进,显然在配备 AMD CPU 的系统上并不是最好的。为什么?好吧,因为一些用户报告了其AMD Ryzen系统上的冻结问题。这似乎发生在带有KB11更新的Windows 11 22H2上5021255。Windows 11 版本 22H2 上出现问题老实说,这不是微软第一次或最后一次使用旨在实际改进它的补丁来破坏系统
