Microsoft provides GPT large language models to the US government: how is security ensured?

The craze for generative artificial intelligence has swept across the U.S. federal government. Microsoft announced the launch of the Azure OpenAI service, allowing Azure government customers to access GPT-3, GPT-4, and Embeddings.

Government agencies will have access to ChatGPT use cases through the service without sacrificing "the rigorous security and compliance standards they need to meet government requirements for sensitive data," Microsoft said in a statement.

Microsoft says it has developed an architecture that allows government customers to "securely access large language models in commercial environments from Azure Government." According to Microsoft, the service can be accessed through the Python SDK, REST APIs, or Azure AI Studio, all without exposing government data to the public internet.
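As a rough illustration of the REST access mentioned above, the following sketch builds (but does not send) a chat completion request of the kind the Azure OpenAI service accepts. The resource name, deployment name, API key, and prompt are hypothetical placeholders, and the endpoint pattern is the one used by the commercial Azure OpenAI service; an actual Azure Government setup may use different hostnames and network routing.

```python
import json
import urllib.request


def build_chat_request(resource, deployment, api_key, prompt,
                       api_version="2023-05-15"):
    """Build (but do not send) an Azure OpenAI chat completion request.

    All argument values are caller-supplied placeholders; nothing here
    is specific to Azure Government.
    """
    # Assumed commercial endpoint pattern: one hostname per Azure OpenAI
    # resource, one URL path per model deployment.
    url = (
        f"https://{resource}.openai.azure.com/openai/deployments/"
        f"{deployment}/chat/completions?api-version={api_version}"
    )
    body = json.dumps({"messages": [{"role": "user", "content": prompt}]})
    return urllib.request.Request(
        url,
        data=body.encode("utf-8"),
        # Key-based authentication uses the "api-key" request header.
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


# Hypothetical values, for illustration only.
req = build_chat_request(
    "my-resource", "my-gpt4-deployment", "<key>", "Summarize this memo."
)
print(req.full_url)
```

Sending the request (for example with `urllib.request.urlopen(req)`) is deliberately left out, since whether traffic stays off the public internet depends on the network configuration rather than on the client code.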

Microsoft promises: "Only queries submitted to the Azure OpenAI service will be transferred to the Azure OpenAI model in the commercial environment." It adds: "Azure Government peers directly with the Microsoft Azure commercial network and does not peer directly with the public internet or the Microsoft enterprise network."

Microsoft reports that it encrypts all Azure traffic using the IEEE 802.1AE (MACsec) network security standard, and that all traffic stays on its global backbone, which consists of more than 250,000 kilometers of optical fiber and submarine cable systems.

Azure OpenAI Service for government is now generally available to approved enterprise and government customers.

How confidential can government use of ChatGPT be?

Microsoft has long sought to win the trust of the U.S. government, but it has also made mistakes.

It was reported that more than a terabyte of sensitive government military documents had been exposed on the public internet, and the Department of Defense and Microsoft blamed each other for the incident.

OpenAI, the Microsoft-backed creator of ChatGPT, also has a less than satisfactory security record. In March, a bug in an open source library exposed some users' chat history. Since then, a number of high-profile companies, including Apple, Amazon, and several banks, have banned internal use of ChatGPT over concerns that it could expose confidential internal information.

Britain's spy agency GCHQ has even warned of the risk. So, is the U.S. government doing the right thing by trusting Microsoft with its secrets, even if those secrets apparently won't be transferred across an untrusted network?

Microsoft says it won't specifically use government data to train OpenAI models, so top-secret data is unlikely to leak in replies to other users. But that does not mean the data is safe by default. In its announcement, Microsoft indirectly acknowledged that some data will still be logged when government users use OpenAI models.

Microsoft said: "Microsoft allows customers who meet additional limited access eligibility criteria and attest to specific use cases to apply to modify the Azure OpenAI content management capabilities."

It added: "If Microsoft approves a customer's request to modify data logging, any questions and responses associated with the approved Azure subscription will not be stored, and data logging in Azure commercial will be set to off." This means that unless a government agency meets certain criteria, its questions and the replies (the text returned by the AI model) will be retained.

微软深化与 Meta 的 AI 及 PyTorch 合作Apr 09, 2023 pm 05:21 PM

微软深化与 Meta 的 AI 及 PyTorch 合作Apr 09, 2023 pm 05:21 PM微软宣布进一步扩展和 Meta 的 AI 合作伙伴关系,Meta 已选择 Azure 作为战略性云供应商,以帮助加速 AI 研发。在 2017 年,微软和 Meta(彼时还被称为 Facebook)共同发起了 ONNX(即 Open Neural Network Exchange),一个开放的深度学习开发工具生态系统,旨在让开发者能够在不同的 AI 框架之间移动深度学习模型。2018 年,微软宣布开源了 ONNX Runtime —— ONNX 格式模型的推理引擎。作为此次深化合作的一部分,Me

微软提出自动化神经网络训练剪枝框架OTO,一站式获得高性能轻量化模型Apr 04, 2023 pm 12:50 PM

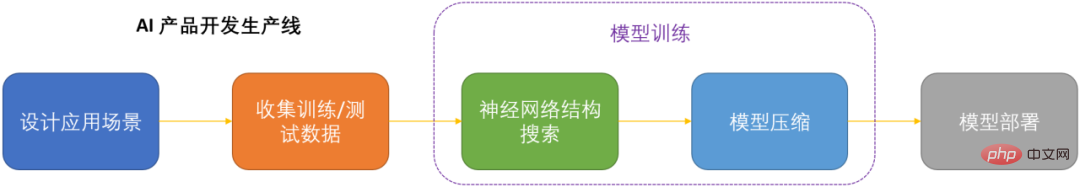

微软提出自动化神经网络训练剪枝框架OTO,一站式获得高性能轻量化模型Apr 04, 2023 pm 12:50 PMOTO 是业内首个自动化、一站式、用户友好且通用的神经网络训练与结构压缩框架。 在人工智能时代,如何部署和维护神经网络是产品化的关键问题考虑到节省运算成本,同时尽可能小地损失模型性能,压缩神经网络成为了 DNN 产品化的关键之一。DNN 压缩通常来说有三种方式,剪枝,知识蒸馏和量化。剪枝旨在识别并去除冗余结构,给 DNN 瘦身的同时尽可能地保持模型性能,是最为通用且有效的压缩方法。三种方法通常来讲可以相辅相成,共同作用来达到最佳的压缩效果。然而现存的剪枝方法大都只针对特定模型,特定任务,且需要很

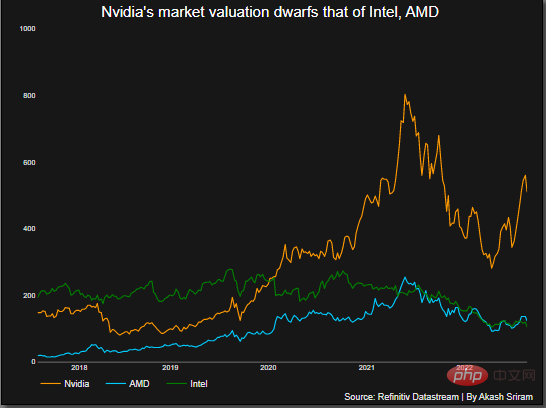

超5800亿美元!微软谷歌神仙打架,让英伟达市值飙升,约为5个英特尔Apr 11, 2023 pm 04:31 PM

超5800亿美元!微软谷歌神仙打架,让英伟达市值飙升,约为5个英特尔Apr 11, 2023 pm 04:31 PMChatGPT在手,有问必答。你可知,与它每次对话的计算成本简直让人泪目。此前,分析师称ChatGPT回复一次,需要2美分。要知道,人工智能聊天机器人所需的算力背后烧的可是GPU。这恰恰让像英伟达这样的芯片公司豪赚了一把。2月23日,英伟达股价飙升,使其市值增加了700多亿美元,总市值超5800亿美元,大约是英特尔的5倍。在英伟达之外,AMD可以称得上是图形处理器行业的第二大厂商,市场份额约为20%。而英特尔持有不到1%的市场份额。ChatGPT在跑,英伟达在赚随着ChatGPT解锁潜在的应用案

竞争加剧,微软和Adobe发布AI图像生成工具Apr 11, 2023 pm 10:55 PM

竞争加剧,微软和Adobe发布AI图像生成工具Apr 11, 2023 pm 10:55 PM随着OpenAI DALL-E和Midjourney的推出,AI艺术生成器开始变得越来越流行,它们接受文本提示并将其变成美丽的、通常是超现实的艺术品——如今,有两家大企业加入了这一行列。微软宣布,将通过Bing Image Creator把由DALL-E模型提供支持的AI图像生成功能引入Bing搜索引擎和Edge浏览器。创意软件开发商Adobe也透露,将通过名为Firefly的AI艺术生成产品来增强自己的工具。对于有权访问Bing聊天预览的用户来说,这一新的AI图像生成器已经可以在“创意”模式下

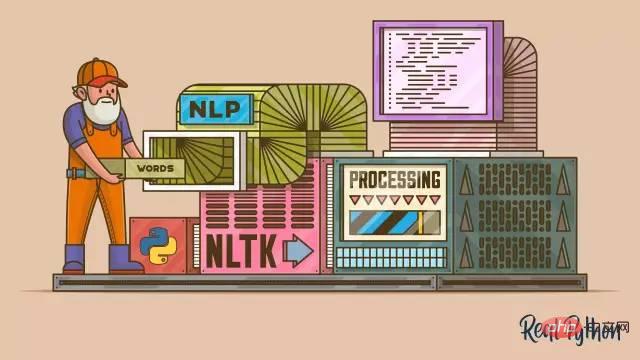

NLP模型读不懂人话?微软AdaTest挑错效率高五倍Apr 09, 2023 pm 04:11 PM

NLP模型读不懂人话?微软AdaTest挑错效率高五倍Apr 09, 2023 pm 04:11 PM自然语言处理(NLP)模型读不懂人话、将文本理解为相反的意思,是业界顽疾了。 现在微软表示,开发出解决此弊的方法。微软开发AdaTest方法来测试NLP模型 可作为跨越各种应用基础的大型模型,或称平台模型的进展已经大大改善了AI处理自然语言的能力。但自然语言处理(NLP)模型仍然远不完美,有时会以令人尴尬的方式暴露缺陷。 例如有个顶级的商用模型,将葡萄牙语中的「我不推荐这道菜」翻译成英语中的「我非常推荐这道菜」。 这些失败之所以继续存在,部分原因是寻找和修复NLP模型中的错误很难,以至于严重的

微软推出AI工具Security Copilot,帮助网络安全人员应对威胁Apr 04, 2023 pm 02:50 PM

微软推出AI工具Security Copilot,帮助网络安全人员应对威胁Apr 04, 2023 pm 02:50 PM近日微软推出了Security Copilot,这款新工具旨在通过AI助手简化网络安全人员的工作,帮助他们应对安全威胁。 网络安全人员往往要管理很多工具,和来自多个来源的海量数据。近日微软宣布推出了Security Copilot,这款新工具旨在通过AI助手简化网络安全人员的工作,帮助他们应对安全威胁。Copilot利用基于OpenAI的GPT-4最新技术,让网络安全人员能够就当前影响环境的安全问题提问并获得答案,甚至可以直接整合公司内部的知识,为团队提供有用的信息,从现有信息中进行学习,将当前

微软出品的Python小白神器,真香!Apr 12, 2023 am 10:55 AM

微软出品的Python小白神器,真香!Apr 12, 2023 am 10:55 AM大家好,我是菜鸟哥!最近逛G网,发现微软开源了一个项目叫「playwright-python」,作为一个兴起项目。Playwright 是针对 Python 语言的纯自动化工具,它可以通过单个API自动执行 Chromium,Firefox 和 WebKit 浏览器,连代码都不用写,就能实现自动化功能。虽然测试工具 selenium 具有完备的文档,但是其学习成本让一众小白们望而却步,对比之下 playwright-python 简直是小白们的神器。Playwright真的适用于Python吗?

微软公布必应整合ChatGPT的初步测试结果:搜索结果获71%测试者认可Apr 12, 2023 pm 05:37 PM

微软公布必应整合ChatGPT的初步测试结果:搜索结果获71%测试者认可Apr 12, 2023 pm 05:37 PM2月16日消息,微软在旗下必应搜索引擎和Edge浏览器中整合人工智能聊天机器人功能的举措成效初显,71%的测试者认可人工智能优化后的必应搜索结果。微软对来自169个国家的用户进行了为期一周的必应搜索引擎人工智能新功能测试,并于当地时间周三公布了初步结果。微软表示,71%的测试者认可人工智能优化后的搜索结果。人工智能聊天机器人功能也被证明很受欢迎,可以加深用户参与度。该公司表示,人们不仅出于特定目的查询内容,还使用具有人工智能聊天功能的必应搜索“以更
