360 releases large visual model; Zhou Hongyi: The combination of large models and the Internet of Things is the next trend

"The original AIoT was only vertical AI, not general AI. AIoT empowered by large models is 'real AI'." On May 31, the Smart Life Group of 360 (601360.SH, hereinafter "360") held a launch event for its large visual model and new AI hardware products. Zhou Hongyi, founder of 360 Group, attended and delivered a speech on how large models are opening a new era of AIoT.

Zhou Hongyi said that past artificial intelligence was weak AI, and the intelligent hardware built on it lacked real intelligence. With the emergence of large models, computers can truly understand the world for the first time, which can give AIoT real intelligence. He said the emergence of large models marks the arrival of general artificial intelligence: AI has completed its evolution from the perception layer to the cognitive layer. This is not only a disruptive revolution for traditional AI, but can also drive development in fields such as autonomous driving, protein computing, and robot control.

"Large models will bring about a new industrial revolution." Zhou Hongyi believes that all software, apps, websites, and industries are worth reshaping with large models, and that smart hardware is essentially an app in hardware form. Judging from the development trend of large models, multimodality is the inevitable path forward; the most important change in GPT-4 is its multimodal processing capability. Zhou Hongyi therefore predicted that the combination of multimodal large models and the Internet of Things will be the next trend.

He said that combining multimodal technology with intelligent hardware is the general trend. In the future, large models will become the brains of the Internet of Things, and IoT devices will serve as their sensing ends, giving large models "eyes and ears." Large models may also control IoT devices, effectively growing a "mouth, hands, and feet" and gaining the ability to act, ultimately moving from perception to cognition and from understanding to execution.

At the event, Zhou Hongyi announced the release of the "360 Intelligent Brain-Visual Large Model." He said that the large language model is the foundation for building a large visual model, and that the core of improving multimodal capability lies in the cognitive, reasoning, and decision-making capabilities of the large language model. At the same time, the large visual model is an important component of the "360 Intelligent Brain," enabling it to understand images, video, and sound in the future.

It is understood that, building on visual perception capabilities, 360 integrated its "360 Intelligent Brain" large model, which has hundreds of billions of parameters, cleaned and trained it on billions of Internet image-text data, and fine-tuned it on millions of industry data samples from security scenarios. The result is a specialized visual and multimodal large model: the 360 Intelligent Brain-Visual Large Model.

"At present, the capabilities of large models are mainly reflected at the software layer. Once large models are connected to intelligent hardware, their capabilities will move from the digital world into the physical world," Zhou Hongyi said.

The above is the detailed content of 360 releases large visual model Zhou Hongyi: The combination of large models and the Internet of Things is the next trend. For more information, please follow other related articles on the PHP Chinese website!

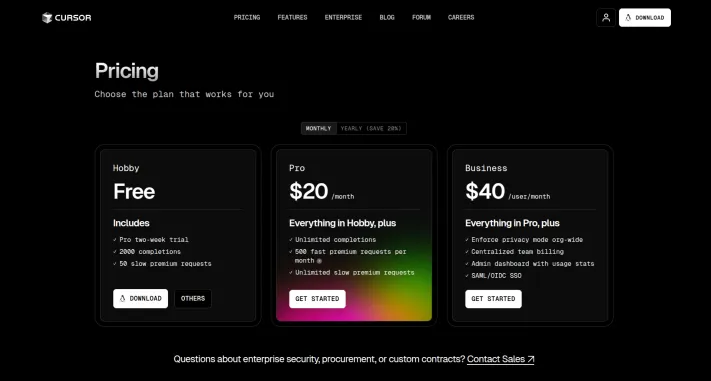

I Tried Vibe Coding with Cursor AI and It's Amazing!Mar 20, 2025 pm 03:34 PM

I Tried Vibe Coding with Cursor AI and It's Amazing!Mar 20, 2025 pm 03:34 PMVibe coding is reshaping the world of software development by letting us create applications using natural language instead of endless lines of code. Inspired by visionaries like Andrej Karpathy, this innovative approach lets dev

How to Use DALL-E 3: Tips, Examples, and FeaturesMar 09, 2025 pm 01:00 PM

How to Use DALL-E 3: Tips, Examples, and FeaturesMar 09, 2025 pm 01:00 PMDALL-E 3: A Generative AI Image Creation Tool Generative AI is revolutionizing content creation, and DALL-E 3, OpenAI's latest image generation model, is at the forefront. Released in October 2023, it builds upon its predecessors, DALL-E and DALL-E 2

Top 5 GenAI Launches of February 2025: GPT-4.5, Grok-3 & More!Mar 22, 2025 am 10:58 AM

Top 5 GenAI Launches of February 2025: GPT-4.5, Grok-3 & More!Mar 22, 2025 am 10:58 AMFebruary 2025 has been yet another game-changing month for generative AI, bringing us some of the most anticipated model upgrades and groundbreaking new features. From xAI’s Grok 3 and Anthropic’s Claude 3.7 Sonnet, to OpenAI’s G

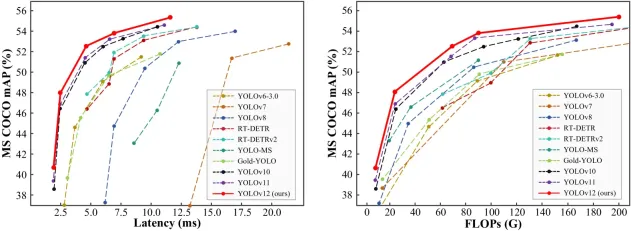

How to Use YOLO v12 for Object Detection?Mar 22, 2025 am 11:07 AM

How to Use YOLO v12 for Object Detection?Mar 22, 2025 am 11:07 AMYOLO (You Only Look Once) has been a leading real-time object detection framework, with each iteration improving upon the previous versions. The latest version YOLO v12 introduces advancements that significantly enhance accuracy

Elon Musk & Sam Altman Clash over $500 Billion Stargate ProjectMar 08, 2025 am 11:15 AM

Elon Musk & Sam Altman Clash over $500 Billion Stargate ProjectMar 08, 2025 am 11:15 AMThe $500 billion Stargate AI project, backed by tech giants like OpenAI, SoftBank, Oracle, and Nvidia, and supported by the U.S. government, aims to solidify American AI leadership. This ambitious undertaking promises a future shaped by AI advanceme

Sora vs Veo 2: Which One Creates More Realistic Videos?Mar 10, 2025 pm 12:22 PM

Sora vs Veo 2: Which One Creates More Realistic Videos?Mar 10, 2025 pm 12:22 PMGoogle's Veo 2 and OpenAI's Sora: Which AI video generator reigns supreme? Both platforms generate impressive AI videos, but their strengths lie in different areas. This comparison, using various prompts, reveals which tool best suits your needs. T

Google's GenCast: Weather Forecasting With GenCast Mini DemoMar 16, 2025 pm 01:46 PM

Google's GenCast: Weather Forecasting With GenCast Mini DemoMar 16, 2025 pm 01:46 PMGoogle DeepMind's GenCast: A Revolutionary AI for Weather Forecasting Weather forecasting has undergone a dramatic transformation, moving from rudimentary observations to sophisticated AI-powered predictions. Google DeepMind's GenCast, a groundbreak

Which AI is better than ChatGPT?Mar 18, 2025 pm 06:05 PM

Which AI is better than ChatGPT?Mar 18, 2025 pm 06:05 PMThe article discusses AI models surpassing ChatGPT, like LaMDA, LLaMA, and Grok, highlighting their advantages in accuracy, understanding, and industry impact.(159 characters)

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Dreamweaver Mac version

Visual web development tools