# Empty Bucket

We start with the simplest rate-limiting configuration:

```nginx
limit_req_zone $binary_remote_addr zone=ip_limit:10m rate=10r/s;

server {
    location /login/ {
        limit_req zone=ip_limit;
        proxy_pass http://login_upstream;
    }
}
```

- `$binary_remote_addr`: limit requests per client IP address;
- `zone=ip_limit:10m`: the rate-limiting rule is named `ip_limit` and may use 10 MB of shared memory to record the limiting state of each IP;
- `rate=10r/s`: the limit is 10 requests per second;
- `location /login/`: apply the limit to the login endpoint.

The limit is 10 requests per second. If 10 requests arrive at an idle nginx at the same time, will they all be executed?

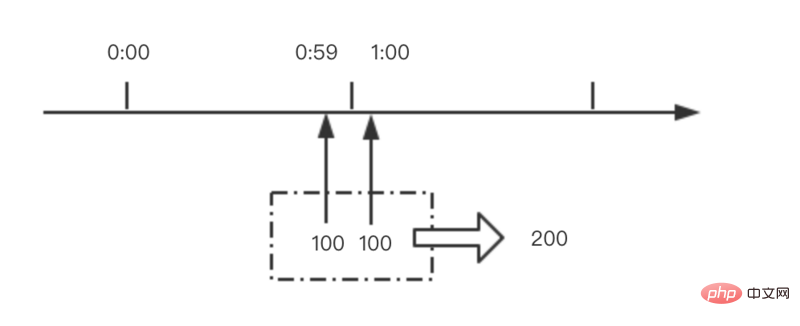

A leaky bucket lets requests out at a uniform rate. What does a constant rate of 10r/s mean? One request leaks out every 100 ms.

In this configuration the bucket has zero capacity (no `burst` is set), so every request that cannot leak out immediately is rejected.

So if 10 requests arrive at the same time, only one request can be executed, and the others will be rejected.
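A minimal Python sketch makes the arithmetic concrete. It is a simplified single-IP model, not nginx's implementation, and the function name `empty_bucket` is hypothetical: `rate=10r/s` becomes one request per 1000 / 10 = 100 ms, and because the bucket has no room, a request is passed only if at least 100 ms have elapsed since the last request that was passed. Timestamps are in milliseconds and are assumed to arrive in order.

```python
def empty_bucket(arrivals_ms, rate):
    """Simplified model of `limit_req` with a rate but no burst, for one client IP.

    Returns one boolean per request: True if it is passed, False if rejected.
    """
    interval = 1000 / rate  # 10r/s -> one request may leak out every 100 ms
    next_free = None        # earliest time (ms) at which the next request may pass
    passed = []
    for now in arrivals_ms:
        if next_free is None or now >= next_free:
            passed.append(True)
            next_free = now + interval
        else:
            # The bucket has no room, so the request cannot wait: reject it.
            passed.append(False)
    return passed


# Ten requests arriving at an idle nginx at the same instant:
print(empty_bucket([0] * 10, rate=10))
# -> [True, False, False, False, False, False, False, False, False, False]

# Requests 50 ms apart: only every other one gets through.
print(empty_bucket([0, 50, 100, 150, 200], rate=10))
# -> [True, False, True, False, True]
```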

This is not very friendly: in most business scenarios we want all 10 requests to be executed.

# burst

Let's change the configuration to solve the problem from the previous section:

```nginx
limit_req_zone $binary_remote_addr zone=ip_limit:10m rate=10r/s;

server {
    location /login/ {
        limit_req zone=ip_limit burst=12;
        proxy_pass http://login_upstream;
    }
}
```

`burst=12`: the size of the leaky bucket is set to 12.

Although it is called a leaky bucket, it behaves like a FIFO queue that temporarily holds requests that cannot be executed yet.

Requests still leak out at one every 100 ms, but concurrent requests that cannot be executed right away are held in the queue first. New requests are rejected only when the queue is full.

In this way the leaky bucket not only limits the request rate but also smooths traffic, shaving peaks and filling valleys.

With this configuration, if 10 requests arrive at the same time, they are all executed in sequence, one every 100 ms, so the last one waits 900 ms.
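The queueing behaviour can be modelled the same way. This is again a simplified single-IP sketch rather than nginx's code, and `limit_req_burst` is a hypothetical name: each accepted request is scheduled one interval (100 ms) after the previous one, or immediately if the bucket has drained, and a request is rejected when it would have to wait longer than `burst` intervals. In this model the request that finds the bucket empty leaks out at once and takes no queue slot, so up to `burst + 1` simultaneous requests are accepted.

```python
def limit_req_burst(arrivals_ms, rate, burst):
    """Simplified model of `limit_req ... burst=N` (without nodelay) for one client IP.

    Returns one entry per request: None if rejected, otherwise how many
    milliseconds the request waits in the bucket before being proxied.
    """
    interval = 1000 / rate      # one request leaks out every `interval` ms
    last_start = float("-inf")  # when the last accepted request leaks out
    waits = []
    for now in arrivals_ms:
        # FIFO order: start one interval after the previous request,
        # or right away if the bucket has already drained.
        start = max(last_start + interval, float(now))
        wait = start - now
        if wait > burst * interval:
            waits.append(None)  # bucket full: reject, state unchanged
            continue
        last_start = start
        waits.append(wait)
    return waits


# Ten simultaneous requests with rate=10r/s burst=12: all pass, but queued.
print(limit_req_burst([0] * 10, rate=10, burst=12))
# -> [0.0, 100.0, 200.0, 300.0, 400.0, 500.0, 600.0, 700.0, 800.0, 900.0]

# Fourteen simultaneous requests: 13 fit (one leaking + 12 queued), 1 is rejected.
print(limit_req_burst([0] * 14, rate=10, burst=12)[-2:])
# -> [1200.0, None]
```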

Although every request is executed, queuing greatly increases latency, which is still unacceptable in many scenarios.

# nodelay

Let's modify the configuration again to remove the extra latency caused by queuing:

```nginx
limit_req_zone $binary_remote_addr zone=ip_limit:10m rate=10r/s;

server {
    location /login/ {
        limit_req zone=ip_limit burst=12 nodelay;
        proxy_pass http://login_upstream;
    }
}
```

`nodelay`: start executing requests earlier. Previously a request was delayed until it leaked out of the bucket; now it starts executing as soon as it enters the bucket.

A request is either executed immediately or rejected; requests are no longer delayed by rate limiting.

Because requests still leak out of the bucket at a constant rate and the bucket size is fixed, over time at most 10 requests per second are executed on average, so the goal of rate limiting is still achieved.

This has a drawback, though: the rate is limited, but not evenly. With the configuration above, if 12 requests arrive at the same time, all 12 are executed immediately; after that, new requests can only enter the bucket at the leak rate, so one request is executed every 100 ms. Once the bucket has drained after a quiet period, another 12 concurrent requests may be executed at the same time.
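Here is the same single-IP model with `nodelay` (a simplified sketch, not nginx's code; `limit_req_nodelay` is a hypothetical name). Bucket slots are accounted exactly as with plain `burst`, but an accepted request is proxied at once instead of waiting for its turn. The usage lines show both effects described above: a burst passes all at once (13 requests here, since in this model the request that arrives at an empty bucket takes no slot), and afterwards a request is admitted only when a slot has leaked out, one every 100 ms.

```python
def limit_req_nodelay(arrivals_ms, rate, burst):
    """Simplified model of `limit_req ... burst=N nodelay` for one client IP.

    Returns one boolean per request: True if proxied immediately, False if
    rejected. Accepted requests still occupy a slot until it leaks out.
    """
    interval = 1000 / rate
    last_start = float("-inf")  # when the last accepted request leaks out of the bucket
    passed = []
    for now in arrivals_ms:
        start = max(last_start + interval, float(now))
        if start - now > burst * interval:
            passed.append(False)  # no free slot: reject
        else:
            last_start = start    # take a slot, but do not make the request wait
            passed.append(True)
    return passed


# 14 requests at once, then one request every 50 ms.
arrivals = [0] * 14 + [50, 100, 150, 200]
result = limit_req_nodelay(arrivals, rate=10, burst=12)
print(sum(result[:14]), result[14:])
# -> 13 [False, True, False, True]
```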

In most cases this uneven rate is not a big problem. However, nginx also provides a parameter that controls how many requests in the bucket are executed without delay: `delay`.

```nginx
limit_req_zone $binary_remote_addr zone=ip_limit:10m rate=10r/s;

server {
    location /login/ {
        limit_req zone=ip_limit burst=12 delay=4;
        proxy_pass http://login_upstream;
    }
}
```

`delay=4`: delaying starts from the 5th request in the bucket; the first 4 queued requests are executed without waiting.

By tuning the `delay` parameter, you can adjust how many requests may be executed concurrently and make the request rate more even. Limiting this number is still necessary for some resource-intensive services.
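Finally, a sketch of `delay` in the same simplified single-IP model (not nginx's code; `limit_req_delay` is a hypothetical name). A request's backlog is how long it would wait with plain `burst`; with `delay=D`, the first D intervals of backlog are forgiven, so the first D queued requests pass immediately and later ones wait only for the remainder.

```python
def limit_req_delay(arrivals_ms, rate, burst, delay):
    """Simplified model of `limit_req ... burst=B delay=D` for one client IP.

    Returns None for a rejected request, otherwise its wait in milliseconds.
    The first `delay` requests queued in the bucket pass without waiting;
    later ones wait as if the bucket were `delay` slots shorter.
    """
    interval = 1000 / rate
    last_start = float("-inf")  # when the last accepted request leaks out
    waits = []
    for now in arrivals_ms:
        start = max(last_start + interval, float(now))
        backlog = start - now          # wait this request would have with plain burst
        if backlog > burst * interval:
            waits.append(None)         # bucket full: reject
            continue
        last_start = start
        # Backlog within the first `delay` slots is not waited for.
        waits.append(max(backlog - delay * interval, 0.0))
    return waits


print(limit_req_delay([0] * 10, rate=10, burst=12, delay=4))
# -> [0.0, 0.0, 0.0, 0.0, 0.0, 100.0, 200.0, 300.0, 400.0, 500.0]
```

In this model, `delay=0` reproduces the plain `burst` behaviour and `delay` equal to `burst` reproduces `nodelay`, which is one way to read `delay` as a dial between the two.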

The above is the detailed content of How to configure Nginx current limiting. For more information, please follow other related articles on the PHP Chinese website!

内存飙升!记一次nginx拦截爬虫Mar 30, 2023 pm 04:35 PM

内存飙升!记一次nginx拦截爬虫Mar 30, 2023 pm 04:35 PM本篇文章给大家带来了关于nginx的相关知识,其中主要介绍了nginx拦截爬虫相关的,感兴趣的朋友下面一起来看一下吧,希望对大家有帮助。

nginx限流模块源码分析May 11, 2023 pm 06:16 PM

nginx限流模块源码分析May 11, 2023 pm 06:16 PM高并发系统有三把利器:缓存、降级和限流;限流的目的是通过对并发访问/请求进行限速来保护系统,一旦达到限制速率则可以拒绝服务(定向到错误页)、排队等待(秒杀)、降级(返回兜底数据或默认数据);高并发系统常见的限流有:限制总并发数(数据库连接池)、限制瞬时并发数(如nginx的limit_conn模块,用来限制瞬时并发连接数)、限制时间窗口内的平均速率(nginx的limit_req模块,用来限制每秒的平均速率);另外还可以根据网络连接数、网络流量、cpu或内存负载等来限流。1.限流算法最简单粗暴的

nginx+rsync+inotify怎么配置实现负载均衡May 11, 2023 pm 03:37 PM

nginx+rsync+inotify怎么配置实现负载均衡May 11, 2023 pm 03:37 PM实验环境前端nginx:ip192.168.6.242,对后端的wordpress网站做反向代理实现复杂均衡后端nginx:ip192.168.6.36,192.168.6.205都部署wordpress,并使用相同的数据库1、在后端的两个wordpress上配置rsync+inotify,两服务器都开启rsync服务,并且通过inotify分别向对方同步数据下面配置192.168.6.205这台服务器vim/etc/rsyncd.confuid=nginxgid=nginxport=873ho

nginx php403错误怎么解决Nov 23, 2022 am 09:59 AM

nginx php403错误怎么解决Nov 23, 2022 am 09:59 AMnginx php403错误的解决办法:1、修改文件权限或开启selinux;2、修改php-fpm.conf,加入需要的文件扩展名;3、修改php.ini内容为“cgi.fix_pathinfo = 0”;4、重启php-fpm即可。

如何解决跨域?常见解决方案浅析Apr 25, 2023 pm 07:57 PM

如何解决跨域?常见解决方案浅析Apr 25, 2023 pm 07:57 PM跨域是开发中经常会遇到的一个场景,也是面试中经常会讨论的一个问题。掌握常见的跨域解决方案及其背后的原理,不仅可以提高我们的开发效率,还能在面试中表现的更加

nginx部署react刷新404怎么办Jan 03, 2023 pm 01:41 PM

nginx部署react刷新404怎么办Jan 03, 2023 pm 01:41 PMnginx部署react刷新404的解决办法:1、修改Nginx配置为“server {listen 80;server_name https://www.xxx.com;location / {root xxx;index index.html index.htm;...}”;2、刷新路由,按当前路径去nginx加载页面即可。

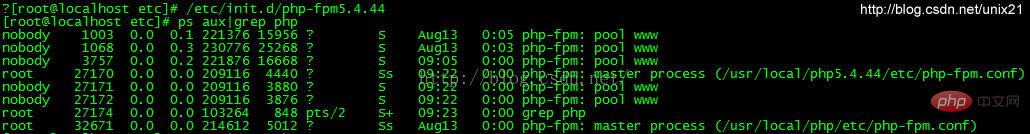

Linux系统下如何为Nginx安装多版本PHPMay 11, 2023 pm 07:34 PM

Linux系统下如何为Nginx安装多版本PHPMay 11, 2023 pm 07:34 PMlinux版本:64位centos6.4nginx版本:nginx1.8.0php版本:php5.5.28&php5.4.44注意假如php5.5是主版本已经安装在/usr/local/php目录下,那么再安装其他版本的php再指定不同安装目录即可。安装php#wgethttp://cn2.php.net/get/php-5.4.44.tar.gz/from/this/mirror#tarzxvfphp-5.4.44.tar.gz#cdphp-5.4.44#./configure--pr

nginx怎么禁止访问phpNov 22, 2022 am 09:52 AM

nginx怎么禁止访问phpNov 22, 2022 am 09:52 AMnginx禁止访问php的方法:1、配置nginx,禁止解析指定目录下的指定程序;2、将“location ~^/images/.*\.(php|php5|sh|pl|py)${deny all...}”语句放置在server标签内即可。

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

SublimeText3 Chinese version

Chinese version, very easy to use

PhpStorm Mac version

The latest (2018.2.1) professional PHP integrated development tool

SublimeText3 Linux new version

SublimeText3 Linux latest version