In recent years, as the volume of information on the web has grown rapidly, web crawler technology has played an increasingly important role in the Internet industry. Go has brought many advantages to crawler development, such as fast execution, strong support for concurrency, and low memory usage. This article introduces several techniques for developing web crawlers in Go to help developers build crawler projects faster and better.

1. How to choose a suitable HTTP client

In Go, there are several HTTP request libraries to choose from, such as net/http, GoRequests, and fasthttp. net/http ships with the standard library, and its performance is already sufficient for simple HTTP requests. For scenarios that require high concurrency and high throughput, you can consider a third-party library such as fasthttp, which is designed for high-throughput workloads, to make better use of Go's goroutines and concurrency features.

2. How to deal with websites' anti-crawler mechanisms

When developing web crawlers, we often run into websites' anti-crawler mechanisms. To avoid having your IP address or API access blocked, you can use techniques such as the following:

1. Set the User-Agent: Set the User-Agent field in the request header so that requests resemble a browser's, making it less likely that the website detects the crawler and blocks it.

2. Add Referer information: Some websites only respond normally when a specific Referer is present, so the corresponding value must be added to the HTTP request header.

3. Dynamic IP proxies: Route requests through a pool of rotating proxy IP addresses so that no single IP gets blocked by the website.

4. Set a request interval: Space requests out appropriately. Requests that are too frequent put a burden on the website and make the crawler easy to block.

3. How to parse HTML pages

During crawling, you often need to extract specific information from HTML pages, which requires an HTML parser. In Go, commonly used HTML parsing tools include goquery and golang.org/x/net/html. goquery is built on top of golang.org/x/net/html and offers jQuery-style selectors for querying HTML elements, which makes it more convenient to use.

4. How to handle Cookie information

Some websites only work normally when requests carry cookies, so a crawler needs to handle cookies properly. In Go, you can use the http.Cookie struct to represent a cookie, and the net/http/cookiejar package to store and manage cookies automatically.

5. How to deduplicate and store data

In web crawler development, data deduplication and storage are essential steps. In Go, you can deduplicate with a data structure such as a map, or use a third-party Bloom filter library, which needs far less memory for very large URL sets at the cost of occasional false positives. For storage, you can write the data to local files or save it in a database.

In short, Go provides many convenient features and tools for web crawler development. Developers can choose the tools and techniques that fit their specific needs to complete crawler projects quickly and efficiently.

The above is the full content of "Web crawler development skills in Go language". For more information, see the related articles on the PHP Chinese website.

提高 Python 代码可读性的五个基本技巧Apr 12, 2023 pm 08:58 PM

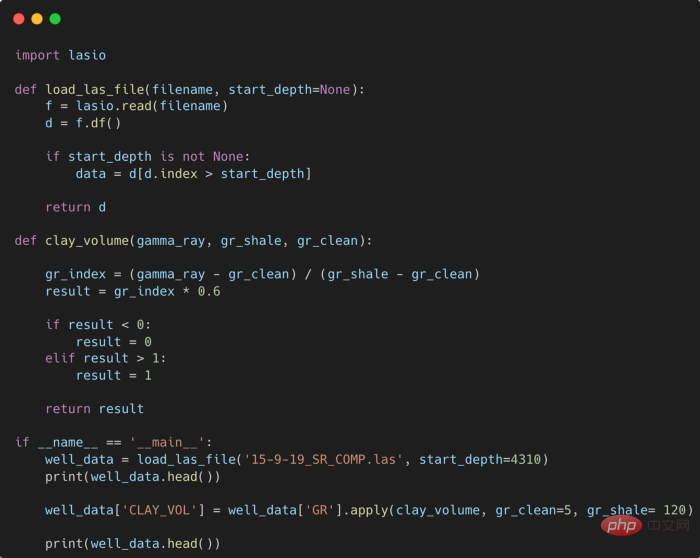

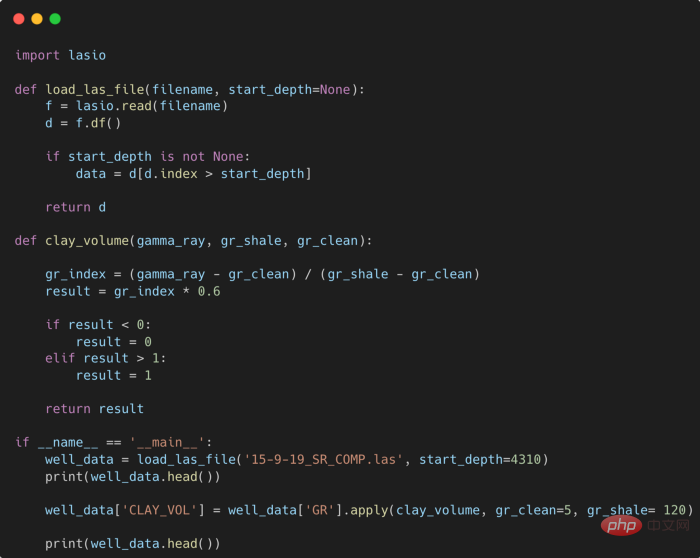

提高 Python 代码可读性的五个基本技巧Apr 12, 2023 pm 08:58 PMPython 中有许多方法可以帮助我们理解代码的内部工作原理,良好的编程习惯,可以使我们的工作事半功倍!例如,我们最终可能会得到看起来很像下图中的代码。虽然不是最糟糕的,但是,我们需要扩展一些事情,例如:load_las_file 函数中的 f 和 d 代表什么?为什么我们要在 clay 函数中检查结果?这些函数需要什么类型?Floats? DataFrames?在本文中,我们将着重讨论如何通过文档、提示输入和正确的变量名称来提高应用程序/脚本的可读性的五个基本技巧。1. Comments我们可

使用PHP开发直播功能的十个技巧May 21, 2023 pm 11:40 PM

使用PHP开发直播功能的十个技巧May 21, 2023 pm 11:40 PM随着直播业务的火爆,越来越多的网站和应用开始加入直播这项功能。PHP作为一种流行的服务器端语言,也可以用来开发高效的直播功能。当然,要实现一个稳定、高效的直播功能需要考虑很多问题。下面列出了使用PHP开发直播功能的十个技巧,帮助你更好地实现直播。选择合适的流媒体服务器PHP开发直播功能,首先需要考虑的就是流媒体服务器的选择。有很多流媒体服务器可以选择,比如常

提高Python代码可读性的五个基本技巧Apr 11, 2023 pm 09:07 PM

提高Python代码可读性的五个基本技巧Apr 11, 2023 pm 09:07 PM译者 | 赵青窕审校 | 孙淑娟你是否经常回头看看6个月前写的代码,想知道这段代码底是怎么回事?或者从别人手上接手项目,并且不知道从哪里开始?这样的情况对开发者来说是比较常见的。Python中有许多方法可以帮助我们理解代码的内部工作方式,因此当您从头来看代码或者写代码时,应该会更容易地从停止的地方继续下去。在此我给大家举个例子,我们可能会得到如下图所示的代码。这还不是最糟糕的,但有一些事情需要我们去确认,例如:在load_las_file函数中f和d代表什么?为什么我们要在clay函数中检查结果

PHP中的多表关联查询技巧May 24, 2023 am 10:01 AM

PHP中的多表关联查询技巧May 24, 2023 am 10:01 AMPHP中的多表关联查询技巧关联查询是数据库查询的重要部分,特别是当你需要展示多个相关数据库表内的数据时。在PHP应用程序中,在使用MySQL等数据库时,多表关联查询经常会用到。多表关联的含义是,将一个表中的数据与另一个或多个表中的数据进行比较,在结果中将那些满足要求的行连接起来。在进行多表关联查询时,需要考虑表之间的关系,并使用合适的关联方法。下面介绍几种多

Python中简单易用的并行加速技巧Apr 12, 2023 pm 02:25 PM

Python中简单易用的并行加速技巧Apr 12, 2023 pm 02:25 PM1.简介我们在日常使用Python进行各种数据计算处理任务时,若想要获得明显的计算加速效果,最简单明了的方式就是想办法将默认运行在单个进程上的任务,扩展到使用多进程或多线程的方式执行。而对于我们这些从事数据分析工作的人员而言,以最简单的方式实现等价的加速运算的效果尤为重要,从而避免将时间过多花费在编写程序上。而今天的文章费老师我就来带大家学习如何利用joblib这个非常简单易用的库中的相关功能,来快速实现并行计算加速效果。2.使用joblib进行并行计算作为一个被广泛使用的第三方Python库(

Go语言中的网络爬虫开发技巧Jun 02, 2023 am 09:21 AM

Go语言中的网络爬虫开发技巧Jun 02, 2023 am 09:21 AM近年来,随着网络信息的急剧增长,网络爬虫技术在互联网行业中扮演着越来越重要的角色。其中,Go语言的出现为网络爬虫的开发带来了诸多优势,如高速度、高并发、低内存占用等。本文将介绍一些Go语言中的网络爬虫开发技巧,帮助开发者更快更好地进行网络爬虫项目开发。一、如何选择合适的HTTP客户端在Go语言中,有多种HTTP请求库可供选择,如net/http、GoRequ

使用一个神器的指令,能迅速让你的GPT拥有智慧!May 09, 2023 am 08:13 AM

使用一个神器的指令,能迅速让你的GPT拥有智慧!May 09, 2023 am 08:13 AM今天给大家分享二个小技巧,第一个可以增加输出的逻辑,让框架逻辑变的更加清晰。先来看看正常情况下GPT的输出,以用户增长分析体系为例:下来我给加一个简单的指令,我们再对比看看效果:是不是效果更好一些?而且逻辑很清晰,当然上面的输出其实不止这些,只是为了举例而已。我们直接让GPT扮演一个资深的Python工程师,帮我写个学习计划吧!提问的时候只需后面加以下这句话即可!let'sthinkstepbystep接下来再看看第二个实用的指令,可以让你的文章更上一个台阶,比如我们让GPT写一个述职报告,这里

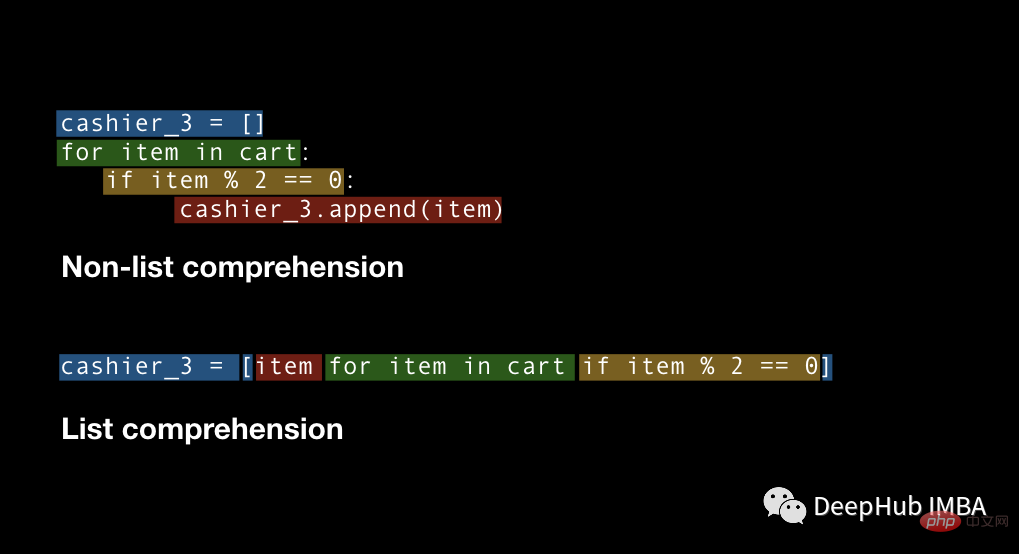

四种Python推导式开发技巧,让你的代码更高效Apr 22, 2023 am 09:40 AM

四种Python推导式开发技巧,让你的代码更高效Apr 22, 2023 am 09:40 AM对于数据科学,Python通常被广泛地用于进行数据的处理和转换,它提供了强大的数据结构处理的函数,使数据处理更加灵活,这里说的“灵活性”是什么意思?这意味着在Python中总是有多种方法来实现相同的结果,我们总是有不同的方法并且需要从中选择易于使用、省时并能更好控制的方法。要掌握所有的这些方法是不可能的。所以这里列出了在处理任何类型的数据时应该知道的4个Python技巧。列表推导式ListComprehension是创建列表的一种优雅且最符合python语言的方法。与for循环和if语句相比,列

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

SublimeText3 Linux new version

SublimeText3 Linux latest version

SecLists

SecLists is the ultimate security tester's companion. It is a collection of various types of lists that are frequently used during security assessments, all in one place. SecLists helps make security testing more efficient and productive by conveniently providing all the lists a security tester might need. List types include usernames, passwords, URLs, fuzzing payloads, sensitive data patterns, web shells, and more. The tester can simply pull this repository onto a new test machine and he will have access to every type of list he needs.

WebStorm Mac version

Useful JavaScript development tools

SublimeText3 English version

Recommended: Win version, supports code prompts!