In March of this year, facing the surging AI wave, the "Silicon Valley Knife King" dropped his usual behind-the-scenes stance, stepped to the front of the stage, and announced the launch of DGX Cloud, putting AI-specific GPUs on the cloud for "rent".

Traditionally, cloud computing companies have been the ones selling computing power. They purchase multiple models of graphics cards and AI accelerators, price them by hardware performance or usage time, and rent them to downstream customers.

In this process, NVIDIA sells GPUs either to cloud computing platforms or directly to AI companies. For example, the first NVIDIA DGX supercomputer was personally delivered to OpenAI by Jensen Huang in 2016. NVIDIA did not sell computing power directly; at best, it could be considered a "shovel seller".

With DGX moving to the cloud, Nvidia still has to host it on cloud platforms, but in essence it is crossing a boundary into their business.

Why does NVIDIA, which has always been behind the scenes, step to the front of the stage this time?

NVIDIA is undoubtedly the biggest beneficiary of this AI wave, arguably bar none.

The prices of GPU chips used to train AI have skyrocketed. Overseas, the A100 and H100 have risen to US$15,000 and US$40,000 per chip respectively. In China, the price of an AI server equipped with eight A100s and 80G of memory has soared from 80,000 per unit in the middle of last year to 1.65 million per unit today.

But not all of that money has flowed into Nvidia's pockets: the premium has been captured by distribution channels, and Nvidia has no way to control how its chips are bought and resold.

DGX Cloud was born in this situation, and, crucially, its price looks very cheap. A DGX Cloud instance equipped with eight H100 GPU modules costs only US$37,000 per month, roughly the price of a single H100.
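To put the pricing in perspective, here is a rough sketch of how many months of rental would add up to the purchase price of the GPUs alone. It uses only the two prices quoted in this article. The helper name `breakeven_months` is hypothetical, and the comparison ignores the server chassis, networking, power, and operations costs that an owner would also pay.

```python
# Figures quoted in the article; the comparison itself is an illustrative assumption.
H100_UNIT_PRICE_USD = 40_000      # overseas price per H100 cited above
DGX_CLOUD_MONTHLY_USD = 37_000    # monthly fee for an 8x H100 DGX Cloud instance
GPUS_PER_INSTANCE = 8


def breakeven_months(unit_price: float, gpu_count: int, monthly_fee: float) -> float:
    """Months of rental whose total fee equals the GPU purchase price alone."""
    return unit_price * gpu_count / monthly_fee


purchase_cost = H100_UNIT_PRICE_USD * GPUS_PER_INSTANCE
months = breakeven_months(H100_UNIT_PRICE_USD, GPUS_PER_INSTANCE, DGX_CLOUD_MONTHLY_USD)

print(f"Buying 8 H100s: ${purchase_cost:,}")        # Buying 8 H100s: $320,000
print(f"Rental break-even: {months:.1f} months")    # Rental break-even: 8.6 months
```

Under these assumptions, renting only becomes the more expensive option after more than eight months of continuous use, which is why the offer is attractive to companies that cannot afford the upfront cost.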

For AI companies that urgently need computing power but are short of funds, DGX Cloud is a pillow handed to someone who is nodding off. For Nvidia, selling cloud computing power not only makes money but also locks in customers.

Behind the seemingly win-win situation, there is a secret war that begins with AI.

Equal computing power

In terms of industry characteristics, today's AIGC, which runs on cloud computing power, closely resembles the earlier crypto-asset mining business, except that it requires far more resources.

Guosheng Securities once compared the similarities and differences between crypto asset mining and the AIGC industry in "The Evolution of AIGC Computing Power from a Web3 Perspective":

1) The power consumption of the AIGC industry will exceed the current power consumption of the Bitcoin mining industry in about 1.6-7.5 years. The main drivers are the rapid growth of GPT-style large language models in parameter count, daily active users, and number of models;

2) As in Bitcoin mining, computing-power-driven content in the AIGC industry is under intense competition. Only by continuously and quickly producing high-quality content can participants keep the user attention they have won from declining.

This leads to two major characteristics of the AIGC industry: high cost and continuity, meaning the spending never stops.

Cost is not only a matter of power consumption, but power consumption is very intuitive evidence. For example, the Yangquan supercomputing center that Baidu uses for training and inference of Wenxin Yiyan (ERNIE Bot) consumes 64,000 kW·h of electricity per hour. At a commercial electricity rate of 0.45 yuan/kW·h, the annual electricity bill reaches about 250 million yuan.
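The electricity figure can be checked with a few lines of arithmetic. This is a minimal sketch that assumes the center draws the quoted 64,000 kW·h every hour of a 365-day year. The function name `annual_electricity_bill` is hypothetical.

```python
# Inputs quoted in the article; round-the-clock operation is an assumption.
ENERGY_KWH_PER_HOUR = 64_000    # consumption of the Yangquan center per hour
PRICE_YUAN_PER_KWH = 0.45       # commercial electricity rate
HOURS_PER_YEAR = 24 * 365


def annual_electricity_bill(kwh_per_hour: float, price_per_kwh: float,
                            hours: int = HOURS_PER_YEAR) -> float:
    """Yearly electricity cost in yuan, assuming constant consumption."""
    return kwh_per_hour * hours * price_per_kwh


annual_bill_yuan = annual_electricity_bill(ENERGY_KWH_PER_HOUR, PRICE_YUAN_PER_KWH)
print(f"{annual_bill_yuan / 1e6:.0f} million yuan per year")  # 252 million yuan per year
```

The result, roughly 252 million yuan, matches the article's figure of about 250 million.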

The greater cost comes from the investment in computing power.

Take ChatGPT as an example. Set aside the computing power needed for inference, which depends heavily on daily active users, and consider only training. By one estimate, a single training run of GPT-3 with 175 billion parameters requires approximately 6,000 Nvidia A100 graphics cards. If interconnect losses are taken into account, roughly ten thousand or more A100s are needed.

At RMB 100,000 per A100 chip, large-scale training would require an investment of about RMB 1 billion, which ordinary manufacturers simply cannot afford. GPT-4 has more model parameters, is trained on more tokens, and requires even more computing power.
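The investment estimate follows from simple multiplication. The sketch below uses only the unit price, the 6,000-card baseline, and the RMB 1 billion total quoted here; the variable names are hypothetical. It works backwards from the quoted total to the card count that total implies.

```python
# Figures quoted in the article.
A100_PRICE_RMB = 100_000
BASE_CARDS = 6_000                      # A100s for one GPT-3 training run
QUOTED_INVESTMENT_RMB = 1_000_000_000   # "about RMB 1 billion"

# Cost of the baseline cluster before any interconnect losses.
base_investment_rmb = BASE_CARDS * A100_PRICE_RMB

# Card count implied by the quoted total, and the extra cards relative to the baseline.
implied_cards = QUOTED_INVESTMENT_RMB // A100_PRICE_RMB
extra_ratio = implied_cards / BASE_CARDS - 1

print(f"Baseline: {base_investment_rmb / 1e6:.0f} million RMB")   # Baseline: 600 million RMB
print(f"Implied cards: {implied_cards:,}")                        # Implied cards: 10,000
print(f"Extra cards vs. baseline: {extra_ratio:.0%}")             # Extra cards vs. baseline: 67%
```

In other words, the RMB 1 billion figure corresponds to about 10,000 cards, roughly two thirds more than the 6,000-card baseline.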

Moreover, with the release of more large AIGC models, the computing power required has skyrocketed.

According to OpenAI's calculations, from 2012 to 2018 the computing power required to train AI doubled approximately every 3-4 months, for a total increase of 300,000 times (Moore's Law would have delivered only a 7-fold increase over the same period). The computing power required to train leading models grows by as much as 10 times per year, so overall growth is exponential.
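These growth figures can be cross-checked against each other with logarithms. The sketch below is illustrative only: the function names are hypothetical, and the 24-month doubling period used for Moore's Law is an assumption, since the article does not state one.

```python
import math

TOTAL_GROWTH = 300_000          # total increase quoted in the article
MOORE_DOUBLING_MONTHS = 24      # assumption: Moore's Law doubling period


def months_to_grow(growth_factor: float, doubling_months: float) -> float:
    """Time needed to grow by growth_factor at a fixed doubling period."""
    return math.log2(growth_factor) * doubling_months


def doubling_time_months(growth_factor: float, period_months: float) -> float:
    """Doubling period implied by growing growth_factor times in period_months."""
    return period_months / math.log2(growth_factor)


doublings = math.log2(TOTAL_GROWTH)                   # about 18.2 doublings
span_fast = months_to_grow(TOTAL_GROWTH, 3)           # about 54.6 months
span_slow = months_to_grow(TOTAL_GROWTH, 4)           # about 72.8 months
ten_x_doubling = doubling_time_months(10, 12)         # about 3.6 months
moore_span = months_to_grow(7, MOORE_DOUBLING_MONTHS) # about 67.4 months

print(f"{doublings:.1f} doublings, taking {span_fast:.1f} to {span_slow:.1f} months")
print(f"10x per year implies doubling every {ten_x_doubling:.1f} months")
print(f"7x under Moore's Law takes {moore_span:.1f} months")
```

A 300,000-fold increase is about 18 doublings, which at 3-4 months each spans roughly 4.5 to 6 years, consistent with the 2012-2018 window. Growing 10 times per year is equivalent to doubling about every 3.6 months, and a 7-fold Moore's Law gain at the assumed 24-month doubling takes about 5.6 years, so the quoted numbers agree with one another.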

This is where the advantages of cloud computing power show. They can be summed up in one phrase: break the whole into parts and pay on demand.

Cloud computing power lets makers of large AIGC models rent hardware from a cloud computing platform on demand instead of purchasing hardware such as NVIDIA A100 graphics cards. This allows start-ups and non-leading model makers to try entering the AIGC field as well.

Moreover, this "break the whole into parts" approach benefits every party in the industry chain:

1) For upstream computing power manufacturers, when hardware enters the off-season and inventory builds up, selling cloud computing power smooths out fluctuations in income and keeps "live" capacity in reserve, so that rebounding market demand can be met promptly in the peak season;

2) For midstream cloud service providers, it helps increase customer traffic;

3) For downstream users of computing power, it lowers the barrier to using computing power as far as possible and helps bring about an AIGC era open to everyone.

If large AIGC model makers are willing to commit more resources, they can also cooperate more deeply with a cloud platform. A typical case is the partnership between Microsoft's cloud and OpenAI, which does not stop at renting computing power but extends to equity and product integration.

In the competition over large models, besides the obvious hardware investment there is also an invisible cost: time.

Generally speaking, the computing power requirements of large models fall into two stages. The first is training a large model such as ChatGPT; the second is inference, when the model is put into commercial use.

The longer this process continues, the better the model becomes. Seen in this light, what Jensen Huang has done with NVIDIA DGX Cloud is not hard to understand.

By using the cloud to drive down the price of GPUs for AI training, winning over small and medium-sized companies with equal access to computing power, and then locking in customers through the continuous nature of large model training, the "Silicon Valley Knife King" kills two birds with one stone.

It may not be Nvidia that benefits

Since December last year, the price of Nvidia A100 has increased by 37.5% in five months, and the price of A800 has increased by 20.0% in the same period.

The skyrocketing price of GPUs has undoubtedly raised the barrier to training large AIGC models. Leading manufacturers have to buy GPUs however much prices rise. Mid-tier companies might grit their teeth and place orders if prices held steady, but once prices rise they can only look on helplessly. The US$50 million in financing that Wang Huiwen raised for his startup may not even be enough to buy all the graphics cards needed for training.

So when Jensen Huang brought out DGX Cloud at this moment, it was like opening a luxury car rental company, so that people who cannot afford to buy can rent instead.

Of course, another consideration behind this is to get ahead of competitors and bind more small and medium-sized customers.

In this AI boom, apart from OpenAI, the AI companies that have broken through to the mainstream most visibly are Midjourney and Anthropic. The former is an AI drawing application that recently partnered with QQ Channels to start business in China. The founder of the latter comes from OpenAI, and its conversational bot Claude competes directly with ChatGPT.

The two companies have one thing in common, that is, they did not purchase Nvidia GPUs to build supercomputers, but instead used Google's computing services.

This service is provided by a supercomputing system that integrates 4,096 TPU v4 chips, all developed in-house by Google.

Another giant developing its own chips is Microsoft, the leader of this AI wave. Its chip, reportedly called Athena, is rumored to use an advanced 5nm process and to be manufactured by TSMC, with an R&D team of close to 300 people.

Obviously, the goal of this chip is to replace the expensive A100/H100, serve as a computing power engine for OpenAI, and eventually take a slice of Nvidia's pie through Microsoft's Azure cloud service.

In addition to backstabbing from cloud computing companies, Nvidia’s major customer Tesla also wants to go it alone.

In August 2021, Musk showed the outside world the Dojo ExaPOD supercomputer, built from 3,000 of Tesla's own D1 chips. The D1 is manufactured by TSMC on a 7nm process, and those 3,000 chips make Dojo the fifth-largest computer in the world by computing power.

In comparison, although domestic companies affected by the ban also have alternative plans, they still rely heavily on Nvidia in the short term.

Domestic chips can do cloud inference work that does not require high information granularity, but most of them are currently unable to handle cloud training that requires ultra-high computing power.

Companies such as Suiyuan Technology, Biren Technology, Tianshu Zhixin, and Cambricon have all launched their own cloud products, and their theoretical performance figures are not weak.

According to previously disclosed information, Baidu's Yangquan supercomputing center, which is used for training and inference of Wenxin Yiyan (ERNIE Bot), uses some domestically produced chips in addition to the A100, such as Baidu's self-developed Kunlun chip and Cambricon's Siyuan 590. Siyuan reportedly accounts for about 10%-20% of the 2023 procurement plan.

Wenxin Yiyan's core capabilities at the chip layer come from the second-generation Kunlun AI chip, which uses the self-developed XPU-R architecture, a 7nm process, and GDDR6 high-speed video memory, with significantly improved versatility and performance. It delivers 256 TOPS at INT8 and 128 TFLOPS at FP16, a 2-3x improvement over the previous generation.

In March this year, Robin Li also shared at the Yabuli China Entrepreneurs Forum that Kunlun chips are now very suitable for large model inference and will be suitable for training in the future.

Epilogue

Ever since the RIVA 128, NVIDIA has shown a remarkable ability to read the market. Over the past decade or so, from cryptocurrency mining to the metaverse to the AI boom, NVIDIA has ridden each trend and expanded its graphics card customer base from gamers to technology giants.

As the battle has spread, Nvidia's market value has surged, lifting it from a second-tier chip company to No. 1 in the entire industry. Yet Jensen Huang himself has said that AI's iPhone moment has arrived. If even Nokia could be defeated by Apple, how can Nvidia be sure it is invincible?

References

[1] AIGC’s long option: AI cloud computing power, Guosheng Securities

[2] The Evolution of AIGC Computing Power from a Web3 Perspective, Guosheng Securities

[3] A crack in the Nvidia empire, Yuanchuan Research Institute

[4] Cloud computing power mining may be the most stable way to enter Bitcoin now, Odaily Planet Daily

[5] Expert interpretation of Baidu’s “Wen Xin Yi Yan”, Unicorn Think Tank

[6] In the era of large models, domestic GPUs are accelerating like crazy, Digital Time Krypton

[7] NVIDIA's Jensen Huang: AI supercomputing capabilities will be provided through Chinese cloud service providers, and the AI iPhone is coming!, First Finance

[8] Overview of the AI computing power industry chain: Technology iteration promotes bottleneck breakthroughs, and the increase in AIGC scenarios drives the demand for computing power, Essence Securities

The above is the detailed content of Beyond cloud computing power, a secret war begins with AI. For more information, please follow other related articles on the PHP Chinese website!

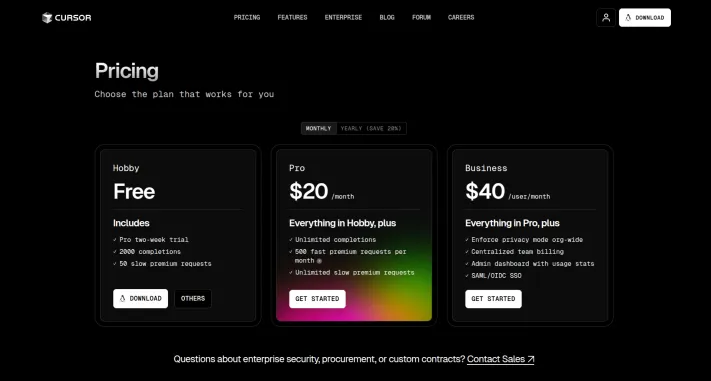

I Tried Vibe Coding with Cursor AI and It's Amazing!Mar 20, 2025 pm 03:34 PM

I Tried Vibe Coding with Cursor AI and It's Amazing!Mar 20, 2025 pm 03:34 PMVibe coding is reshaping the world of software development by letting us create applications using natural language instead of endless lines of code. Inspired by visionaries like Andrej Karpathy, this innovative approach lets dev

How to Use DALL-E 3: Tips, Examples, and FeaturesMar 09, 2025 pm 01:00 PM

How to Use DALL-E 3: Tips, Examples, and FeaturesMar 09, 2025 pm 01:00 PMDALL-E 3: A Generative AI Image Creation Tool Generative AI is revolutionizing content creation, and DALL-E 3, OpenAI's latest image generation model, is at the forefront. Released in October 2023, it builds upon its predecessors, DALL-E and DALL-E 2

Top 5 GenAI Launches of February 2025: GPT-4.5, Grok-3 & More!Mar 22, 2025 am 10:58 AM

Top 5 GenAI Launches of February 2025: GPT-4.5, Grok-3 & More!Mar 22, 2025 am 10:58 AMFebruary 2025 has been yet another game-changing month for generative AI, bringing us some of the most anticipated model upgrades and groundbreaking new features. From xAI’s Grok 3 and Anthropic’s Claude 3.7 Sonnet, to OpenAI’s G

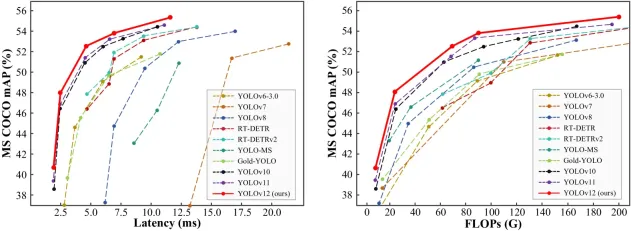

How to Use YOLO v12 for Object Detection?Mar 22, 2025 am 11:07 AM

How to Use YOLO v12 for Object Detection?Mar 22, 2025 am 11:07 AMYOLO (You Only Look Once) has been a leading real-time object detection framework, with each iteration improving upon the previous versions. The latest version YOLO v12 introduces advancements that significantly enhance accuracy

Elon Musk & Sam Altman Clash over $500 Billion Stargate ProjectMar 08, 2025 am 11:15 AM

Elon Musk & Sam Altman Clash over $500 Billion Stargate ProjectMar 08, 2025 am 11:15 AMThe $500 billion Stargate AI project, backed by tech giants like OpenAI, SoftBank, Oracle, and Nvidia, and supported by the U.S. government, aims to solidify American AI leadership. This ambitious undertaking promises a future shaped by AI advanceme

Sora vs Veo 2: Which One Creates More Realistic Videos?Mar 10, 2025 pm 12:22 PM

Sora vs Veo 2: Which One Creates More Realistic Videos?Mar 10, 2025 pm 12:22 PMGoogle's Veo 2 and OpenAI's Sora: Which AI video generator reigns supreme? Both platforms generate impressive AI videos, but their strengths lie in different areas. This comparison, using various prompts, reveals which tool best suits your needs. T

Google's GenCast: Weather Forecasting With GenCast Mini DemoMar 16, 2025 pm 01:46 PM

Google's GenCast: Weather Forecasting With GenCast Mini DemoMar 16, 2025 pm 01:46 PMGoogle DeepMind's GenCast: A Revolutionary AI for Weather Forecasting Weather forecasting has undergone a dramatic transformation, moving from rudimentary observations to sophisticated AI-powered predictions. Google DeepMind's GenCast, a groundbreak

Which AI is better than ChatGPT?Mar 18, 2025 pm 06:05 PM

Which AI is better than ChatGPT?Mar 18, 2025 pm 06:05 PMThe article discusses AI models surpassing ChatGPT, like LaMDA, LLaMA, and Grok, highlighting their advantages in accuracy, understanding, and industry impact.(159 characters)

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

SublimeText3 Linux new version

SublimeText3 Linux latest version

SublimeText3 English version

Recommended: Win version, supports code prompts!