Driven by the boom in large AI (artificial intelligence) models, market demand for computing power has risen sharply. AI servers are a key part of computing infrastructure. Because they excel at graphics rendering and at parallel computing over massive datasets, they can process large volumes of data quickly and accurately, and their market value has become increasingly prominent. Demand for them has surged recently. At the same time, GPUs (graphics processing units, used as acceleration chips), the core components of AI servers, remain in short supply and their prices keep rising. As a result, AI server prices have climbed steeply of late.

A company told a Securities Times·e Company reporter that the price of the AI servers it bought in June last year has risen nearly 20-fold in less than a year. GPU prices have kept rising over the same period. The market unit price of the A100 GPU, for example, has reached 150,000 yuan, up 50% from 100,000 yuan two months ago. The A800 has risen less: it now sells for about 95,000 yuan, up from about 89,000 yuan last month.

“AI server prices have changed a great deal in recent years, mainly because configurations keep improving. At the same time, NVIDIA and AMD, the world's leading makers of the core component, the GPU, have limited production capacity, so GPUs remain in short supply. We expect AI server prices to keep trending upward,” Index, a research manager at IDC China, told a Securities Times·e Company reporter.

Notably, the ongoing shortage of high-end GPU chips upstream has also weighed on AI server shipments to some extent, which in turn has hurt the short-term results of listed server companies. Still, AI servers are widely recognized across the industry, and the listed companies involved are expanding their presence in the field, which bodes well in the long term.

Large AI models are booming

"In June last year, our company purchased 8 AI servers. Its basic configuration is 8 NVIDIA A100 GPUs and the storage is 80G. The purchase price at that time was 80,000 yuan/unit. In March this year, it has increased to 130 Ten thousand yuan/unit, it is now about 1.6 million yuan/unit, which has increased nearly 20 times in less than a year." Recently, the relevant person in charge of a testing company in Songshan Lake, Dongguan told a Securities Times e Company reporter.

“I regret not buying more, because every AI server built on NVIDIA GPUs is going up in price, not just this one,” the person added.
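As a quick check on the “nearly 20 times” figure, the sketch below divides each price the buyer quoted by the June purchase price. It is a back-of-the-envelope calculation that uses only the figures in the quote; `multiple` is a hypothetical helper name, not anything from the source.

```python
# Per-server purchase prices quoted by the Dongguan testing company, in yuan.
prices = {
    "June last year": 80_000,
    "March this year": 1_300_000,
    "now": 1_600_000,
}


def multiple(new: float, old: float) -> float:
    """Return how many times `old` the value `new` is."""
    return new / old


base = prices["June last year"]
for label, price in prices.items():
    # Express every quoted price as a multiple of the June purchase price.
    print(f"{label}: {price:,} yuan -> {multiple(price, base):.2f}x the June price")
```

Run as-is, it reports 1.00x, 16.25x and 20.00x, so on these figures the current price is 20 times the June price.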

Similarly, a person at a server ODM company told reporters that the company's A100 cards have risen 15% in price since May, and its complete AI servers by about 10%.

In fact, reports of steep AI server price increases have been frequent since the start of this year.

Not long ago, a widely circulated online post (a so-called “little essay”) claimed that one manufacturer's AI server, priced at about 750,000 to 850,000 yuan in March, had been raised to 850,000 to 1 million yuan in April.

Surging market demand is the main reason AI server prices have soared.

With the popularity of ChatGPT, many technology companies worldwide have moved into AIGC (AI-generated content, i.e. generative AI) and are stepping up research and development of large AI models. By one incomplete count, more than 30 large-model products have been launched in China since Baidu first announced “Wen Xin Yi Yan” (ERNIE Bot) on March 16.

However, deploying large AI models requires massive data and powerful computing power for training and inference. Huawei estimates that the AI boom will drive computing power demand in 2030 to 500 times its 2020 level.
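Huawei's estimate is a ten-year multiple. Converting it into a constant annual growth rate makes it easier to compare with the yearly market growth rates quoted elsewhere in the article. This is a minimal sketch that assumes smooth compound growth between 2020 and 2030, which the estimate itself does not claim; `implied_annual_growth` is an illustrative helper.

```python
def implied_annual_growth(multiple: float, years: int) -> float:
    """Constant yearly growth rate that compounds to `multiple` over `years` years."""
    return multiple ** (1 / years) - 1


# Huawei's estimate: 2030 demand is 500 times the 2020 level.
rate = implied_annual_growth(500, 2030 - 2020)
print(f"Implied growth: {rate:.1%} per year")  # prints "Implied growth: 86.2% per year"
```

In other words, a 500-fold rise over a decade would mean demand growing by roughly 86% every year.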

The AI server is a type of server that serves as a unit of computing infrastructure. It generally uses a heterogeneous architecture that combines CPUs (central processing units), GPUs and other processors. Compared with general-purpose servers, it excels at graphics rendering and parallel computing over massive datasets: it can process large amounts of data quickly and accurately and can meet the heavy computing requirements of large models. AI servers are widely used in deep learning, high-performance computing, search engines, gaming and other industries, and their value has become increasingly prominent.

"The increase in AI applications will push up the demand for computing power, and GPU servers will increase. It is expected that the demand for AI servers containing GPUs will continue to increase in the future, and we are optimistic about the growth of cloud service providers (CSP) or AI servers." In recent days Liu Yangwei, chairman of Foxconn Group, said during a conference call.

Index told reporters that over the past few years, accelerated computing servers (90% of which are AI servers) have been the main driver of growth in the server market. From 2019 to 2022, the overall server market grew by about US$10 billion, of which US$5 billion came from growth in the accelerated computing server market. As AI applications enter the era of large models, the accelerated computing server market is expected to stay dynamic and to grow faster than the general-purpose server market.

According to IDC data, the global AI server market reached US$15.6 billion in 2021, up 39.1% year on year and ahead of the 22.5% growth of the overall global AI market. IDC expects the global AI server market to reach US$31.79 billion in 2025, a compound annual growth rate (CAGR) of 19%. China's AI server market was worth US$5.39 billion in 2021, up 68.6% year on year, and is expected to reach US$10.34 billion in 2025, a CAGR of 17.7% from 2021 to 2025.
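The IDC growth rates can be cross-checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The sketch below applies it to the 2021 and 2025 figures in the paragraph above; small gaps against the quoted rates may come from rounding or from IDC using a different base. `cagr` is an illustrative helper name.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values `years` years apart."""
    return (end / start) ** (1 / years) - 1


# IDC figures, in billions of US dollars: (2021 actual, 2025 forecast).
markets = {
    "Global AI server market": (15.6, 31.79),
    "China AI server market": (5.39, 10.34),
}

for name, (v2021, v2025) in markets.items():
    rate = cagr(v2021, v2025, 2025 - 2021)
    print(f"{name}: {rate:.1%} CAGR, 2021 to 2025")
```

This gives about 19.5% for the global market, slightly above the quoted 19%, and 17.7% for China, which matches IDC's figure.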

In terms of market structure, Inspur Information ranked first in the global AI server market in the first half of 2021 with a 20% share, followed by Dell, HP, Lenovo and Huawei with 14%, 10%, 6% and 5% respectively. Chinese AI server makers are now among the world leaders.

GPUs remain in short supply

The persistent shortage and steep price increases of GPUs, the core component, are another important reason AI server prices have risen so sharply.

Unlike high-performance and general-purpose servers, AI servers are typically equipped with 4 to 8 GPUs in addition to 2 CPUs, and some high-end models carry as many as 16 GPUs. Mainstream AI servers on the market today are generally configured with 2 CPUs and 8 GPUs. Rising demand for AI servers therefore multiplies demand for GPUs and raises the share of an AI server's cost taken up by GPUs, CPUs and other chips.

According to institutional data, chipsets (CPU + GPU) account for 83% of the cost of an AI training server and 50% of the cost of an AI inference server.

Notably, the GPU market is currently dominated by NVIDIA and AMD, with NVIDIA holding about 80% of the global market. NVIDIA's production capacity is limited, so its GPUs remain in short supply and their prices keep rising.

As for the Chinese market, in August 2022 the United States barred sales of NVIDIA's high-end A100 and H100 GPUs to China. In November 2022, NVIDIA said that the A800, a replacement for the A100 aimed at Chinese customers, had gone into production in the third quarter of 2022. In March this year, NVIDIA said it had developed the H800, a replacement for the H100 that can be shipped to China. Domestic companies can currently buy NVIDIA GPU models such as the A800, H800 and V100.

"Server manufacturers (including domestic ones) rarely use domestic GPUs. Most of them choose GPUs from Nvidia and AMD. However, it is difficult to buy GPUs from these two companies now, even after being banned by the United States. The A800 and H800 alternatives to A100 and H100 have been in short supply." A server industry analyst told reporters.

On May 15, the reporter learned from an NVIDIA reseller that the NVIDIA A100 now sells for 150,000 yuan per unit, up 50% from 100,000 yuan two months ago. The NVIDIA A800 currently sells for about 95,000 yuan per unit, up from 89,000 yuan last month.

"A100 has been banned, and there will be no more stock in the future. The goods I have now are some scraps I bought before the ban. They will be gone after they are sold out. Let alone the price increase or whether they are brand new. It's great if it's in stock. According to the merchant, the price of A100 will continue to rise and is expected to reach the level of 200,000 per unit in August.

Another agent said the A100's price began rising in December last year, and by the first half of April its cumulative increase over those five months had reached 37.5%; the A800's cumulative increase over the same period reached 20%.
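A cumulative rise over several months can be converted into an equivalent average monthly rate. This sketch assumes the increase compounded evenly over the five months from December to April, which the agent did not state; `avg_monthly_rate` is an illustrative name.

```python
def avg_monthly_rate(cumulative: float, months: int) -> float:
    """Even monthly rate that compounds to a `cumulative` rise over `months` months."""
    return (1 + cumulative) ** (1 / months) - 1


# Cumulative increases from December to the first half of April (about five months).
for model, cumulative in {"A100": 0.375, "A800": 0.20}.items():
    rate = avg_monthly_rate(cumulative, 5)
    print(f"{model}: {cumulative:.1%} over 5 months, about {rate:.1%} per month")
```

Under that assumption, the A100 rose about 6.6% a month and the A800 about 3.7% a month.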

Because an AI server is often equipped with several GPUs, its price increase is often larger than the price increase of its individual components.

Industry media outlet Jiweiwang reported that, with computing power demand rising, demand for NVIDIA's top chips has not fallen even after the price increases. This has lengthened delivery times for NVIDIA GPUs: the lead time used to be about one month, but it is now generally three months or more, and some new orders “may not be delivered until December.” To handle the larger order volume, NVIDIA has also booked an additional 10,000 wafers of advanced packaging capacity from TSMC for its top-end AI chips. In addition, China has only about 40,000 to 50,000 A100s that can be used to train large AI models, so supply is quite tight. Some cloud service providers have strictly limited internal use of these advanced chips, reserving them for tasks that need heavy computing power.

Server shipments are constrained

The ongoing GPU shortage has even affected shipments across the server industry.

"The current tight inventory situation of NVIDIA GPUs has caused server manufacturers to lack core components, thus affecting the shipments of related companies to some extent." "A brokerage analyst told reporters.

This is also reflected in listed companies' first-quarter reports.

Inspur Information's first-quarter revenue this year was 9.4 billion yuan, down 45.59% year on year, and its net profit was 210 million yuan, down 37%. Inspur Information said the drop in operating revenue was mainly due to a shift in the timing of customer demand compared with the same period last year, which reduced shipments during the quarter.
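The year-on-year declines imply last year's first-quarter figures, which puts the size of the drop in context. The sketch below backs them out as current / (1 + change); because the published inputs are rounded, the results are approximate, and `prior_year_value` is a hypothetical helper name.

```python
def prior_year_value(current: float, yoy_change: float) -> float:
    """Back out last year's value from this year's value and its year-on-year change."""
    return current / (1 + yoy_change)


# Inspur Information, Q1 this year, in billions of yuan, with year-on-year changes.
revenue_prev = prior_year_value(9.4, -0.4559)
profit_prev = prior_year_value(0.21, -0.37)
print(f"Implied Q1 last year: revenue ~{revenue_prev:.2f} bn yuan, "
      f"net profit ~{profit_prev:.2f} bn yuan")
```

That suggests revenue of roughly 17.3 billion yuan and net profit of roughly 330 million yuan in the first quarter of last year.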

NVIDIA is reportedly Inspur Information's largest supplier, and Inspur Information has bought almost all of its GPU chips from NVIDIA. In 2021, NVIDIA accounted for 23.83% of Inspur Information's purchases.

In March this year, the United States added 28 Chinese companies, including Inspur Information, to its Entity List. Under U.S. Department of Commerce rules, companies on the Entity List need U.S. government authorization to obtain U.S. products and technologies.

Among its peers, Foxconn Industrial Internet (FII) reported first-quarter revenue of 105.888 billion yuan, up 0.79% year on year, and net profit of 3.128 billion yuan, down 3.91%. However, its gross margin rose 0.6 percentage points year on year to 7.4%.

An FII insider told Securities Times·e Company reporters that FII's AI servers have been used for ChatGPT. The continued popularity of large AI model technology has given FII room to improve its gross margin, and the company is also working to raise the share of AI servers and high-performance computing (HPC) products in its business and to speed up their development.

FII's cloud computing server shipments reportedly continue to rank first in the world. In 2017, FII worked with NVIDIA and Microsoft to launch HGX1, billed as the world's first AI server. The server used for ChatGPT is HGX1, a product line now in its fourth generation.

Foxconn Group chairman Liu Yangwei also said that Foxconn's server revenue alone reached NT$1.1 trillion last year, about 20% of it from AI servers. Although ChatGPT-driven demand is strong and Foxconn stands to benefit from the related infrastructure build-out, it will take time for this to settle into a stable business.

Notably, FII is closely tied to NVIDIA for chip supply, and its supply is not restricted.

ZTE president Xu Ziyang also said that in AI, ZTE will focus on three product directions. First, a new generation of intelligent computing center infrastructure that fully supports large-model training and inference, including high-performance AI servers, high-performance switches and DPUs; ZTE plans to launch high-bandwidth GPU servers that support ChatGPT by the end of this year. Second, a next-generation Digital Nebula solution that uses generative AI to explore areas such as office intelligence and operations intelligence. Third, a new generation of AI acceleration chips and model lightweighting technology that can significantly cut the cost of large-model inference.

Wingtech also said it is doing early research on training and inference servers for hyperscale data centers. Wingtech began shipping server products in the fourth quarter of last year, so its base for that year was relatively low.

Beyond AI servers, technology companies are also optimistic about the server and storage industries as a whole amid the growth of the digital economy.

FII pointed out that growing demand for computing power will not only drive AI server shipments but also push upgrades across the entire supercomputing system architecture, including CPUs, compute accelerators, high-performance memory and higher bandwidth. In addition, exponential growth in computing power will bring huge energy consumption. FII has deployed liquid cooling and immersion cooling products for data centers in advance, and HGX4 uses both water cooling and air cooling. Green products will be indispensable as computing demand grows, and their importance will become even more prominent.

Zhu Yongtao, ZTE senior vice president and president of its government and enterprise business, told reporters that the new wave of the digital economy will certainly bring market opportunities, and ZTE will step up its investment in servers and storage this year.

Index noted that in 2009, Chinese domestic suppliers accounted for only about 1% of global server shipments; by 2022 that figure had risen to 25%. Including several Taiwanese ODMs that supply internet companies directly, the share exceeds 60%, and Chinese server suppliers are playing an increasingly important role worldwide.

Some brokerage researchers have also noted that China's server manufacturers face risks in the supply of core components, and that the server industry's performance is closely tied to the country's overall macroeconomic conditions.

Source: China Fund News


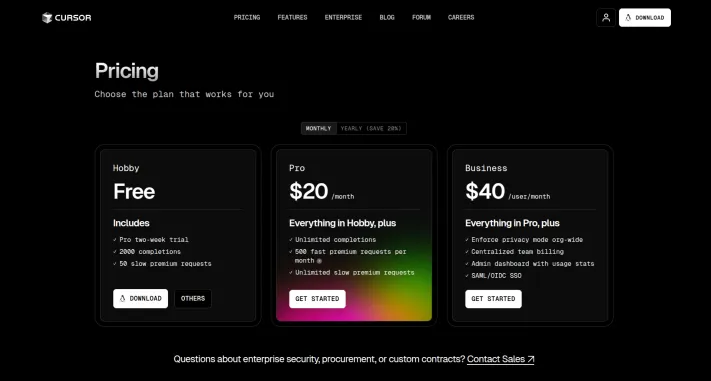

I Tried Vibe Coding with Cursor AI and It's Amazing!Mar 20, 2025 pm 03:34 PM

I Tried Vibe Coding with Cursor AI and It's Amazing!Mar 20, 2025 pm 03:34 PMVibe coding is reshaping the world of software development by letting us create applications using natural language instead of endless lines of code. Inspired by visionaries like Andrej Karpathy, this innovative approach lets dev

How to Use DALL-E 3: Tips, Examples, and FeaturesMar 09, 2025 pm 01:00 PM

How to Use DALL-E 3: Tips, Examples, and FeaturesMar 09, 2025 pm 01:00 PMDALL-E 3: A Generative AI Image Creation Tool Generative AI is revolutionizing content creation, and DALL-E 3, OpenAI's latest image generation model, is at the forefront. Released in October 2023, it builds upon its predecessors, DALL-E and DALL-E 2

Top 5 GenAI Launches of February 2025: GPT-4.5, Grok-3 & More!Mar 22, 2025 am 10:58 AM

Top 5 GenAI Launches of February 2025: GPT-4.5, Grok-3 & More!Mar 22, 2025 am 10:58 AMFebruary 2025 has been yet another game-changing month for generative AI, bringing us some of the most anticipated model upgrades and groundbreaking new features. From xAI’s Grok 3 and Anthropic’s Claude 3.7 Sonnet, to OpenAI’s G

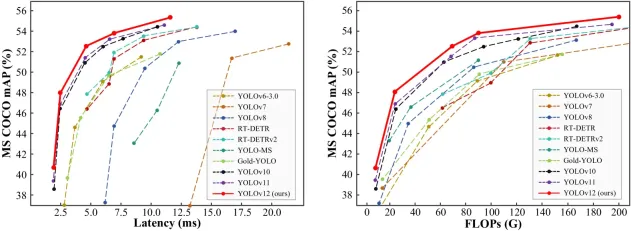

How to Use YOLO v12 for Object Detection?Mar 22, 2025 am 11:07 AM

How to Use YOLO v12 for Object Detection?Mar 22, 2025 am 11:07 AMYOLO (You Only Look Once) has been a leading real-time object detection framework, with each iteration improving upon the previous versions. The latest version YOLO v12 introduces advancements that significantly enhance accuracy

Elon Musk & Sam Altman Clash over $500 Billion Stargate ProjectMar 08, 2025 am 11:15 AM

Elon Musk & Sam Altman Clash over $500 Billion Stargate ProjectMar 08, 2025 am 11:15 AMThe $500 billion Stargate AI project, backed by tech giants like OpenAI, SoftBank, Oracle, and Nvidia, and supported by the U.S. government, aims to solidify American AI leadership. This ambitious undertaking promises a future shaped by AI advanceme

Sora vs Veo 2: Which One Creates More Realistic Videos?Mar 10, 2025 pm 12:22 PM

Sora vs Veo 2: Which One Creates More Realistic Videos?Mar 10, 2025 pm 12:22 PMGoogle's Veo 2 and OpenAI's Sora: Which AI video generator reigns supreme? Both platforms generate impressive AI videos, but their strengths lie in different areas. This comparison, using various prompts, reveals which tool best suits your needs. T

Google's GenCast: Weather Forecasting With GenCast Mini DemoMar 16, 2025 pm 01:46 PM

Google's GenCast: Weather Forecasting With GenCast Mini DemoMar 16, 2025 pm 01:46 PMGoogle DeepMind's GenCast: A Revolutionary AI for Weather Forecasting Weather forecasting has undergone a dramatic transformation, moving from rudimentary observations to sophisticated AI-powered predictions. Google DeepMind's GenCast, a groundbreak

Which AI is better than ChatGPT?Mar 18, 2025 pm 06:05 PM

Which AI is better than ChatGPT?Mar 18, 2025 pm 06:05 PMThe article discusses AI models surpassing ChatGPT, like LaMDA, LLaMA, and Grok, highlighting their advantages in accuracy, understanding, and industry impact.(159 characters)

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft

SublimeText3 Mac version

God-level code editing software (SublimeText3)

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),