Southern University of Science and Technology's "Black Tech": Remove People from Videos with One Click, the Special Effects Artist's Savior Is Here!

This video segmentation model from the Southern University of Science and Technology can track anything in a video.

Not only can it "watch", it can also "cut": removing people from a video is easy for it too.

As for operation, all it takes is a few mouse clicks.

One special effects artist, on seeing the news, seemed to have found a savior and said bluntly that this product will change the rules of the game in the CGI industry.

This model is called TAM (Track Anything Model). Does the name remind you of SAM, Meta's image segmentation model?

Indeed, TAM extends SAM to video, unlocking dynamic object tracking on its skill tree.

Video segmentation models are not actually a new technology, but traditional segmentation models do little to reduce human workload.

The training data for these models must be manually annotated, and some models even need to be initialized with a mask of the specific target object before use.

SAM's arrival lays the groundwork for solving this problem: at the very least, the initialization data no longer has to be produced by hand.

Of course, TAM does not simply run SAM frame by frame and stitch the results together; it also has to model the spatiotemporal relationships between frames.

The team integrated SAM with a memory module called XMem.

Only the first frame needs SAM to generate the initial mask; XMem then guides the tracking in all subsequent frames.
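The division of labor described above (one SAM call on the first frame, memory-based propagation afterwards) can be sketched as a toy loop. This is a minimal sketch under loud assumptions: `toy_sam_click` and `toy_memory_propagate` are hypothetical stand-ins that work on tiny integer grids, not the real SAM or XMem APIs, which use neural networks and learned feature memories.

```python
from typing import List, Tuple

Frame = List[List[int]]  # toy grayscale frame: rows of pixel values
Mask = List[List[bool]]  # True where the tracked object is


def toy_sam_click(frame: Frame, click: Tuple[int, int]) -> Mask:
    """Hypothetical stand-in for SAM: mark every pixel sharing the clicked pixel's value."""
    r, c = click
    target = frame[r][c]
    return [[px == target for px in row] for row in frame]


def toy_memory_propagate(memory: List[Tuple[Frame, Mask]], frame: Frame) -> Mask:
    """Hypothetical stand-in for XMem: remember pixel values seen inside earlier masks."""
    seen = {
        px
        for past_frame, past_mask in memory
        for frame_row, mask_row in zip(past_frame, past_mask)
        for px, inside in zip(frame_row, mask_row)
        if inside
    }
    return [[px in seen for px in row] for row in frame]


def track(frames: List[Frame], click: Tuple[int, int]) -> List[Mask]:
    # Only the first frame needs user input: one click, one SAM-style mask.
    first_mask = toy_sam_click(frames[0], click)
    memory = [(frames[0], first_mask)]
    masks = [first_mask]
    # Every later frame is segmented from memory alone, XMem-style.
    for frame in frames[1:]:
        mask = toy_memory_propagate(memory, frame)
        memory.append((frame, mask))
        masks.append(mask)
    return masks
```

The point of the sketch is the control flow: user interaction happens once, and each new frame's mask is written back into memory so later frames can use it.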

There can be many tracking targets at once, as in the following clip of Along the River During the Qingming Festival:

Scene changes do not degrade TAM's performance either:

We tried it ourselves and found that TAM has an interactive user interface that is simple and friendly to use.

In terms of raw capability, TAM's tracking is indeed good:

However, the accuracy of the removal function still needs improvement in some details.

From SAM to TAM

As mentioned above, TAM builds on SAM and adds a memory capability to establish spatiotemporal associations.

Specifically, the first step is to initialize the model using SAM's static image segmentation capability.

With just one click, SAM can generate the initial mask of the target object, replacing the cumbersome initialization process of traditional segmentation models.
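To make "click in, mask out" concrete, here is a sketch of the interface only. SAM produces its mask with a promptable neural network; the code below swaps in classical flood-fill region growing, so `click_to_mask` and its `tol` parameter are hypothetical and illustrate the input/output contract, not SAM's algorithm.

```python
from collections import deque
from typing import List, Tuple


def click_to_mask(image: List[List[int]], click: Tuple[int, int], tol: int = 10) -> List[List[bool]]:
    """Grow a 4-connected region from the clicked pixel, keeping pixels within `tol` of its value."""
    rows, cols = len(image), len(image[0])
    r0, c0 = click
    seed = image[r0][c0]
    mask = [[False] * cols for _ in range(rows)]
    mask[r0][c0] = True
    queue = deque([click])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            # Accept an unvisited in-bounds neighbor whose value is close to the seed's.
            if (
                0 <= nr < rows
                and 0 <= nc < cols
                and not mask[nr][nc]
                and abs(image[nr][nc] - seed) <= tol
            ):
                mask[nr][nc] = True
                queue.append((nr, nc))
    return mask
```

A single click point is the whole user input here, which is the property that lets TAM skip hand-drawn initial masks.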

With this initial mask, the team hands the work over to XMem, which proceeds semi-automatically with occasional human intervention, greatly reducing the manual workload.

During this process, some manually produced predictions are compared against XMem's output.

In practice, as time goes on, it becomes increasingly difficult for XMem to produce accurate segmentation results.

When the results deviate too far from expectations, a re-segmentation step is triggered, which is again handled by SAM.

After SAM's refinement, most outputs are fairly accurate, but some still require manual adjustment.
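The propagate, check, re-segment, escalate loop described above can be sketched as follows. The article does not say how the gap between results and expectations is measured, so `quality`, `threshold`, and all function names here are hypothetical placeholders; `propagate` plays XMem's role and `refine` plays SAM's.

```python
from typing import Callable, List, Tuple, TypeVar

F = TypeVar("F")  # frame type (left abstract)
M = TypeVar("M")  # mask type (left abstract)


def track_with_correction(
    frames: List[F],
    first_mask: M,
    propagate: Callable[[M, F], M],    # plays XMem's role
    quality: Callable[[M, F], float],  # hypothetical score; higher is better
    refine: Callable[[M, F], M],       # plays SAM's re-segmentation role
    threshold: float = 0.5,            # hypothetical cut-off
) -> Tuple[List[M], List[int]]:
    """Return one mask per frame plus the indices of frames left for manual fixing."""
    masks: List[M] = [first_mask]
    needs_human: List[int] = []
    for i, frame in enumerate(frames[1:], start=1):
        mask = propagate(masks[-1], frame)
        if quality(mask, frame) < threshold:
            # Drifted too far: hand the frame back to the segmenter.
            mask = refine(mask, frame)
            if quality(mask, frame) < threshold:
                # Still wrong: flag it for a human to adjust.
                needs_human.append(i)
        masks.append(mask)
    return masks, needs_human
```

Passing the components in as callables keeps the control flow testable with toy stand-ins before any real model is plugged in.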

That is roughly how TAM's pipeline works. The object removal ability mentioned at the beginning comes from combining TAM with E2FGVI.

E2FGVI is itself a video inpainting tool for removing elements from video. With TAM's precise segmentation to guide it, its work becomes more targeted.
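Mask-guided removal can be illustrated with the simplest possible inpainting strategy: fill each masked pixel with the median of that pixel across frames where it is visible. This is a swapped-in classical technique, not E2FGVI (an end-to-end, flow-guided learned model); `remove_object` is a hypothetical name, and the sketch assumes a static camera.

```python
from statistics import median_low
from typing import List

Frame = List[List[int]]
Mask = List[List[bool]]


def remove_object(frames: List[Frame], masks: List[Mask]) -> List[Frame]:
    """Fill masked pixels with the median of the same pixel in frames where it is unmasked."""
    rows, cols = len(frames[0]), len(frames[0][0])
    out = [[row[:] for row in frame] for frame in frames]  # leave the input untouched
    for r in range(rows):
        for c in range(cols):
            visible = [f[r][c] for f, m in zip(frames, masks) if not m[r][c]]
            if not visible:
                # Never uncovered in any frame: a learned inpainter would have to
                # synthesize content here; this sketch leaves the pixel as-is.
                continue
            fill = median_low(visible)
            for t, m in enumerate(masks):
                if m[r][c]:
                    out[t][r][c] = fill
    return out
```

The sketch shows why segmentation quality matters for removal: any object pixel the mask misses is never replaced and remains visible in the output.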

To test TAM, the team evaluated it on the DAVIS-2016 and DAVIS-2017 datasets.

The hands-on impression was good, and the data confirm it.

Although TAM does not require manually provided masks, its scores on the two metrics J (region similarity) and F (boundary accuracy) are very close to those of manually initialized models.

On DAVIS-2017 it even slightly outperforms STM.

Among methods with other initialization schemes, SiamMask cannot match TAM;

Another method, MiVOS, does perform better than TAM, but it went through 8 rounds of interaction, after all...
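For readers unfamiliar with the two metrics: J is the Jaccard index (intersection over union) between predicted and ground-truth masks, and F is an F-measure computed on mask boundaries. The sketch below is a simplified version assuming a fixed pixel tolerance `tol` for boundary matching; the official DAVIS evaluation code sets its tolerance relative to the image size and extracts boundaries differently, so numbers from this sketch are not comparable to published scores.

```python
from typing import List, Set, Tuple

Mask = List[List[bool]]
Pixel = Tuple[int, int]


def region_j(pred: Mask, gt: Mask) -> float:
    """J: intersection over union of two binary masks (1.0 if both are empty)."""
    inter = union = 0
    for pred_row, gt_row in zip(pred, gt):
        for p, g in zip(pred_row, gt_row):
            inter += p and g
            union += p or g
    return 1.0 if union == 0 else inter / union


def boundary(mask: Mask) -> Set[Pixel]:
    """Foreground pixels with a 4-neighbor that is background or outside the image."""
    rows, cols = len(mask), len(mask[0])
    edge = set()
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c]:
                continue
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                if not (0 <= nr < rows and 0 <= nc < cols) or not mask[nr][nc]:
                    edge.add((r, c))
                    break
    return edge


def _matched(src: Set[Pixel], dst: Set[Pixel], tol: int) -> int:
    """Count pixels of src lying within `tol` (Chebyshev distance) of some pixel in dst."""
    return sum(
        any(max(abs(r - r2), abs(c - c2)) <= tol for r2, c2 in dst) for r, c in src
    )


def boundary_f(pred: Mask, gt: Mask, tol: int = 1) -> float:
    """F: harmonic mean of boundary precision and recall."""
    bp, bg = boundary(pred), boundary(gt)
    if not bp and not bg:
        return 1.0
    if not bp or not bg:
        return 0.0
    precision = _matched(bp, bg, tol) / len(bp)
    recall = _matched(bg, bp, tol) / len(bg)
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)
```

The tolerance is what separates the two metrics in practice: a mask shifted by one pixel loses a large share of J but can keep a perfect F when `tol=1`.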

Team Profile

TAM comes from the Visual Intelligence and Perception (VIP) Lab at the Southern University of Science and Technology.

The lab's research directions include text-image-audio multimodal learning, multimodal perception, reinforcement learning, and visual defect detection.

So far, the team has published more than 30 papers and obtained 5 patents.

The team is led by Zheng Feng, an associate professor at the Southern University of Science and Technology. He received his PhD from the University of Sheffield in the UK and has worked at the Institute of Advanced Studies of the Chinese Academy of Sciences, Tencent Youtu, and other institutions. He joined the Southern University of Science and Technology in 2018, where he was promoted to associate professor.

Paper:

https://arxiv.org/abs/2304.11968

GitHub page:

https://github.com/gaomingqi/Track-Anything

Reference link:

https://twitter.com/bilawalsidhu/status/1650710123399233536?s=20

The above is the detailed content of Southern Science and Technology's Black Technology: Eliminate video characters with one click, the special effects artist's savior is here!. For more information, please follow other related articles on the PHP Chinese website!

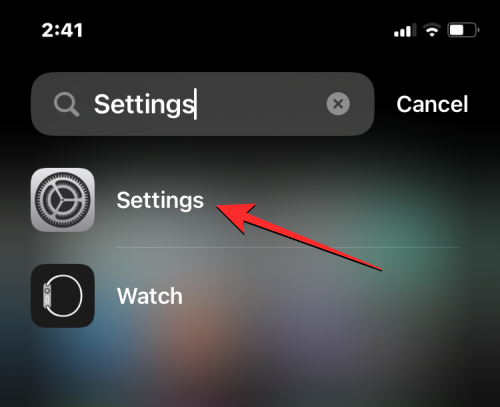

iOS 17:如何在iPhone上的相机应用程序中锁定白平衡Sep 20, 2023 am 08:41 AM

iOS 17:如何在iPhone上的相机应用程序中锁定白平衡Sep 20, 2023 am 08:41 AM白平衡是一项相机功能,可根据照明条件调整演色性。此iPhone设置可确保白色物体在照片或视频中显示为白色,从而补偿由于典型照明而导致的任何颜色变化。如果您想在整个视频拍摄过程中保持白平衡一致,可以将其锁定。在这里,我们将指导您如何为iPhone视频保持固定的白平衡。如何在iPhone上锁定白平衡必需:iOS17更新。(检查“常规>软件更新”下的“设置”>)。在iPhone上打开“设置”应用。在“设置”中,向下滚动并选择“相机”。在“相机”屏幕上,点击“录制视频”。在这

使用Python访问各种音频和视频文件的元数据Sep 05, 2023 am 11:41 AM

使用Python访问各种音频和视频文件的元数据Sep 05, 2023 am 11:41 AM我们可以使用Mutagen和Python中的eyeD3模块访问音频文件的元数据。对于视频元数据,我们可以使用电影和Python中的OpenCV库。元数据是提供有关其他数据(例如音频和视频数据)的信息的数据。音频和视频文件的元数据包括文件格式、文件分辨率、文件大小、持续时间、比特率等。通过访问这些元数据,我们可以更有效地管理媒体并分析元数据以获得一些有用的信息。在本文中,我们将了解Python提供的一些用于访问音频和视频文件元数据的库或模块。访问音频元数据一些用于访问音频文件元数据的库是-使用诱变

ppt视频换个电脑就无法播放怎么办Feb 23, 2023 am 11:29 AM

ppt视频换个电脑就无法播放怎么办Feb 23, 2023 am 11:29 AMppt视频换个电脑就无法播放是因为路径不对,其解决办法:1、将PPT和视频放入U盘的同一个文件夹内;2、双击打开该PPT,找到想要插入视频的页数,点击“插入”按钮;3、在弹出的对话框内选择想要插入的视频即可。

视频切片授权什么意思Sep 27, 2023 pm 02:55 PM

视频切片授权什么意思Sep 27, 2023 pm 02:55 PM视频切片授权是指在视频服务中,将视频文件分割成多个小片段并进行授权的过程。这种授权方式能提供更好的视频流畅性、适应不同网络条件和设备,并保护视频内容的安全性。通过视频切片授权,用户可以更快地开始播放视频,减少等待和缓冲时间,视频切片授权可以根据网络条件和设备类型动态调整视频参数,提供最佳的播放效果,视频切片授权还有助于保护视频内容的安全性,防止未经授权的用户进行盗播和侵权行为。

如何使用Golang将多个图片转换为视频Aug 22, 2023 am 11:29 AM

如何使用Golang将多个图片转换为视频Aug 22, 2023 am 11:29 AM如何使用Golang将多个图片转换为视频随着互联网的发展和智能设备的普及,视频成为了一种重要的媒体形式。有时候我们可能需要将多个图片转换为视频,以便展示图片的连续变化或者制作幻灯片。本文将介绍如何使用Golang编程语言将多个图片转换为视频。在开始之前,请确保你已经安装了Golang以及相关的开发环境。步骤1:导入相关的包首先,我们需要导入一些相关的Gola

iOS 17:如何录制FaceTime视频或音频消息Sep 21, 2023 am 10:13 AM

iOS 17:如何录制FaceTime视频或音频消息Sep 21, 2023 am 10:13 AM在iOS17中,当您FaceTime通话某人无法接听时,您可以留下视频或音频信息,具体取决于您使用的通话方式。如果您使用的是FaceTime视频,则可以留下视频信息,如果您使用的是FaceTime音频,则可以留下音频信息。您所要做的就是以通常的方式与某人进行FaceTime。未接来电后,您将看到“录制视频”选项,该选项可让您创建消息。录制完视频后,您会看到视频的预览,如果效果不佳,还可以选择重新录制。以下是在运行iOS17的设备上留下FaceTime消息的工作原理,以未接视频通话为例,在Face

Vue 中如何实现视频播放器?Jun 25, 2023 am 09:46 AM

Vue 中如何实现视频播放器?Jun 25, 2023 am 09:46 AM随着互联网的不断发展,视频已经成为了人们日常生活中必不可少的娱乐方式之一。为了给用户提供更好的视频观看体验,许多网站和应用程序都开始使用视频播放器,使得用户可以在网页中直接观看视频。而Vue作为目前非常流行的前端框架之一,也提供了很多简便且实用的方法来实现视频播放器。下面,我们将简要介绍一下在Vue中实现视频播放器的方法。一、使用HTML5的video标签H

电脑打不开视频是什么原因Jun 27, 2023 pm 01:42 PM

电脑打不开视频是什么原因Jun 27, 2023 pm 01:42 PM电脑打不开视频原因有:1、视频文件不完整;2、没有支持此视频的播放器;3、视频文件的后缀被修改过;4、视频文件关联不正确;5、没有使用插件。

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

PhpStorm Mac version

The latest (2018.2.1) professional PHP integrated development tool

Atom editor mac version download

The most popular open source editor

SublimeText3 Linux new version

SublimeText3 Linux latest version

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),