Translator | Li Rui

Reviewer | Sun Shujuan

What is text classification?

Text classification is the process of assigning text to one or more categories so that it can be organized, structured, and filtered by any parameter. For example, text classification is used on legal documents, medical studies and files, or simply on product reviews. Data is more important than ever; many businesses spend huge sums of money trying to gain as much insight as possible.

With text and document data far more abundant than other data types, new methods of using it are imperative. Since this data is inherently unstructured and extremely rich, organizing it in an easy-to-understand way can significantly increase its value. Text classification with machine learning can structure relevant text automatically, faster and more cost-effectively.

This article defines text classification, explains how it works, covers some of the best-known algorithms, and lists datasets that may be helpful in starting your text classification journey.

Why use machine learning text classification?

- Scale: Manual data entry, analysis, and organization are tedious and slow. Machine learning allows automated analysis regardless of the size of the data set.

- Consistency: Human error occurs due to fatigue and desensitization to the material in the data set. Because an algorithm applies the same criteria to every item, machine learning improves consistency and, with it, accuracy.

- Speed: Sometimes you may need to access and organize data quickly. Machine learning algorithms can parse data and deliver information in an easy-to-understand way.

6 General Steps

Some basic methods can classify text documents to a certain extent, but the most commonly used methods rely on machine learning. Text classification models need to go through six basic steps before they can be deployed.

1. Provide high-quality data sets

Datasets are the raw data that serve as the data source for a model. Text classification typically uses supervised machine learning algorithms, so the model is given labeled data. Labeled data is data in which each example has already been tagged with the category the algorithm should learn to predict.

2. Filter and process data

Since the machine learning model can only understand numerical values, the provided text needs to be tokenized and text embedded so that the model can correctly identify the data.

Tokenization is the process of splitting a text document into smaller parts called tokens. Tokens can be whole words, subwords, or individual characters. For example, the word "smarter" can be tokenized like this:

- Word token: Smarter

- Subword tokens: Smart-er

- Character tokens: S-m-a-r-t-e-r
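The three granularities above can be sketched in a few lines of Python. This is only an illustration: the function names and the small suffix list are assumptions made for this example, and real subword tokenizers learn their splits from data rather than using a fixed rule.

```python
def word_tokens(text):
    # Split on whitespace; production tokenizers also handle punctuation.
    return text.split()


def subword_tokens(word, suffixes=("er", "ing", "ed")):
    # Toy rule (an assumption for this sketch): peel off one known suffix.
    for suffix in suffixes:
        if word.endswith(suffix) and len(word) > len(suffix):
            return [word[:-len(suffix)], suffix]
    return [word]


def char_tokens(word):
    # Every character becomes its own token.
    return list(word)


print(word_tokens("Work smarter"))  # ['Work', 'smarter']
print(subword_tokens("Smarter"))    # ['Smart', 'er']
print(char_tokens("Smarter"))       # ['S', 'm', 'a', 'r', 't', 'e', 'r']
```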

Why is tokenization important? Because text classification models process data at the token level and cannot take in complete sentences as they are. The raw data set also needs further processing before the model can digest it easily: remove unnecessary features, filter out null and infinite values, and so on. Shuffling the entire dataset helps prevent bias during the training phase.

3. Split the data set into training and test data sets

Train the model on 80% of the data set and retain the remaining 20% to test the algorithm's accuracy.
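A minimal sketch of such a split, using only the standard library. The helper name `train_test_split`, the fixed seed, and the placeholder documents are assumptions for this example; libraries such as scikit-learn ship their own, more complete version.

```python
import random


def train_test_split(rows, test_fraction=0.2, seed=0):
    # Copy and shuffle so the split does not depend on the original order.
    rows = list(rows)
    random.Random(seed).shuffle(rows)
    n_test = int(round(len(rows) * test_fraction))
    # The first n_test shuffled rows are held out; the rest are for training.
    return rows[n_test:], rows[:n_test]


# Placeholder (text, label) pairs used only to show the proportions.
data = [(f"document {i}", i % 2) for i in range(10)]
train, test = train_test_split(data)
print(len(train), len(test))  # 8 2
```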

4. Training Algorithm

By running the model using a training dataset, the algorithm can classify the provided text into different categories by identifying hidden patterns and insights.

5. Test and check the performance of the model

Next, test the model using the test data set from step 3. The labels of the test data are withheld from the model so that its predictions can be compared against the actual results. For the test to be accurate, the test data set must contain new cases (data that differs from the training data set); otherwise an overfitted model would go undetected.
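Comparing predictions against the withheld labels can be as simple as counting matches. The sketch below assumes the predictions already exist as a list; the label values are made up for illustration and do not come from a real model.

```python
def accuracy(true_labels, predicted_labels):
    # Fraction of test examples whose predicted label matches the true one.
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label lists must have the same length")
    if not true_labels:
        raise ValueError("cannot score an empty test set")
    correct = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return correct / len(true_labels)


# Illustrative labels only.
held_out = ["spam", "ham", "ham", "spam", "ham"]
predicted = ["spam", "ham", "spam", "spam", "ham"]
print(accuracy(held_out, predicted))  # 0.8
```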

6. Tuning the model

Tune the machine learning model by adjusting its hyperparameters without overfitting or producing high variance. A hyperparameter is a parameter whose value controls the learning process of the model. After tuning, the model is ready to deploy.

How does text classification work?

Word Embedding

As mentioned in the filtering step above, machine learning and deep learning algorithms can only understand numerical values, so developers must apply word embedding techniques to the data set. Word embedding is the process of representing words as real-valued vectors that encode the meaning of a given word.

- Word2Vec: This is an unsupervised word embedding method developed by Google. It uses neural networks to learn from large text datasets. As the name suggests, the Word2Vec method converts each word into a vector.

- GloVe: Short for Global Vectors, it is an unsupervised machine learning model used to obtain vector representations of words. Similar to the Word2Vec method, the GloVe algorithm maps words into a meaningful space, where the distance between words is related to semantic similarity.

- TF-IDF: Short for Term Frequency-Inverse Document Frequency, this is a weighting scheme, often grouped with word embedding methods, that evaluates how important a word is to a given document. TF-IDF assigns each word a score that represents its importance to a document within a set of documents.
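Of the three methods above, TF-IDF is simple enough to compute by hand. The sketch below assumes one common variant: term frequency is the word count divided by document length, and inverse document frequency is the natural log of (number of documents / documents containing the word). Libraries often use smoothed variants that give slightly different numbers. The function name and sample sentences are illustrative.

```python
import math
from collections import Counter


def tf_idf(documents):
    # Whitespace tokenization keeps the sketch short.
    tokenized = [doc.lower().split() for doc in documents]
    n_docs = len(tokenized)

    # Document frequency: in how many documents does each term appear?
    doc_freq = Counter()
    for tokens in tokenized:
        doc_freq.update(set(tokens))

    scores = []
    for tokens in tokenized:
        counts = Counter(tokens)
        scores.append({
            term: (count / len(tokens)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return scores


docs = ["the movie was great", "the movie was boring", "the food was great"]
scores = tf_idf(docs)
print(scores[1]["the"])     # 0.0, because "the" appears in every document
print(scores[1]["boring"])  # highest score in document 1: the term is unique to it
```

A word that occurs in every document scores zero, while a word that is frequent in one document and rare elsewhere scores high, which is the behavior the TF-IDF description above relies on.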

Text Classification Algorithms

The following are three of the most famous and effective text classification algorithms. Keep in mind that each method has more specialized variants.

1. Linear Support Vector Machine

The linear support vector machine is considered one of the best text classification algorithms currently available. It plots the data points according to the given features and then draws a line of best fit that separates the data into categories.

2. Logistic regression

Logistic regression is a subcategory of regression, mainly focusing on classification problems. It uses decision boundaries, regression, and distance to evaluate and classify data sets.

3. Naive Bayes

The Naive Bayes algorithm classifies different objects based on the features provided by the objects. Group boundaries are then drawn to infer these group classifications for further resolution and classification.
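Of the three algorithms above, Naive Bayes is the easiest to sketch without a library. The example below assumes the multinomial variant with add-one (Laplace) smoothing and whitespace tokenization. The class name and the four training sentences are invented for illustration, and a data set this small only demonstrates the mechanics.

```python
import math
from collections import Counter, defaultdict


class NaiveBayesTextClassifier:
    def fit(self, texts, labels):
        self.class_counts = Counter(labels)       # documents per class
        self.word_counts = defaultdict(Counter)   # word counts per class
        self.vocabulary = set()
        for text, label in zip(texts, labels):
            tokens = text.lower().split()
            self.word_counts[label].update(tokens)
            self.vocabulary.update(tokens)
        return self

    def predict(self, text):
        tokens = text.lower().split()
        total_docs = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, doc_count in self.class_counts.items():
            # Start from the log prior of the class.
            score = math.log(doc_count / total_docs)
            total_words = sum(self.word_counts[label].values())
            for token in tokens:
                # Add-one smoothing so unseen words do not zero out a class.
                count = self.word_counts[label][token] + 1
                score += math.log(count / (total_words + len(self.vocabulary)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Invented training data, for illustration only.
texts = [
    "win free money now",
    "free prize claim now",
    "meeting at noon tomorrow",
    "lunch meeting with the team",
]
labels = ["spam", "spam", "ham", "ham"]

model = NaiveBayesTextClassifier().fit(texts, labels)
print(model.predict("claim your free prize"))  # spam
print(model.predict("team meeting tomorrow"))  # ham
```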

What issues should be avoided when setting up text classification

1. Overcrowded training data

Providing low-quality data to the algorithm will lead to poor future predictions. A common problem for machine learning practitioners is that models are fed too much data, including unnecessary features. Excessive use of irrelevant data will lead to a decrease in model performance. When it comes to selecting and organizing data sets, less is more.

An incorrect ratio of training to test data can greatly affect the performance of the model, as can poor shuffling and filtering of the data. When the data points are accurate and free of unwanted factors, the trained model performs more efficiently.

When training the model, select a data set that meets the model requirements, filter unnecessary values, shuffle the data set, and test the accuracy of the final model. Simpler algorithms require less computing time and resources, and the best models are the simplest ones that can solve complex problems.

2. Overfitting and underfitting

Once training passes its peak, the accuracy of the model on unseen data gradually decreases as training continues. This is called overfitting: because training lasts too long, the model starts learning unintended patterns. Be wary of very high accuracy on the training set, as the main goal is a model whose accuracy holds on the test set (data the model has not seen before).

On the other hand, underfitting means that the trained model still has room for improvement and has not yet reached its maximum potential. Poorly trained models stem from too little training or from over-regularization. This exemplifies what it means to have concise and precise data.

Finding the sweet spot is crucial when training a model. Splitting the dataset 80/20 is a good start, but tuning parameters may be what a particular model needs to perform optimally.
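One common way to look for that sweet spot is early stopping: track accuracy on held-out data after each training epoch and keep the epoch where it peaked. The sketch below is only an illustration of that idea. The `patience` parameter, the function name, and the accuracy values are assumptions made up for this example, not measurements.

```python
def best_epoch(validation_accuracy, patience=2):
    # Return the index of the best epoch, stopping the scan once accuracy
    # has failed to improve for `patience` consecutive epochs.
    best_index, best_value = 0, validation_accuracy[0]
    for index, value in enumerate(validation_accuracy):
        if value > best_value:
            best_index, best_value = index, value
        elif index - best_index >= patience:
            break
    return best_index


# Made-up accuracy per epoch: rises (underfitting), peaks, then declines (overfitting).
history = [0.61, 0.70, 0.78, 0.81, 0.80, 0.79, 0.77]
print(best_epoch(history))  # 3
```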

3. Incorrect text format

Although not mentioned in detail in this article, using the correct text format for text classification problems will yield better results. Some methods of representing text data include GloVe, Word2Vec, and embedding models.

Using the correct text format will improve the way the model reads and interprets the data set, which in turn helps it understand patterns.

Text Classification Application

- Filter spam: By searching for certain keywords, emails can be classified as useful or spam.

- Categorizing Content: By classifying related text (such as item names and descriptions), an application can sort items (articles, books, etc.) into categories. This improves the user experience because it makes the database easier to navigate.

- Identifying Hate Speech: Some social media companies use text classification to detect and ban offensive comments or posts.

- Marketing and Advertising: By understanding how users respond to certain products, businesses can make specific changes to satisfy their customers. They can also recommend certain products based on user reviews of similar products. Text classification algorithms can be used in conjunction with recommender systems, another machine learning technique used by many websites to gain repeat business.

Popular text classification datasets

With a large number of labeled and ready-to-use datasets, you can search for the perfect dataset that meets your model requirements at any time.

While you may have some problems deciding which one to use, some of the best-known datasets available to the public are recommended below.

- IMDB Dataset

- Amazon Reviews Dataset

- Yelp Reviews Dataset

- SMS Spam Collection

- OpinRank Review Dataset

- Twitter US Airline Sentiment Dataset

- Hate Speech and Offensive Language Dataset

- Clickbait Dataset

Websites like Kaggle host datasets on a wide range of topics. You can try running a model on several of the above data sets for practice.

Text Classification in Machine Learning

Machine learning has had a huge impact over the past decade, and businesses are trying every possible way to use it to automate processes. The text in reviews, posts, articles, journals, and documents is invaluable. By using text classification in a variety of creative ways to extract user insights and patterns, businesses can make data-backed decisions, and professionals can access and learn valuable information faster than ever before.

Original title: What Is Text Classification?, author: Kevin Vu

The above is the detailed content of What is text classification?. For more information, please follow other related articles on the PHP Chinese website!

10 Generative AI Coding Extensions in VS Code You Must ExploreApr 13, 2025 am 01:14 AM

10 Generative AI Coding Extensions in VS Code You Must ExploreApr 13, 2025 am 01:14 AMHey there, Coding ninja! What coding-related tasks do you have planned for the day? Before you dive further into this blog, I want you to think about all your coding-related woes—better list those down. Done? – Let’

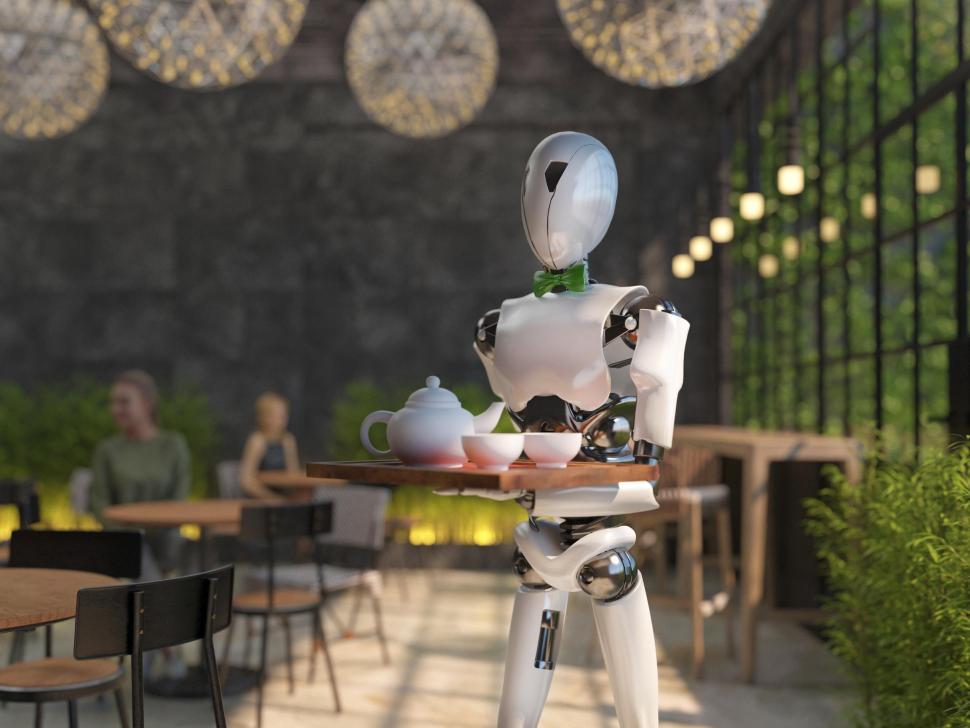

Cooking Up Innovation: How Artificial Intelligence Is Transforming Food ServiceApr 12, 2025 pm 12:09 PM

Cooking Up Innovation: How Artificial Intelligence Is Transforming Food ServiceApr 12, 2025 pm 12:09 PMAI Augmenting Food Preparation While still in nascent use, AI systems are being increasingly used in food preparation. AI-driven robots are used in kitchens to automate food preparation tasks, such as flipping burgers, making pizzas, or assembling sa

Comprehensive Guide on Python Namespaces & Variable ScopesApr 12, 2025 pm 12:00 PM

Comprehensive Guide on Python Namespaces & Variable ScopesApr 12, 2025 pm 12:00 PMIntroduction Understanding the namespaces, scopes, and behavior of variables in Python functions is crucial for writing efficiently and avoiding runtime errors or exceptions. In this article, we’ll delve into various asp

A Comprehensive Guide to Vision Language Models (VLMs)Apr 12, 2025 am 11:58 AM

A Comprehensive Guide to Vision Language Models (VLMs)Apr 12, 2025 am 11:58 AMIntroduction Imagine walking through an art gallery, surrounded by vivid paintings and sculptures. Now, what if you could ask each piece a question and get a meaningful answer? You might ask, “What story are you telling?

MediaTek Boosts Premium Lineup With Kompanio Ultra And Dimensity 9400Apr 12, 2025 am 11:52 AM

MediaTek Boosts Premium Lineup With Kompanio Ultra And Dimensity 9400Apr 12, 2025 am 11:52 AMContinuing the product cadence, this month MediaTek has made a series of announcements, including the new Kompanio Ultra and Dimensity 9400 . These products fill in the more traditional parts of MediaTek’s business, which include chips for smartphone

This Week In AI: Walmart Sets Fashion Trends Before They Ever HappenApr 12, 2025 am 11:51 AM

This Week In AI: Walmart Sets Fashion Trends Before They Ever HappenApr 12, 2025 am 11:51 AM#1 Google launched Agent2Agent The Story: It’s Monday morning. As an AI-powered recruiter you work smarter, not harder. You log into your company’s dashboard on your phone. It tells you three critical roles have been sourced, vetted, and scheduled fo

Generative AI Meets PsychobabbleApr 12, 2025 am 11:50 AM

Generative AI Meets PsychobabbleApr 12, 2025 am 11:50 AMI would guess that you must be. We all seem to know that psychobabble consists of assorted chatter that mixes various psychological terminology and often ends up being either incomprehensible or completely nonsensical. All you need to do to spew fo

The Prototype: Scientists Turn Paper Into PlasticApr 12, 2025 am 11:49 AM

The Prototype: Scientists Turn Paper Into PlasticApr 12, 2025 am 11:49 AMOnly 9.5% of plastics manufactured in 2022 were made from recycled materials, according to a new study published this week. Meanwhile, plastic continues to pile up in landfills–and ecosystems–around the world. But help is on the way. A team of engin

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Zend Studio 13.0.1

Powerful PHP integrated development environment

SublimeText3 Linux new version

SublimeText3 Linux latest version

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software

Notepad++7.3.1

Easy-to-use and free code editor

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.