Faithfully restoring complex objects and high-frequency details: 4K-NeRF high-fidelity view synthesis is here

Ultra-high resolution is welcomed by many researchers as a standard for recording and displaying high-quality images and videos. Compared with lower resolutions (the 1K HD format), scenes captured at high resolution usually show very clear detail, because the information of a single pixel is amplified by a small patch. However, many challenges remain in applying this technology to image processing and computer vision.

In this article, researchers from Alibaba focus on the novel view synthesis task and propose a framework called 4K-NeRF, a NeRF-based volume rendering method that achieves high-fidelity view synthesis at 4K ultra-high resolution.

Paper address: https://arxiv.org/abs/2212.04701

Project homepage: https://github.com/frozoul/4K-NeRF

Without further ado, let's take a look at the effect first (the videos below have been downsampled; for the original 4K video, please refer to the original project).

Method

Next, let's take a look at how this research was implemented.

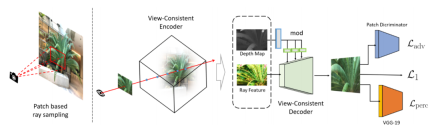

4K-NeRF pipeline (as shown below): using a patch-based ray sampling technique, a VC-Encoder (View-Consistent, based on DVGO) and a VC-Decoder are trained jointly. The VC-Encoder encodes three-dimensional geometric information in a lower-resolution space, and the VC-Decoder then produces high-frequency, finely detailed, high-quality renderings with enhanced view consistency.

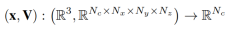

This study instantiates the encoder based on the formulation defined in DVGO [32]; the learned voxel grid-based representation explicitly encodes the geometry:

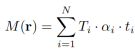

For each sampling point, the density estimate obtained by trilinear interpolation is passed through a softplus activation function to produce the volume density value.
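As a rough illustration of this density lookup, the sketch below interpolates a density voxel grid trilinearly and then applies softplus. It is a minimal NumPy sketch rather than the authors' code: the function names, the index-space coordinate convention, and the absence of any density bias or scaling are all assumptions.

```python
import numpy as np

def softplus(x):
    # Numerically stable softplus: log(1 + exp(x)).
    return np.logaddexp(0.0, x)

def trilinear_interpolate(grid, point):
    """Interpolate a scalar voxel grid at a continuous point given in
    voxel-index coordinates (hypothetical convention for this sketch).
    Each grid dimension must have at least two voxels."""
    point = np.asarray(point, dtype=float)
    upper = np.array(grid.shape) - 1
    point = np.clip(point, 0.0, upper)
    # Lower corner of the enclosing cell, kept inside the grid.
    lo = np.minimum(np.floor(point).astype(int), upper - 1)
    t = point - lo  # fractional offsets in [0, 1]
    value = 0.0
    for dx in (0, 1):
        for dy in (0, 1):
            for dz in (0, 1):
                weight = ((t[0] if dx else 1.0 - t[0])
                          * (t[1] if dy else 1.0 - t[1])
                          * (t[2] if dz else 1.0 - t[2]))
                value += weight * grid[lo[0] + dx, lo[1] + dy, lo[2] + dz]
    return value

def density_at(grid, point):
    # Raw interpolated value -> non-negative volume density.
    return softplus(trilinear_interpolate(grid, point))
```

Softplus keeps the density non-negative while staying smooth, which is why it is a common choice for this activation.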

Color is estimated using a small MLP:

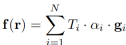

In this way, the feature value of each ray (or pixel) can be obtained by accumulating the features of the sampling points along the ray r.
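The accumulation along a ray can be sketched with the standard NeRF-style compositing weights. This is an illustrative NumPy sketch under the assumption that 4K-NeRF uses the usual alpha compositing; `ray_weights` and `accumulate_features` are hypothetical names, and `delta` stands for the spacing between consecutive samples.

```python
import numpy as np

def ray_weights(sigma, delta):
    """Compositing weights w_i = T_i * alpha_i for the samples on one ray.

    sigma: (n_samples,) volume densities
    delta: (n_samples,) distances between consecutive samples
    """
    alpha = 1.0 - np.exp(-sigma * delta)
    # T_i is the transmittance before sample i: prod_{j<i} (1 - alpha_j).
    transmittance = np.concatenate(([1.0], np.cumprod(1.0 - alpha)[:-1]))
    return transmittance * alpha

def accumulate_features(sigma, delta, features):
    """Weighted sum of per-sample features, shape (n_samples, C) -> (C,)."""
    return ray_weights(sigma, delta) @ features
```

A sample behind an opaque one receives zero weight, so the accumulated feature is dominated by the first surface the ray hits.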

To better exploit the geometric properties embedded in the VC-Encoder, this study also generates a depth map by estimating the depth of each ray r along the sampled ray axis. The estimated depth map provides a strong guide to the three-dimensional structure of the scene produced by the encoder above.

The VC-Decoder is built by stacking blocks (with neither non-parametric normalization nor downsampling operations) interleaved with upsampling operations. In particular, instead of simply concatenating the feature F and the depth map M, this study takes the depth signal in the depth map and injects it into each block through a learned transformation that modulates the block activations.
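The article does not give the exact form of the depth estimate or of the learned transformation, so the following is only a sketch under stated assumptions: depth is taken as the weight-averaged sample distance along the ray, and the modulation is a per-channel affine (scale and shift) transform driven by depth. All names and parameters here are hypothetical.

```python
import numpy as np

def expected_depth(weights, t):
    """Depth of one ray as the compositing-weighted mean of sample distances.

    weights: (n_samples,) compositing weights along the ray
    t:       (n_samples,) distance of each sample from the camera
    """
    return float(np.sum(weights * t))

def modulate(features, depth, w_scale, b_scale, w_shift, b_shift):
    """Modulate block activations with the depth signal (assumed affine form).

    features: (H, W, C) block activations F
    depth:    (H, W) depth map M
    w_*, b_*: (C,) parameters standing in for the learned transformation
    """
    d = depth[..., None]            # (H, W, 1), broadcast over channels
    scale = d * w_scale + b_scale   # (H, W, C)
    shift = d * w_shift + b_shift   # (H, W, C)
    return features * (1.0 + scale) + shift
```

With all parameters at zero the block passes its activations through unchanged, so in this sketch the depth signal only alters the features to the extent that the transformation has learned to use it.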

Unlike the pixel-level mechanism in traditional NeRF methods, the method in this study aims to capture the spatial information between rays (pixels), so the random ray sampling strategy used in NeRF is not suitable here. Instead, this study proposes a patch-based ray sampling training strategy that makes it easier to capture the spatial dependence between ray features. During training, each training-view image is first divided into patches p of size N_p×N_p, which ensures that the sampling probability over pixels is uniform. When the image dimensions are not exactly divisible by the patch size, the patches at the border are truncated at the image edge, which yields the set of training patches. One (or more) patches are then randomly selected from the set, and the rays of the pixels in the selected patches form the mini-batch for each iteration.
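The patch-based sampling strategy can be sketched as follows. This is a minimal NumPy illustration, not the project's implementation: `patch_grid` and `sample_patch_rays` are hypothetical names, and truncating border patches at the image edge is one reading of the description above.

```python
import numpy as np

def patch_grid(height, width, patch_size):
    """Tile an image into (top, left, h, w) patches; border patches are
    truncated at the image edge so every pixel belongs to exactly one patch."""
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            h = min(patch_size, height - top)
            w = min(patch_size, width - left)
            patches.append((top, left, h, w))
    return patches

def sample_patch_rays(patches, rng):
    """Pick one patch at random and return the (row, col) pixel coordinates
    of its rays, shape (h * w, 2), in row-major order."""
    top, left, h, w = patches[rng.integers(len(patches))]
    ys, xs = np.meshgrid(np.arange(top, top + h),
                         np.arange(left, left + w), indexing="ij")
    return np.stack([ys.ravel(), xs.ravel()], axis=-1)
```

For example, `sample_patch_rays(patch_grid(2160, 3840, 64), np.random.default_rng(0))` returns the pixel coordinates of one mini-batch; because the rays keep their row-major layout, the rendered features can be reshaped back into a patch for the decoder and the patch-level losses.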

To counter blurred or over-smoothed fine details, this research adds an adversarial loss and a perceptual loss to regularize fine-detail synthesis. The perceptual loss estimates the similarity between the predicted patch $\hat{p}$ and the ground truth $p$ in the feature space of a pre-trained 19-layer VGG network.

This study uses an $\ell_1$ loss instead of MSE to supervise the reconstruction of high-frequency details.

In addition, the study adds an auxiliary MSE loss, and the final total loss combines all of these terms.
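How such terms might be combined is sketched below. The weighted-sum form, the weight values, the use of an L1 reconstruction term, and the mean-squared distance between feature maps for the perceptual term are all assumptions made for illustration. The VGG features and the adversarial term are passed in as precomputed inputs rather than computed here.

```python
import numpy as np

def l1_loss(pred, target):
    return float(np.mean(np.abs(pred - target)))

def mse_loss(pred, target):
    return float(np.mean((pred - target) ** 2))

def total_loss(pred, target, feat_pred, feat_target, adversarial, weights):
    """Weighted sum of the patch-level loss terms (hypothetical form).

    pred, target:           rendered and ground-truth patches
    feat_pred, feat_target: their features from a pre-trained network
    adversarial:            scalar generator-side adversarial term
    weights:                dict of placeholder weights keyed like `terms`
    """
    terms = {
        "l1": l1_loss(pred, target),
        "mse": mse_loss(pred, target),
        "perceptual": mse_loss(feat_pred, feat_target),
        "adversarial": float(adversarial),
    }
    total = sum(weights[name] * value for name, value in terms.items())
    return total, terms
```

Returning the individual terms alongside the total makes it easy to log each one separately and see which loss dominates during training.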

Experimental results

Qualitative analysis

The experiment compares 4K-NeRF with other models. Methods based on ordinary NeRF show varying degrees of detail loss and blurring. In contrast, 4K-NeRF delivers high-quality photorealistic rendering of these complex and high-frequency details, even on scenes with a limited training field of view.

Quantitative analysis

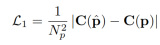

This study is compared with several current methods on 4K data, including Plenoxels, DVGO, JaxNeRF, MipNeRF-360 and NeRF-SR. Besides image-restoration metrics, the experiment also reports inference time and cache memory for a comprehensive evaluation. The results are as follows:

Although 4K-NeRF's results differ little from those of some methods on some metrics, it benefits from its voxel-based representation and achieves striking inference efficiency and memory cost, rendering a 4K image in 300 ms.

Summary and Future Outlook

This study explores the capability of NeRF to model fine details and proposes a novel framework that enhances its ability to recover view-consistent fine details in scenes at extremely high resolution. It also introduces a pair of encoder-decoder modules that maintain geometric consistency: they model geometric properties effectively in a lower-resolution space and exploit local correlations between geometry-aware features to enhance view consistency in the full-scale space. The patch-based sampling training framework additionally allows the method to integrate supervision from perception-oriented regularization. As future directions, the researchers hope to extend the framework to dynamic scene modeling and to other neural rendering tasks.

The above is the detailed content of God restores complex objects and high-frequency details, 4K-NeRF high-fidelity view synthesis is here. For more information, please follow other related articles on the PHP Chinese website!

ai合并图层的快捷键是什么Jan 07, 2021 am 10:59 AM

ai合并图层的快捷键是什么Jan 07, 2021 am 10:59 AMai合并图层的快捷键是“Ctrl+Shift+E”,它的作用是把目前所有处在显示状态的图层合并,在隐藏状态的图层则不作变动。也可以选中要合并的图层,在菜单栏中依次点击“窗口”-“路径查找器”,点击“合并”按钮。

ai橡皮擦擦不掉东西怎么办Jan 13, 2021 am 10:23 AM

ai橡皮擦擦不掉东西怎么办Jan 13, 2021 am 10:23 AMai橡皮擦擦不掉东西是因为AI是矢量图软件,用橡皮擦不能擦位图的,其解决办法就是用蒙板工具以及钢笔勾好路径再建立蒙板即可实现擦掉东西。

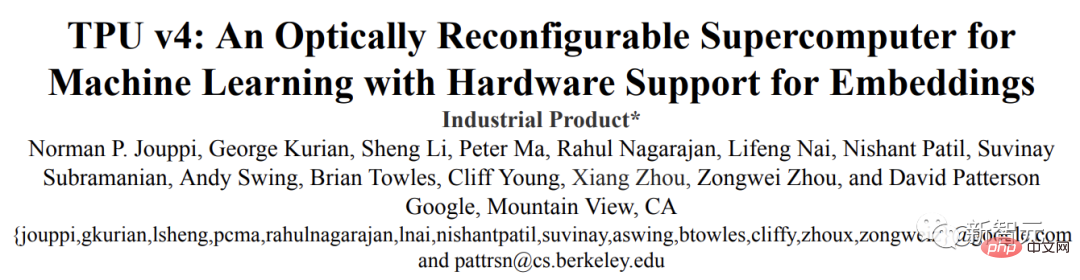

谷歌超强AI超算碾压英伟达A100!TPU v4性能提升10倍,细节首次公开Apr 07, 2023 pm 02:54 PM

谷歌超强AI超算碾压英伟达A100!TPU v4性能提升10倍,细节首次公开Apr 07, 2023 pm 02:54 PM虽然谷歌早在2020年,就在自家的数据中心上部署了当时最强的AI芯片——TPU v4。但直到今年的4月4日,谷歌才首次公布了这台AI超算的技术细节。论文地址:https://arxiv.org/abs/2304.01433相比于TPU v3,TPU v4的性能要高出2.1倍,而在整合4096个芯片之后,超算的性能更是提升了10倍。另外,谷歌还声称,自家芯片要比英伟达A100更快、更节能。与A100对打,速度快1.7倍论文中,谷歌表示,对于规模相当的系统,TPU v4可以提供比英伟达A100强1.

ai可以转成psd格式吗Feb 22, 2023 pm 05:56 PM

ai可以转成psd格式吗Feb 22, 2023 pm 05:56 PMai可以转成psd格式。转换方法:1、打开Adobe Illustrator软件,依次点击顶部菜单栏的“文件”-“打开”,选择所需的ai文件;2、点击右侧功能面板中的“图层”,点击三杠图标,在弹出的选项中选择“释放到图层(顺序)”;3、依次点击顶部菜单栏的“文件”-“导出”-“导出为”;4、在弹出的“导出”对话框中,将“保存类型”设置为“PSD格式”,点击“导出”即可;

ai顶部属性栏不见了怎么办Feb 22, 2023 pm 05:27 PM

ai顶部属性栏不见了怎么办Feb 22, 2023 pm 05:27 PMai顶部属性栏不见了的解决办法:1、开启Ai新建画布,进入绘图页面;2、在Ai顶部菜单栏中点击“窗口”;3、在系统弹出的窗口菜单页面中点击“控制”,然后开启“控制”窗口即可显示出属性栏。

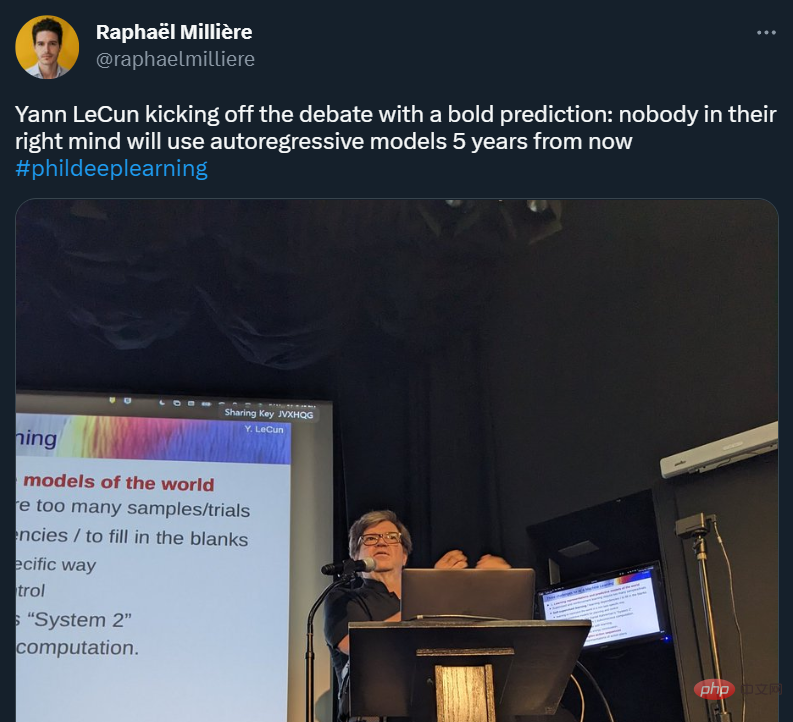

GPT-4的研究路径没有前途?Yann LeCun给自回归判了死刑Apr 04, 2023 am 11:55 AM

GPT-4的研究路径没有前途?Yann LeCun给自回归判了死刑Apr 04, 2023 am 11:55 AMYann LeCun 这个观点的确有些大胆。 「从现在起 5 年内,没有哪个头脑正常的人会使用自回归模型。」最近,图灵奖得主 Yann LeCun 给一场辩论做了个特别的开场。而他口中的自回归,正是当前爆红的 GPT 家族模型所依赖的学习范式。当然,被 Yann LeCun 指出问题的不只是自回归模型。在他看来,当前整个的机器学习领域都面临巨大挑战。这场辩论的主题为「Do large language models need sensory grounding for meaning and u

强化学习再登Nature封面,自动驾驶安全验证新范式大幅减少测试里程Mar 31, 2023 pm 10:38 PM

强化学习再登Nature封面,自动驾驶安全验证新范式大幅减少测试里程Mar 31, 2023 pm 10:38 PM引入密集强化学习,用 AI 验证 AI。 自动驾驶汽车 (AV) 技术的快速发展,使得我们正处于交通革命的风口浪尖,其规模是自一个世纪前汽车问世以来从未见过的。自动驾驶技术具有显着提高交通安全性、机动性和可持续性的潜力,因此引起了工业界、政府机构、专业组织和学术机构的共同关注。过去 20 年里,自动驾驶汽车的发展取得了长足的进步,尤其是随着深度学习的出现更是如此。到 2015 年,开始有公司宣布他们将在 2020 之前量产 AV。不过到目前为止,并且没有 level 4 级别的 AV 可以在市场

ai移动不了东西了怎么办Mar 07, 2023 am 10:03 AM

ai移动不了东西了怎么办Mar 07, 2023 am 10:03 AMai移动不了东西的解决办法:1、打开ai软件,打开空白文档;2、选择矩形工具,在文档中绘制矩形;3、点击选择工具,移动文档中的矩形;4、点击图层按钮,弹出图层面板对话框,解锁图层;5、点击选择工具,移动矩形即可。

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

SecLists

SecLists is the ultimate security tester's companion. It is a collection of various types of lists that are frequently used during security assessments, all in one place. SecLists helps make security testing more efficient and productive by conveniently providing all the lists a security tester might need. List types include usernames, passwords, URLs, fuzzing payloads, sensitive data patterns, web shells, and more. The tester can simply pull this repository onto a new test machine and he will have access to every type of list he needs.

WebStorm Mac version

Useful JavaScript development tools

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software