Technology peripherals

Technology peripherals AI

AI OpenAI's new generation model is an open source explosion! Faster and stronger than Diffusion, a work by Tsinghua alumnus Song Yang

OpenAI's new generation model is an open source explosion! Faster and stronger than Diffusion, a work by Tsinghua alumnus Song YangOpenAI's new generation model is an open source explosion! Faster and stronger than Diffusion, a work by Tsinghua alumnus Song Yang

The field of image generation seems to be changing again.

Just now, OpenAI open sourced a consistency model that is faster and better than the diffusion model:

You can generate high-quality images without adversarial training!

As soon as this blockbuster news was released, it immediately detonated the academic circle.

Although the paper itself was released in a low-key manner in March, at that time it was generally believed that it was just a cutting-edge research of OpenAI and the details would not really be made public.

Unexpectedly, an open source came directly this time. Some netizens immediately started testing the effect and found that it only takes about 3.5 seconds to generate about 64 256×256 images:

Game over!

This is the image effect generated by this netizen, it looks pretty good:

Also Netizens joked: This time OpenAI is finally open!

It is worth mentioning that the first author of the paper, OpenAI scientist Song Yang, is a Tsinghua alumnus. At the age of 16, he entered Tsinghua’s basic mathematics and science class through the Leadership Program.

Let’s take a look at what kind of research OpenAI has open sourced this time.

What kind of blockbuster research has been open sourced?

As an image generation AI, the biggest feature of the Consistency Model is that it is fast and good.

Compared with the diffusion model, it has two main advantages:

First, it can directly generate high-quality image samples without adversarial training.

Secondly, compared to the diffusion model which may require hundreds or even thousands of iterations, the consistency model only needs one or two steps to handle a variety of image tasks - including coloring, Denoising, super-scoring, etc., can all be done in a few steps without requiring explicit training for these tasks. (Of course, if few-sample learning is performed, the generation effect will be better)

So how does the consistency model achieve this effect?

So how does the consistency model achieve this effect?

From a principle point of view, the birth of the consistency model is related to the ODE (ordinary differential equation) generation diffusion model.

As can be seen in the figure, ODE will first convert the image data into noise step by step, and then perform a reverse solution to learn to generate images from the noise.

In this process, the authors tried to map any point on the ODE trajectory (such as Xt, Xt and Xr) to its origin (such as X0) for generative modeling.

Subsequently, this mapped model was named the consistency model because their outputs are all at the same point on the same trajectory:

Based on this The idea is that the consistency model no longer needs to go through long iterations to generate a relatively high-quality image, but can be generated in one step.

Based on this The idea is that the consistency model no longer needs to go through long iterations to generate a relatively high-quality image, but can be generated in one step.

The following figure is a comparison of the consistency model (CD) and the diffusion model (PD) on the image generation index FID.

Among them, PD is the abbreviation of progressive distillation (progressive distillation), a latest diffusion model method proposed by Stanford and Google Brain last year, and CD (consistency distillation) is the consistency distillation method.

It can be seen that in almost all data sets, the image generation effect of the consistency model is better than that of the diffusion model. The only exception is the 256×256 room data set:

In addition, the authors also compared diffusion models, consistency models, GAN and other models on various other data sets:

In addition, the authors also compared diffusion models, consistency models, GAN and other models on various other data sets:

However, some netizens mentioned that the images generated by the open source AI consistency model are still too small:

It’s sad that this open source The images generated by the version are still too small. It would be very exciting if an open source version that generates larger images could be provided.

# Some netizens also speculated that OpenAI may not have been trained yet. But maybe after training, we may not be able to get the code (manual dog head).

But regarding the significance of this work, TechCrunch said:

If you have a bunch of GPUs, then use the diffusion model to iterate more than 1,500 times in a minute or two, and the effect of generating images will certainly be extremely good. OK

But if you want to generate images in real time on your mobile phone or during a chat conversation, then obviously the diffusion model is not the best choice.

The consistency model is the next important move of OpenAI.

I hope OpenAI will open source a wave of image generation AI with higher resolution~

Tsinghua alumnus Song Yang is the first author of the paper

Song Yang is the first author of the paper and is currently a research scientist at OpenAI.

#When he was 14 years old, he was selected into the "Tsinghua University New Centenary Leadership Program" with unanimous votes from 17 judges. In the college entrance examination the following year, he became the top scorer in science in Lianyungang City and was successfully admitted to Tsinghua University.

In 2016, Song Yang graduated from Tsinghua University’s basic mathematics and physics class, and then went to Stanford for further study. In 2022, Song Yang received a PhD in computer science from Stanford and then joined OpenAI.

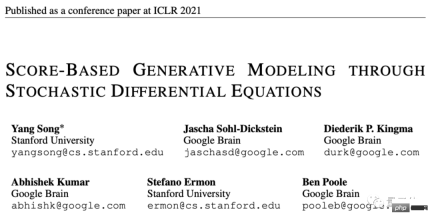

During his doctoral period, his first paper "Score-Based Generative Modeling through Stochastic Differential Equations" also won the ICLR 2021 Outstanding Paper Award.

According to information on his personal homepage, starting from January 2024, Song Yang will officially join the Department of Electronics and Department of Computational Mathematical Sciences at the California Institute of Technology as an assistant professor.

Project address:

https://www.php.cn/link/4845b84d63ea5fa8df6268b8d1616a8f

Paper address:

https://www.php.cn/link/5f25fbe144e4a81a1b0080b6c1032778

Reference link:

[1]https://twitter.com/alfredplpl/status/1646217811898011648

[2]https://twitter.com/_akhaliq/status/1646168119658831874

The above is the detailed content of OpenAI's new generation model is an open source explosion! Faster and stronger than Diffusion, a work by Tsinghua alumnus Song Yang. For more information, please follow other related articles on the PHP Chinese website!

Tool Calling in LLMsApr 14, 2025 am 11:28 AM

Tool Calling in LLMsApr 14, 2025 am 11:28 AMLarge language models (LLMs) have surged in popularity, with the tool-calling feature dramatically expanding their capabilities beyond simple text generation. Now, LLMs can handle complex automation tasks such as dynamic UI creation and autonomous a

How ADHD Games, Health Tools & AI Chatbots Are Transforming Global HealthApr 14, 2025 am 11:27 AM

How ADHD Games, Health Tools & AI Chatbots Are Transforming Global HealthApr 14, 2025 am 11:27 AMCan a video game ease anxiety, build focus, or support a child with ADHD? As healthcare challenges surge globally — especially among youth — innovators are turning to an unlikely tool: video games. Now one of the world’s largest entertainment indus

UN Input On AI: Winners, Losers, And OpportunitiesApr 14, 2025 am 11:25 AM

UN Input On AI: Winners, Losers, And OpportunitiesApr 14, 2025 am 11:25 AM“History has shown that while technological progress drives economic growth, it does not on its own ensure equitable income distribution or promote inclusive human development,” writes Rebeca Grynspan, Secretary-General of UNCTAD, in the preamble.

Learning Negotiation Skills Via Generative AIApr 14, 2025 am 11:23 AM

Learning Negotiation Skills Via Generative AIApr 14, 2025 am 11:23 AMEasy-peasy, use generative AI as your negotiation tutor and sparring partner. Let’s talk about it. This analysis of an innovative AI breakthrough is part of my ongoing Forbes column coverage on the latest in AI, including identifying and explaining

TED Reveals From OpenAI, Google, Meta Heads To Court, Selfie With MyselfApr 14, 2025 am 11:22 AM

TED Reveals From OpenAI, Google, Meta Heads To Court, Selfie With MyselfApr 14, 2025 am 11:22 AMThe TED2025 Conference, held in Vancouver, wrapped its 36th edition yesterday, April 11. It featured 80 speakers from more than 60 countries, including Sam Altman, Eric Schmidt, and Palmer Luckey. TED’s theme, “humanity reimagined,” was tailor made

Joseph Stiglitz Warns Of The Looming Inequality Amid AI Monopoly PowerApr 14, 2025 am 11:21 AM

Joseph Stiglitz Warns Of The Looming Inequality Amid AI Monopoly PowerApr 14, 2025 am 11:21 AMJoseph Stiglitz is renowned economist and recipient of the Nobel Prize in Economics in 2001. Stiglitz posits that AI can worsen existing inequalities and consolidated power in the hands of a few dominant corporations, ultimately undermining economic

What is Graph Database?Apr 14, 2025 am 11:19 AM

What is Graph Database?Apr 14, 2025 am 11:19 AMGraph Databases: Revolutionizing Data Management Through Relationships As data expands and its characteristics evolve across various fields, graph databases are emerging as transformative solutions for managing interconnected data. Unlike traditional

LLM Routing: Strategies, Techniques, and Python ImplementationApr 14, 2025 am 11:14 AM

LLM Routing: Strategies, Techniques, and Python ImplementationApr 14, 2025 am 11:14 AMLarge Language Model (LLM) Routing: Optimizing Performance Through Intelligent Task Distribution The rapidly evolving landscape of LLMs presents a diverse range of models, each with unique strengths and weaknesses. Some excel at creative content gen

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Dreamweaver Mac version

Visual web development tools

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function

SublimeText3 Linux new version

SublimeText3 Linux latest version

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.