Why use nginx?

At present, nginx's main competitor is Apache. Below is a simple comparison of the two to help clarify the advantages of nginx.

1. As a web server:

Compared with Apache, nginx uses fewer resources, supports more concurrent connections, and is more efficient, which makes it especially popular with web hosting providers. Under high connection concurrency, nginx is a good alternative to the Apache server: it is one of the software platforms often chosen by virtual hosting businesses in the United States, and it can sustain up to 50,000 concurrent connections. This is thanks to nginx choosing epoll and kqueue as its development model.

nginx as a load balancing server: nginx can directly serve Rails and PHP programs internally, or it can serve external requests as an HTTP proxy server. nginx is written in C, and its system resource overhead and CPU efficiency are much better than Perlbal's.

2. nginx configuration is simple, apache is complex:

nginx is particularly easy to start and can run almost 24/7 without interruption; even after running for several months it does not need a restart. You can also upgrade the software version without interrupting service.

nginx's static file performance is more than 3 times that of Apache. Apache's PHP support is simpler to set up, whereas nginx has to be used together with other backends. Apache has more components than nginx.

3. The core difference is:

Apache uses a synchronous multi-process model in which one connection corresponds to one process; nginx is asynchronous, and many connections (on the order of 10,000) can correspond to one process.

4. The areas of expertise of the two are:

nginx's strength is handling static requests with low CPU and memory usage, while Apache is suited to handling dynamic requests. A common setup today therefore puts nginx at the front as a reverse proxy to absorb the load, with Apache as the back end handling dynamic requests.

Basic usage of nginx

System platform: CentOS release 6.6 (Final), 64-bit.

1. Install compilation tools and library files

2. Install PCRE first

PCRE is what lets nginx support the rewrite feature. Download the PCRE installation package; download address:

3. Install nginx

Download nginx; download address:

4. nginx configuration

Create the user www for running nginx:

Configure nginx.conf: replace /usr/local/webserver/nginx/conf/nginx.conf with the following content:

Command to check that the configuration file nginx.conf is correct:

5. Start nginx

nginx startup command is as follows:

6. Access the site

Open the configured site's IP address in a browser:

nginx Common Instructions Description

1. main global configuration

Parameters that are independent of the specific service nginx provides at runtime (such as HTTP service or mail proxying), for example the number of worker processes and the user nginx runs as.

worker_processes 2

Set in the top-level main section of the configuration file. It is the number of worker processes; the master process manages the workers, and the workers process requests. This value can simply be set to the number of CPU cores (grep ^processor /proc/cpuinfo | wc -l), which is also what the value auto gives. If SSL and gzip are enabled, it should be set equal to or even double the number of logical CPUs, which can reduce I/O operations. If the nginx server also runs other services, consider reducing it accordingly.

worker_cpu_affinity

Also set in the main section. Under high concurrency, binding workers to CPUs reduces the performance loss caused by switching between cores, such as having to rebuild register state. For example: worker_cpu_affinity 0001 0010 0100 1000; (quad-core).

worker_connections 2048

Set in the events section. This is the maximum number of connections each worker process can handle (open) concurrently, including connections to clients and to proxied back-end servers. When nginx acts as a reverse proxy, the formula is: maximum number of connections = worker_processes * worker_connections / 4, so with 2 worker processes the maximum number of client connections here is 2 * 2048 / 4 = 1024. The value can be raised to 8192 if the situation calls for it, but it must not exceed worker_rlimit_nofile, described next. When nginx acts as an HTTP server, divide by 2 instead of 4.

worker_rlimit_nofile 10240

Set in the main section. It is not set by default and can be raised as high as the operating system's maximum limit of 65535.

use epoll

Set in the events section. On Linux, nginx uses the epoll event model by default, which is why nginx is quite efficient on Linux. On OpenBSD and FreeBSD, nginx uses kqueue, an efficient event model similar to epoll. select is used only when the operating system supports none of these efficient models.
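As a rough illustration of how the main and events directives described above fit together, here is a minimal sketch. It assumes a quad-core Linux machine (to match the worker_cpu_affinity example), so worker_processes is 4 rather than 2; all values are illustrative and not taken from the original configuration.

```nginx
# Main (top-level) context. Values assume a 4-core Linux host.
worker_processes     4;                     # or "auto" to match the number of CPU cores
worker_cpu_affinity  0001 0010 0100 1000;   # pin each of the 4 workers to its own core
worker_rlimit_nofile 10240;                 # open-file limit for each worker process

events {
    use epoll;                 # Linux only; nginx normally selects this by itself
    worker_connections 2048;   # per worker; keep it below worker_rlimit_nofile
}

# Rule of thumb from the text, reverse proxy case:
#   4 workers * 2048 connections / 4 = 2048 concurrent clients
```

Leaving out the use directive is usually fine, since nginx picks the most efficient method available on the platform.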

2. http server

Some configuration parameters related to providing http services. For example: whether to use keepalive, whether to use gzip for compression, etc.

sendfile on

Turns on efficient file transfer mode. The sendfile directive specifies whether nginx calls the sendfile function to output files, which reduces context switches between user space and kernel space. Set it to on for ordinary applications. For applications with heavy disk I/O, such as downloads, it can be set to off to balance disk and network I/O speed and reduce system load.

keepalive_timeout 65: The keep-alive (long connection) timeout, in seconds. This parameter is quite sensitive: it depends on the browser type, the timeout settings of the back-end server, and the operating system settings, and deserves an article of its own. When a keep-alive connection requests a large number of small files, it saves the cost of re-establishing connections. However, if a large file is uploaded and the upload does not finish within 65 seconds, it will fail. If the timeout is set too long and there are many users, keeping connections open for a long time consumes a lot of resources.

send_timeout: Specifies the timeout for sending a response to the client. The timeout applies only to the interval between two successive operations on the connection; if the client shows no activity for longer than this, nginx closes the connection.

client_max_body_size 10m

The maximum size of a single request body the client may send. If you upload larger files, raise this limit.

client_body_buffer_size 128k

The maximum number of bytes the proxy buffers for a client request.
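The basic http directives above could be combined roughly as follows. This is only a sketch: the send_timeout value of 60 is an assumption (the text gives no value), and the other values simply repeat the ones shown above.

```nginx
http {
    sendfile                on;     # let the kernel copy file data straight to the socket
    keepalive_timeout       65;     # seconds a keep-alive connection is held open
    send_timeout            60;     # assumed value; seconds between two writes to the client
    client_max_body_size    10m;    # larger request bodies are rejected with a 413 error
    client_body_buffer_size 128k;   # in-memory buffer for the request body
}
```

These directives can also be overridden per server or per location, for example to allow larger uploads on a single path only.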

Module http_proxy:

This module implements nginx's reverse proxy function, including caching (covered in a separate article).

proxy_connect_timeout 60

Timeout for nginx to connect to the back-end server (proxy connect timeout).

proxy_read_timeout 60

After the connection is established, the timeout between two successive read operations on the back-end server's response (proxy read timeout).

proxy_buffer_size 4k

Sets the size of the buffer in which the proxy server (nginx) stores the header information read from the back-end real server. By default it is the same size as one proxy_buffers buffer, and in practice this value can be set smaller.

proxy_buffers 4 32k

The proxy_buffers buffers. nginx buffers the response from the back-end real server for a single connection; if the average web page is under 32k, set it like this.

proxy_busy_buffers_size 64k

Buffer size under high load (proxy_buffers*2).

proxy_max_temp_file_size

When proxy_buffers cannot hold the back-end server's response, part of it is saved to a temporary file on disk. This value sets the maximum size of that temporary file. The default is 1024m, and it has nothing to do with proxy_cache. Responses larger than this value are passed on from the upstream server without being buffered to disk. Set it to 0 to disable temporary files.

proxy_temp_file_write_size 64k

When nginx buffers proxied server responses to temporary files, this option limits how much data is written to a temporary file at one time. proxy_temp_path (which can also be specified at compile time) sets the directory to write to.

proxy_pass, proxy_redirect see location section.
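Put together, the proxy buffering and timeout directives above might look like the sketch below. The values repeat those listed in the text, except proxy_max_temp_file_size, where the stated default is written out explicitly for illustration.

```nginx
http {
    proxy_connect_timeout      60;      # seconds to establish a connection to the backend
    proxy_read_timeout         60;      # seconds between two reads from the backend
    proxy_buffer_size          4k;      # buffer for the first part of the response (headers)
    proxy_buffers              4 32k;   # 4 buffers of 32k per connection
    proxy_busy_buffers_size    64k;     # buffers that may be busy sending to the client
    proxy_max_temp_file_size   1024m;   # 0 disables buffering to temporary files
    proxy_temp_file_write_size 64k;     # data written to a temporary file at one time
}
```

nginx checks these sizes against each other at startup, so it is worth running the configuration test after changing any one of them.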

Module http_gzip:

gzip on: Turn on gzip compression output to reduce network transmission.

gzip_min_length 1k: Sets the minimum page size, in bytes, for compression. The page size is taken from the Content-Length response header. The default is 20. Setting it above 1k is recommended, because compressing anything smaller than 1k may make it larger.

gzip_buffers 4 16k: Sets the number and size of the buffers used to hold the gzip-compressed output stream. 4 16k means four buffers of 16k each.

gzip_http_version 1.0: Sets the HTTP protocol version to check for. Early browsers did not support gzip compression and users would see garbled characters, so this option was added to support earlier versions. If you use nginx as a reverse proxy and want gzip compression enabled, set it to 1.0, because the proxied communication uses HTTP/1.0.

gzip_comp_level 6: The gzip compression level: 1 gives the lowest compression ratio and the fastest processing, 9 gives the highest compression ratio but the slowest processing (faster transmission at the cost of more CPU).

gzip_types: Lists the MIME types to compress. The "text/html" type is always compressed whether or not it is listed.

gzip_proxied any: Used when nginx works together with a reverse proxy; it determines whether compression is enabled or disabled for proxied requests. nginx treats a request as proxied only when it carries a "Via" header.

gzip_vary on: Relates to the HTTP headers. It adds Vary: Accept-Encoding to the response header, which lets front-end cache servers cache gzip-compressed pages, for example Squid caching data compressed by nginx.
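The gzip directives above can be collected into a single block, roughly as follows. The MIME type list is an assumption chosen for illustration; the remaining values are the ones given in the text.

```nginx
http {
    gzip              on;
    gzip_min_length   1k;        # skip responses smaller than 1k
    gzip_buffers      4 16k;     # 4 buffers of 16k for compressed output
    gzip_http_version 1.0;       # also compress for HTTP/1.0 requests
    gzip_comp_level   6;         # 1 = fastest, 9 = smallest output
    # text/html is always compressed; the types below are illustrative
    gzip_types        text/plain text/css application/javascript application/json;
    gzip_proxied      any;       # compress responses to proxied requests too
    gzip_vary         on;        # add "Vary: Accept-Encoding"
}
```

Already-compressed formats such as JPEG or ZIP are usually left out of gzip_types, since compressing them again costs CPU for little gain.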

3. server virtual host

The HTTP service supports multiple virtual hosts. Each virtual host has a corresponding server block containing the configuration for that host. When proxying mail services, you can also create several servers. Each server is distinguished by its listening address or port.

listen

The listening port, 80 by default. Ports below 1024 require nginx to be started as root. It can take forms such as listen *:80 or listen 127.0.0.1:80.

server_name

The server name, such as localhost or www.example.com; regular expressions can be used for matching.

Module http_upstream

This module uses a simple scheduling algorithm to load-balance requests from client IPs across the back-end servers. upstream is followed by the name of the load balancer, and the back-end real servers are listed inside {} in the form host:port options;. If only one back end is proxied, you can also write it directly in proxy_pass.
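A minimal sketch of such an upstream block is shown below. The group name backend matches the name used with proxy_pass later in this article; the addresses, ports, and server options are hypothetical placeholders.

```nginx
http {
    # "backend" is the load balancer name; the servers are hypothetical
    upstream backend {
        server 10.0.0.11:8080 weight=2;                      # receives about twice the requests
        server 10.0.0.12:8080 max_fails=3 fail_timeout=30s;  # skipped for 30s after 3 failures
    }

    server {
        listen 80;

        location / {
            proxy_pass http://backend;   # forward requests to the upstream group
        }
    }
}
```

Without further options, requests are distributed round-robin, adjusted by each server's weight.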

4. location

In the HTTP service, a set of configuration items that apply to certain specific URLs.

root /var/www/html

Defines the server's default website root directory. If the location URL matches a subdirectory or file, root has no effect; it is usually placed in the server block or under location /.

index index.jsp index.html index.htm

Defines the file names served by default for a path; it usually follows root.
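For a static site, the server, listen, server_name, root, and index directives described above might be combined as in this sketch, which belongs inside the http block. The host name and directory are the example values from the text, not a real deployment.

```nginx
server {
    listen      80;
    server_name www.example.com;

    root        /var/www/html;          # document root for this virtual host
    index       index.html index.htm;   # tried in order when a directory is requested

    location / {
        # inherits root and index from the enclosing server block
    }
}
```

With this in place, a request for / is answered with /var/www/html/index.html if that file exists.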

proxy_pass http://backend

The request is forwarded to the list of servers defined by backend, that is, reverse proxying, which corresponds to the upstream load balancer. You can also write proxy_pass http://ip:port.

proxy_redirect off;

proxy_set_header host $host;

proxy_set_header x-real-ip $remote_addr;

proxy_set_header x-forwarded-for $proxy_add_x_forwarded_for;

These four directives are set this way for now. Looked at in depth, each of them involves quite complex material, which will be explained in another article.

The writing of location matching rules is particularly important and fundamental. See the article "nginx configuration location summary and rewrite rule writing".
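In context, the proxy directives from this section would sit inside a location block, roughly as sketched here. The /app/ path is a hypothetical example, and backend is assumed to be an upstream group defined elsewhere in the http block.

```nginx
location /app/ {
    proxy_pass       http://backend;   # assumes "upstream backend { ... }" exists
    proxy_redirect   off;              # leave Location headers from the backend unchanged

    # Pass the original host and client address on to the backend
    proxy_set_header Host            $host;
    proxy_set_header X-Real-IP       $remote_addr;
    proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
}
```

Header names are case-insensitive in HTTP, so the lowercase spellings shown above behave the same way.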

5. Others

5.1 Access control allow/deny

nginx's access control module is installed by default, and its syntax is very simple. You can specify multiple allow and deny rules to permit or forbid access from a given IP or IP range; matching stops as soon as any rule is satisfied. For example:

We also commonly use htpasswd, from the Apache httpd tools, to set a login password for the protected path:

By default this generates a password file encrypted with crypt. Uncomment the two commented lines in the nginx-status block above and restart nginx for the change to take effect.
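A sketch of such rules on a status page follows. The addresses and the password file path are hypothetical, and stub_status is only available when nginx was built with the stub_status module; the two auth_basic lines are left commented out until a password file exists.

```nginx
location /nginx-status {
    stub_status on;     # requires --with-http_stub_status_module at build time
    access_log  off;

    # auth_basic           "NginxStatus";
    # auth_basic_user_file /usr/local/webserver/nginx/conf/htpasswd;   # hypothetical path

    # Rules are checked in order; the first match wins
    allow 192.168.10.100;    # a single address
    allow 172.29.73.0/24;    # a whole subnet
    deny  all;               # everyone else gets 403
}
```

Placing deny all last is what turns the allow lines into a whitelist.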

5.2 List directory autoindex

nginx does not allow directory listing by default. If you need this feature, open the nginx.conf file and add autoindex on; in the location, server, or http section. It is best to add two more parameters as well:

autoindex_exact_size off;

The default is on, which displays the exact file size in bytes. When set to off, an approximate size is displayed in kB, MB, or GB.

autoindex_localtime on;

The default is off, which displays file times in GMT. When set to on, file times are displayed in the server's local time.
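For example, directory listing could be enabled for a single path as in the sketch below; the /downloads/ location and the root directory are hypothetical.

```nginx
location /downloads/ {
    root                 /var/www/html;   # files are read from /var/www/html/downloads/
    autoindex            on;              # generate a directory listing
    autoindex_exact_size off;             # show rounded sizes instead of exact bytes
    autoindex_localtime  on;              # show times in the server's local time zone
}
```

Limiting autoindex to one location avoids exposing the contents of every directory on the site.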

The above is the detailed content of Nginx quick start example analysis. For more information, please follow other related articles on the PHP Chinese website!

内存飙升!记一次nginx拦截爬虫Mar 30, 2023 pm 04:35 PM

内存飙升!记一次nginx拦截爬虫Mar 30, 2023 pm 04:35 PM本篇文章给大家带来了关于nginx的相关知识,其中主要介绍了nginx拦截爬虫相关的,感兴趣的朋友下面一起来看一下吧,希望对大家有帮助。

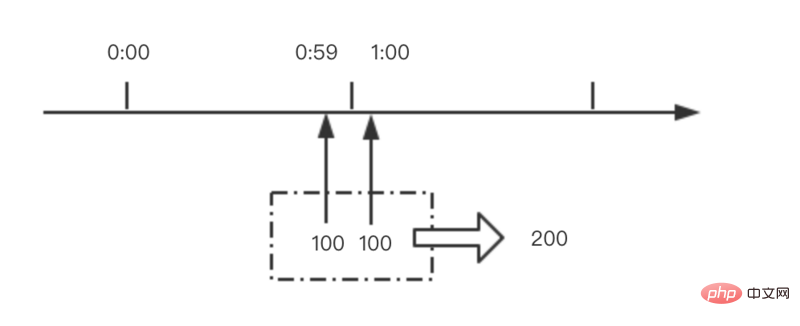

nginx限流模块源码分析May 11, 2023 pm 06:16 PM

nginx限流模块源码分析May 11, 2023 pm 06:16 PM高并发系统有三把利器:缓存、降级和限流;限流的目的是通过对并发访问/请求进行限速来保护系统,一旦达到限制速率则可以拒绝服务(定向到错误页)、排队等待(秒杀)、降级(返回兜底数据或默认数据);高并发系统常见的限流有:限制总并发数(数据库连接池)、限制瞬时并发数(如nginx的limit_conn模块,用来限制瞬时并发连接数)、限制时间窗口内的平均速率(nginx的limit_req模块,用来限制每秒的平均速率);另外还可以根据网络连接数、网络流量、cpu或内存负载等来限流。1.限流算法最简单粗暴的

nginx+rsync+inotify怎么配置实现负载均衡May 11, 2023 pm 03:37 PM

nginx+rsync+inotify怎么配置实现负载均衡May 11, 2023 pm 03:37 PM实验环境前端nginx:ip192.168.6.242,对后端的wordpress网站做反向代理实现复杂均衡后端nginx:ip192.168.6.36,192.168.6.205都部署wordpress,并使用相同的数据库1、在后端的两个wordpress上配置rsync+inotify,两服务器都开启rsync服务,并且通过inotify分别向对方同步数据下面配置192.168.6.205这台服务器vim/etc/rsyncd.confuid=nginxgid=nginxport=873ho

nginx php403错误怎么解决Nov 23, 2022 am 09:59 AM

nginx php403错误怎么解决Nov 23, 2022 am 09:59 AMnginx php403错误的解决办法:1、修改文件权限或开启selinux;2、修改php-fpm.conf,加入需要的文件扩展名;3、修改php.ini内容为“cgi.fix_pathinfo = 0”;4、重启php-fpm即可。

如何解决跨域?常见解决方案浅析Apr 25, 2023 pm 07:57 PM

如何解决跨域?常见解决方案浅析Apr 25, 2023 pm 07:57 PM跨域是开发中经常会遇到的一个场景,也是面试中经常会讨论的一个问题。掌握常见的跨域解决方案及其背后的原理,不仅可以提高我们的开发效率,还能在面试中表现的更加

nginx部署react刷新404怎么办Jan 03, 2023 pm 01:41 PM

nginx部署react刷新404怎么办Jan 03, 2023 pm 01:41 PMnginx部署react刷新404的解决办法:1、修改Nginx配置为“server {listen 80;server_name https://www.xxx.com;location / {root xxx;index index.html index.htm;...}”;2、刷新路由,按当前路径去nginx加载页面即可。

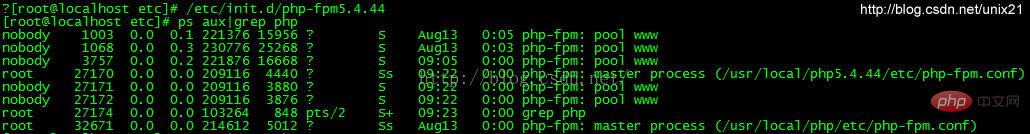

Linux系统下如何为Nginx安装多版本PHPMay 11, 2023 pm 07:34 PM

Linux系统下如何为Nginx安装多版本PHPMay 11, 2023 pm 07:34 PMlinux版本:64位centos6.4nginx版本:nginx1.8.0php版本:php5.5.28&php5.4.44注意假如php5.5是主版本已经安装在/usr/local/php目录下,那么再安装其他版本的php再指定不同安装目录即可。安装php#wgethttp://cn2.php.net/get/php-5.4.44.tar.gz/from/this/mirror#tarzxvfphp-5.4.44.tar.gz#cdphp-5.4.44#./configure--pr

nginx怎么禁止访问phpNov 22, 2022 am 09:52 AM

nginx怎么禁止访问phpNov 22, 2022 am 09:52 AMnginx禁止访问php的方法:1、配置nginx,禁止解析指定目录下的指定程序;2、将“location ~^/images/.*\.(php|php5|sh|pl|py)${deny all...}”语句放置在server标签内即可。

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Dreamweaver Mac version

Visual web development tools

SublimeText3 Chinese version

Chinese version, very easy to use

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft

SublimeText3 Linux new version

SublimeText3 Linux latest version