
An easy and objective way to introduce large models to avoid over-interpretation

1. Preface

This article aims to explain to readers without a computer science background how ChatGPT and similar artificial intelligence systems (such as GPT-3, GPT-4, Bing Chat, and Bard) work. ChatGPT is a chatbot, a conversational interface built on top of a large language model. These terms can be obscure, so I'll explain them, along with the core concepts behind them. The article does not require any technical or mathematical background; we will rely heavily on metaphors to make the concepts easier to grasp. We will also discuss the implications of these technologies and what we should or should not expect large language models like ChatGPT to be able to do.

We will start with the basic question "What is artificial intelligence?", avoiding jargon as much as possible, and gradually work our way up to the terms and concepts associated with large language models and ChatGPT.

2. What is artificial intelligence

First, let’s start with some basic terms that you may hear often. So what is artificial intelligence?

Artificial intelligence: an entity that exhibits behaviors a person might reasonably call intelligent. Defining artificial intelligence in terms of "intelligence" is somewhat problematic, because "intelligence" itself has no clear definition. Still, the definition works reasonably well. It basically means that if we see something man-made doing things that are engaging, useful, and seemingly difficult, we might call it intelligent. For example, in computer games we often refer to computer-controlled characters as “AI”. Most of these characters are simple programs built from if-then-else code (e.g., "If the player is in range, fire; otherwise, move to the nearest stone and hide"). But if the characters keep us engaged and entertained without doing anything obviously stupid, we may think they are more sophisticated than they really are.
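To make the if-then-else idea concrete, here is a minimal Python sketch of the game-character rule quoted above. The function name `choose_action`, the action labels, and the firing range of 10 units are hypothetical choices for illustration, not taken from any real game.

```python
def choose_action(player_distance: float, fire_range: float = 10.0) -> str:
    """Pick the character's next action with a fixed, hand-written rule (hypothetical)."""
    if player_distance <= fire_range:
        # The player is in range: fire.
        return "fire"
    # Otherwise: retreat to the nearest stone and take cover.
    return "move_to_nearest_stone_and_hide"


for distance in (4.0, 25.0):
    print(distance, choose_action(distance))
```

Nothing here learns or adapts; the behavior can look purposeful in a game, but it is entirely spelled out by the programmer.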

Once we understand how something works, we may no longer be impressed by it, and we may expect something more sophisticated to be going on behind the scenes. It all depends on how much we know about what is actually happening behind the scenes.

The important point is that artificial intelligence is not magic. Because it's not magic, it can be explained.

3. What is machine learning

Another term often associated with artificial intelligence is machine learning.

Machine learning: a method of creating behavior by collecting data, forming a model from that data, and then executing the model. Sometimes it is too difficult to write a bunch of if-then-else statements by hand to capture a complex phenomenon (like language). In that case, we gather large amounts of data and model it with algorithms that can find patterns in the data.
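As a toy illustration of "collect data, form a model, execute the model", the sketch below fits a straight line to a handful of made-up data points using ordinary least squares, then uses the fitted line on a new input. A straight line is an extremely simple model chosen only for illustration; language models are vastly more complex, but the three steps are the same in spirit.

```python
def fit_line(xs, ys):
    """Form a model: find the slope and intercept that best fit the data (least squares)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Execute the model on a new input."""
    slope, intercept = model
    return slope * x + intercept


# Step 1: collect data (made-up points that happen to follow y = 2x + 1).
xs = [0, 1, 2, 3, 4]
ys = [1, 3, 5, 7, 9]

# Step 2: form a model from the data; nobody wrote the rule "y = 2x + 1" by hand.
model = fit_line(xs, ys)

# Step 3: execute the model on an input it has not seen.
print(model, predict(model, 5))  # (2.0, 1.0) 11.0
```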

So what is a model? A model is a simplified version of a complex phenomenon. For example, a model car is a smaller, simpler version of a real car that shares many of the real car's properties, but it is of course not meant to replace the original. Model cars may look realistic and are useful for experimenting.

Just as we can build a smaller, simpler car, we can also build a smaller, simpler model of human language. We use the term "large language model" because these models are, well, large, in terms of how much memory (GPU memory) they need. The largest models currently in production, such as ChatGPT, GPT-3, and GPT-4, are so large that creating and running them requires massive supercomputers running in data center servers.
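A rough back-of-the-envelope calculation shows why memory is the bottleneck. This sketch assumes each parameter is stored in 16 bits (2 bytes) and counts only the memory for the weights themselves, ignoring everything else a running model needs. GPT-3's published size is 175 billion parameters; the 80 GB per-GPU figure is an assumption based on a common data-center accelerator.

```python
import math


def weights_memory_gb(num_parameters: float, bytes_per_parameter: int = 2) -> float:
    """Memory needed just to store the weights, in gigabytes (1 GB = 1e9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9


GPT3_PARAMETERS = 175e9  # GPT-3's published parameter count
GPU_MEMORY_GB = 80       # assumption: one 80 GB data-center GPU

memory = weights_memory_gb(GPT3_PARAMETERS)
gpus = math.ceil(memory / GPU_MEMORY_GB)
print(f"{memory:.0f} GB of weights -> at least {gpus} GPUs just to hold them")
# 350 GB of weights -> at least 5 GPUs just to hold them
```

Storing each parameter in 32 bits instead doubles the figure to 700 GB, which is one reason models are usually run at reduced precision.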

4. What is a neural network

There are many ways to learn a model from data, and neural networks are one of them. The technique is loosely based on the structure of the human brain, which consists of a network of interconnected neurons that pass electrical signals to one another, allowing us to perform a wide variety of tasks. The basic concept of neural networks was invented in the 1940s, and the basic ideas about how to train them were developed in the 1980s. Neural networks are computationally very inefficient, and it was not until around 2017 that computer hardware became good enough to use them at large scale.

However, I personally prefer a circuit metaphor for explaining neural networks: we can simulate how a neural network works using resistors and the flow of electrical current through wires.

Imagine we want to build a self-driving car that can drive on the highway. We install proximity sensors on the front, back, and sides of the car. Each sensor reports a value of 1 when something is very close and a value of 0 when nothing is detectable nearby.

We also install robotic mechanisms to operate the steering wheel, the brake, and the accelerator. When the accelerator receives a value of 1, it accelerates at maximum; a value of 0 means no acceleration. Likewise, a value of 1 sent to the braking mechanism means emergency braking, while 0 means no braking. The steering mechanism accepts a value between -1 and 1: negative numbers turn left, positive numbers turn right, and 0 means going straight.

Of course, we also need data, so we record ourselves driving. When the road ahead is clear, you speed up. When there is a car in front of you, you slow down. When a car gets too close on the left, you swerve right and change lanes (assuming, of course, that there is no car on the right). This process is complex and requires different actions (steer left or right, accelerate or decelerate, brake) for different combinations of sensor readings, so every sensor needs to be connected to every robotic mechanism.
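The recorded driving data can be pictured as a list of examples, each pairing the four sensor readings with the actions the human driver took, using the value ranges described above. This is a hypothetical sketch: the `Example` layout and the specific numbers (for instance, a brake value of 0.5 for "slow down") are made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Example:
    # Proximity readings: front, back, left, right (0.0 = nothing nearby, 1.0 = very close)
    sensors: tuple
    # Actions: steering in [-1, 1] (negative = left), brake in [0, 1], accelerator in [0, 1]
    actions: tuple


def is_valid(example: Example) -> bool:
    """Check that every value lies inside the range its sensor or mechanism accepts."""
    steering, brake, accelerator = example.actions
    return (
        len(example.sensors) == 4
        and all(0.0 <= s <= 1.0 for s in example.sensors)
        and -1.0 <= steering <= 1.0
        and 0.0 <= brake <= 1.0
        and 0.0 <= accelerator <= 1.0
    )


driving_log = [
    Example(sensors=(0.0, 0.0, 0.0, 0.0), actions=(0.0, 0.0, 1.0)),  # road clear: speed up
    Example(sensors=(1.0, 0.0, 0.0, 0.0), actions=(0.0, 0.5, 0.0)),  # car ahead: slow down
    Example(sensors=(0.0, 0.0, 1.0, 0.0), actions=(0.5, 0.0, 0.0)),  # car close on the left: steer right
]

print(all(is_valid(e) for e in driving_log))  # True
```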

What happens when we switch on the circuit and drive? Electrical current flows from every sensor to every robotic actuator, and the car tries to turn left, turn right, accelerate, and brake all at the same time. It's a mess.

So we get out the resistors and start placing them in different parts of the circuit so that current flows more freely between certain sensors and certain robotic mechanisms. For example, we want current to flow more freely from the front proximity sensor to the brake than to the steering mechanism. We also install elements called gates, which either block current until enough charge has accumulated to trip a switch (for example, letting current through only when both the front and rear proximity sensors report high values), or send power forward only when the input is weak (for example, sending more power to the accelerator when the front proximity sensor reports a low value).
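In neural-network terms, each resistor corresponds roughly to a weight that scales a signal, and each gate to an activation function applied to the weighted sum. The sketch below builds the two gates described above from a single hypothetical `neuron` function. The weights and bias values are hand-picked for illustration, and the hard on/off `step` is a simplification; real networks usually use smoother activation functions.

```python
def step(total: float) -> float:
    """Gate that lets current through (1.0) only once enough charge has built up."""
    return 1.0 if total > 0 else 0.0


def clamp01(total: float) -> float:
    """Gate that passes the signal through, limited to the range [0, 1]."""
    return max(0.0, min(1.0, total))


def neuron(inputs, weights, bias, activation):
    """Weighted sum of the inputs (weights play the role of resistors) plus a bias, then a gate."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(total)


def both_close(front: float, rear: float) -> float:
    """Fires only when BOTH the front and rear sensors read high."""
    return neuron([front, rear], weights=[1.0, 1.0], bias=-1.5, activation=step)


def accelerator(front: float) -> float:
    """Sends more power forward the LOWER the front sensor reading is."""
    return neuron([front], weights=[-1.0], bias=1.0, activation=clamp01)


print(both_close(1.0, 1.0), both_close(1.0, 0.0))  # 1.0 0.0
print(accelerator(0.0), accelerator(1.0))          # 1.0 0.0
```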

But where should we place these resistors and gates? We don't know either. So we start by placing them randomly all over the circuit and try again. Maybe the car drives a little better this time: it sometimes brakes and steers when the data says braking and steering are best, but it doesn't get it right every time, and there are some things it does worse (it accelerates when the data says it should sometimes brake). So we keep randomly trying different combinations of resistors and gates. Eventually, we stumble upon a combination that is good enough, and we declare success. For example, this combination:
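The trial-and-error process above is essentially random search. In this hypothetical sketch, the "circuit" is shrunk to a single weight (resistor) and bias feeding the accelerator, the data are made up (accelerate fully when the road ahead is clear, not at all when something is very close), and each try draws a completely new random combination, keeping the best one found so far.

```python
import random

# Made-up data: (front sensor reading, desired accelerator value).
DATA = [(front, 1.0 - front) for front in (0.0, 0.25, 0.5, 0.75, 1.0)]


def predict(weight, bias, front):
    return weight * front + bias


def mean_squared_error(weight, bias):
    """How far the circuit's outputs are from what the data says, on average."""
    return sum((predict(weight, bias, x) - y) ** 2 for x, y in DATA) / len(DATA)


def random_search(tries=5000, seed=0):
    rng = random.Random(seed)
    best, best_error = None, float("inf")
    for _ in range(tries):
        # Try a brand-new random combination of "resistor" and "gate" settings.
        weight = rng.uniform(-2.0, 2.0)
        bias = rng.uniform(-2.0, 2.0)
        error = mean_squared_error(weight, bias)
        if error < best_error:  # keep it only if it beats everything so far
            best, best_error = (weight, bias), error
    return best, best_error


(best_w, best_b), err = random_search()
print(f"weight={best_w:.2f} bias={best_b:.2f} error={err:.4f}")
```

This works for two parameters, but the number of random tries needed grows explosively as parameters are added, which is why it is hopeless for real networks.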

(In reality, we don't add or remove gates, which are always there; instead, we modify them so that they activate with less energy from below, require more energy from below, or release a lot of energy only when there is very little energy coming from below. Machine learning purists may be uncomfortable with this description. Technically, this is done by adjusting something called a bias on each gate. Biases are not usually shown in diagrams like this, but in terms of the circuit metaphor, a bias can be thought of as a wire plugged directly into a power supply that can be modified just like all the other wires.)

Trying at random is not very effective. An algorithm called backpropagation is quite good at guessing how to change the circuit's configuration. The details of the algorithm do not matter here; just know that it makes small adjustments to the circuit so that it behaves a little closer to what the data suggests, and after many such adjustments the circuit eventually produces results that agree with the data.
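Backpropagation is the procedure that works out, for every parameter, which direction to nudge it to reduce the error; those nudges are then applied by gradient descent. With only one weight and one bias there are no hidden layers to propagate through, so the sketch below reduces to plain gradient descent on the same kind of made-up accelerator data. The learning rate and number of steps are illustrative choices, not recommended settings.

```python
# Made-up data: the accelerator signal should equal 1 - front sensor reading.
DATA = [(front, 1.0 - front) for front in (0.0, 0.25, 0.5, 0.75, 1.0)]


def train(steps=2000, learning_rate=0.5):
    """Repeatedly nudge the weight and bias to reduce the mean squared error."""
    weight, bias = 0.0, 0.0  # arbitrary starting configuration
    n = len(DATA)
    for _ in range(steps):
        # How much the error changes as each parameter changes (the gradient).
        grad_w = sum(2 * (weight * x + bias - y) * x for x, y in DATA) / n
        grad_b = sum(2 * (weight * x + bias - y) for x, y in DATA) / n
        # Move each parameter a small step in the direction that lowers the error.
        weight -= learning_rate * grad_w
        bias -= learning_rate * grad_b
    return weight, bias


weight, bias = train()
print(f"weight={weight:.3f} bias={bias:.3f}")  # weight=-1.000 bias=1.000
```

Unlike random search, every step here uses the data to decide which way to adjust each parameter, so the error shrinks steadily instead of improving only by luck.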

We call the resistors and gates parameters because, in practice, they are everywhere in the circuit, and what the backpropagation algorithm does is decide whether each resistor should be stronger or weaker. Therefore, if we know the layout of the circuit and the values of its parameters, we can reproduce the entire circuit in other cars.
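To see why the layout plus the parameter values is all that is needed, here is a hypothetical sketch of the car's circuit as a single fully connected layer: each of the four sensors is wired to each of the three mechanisms, giving 4 × 3 = 12 weights plus 3 biases (one per gate), 15 parameters in total. The random starting values, the omission of the gates, and the use of JSON to "copy" the circuit are illustrative assumptions.

```python
import json
import random

SENSORS = ["front", "back", "left", "right"]
ACTUATORS = ["steering", "brake", "accelerator"]


def make_parameters(seed=0):
    """One weight per sensor-to-mechanism wire, plus one bias per mechanism."""
    rng = random.Random(seed)
    return {
        "weights": [[rng.uniform(-1.0, 1.0) for _ in SENSORS] for _ in ACTUATORS],
        "biases": [rng.uniform(-1.0, 1.0) for _ in ACTUATORS],
    }


def count_parameters(params):
    return sum(len(row) for row in params["weights"]) + len(params["biases"])


def forward(params, readings):
    """Weighted sum of the sensor readings for each mechanism (gates omitted for brevity)."""
    return [
        sum(w * r for w, r in zip(row, readings)) + b
        for row, b in zip(params["weights"], params["biases"])
    ]


params = make_parameters()
print(count_parameters(params))  # 15

# "Copy the circuit" to another car: the layout is fixed by SENSORS and ACTUATORS,
# so saving and loading the parameter values is enough.
saved = json.dumps(params)
other_car = json.loads(saved)
readings = [1.0, 0.0, 0.0, 0.5]
print(forward(params, readings) == forward(other_car, readings))  # True
```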

The above is the detailed content of An easy and objective way to introduce large models to avoid over-interpretation. For more information, please follow other related articles on the PHP Chinese website!

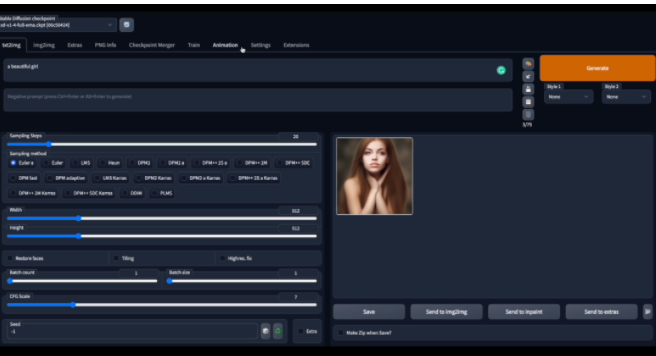

令人惊艳的4个ChatGPT项目,开源了!Mar 30, 2023 pm 02:11 PM

令人惊艳的4个ChatGPT项目,开源了!Mar 30, 2023 pm 02:11 PM自从 ChatGPT、Stable Diffusion 发布以来,各种相关开源项目百花齐放,着实让人应接不暇。今天,着重挑选几个优质的开源项目分享给大家,对我们的日常工作、学习生活,都会有很大的帮助。

Word文档拆分后的子文档字体格式变了怎么办Feb 07, 2023 am 11:40 AM

Word文档拆分后的子文档字体格式变了怎么办Feb 07, 2023 am 11:40 AMWord文档拆分后的子文档字体格式变了的解决办法:1、在大纲模式拆分文档前,先选中正文内容创建一个新的样式,给样式取一个与众不同的名字;2、选中第二段正文内容,通过选择相似文本的功能将剩余正文内容全部设置为新建样式格式;3、进入大纲模式进行文档拆分,操作完成后打开子文档,正文字体格式就是拆分前新建的样式内容。

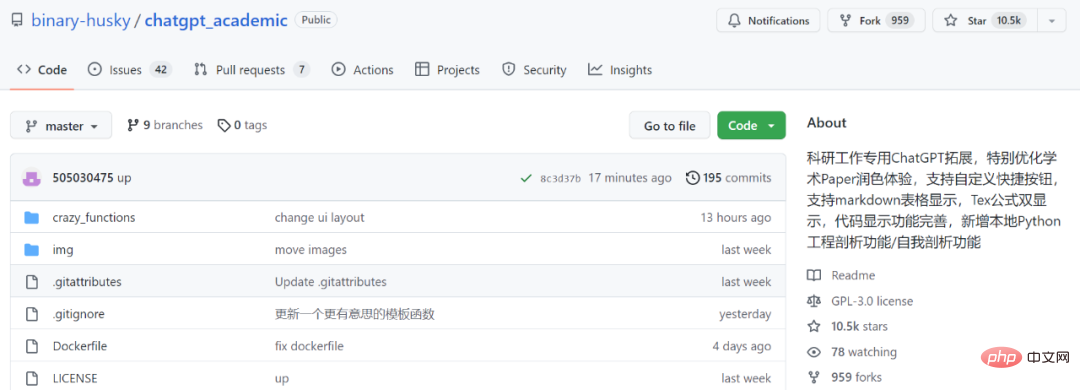

学术专用版ChatGPT火了,一键完成论文润色、代码解释、报告生成Apr 04, 2023 pm 01:05 PM

学术专用版ChatGPT火了,一键完成论文润色、代码解释、报告生成Apr 04, 2023 pm 01:05 PM用 ChatGPT 辅助写论文这件事,越来越靠谱了。 ChatGPT 发布以来,各个领域的从业者都在探索 ChatGPT 的应用前景,挖掘它的潜力。其中,学术文本的理解与编辑是一种极具挑战性的应用场景,因为学术文本需要较高的专业性、严谨性等,有时还需要处理公式、代码、图谱等特殊的内容格式。现在,一个名为「ChatGPT 学术优化(chatgpt_academic)」的新项目在 GitHub 上爆火,上线几天就在 GitHub 上狂揽上万 Star。项目地址:https://github.com/

30行Python代码就可以调用ChatGPT API总结论文的主要内容Apr 04, 2023 pm 12:05 PM

30行Python代码就可以调用ChatGPT API总结论文的主要内容Apr 04, 2023 pm 12:05 PM阅读论文可以说是我们的日常工作之一,论文的数量太多,我们如何快速阅读归纳呢?自从ChatGPT出现以后,有很多阅读论文的服务可以使用。其实使用ChatGPT API非常简单,我们只用30行python代码就可以在本地搭建一个自己的应用。 阅读论文可以说是我们的日常工作之一,论文的数量太多,我们如何快速阅读归纳呢?自从ChatGPT出现以后,有很多阅读论文的服务可以使用。其实使用ChatGPT API非常简单,我们只用30行python代码就可以在本地搭建一个自己的应用。使用 Python 和 C

vscode配置中文插件,带你无需注册体验ChatGPT!Dec 16, 2022 pm 07:51 PM

vscode配置中文插件,带你无需注册体验ChatGPT!Dec 16, 2022 pm 07:51 PM面对一夜爆火的 ChatGPT ,我最终也没抵得住诱惑,决定体验一下,不过这玩意要注册需要外国手机号以及科学上网,将许多人拦在门外,本篇博客将体验当下爆火的 ChatGPT 以及无需注册和科学上网,拿来即用的 ChatGPT 使用攻略,快来试试吧!

用ChatGPT秒建大模型!OpenAI全新插件杀疯了,接入代码解释器一键getApr 04, 2023 am 11:30 AM

用ChatGPT秒建大模型!OpenAI全新插件杀疯了,接入代码解释器一键getApr 04, 2023 am 11:30 AMChatGPT可以联网后,OpenAI还火速介绍了一款代码生成器,在这个插件的加持下,ChatGPT甚至可以自己生成机器学习模型了。 上周五,OpenAI刚刚宣布了惊爆的消息,ChatGPT可以联网,接入第三方插件了!而除了第三方插件,OpenAI也介绍了一款自家的插件「代码解释器」,并给出了几个特别的用例:解决定量和定性的数学问题;进行数据分析和可视化;快速转换文件格式。此外,Greg Brockman演示了ChatGPT还可以对上传视频文件进行处理。而一位叫Andrew Mayne的畅销作

ChatGPT教我学习PHP中AOP的实现(附代码)Mar 30, 2023 am 10:45 AM

ChatGPT教我学习PHP中AOP的实现(附代码)Mar 30, 2023 am 10:45 AM本篇文章给大家带来了关于php的相关知识,其中主要介绍了我是怎么用ChatGPT学习PHP中AOP的实现,感兴趣的朋友下面一起来看一下吧,希望对大家有帮助。

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Dreamweaver Mac version

Visual web development tools