1. The birth of Wenxin Yiyan

"Wenxin Yiyan completed its training on the largest high-performance GPU cluster in China's AI field."

As early as June 2021, to prepare for future large model training tasks, Baidu Intelligent Cloud began planning a new high-performance GPU cluster and, together with NVIDIA, completed the design of an IB network architecture that can accommodate more than 10,000 cards. Every GPU card on every node in the cluster is connected through the IB network. Construction of the cluster was completed in April 2022, providing EFLOPS-level computing power in a single cluster.

In March 2023, Wenxin Yiyan was born on this high-performance cluster, and it continues to iterate on new capabilities there. The cluster is still being expanded.

Dr. Junjie Lai, General Manager of Solutions and Engineering at NVIDIA China: GPU clusters interconnected by high-speed IB networks are key infrastructure in the era of large models. NVIDIA and Baidu Intelligent Cloud jointly built the largest high-performance GPU/IB cluster in the domestic cloud computing market, which will help Baidu achieve greater breakthroughs in the field of large models.

2. High-performance cluster design

A high-performance cluster is not a simple accumulation of computing power. It also requires special design and optimization to bring out the cluster's full computing power.

In distributed training, GPUs communicate continuously both between and within machines. Beyond using high-performance networks such as IB and RoCE to provide high-throughput, low-latency inter-machine communication, the internal network connections of the servers and the communication topology of the cluster network must also be specially designed to meet the communication requirements of large model training.

Achieving the best possible design requires a deep understanding of what each operation in an AI task means for the infrastructure. Different parallel strategies in distributed training, that is, different ways of splitting models, data, and parameters, produce different communication requirements. For example, data parallelism and model parallelism introduce large numbers of Allreduce operations within and between machines, expert parallelism produces inter-machine All2All operations, and 4D hybrid parallelism combines the communication operations of all of these strategies.
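To make the Allreduce requirement concrete, here is a minimal single-process sketch of a ring Allreduce, one common way to implement the operation. It is an illustration only: the function name `ring_allreduce` and the list-based "workers" are assumptions made for this example, and the article does not say which Allreduce algorithm the cluster actually uses.

```python
def ring_allreduce(worker_grads):
    """Simulate a ring Allreduce (sum) over equally sized gradient lists.

    worker_grads[r] is the gradient vector held by worker r. Each vector is
    split into n chunks, where n is the number of workers. Hypothetical
    helper written for this illustration, not a real library call.
    """
    n = len(worker_grads)
    length = len(worker_grads[0])
    assert length % n == 0, "sketch assumes the vector splits evenly into n chunks"
    size = length // n
    # data[r][c] is chunk c as currently held by worker r.
    data = [[list(g[c * size:(c + 1) * size]) for c in range(n)]
            for g in worker_grads]

    # Phase 1, reduce-scatter: in step s, worker r sends chunk (r - s) % n
    # to its right neighbour, which adds it to its own copy of that chunk.
    for s in range(n - 1):
        sends = [(r, (r - s) % n, data[r][(r - s) % n][:]) for r in range(n)]
        for r, c, chunk in sends:
            dst = (r + 1) % n
            data[dst][c] = [a + b for a, b in zip(data[dst][c], chunk)]

    # Worker r now holds the fully reduced chunk (r + 1) % n.
    # Phase 2, all-gather: in step s, worker r forwards chunk (r + 1 - s) % n
    # to its right neighbour, which overwrites its stale copy.
    for s in range(n - 1):
        sends = [(r, (r + 1 - s) % n, data[r][(r + 1 - s) % n][:]) for r in range(n)]
        for r, c, chunk in sends:
            data[(r + 1) % n][c] = chunk

    # Flatten the chunks back into one vector per worker.
    return [[x for chunk in worker for x in chunk] for worker in data]
```

In this scheme every worker sends 2(n - 1) chunks of size 1/n of the vector, so the traffic per worker stays roughly constant as the number of workers grows. That is why the bandwidth and latency of each link matter so much for data-parallel training.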

To this end, Baidu Intelligent Cloud optimizes the design of both the single server and the cluster network to build high-performance GPU clusters.

At the single-server level, Baidu Intelligent Cloud's super AI computer X-MAN has now evolved to its fourth generation. X-MAN 4.0 establishes high-performance inter-card communication for GPUs, providing 134 GB/s of Allreduce bandwidth within a single machine. It is currently Baidu's most heavily customized server product, with the largest number of purpose-built components. In the MLCommons 1.1 list, X-MAN 4.0 ranks in the top 2 for single-machine hardware performance among systems with the same configuration.

At the cluster-network level, a three-layer Clos architecture optimized for large model training was specially designed to ensure the performance and speedup of the cluster during large-scale training. Compared with the traditional approach, this architecture applies an eight-rail optimization that minimizes the number of hops between same-numbered cards in different machines. This gives high-throughput, low-latency network service to Allreduce operations between same-numbered cards, which account for the largest share of network traffic in AI training.

This network architecture can support ultra-large-scale clusters of up to 16,000 cards, which is the largest scale currently achievable for an all-IB network built from box switches. The cluster's network performance is stable and consistent at the 98% level, close to a state of continuously stable communication. As verified by the large model algorithm team, when training jobs for models with hundreds of billions of parameters were submitted on this ultra-large-scale cluster, overall training efficiency at the same machine scale was 3.87 times that of the previous-generation cluster.

However, building large-scale, high-performance heterogeneous clusters is only the first step to successfully implement large models. To ensure the successful completion of AI large model training tasks, more systematic optimization of software and hardware is required.

3. Challenges of large model training

Over the past few years, the parameter size of large models has grown at a rate of about 10 times per year. Around 2020, a model with hundreds of millions of parameters counted as a large model. By 2022, it took hundreds of billions of parameters to be called one.

Before large models, a single machine with one card or several cards was usually sufficient to train an AI model, and the training cycle ranged from hours to days. Now, to train large models with hundreds of billions of parameters, distributed training on large clusters with hundreds of servers and thousands of GPU/XPU cards has become a must, and the training cycle has stretched to months.

To train GPT-3 with 175 billion parameters on 300 billion tokens of data, a single A100 would take 32 years based on its half-precision peak performance, while 1,024 A100s would take 34 days at a resource utilization of 45%. Of course, even setting time aside, a single A100 cannot train a model at the 100-billion-parameter scale, because the model parameters alone exceed the memory capacity of a single card.

Training large models in a distributed environment, so that the training cycle shrinks from decades on a single card to tens of days, requires breaking through several challenges: the computing wall, the video memory wall, and the communication wall. Only then can all resources in the cluster be fully utilized to speed up training and shorten the training cycle.

The computing wall refers to the huge gap between the computing power of a single card and the total computation the model requires. A single A100 delivers only 312 TFLOPS, while GPT-3 requires a total of 314 ZFLOPs of computation, a gap of 9 orders of magnitude.
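The figures above can be checked with a few lines of arithmetic. The sketch below assumes the widely used approximation of about 6 floating-point operations per parameter per training token. That constant, and the function names, are assumptions of this example rather than something the article states.

```python
import math

PARAMS = 175e9       # GPT-3 parameter count (from the text)
TOKENS = 300e9       # training tokens (from the text)
A100_PEAK = 312e12   # A100 half-precision peak in FLOP/s (from the text)
SECONDS_PER_DAY = 24 * 3600


def total_training_flops(params, tokens, flops_per_param_token=6):
    # Assumption: roughly 6 FLOPs per parameter per token (forward + backward).
    return flops_per_param_token * params * tokens


def training_seconds(total_flops, num_cards, peak_flops, utilization=1.0):
    # Ideal wall-clock time if every card sustains peak_flops * utilization.
    return total_flops / (num_cards * peak_flops * utilization)


total = total_training_flops(PARAMS, TOKENS)                  # about 3.15e23
years_single = training_seconds(total, 1, A100_PEAK) / (365 * SECONDS_PER_DAY)
orders_of_magnitude = math.log10(total / A100_PEAK)

# Assumption: 8 FLOPs per parameter per token, as when activations are
# recomputed during the backward pass. This choice reproduces the 34-day figure.
total_recompute = total_training_flops(PARAMS, TOKENS, flops_per_param_token=8)
days_1024 = training_seconds(total_recompute, 1024, A100_PEAK, 0.45) / SECONDS_PER_DAY

print(f"total compute: {total:.3g} FLOPs")
print(f"one A100 at peak: {years_single:.1f} years")
print(f"gap: about {orders_of_magnitude:.0f} orders of magnitude")
print(f"1024 A100s at 45% utilization: {days_1024:.0f} days")
```

With the factor of 6, the total comes out close to the 314 ZFLOPs quoted above and the single-card time is about 32 years. The 34-day estimate is reproduced only under the factor-of-8 assumption; with 6 the same formula gives about 25 days. The article does not state which accounting it used.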

The video memory wall refers to the inability of a single card to hold all the parameters of a large model. GPT-3's 175 billion parameters alone require 700 GB of video memory (at 4 bytes per parameter), while the NVIDIA A100 GPU has only 80 GB.
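The same back-of-the-envelope check works for memory. The helper names below are hypothetical, and the calculation counts parameters only.

```python
import math


def param_memory_gb(num_params, bytes_per_param=4):
    # Memory for the parameters alone, in GB (1 GB = 1e9 bytes here).
    return num_params * bytes_per_param / 1e9


def min_cards_for_params(num_params, card_memory_gb, bytes_per_param=4):
    # Lower bound on the number of cards needed just to hold the parameters.
    return math.ceil(param_memory_gb(num_params, bytes_per_param) / card_memory_gb)


gpt3_gb = param_memory_gb(175e9)             # 700.0
gpt3_cards = min_cards_for_params(175e9, 80)  # 9
print(gpt3_gb, gpt3_cards)
```

Nine 80 GB cards is only a lower bound. Gradients, optimizer states, and activations also have to live in memory during training, so the real requirement is considerably higher.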

The essence of the computing wall and the video memory wall is the conflict between the limited capability of a single card and the model's huge storage and computing requirements. Distributed training resolves this conflict, but it in turn runs into the communication wall.

The communication wall arises mainly because the computing units of a cluster must synchronize parameters frequently during distributed training, so communication performance affects overall computing speed. If the communication wall is not handled well, training efficiency may actually fall as the cluster grows. Successfully breaking through the communication wall shows up as strong scalability, meaning that the cluster's multi-card speedup keeps pace with its scale. The multi-card linear acceleration ratio is the indicator used to evaluate this capability: the higher the value, the better.
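One common way to compute such a ratio is to divide the measured throughput on N cards by N times the single-card throughput. The sketch below uses that definition as an assumption, since the article does not give its exact formula, and the throughput numbers are made up for illustration.

```python
def linear_acceleration_ratio(throughput_one_card, throughput_n_cards, n_cards):
    """Fraction of ideal linear speedup actually achieved on n_cards.

    1.0 means perfect linear scaling. Values below 1.0 show how much is lost
    to communication and other overheads. Hypothetical helper for illustration.
    """
    ideal = n_cards * throughput_one_card
    return throughput_n_cards / ideal


# Made-up numbers: 100 samples/s on one card, 92,160 samples/s on 1,024 cards.
ratio = linear_acceleration_ratio(100.0, 92160.0, 1024)
print(f"{ratio:.0%}")
```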

These walls begin to appear during multi-machine and multi-card training. As the parameters of the large model become larger and larger, the corresponding cluster size also becomes larger and larger, and these three walls become higher and higher. At the same time, during long-term training of large clusters, equipment failures may occur, which may affect or interrupt the training process.

4. The process of large model training

Generally speaking, from the perspective of infrastructure, the entire process of large model training can be roughly divided into the following two stages:

Phase 1: Parallel Strategy and Training Optimization

After a large model is submitted for training, the AI framework considers the structure of the model and other information, together with the capabilities of the training cluster, to formulate a parallel training strategy for the job and complete AI task placement. This process amounts to splitting the model and placing the tasks: deciding how to split the large model and how to place the resulting parts on each GPU/XPU in the cluster.

For the AI tasks placed on GPUs/XPUs, the AI framework works with the training cluster to optimize the full pipeline at both the single-card runtime level and the cluster communication level, improving the efficiency of every AI task during large model training, including data loading, operator computation, and communication strategy. For example, ordinary operators in an AI task are replaced with optimized high-performance operators, and communication strategies are provided that suit the current parallel strategy and the network capabilities of the training cluster.

Phase 2: Resource Management and Task Scheduling

The large model training task starts running according to the parallel strategy formulated above, and the training cluster provides the various high-performance resources the AI task needs. This covers, for example, the environment in which the AI task runs, how resources are attached to the AI task, which storage method the AI task uses to read and save data, and which type of network the GPUs/XPUs communicate over.

At the same time, while the job is running, the training cluster works with the AI framework to provide a reliable environment for long-term training of large models through elastic fault tolerance and other methods. This includes how to observe and perceive the running status of the various resources and AI tasks in the cluster, and how to schedule resources and AI tasks when the cluster changes.

Breaking the process down into these two phases shows that large model training as a whole relies on close cooperation between the AI framework and the training cluster to break through the three walls and jointly keep large model training efficient and stable.

5. Full-stack integration, "AI Big Base" accelerates large model training

Drawing on years of technology accumulation and engineering practice in AI and large models, Baidu launched its full-stack, self-developed AI infrastructure, the "AI Big Base", at the end of 2022. It comprises a three-layer technology stack of "chip-framework-model", with key self-developed technologies and leading products at every layer: Kunlun Core, PaddlePaddle, and the Wenxin large model, respectively.

Built on this three-layer technology stack, Baidu Intelligent Cloud has launched two major AI engineering platforms, the "AI Middle Platform" and the "Baidu Baige·AI Heterogeneous Computing Platform", which improve efficiency at the development level and the resource level respectively, break through the three walls, and accelerate the training process.

Among them, the "AI Middle Platform" relies on the AI framework to provide parallel strategies and an optimized environment for the large model training process, covering the entire training life cycle. "Baidu Baige" provides efficient chip enablement, along with management of all kinds of AI resources and task scheduling capabilities.

Baidu's "AI Big Base" has carried out full-stack integration and system optimization across every layer of the technology stack, completing the integrated construction of cloud and intelligence technologies and enabling end-to-end optimization and acceleration of large model training.

Hou Zhenyu, Vice President of Baidu Group: Large model training is a systematic project. The cluster size, training time, and cost have all increased a lot compared to the past. Without full-stack optimization, it would be difficult to ensure the successful completion of large model training. Baidu's technical investment and engineering practices in large models over the years have enabled us to establish a complete set of software stack capabilities to accelerate the training of large models.

Next, following the two phases of large model training described above, we describe how the layers of the "AI Big Base" technology stack are integrated with one another and optimized as a system to achieve end-to-end optimization and acceleration of large model training.

5.1 Parallel Strategy and Training Optimization

Model Splitting

PaddlePaddle provides a rich set of parallel strategies for large model training, including data parallelism, model parallelism, pipeline parallelism, grouped parameter sharding, and expert parallelism. These strategies can handle the training of large models with parameters ranging from billions to hundreds of billions or even trillions, breaking through the computing wall and the video memory wall. In April 2021, PaddlePaddle was the first in the industry to propose a 4D hybrid parallel strategy, which allows models with hundreds of billions of parameters to be trained on a time scale of months.

Topology awareness

Baidu Baige has cluster topology awareness capabilities built specifically for large model training scenarios, covering both intra-node and inter-node architecture. This includes information such as the computing power inside each server, the CPU-to-GPU/XPU and GPU/XPU-to-GPU/XPU link types within a server, and the GPU/XPU-to-GPU/XPU network link types between servers.

Automatic Parallel

Before a large model training task starts running, PaddlePaddle can build a unified distributed resource graph of the cluster based on the topology awareness capabilities of the Baidu Baige platform. At the same time, PaddlePaddle builds a unified logical computation view of the large model to be trained.

Based on these two graphs, PaddlePaddle automatically searches for the optimal combination of model partitioning and hardware for the model, and allocates model parameters, gradients, and optimizer states to different GPUs/XPUs according to the optimal strategy, completing the placement of AI tasks and improving training performance.

For example, model-parallel AI tasks are placed on different GPUs in the same server, which are connected through the NVSwitch inside the server. Data-parallel and pipeline-parallel AI tasks are placed on same-numbered GPUs in different servers, which are connected through IB or RoCE. Placing AI tasks according to their type in this way uses cluster resources efficiently and accelerates large model training.
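The placement rule in this example can be sketched as a simple grouping of GPU slots. This is a simplified illustration, not PaddlePaddle's search algorithm: the function `place_tasks` is hypothetical, and it assumes the model-parallel degree equals the number of GPUs per server and that data parallelism spans all servers.

```python
from collections import defaultdict


def place_tasks(num_servers, gpus_per_server=8):
    """Group GPU slots by the link they would communicate over.

    A slot is a (server, local_gpu) pair. Assumptions for this sketch:
    model-parallel degree == gpus_per_server, data-parallel degree == num_servers.
    """
    model_parallel_groups = defaultdict(list)  # keyed by server index
    data_parallel_groups = defaultdict(list)   # keyed by local GPU number
    for server in range(num_servers):
        for gpu in range(gpus_per_server):
            slot = (server, gpu)
            # Same server: traffic stays on the in-server NVSwitch.
            model_parallel_groups[server].append(slot)
            # Same GPU number on different servers: traffic goes over IB or RoCE.
            data_parallel_groups[gpu].append(slot)
    return dict(model_parallel_groups), dict(data_parallel_groups)


mp_groups, dp_groups = place_tasks(num_servers=4)
print(mp_groups[0])  # the 8 GPUs of server 0
print(dp_groups[3])  # GPU 3 on each of the 4 servers
```

Grouping same-numbered GPUs across servers is also what lets the eight-rail network design keep data-parallel Allreduce traffic on short paths.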

End-to-end adaptive training

While a training task is running, if the cluster changes, for example because a resource fails or the cluster is resized, Baidu Baige performs fault-tolerant replacement or elastic scaling. Because the locations of the nodes taking part in the computation have changed, the communication pattern between them may no longer be optimal. PaddlePaddle can automatically adjust the model partitioning and AI task placement strategy based on the latest cluster information, while Baidu Baige schedules the corresponding tasks and resources.

PaddlePaddle's unified resource and computation views and automatic parallelism, combined with Baidu Baige's elastic scheduling, deliver end-to-end adaptive distributed training of large models that covers the entire life cycle of cluster training.

This is a deep interaction between the AI framework and the AI heterogeneous computing platform. It achieves system optimization across computing power, framework, and algorithm as a trinity, supports automatic and elastic training of large models, and delivers a measured end-to-end performance improvement of 2.1 times, ensuring the efficiency of large-scale training.

Training Optimization

After the model has been split and the AI tasks placed, the Baidu Baige platform's built-in AI acceleration suite ensures during training that operators run faster across mainstream AI frameworks such as PaddlePaddle and PyTorch and across various computing cards. The suite includes data-layer storage acceleration and the training and inference acceleration library AIAK, and optimizes the full pipeline across data loading, model computation, and distributed communication.

Among these, optimizing data loading and model computation effectively improves the efficiency of a single card, while optimizing distributed communication, combined with the cluster's high-performance IB or RoCE network, the specially optimized communication topology, and a sensible AI task placement strategy, jointly addresses the communication wall.

Baidu Baige's multi-card acceleration ratio on a thousand-card-scale cluster has reached 90%, fully releasing the overall computing power of the cluster.

In the MLPerf Training v2.1 results released in November 2022, the model training performance submitted by Baidu using PaddlePaddle together with Baidu Baige ranked first in the world among submissions with the same GPU configuration, with both end-to-end training time and training throughput surpassing the NGC PyTorch framework.

5.2 Resource Management and Task Scheduling

Resource Management

Baidu Baige provides computing, network, storage, and other AI resources, including the Baidu Taihang elastic bare metal server BBC, the IB network, the RoCE network, the parallel file storage PFS, the object storage BOS, the data lake storage accelerator RapidFS, and other cloud computing resources suited to large model training.

While a task is running, these high-performance resources can be combined sensibly to further improve the efficiency of AI jobs and accelerate AI tasks throughout the whole process. Before an AI task starts, the training data in object storage BOS can be warmed up and loaded into the data lake storage accelerator RapidFS over the elastic RDMA network. The elastic RDMA network reduces communication latency by a factor of 2 to 3 compared with traditional networks, which speeds up data reads for AI tasks on top of the high-performance storage. Finally, the AI task's computation runs on the high-performance Baidu Taihang elastic bare metal server BBC or the cloud server BCC.

Elastic Fault Tolerance

Running an AI task requires not only high-performance resources but also a stable cluster in which resource failures are kept to a minimum so that training is not interrupted. However, resource failures cannot be avoided entirely. The AI framework and the training cluster must jointly ensure that a training task can recover from its most recent state after an interruption, providing a reliable environment for long-term training of large models.
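Recovering "from the most recent state" is usually done with periodic checkpoints. The following is a minimal sketch of that idea in plain Python. The function names, the JSON file layout, and the toy "training step" are all assumptions for illustration and do not describe how PaddlePaddle or Baidu Baige implement checkpointing.

```python
import json
import os
import tempfile


def save_checkpoint(ckpt_dir, step, state):
    """Write a checkpoint atomically so a crash never leaves a partial file."""
    os.makedirs(ckpt_dir, exist_ok=True)
    final = os.path.join(ckpt_dir, f"step_{step:08d}.json")
    fd, tmp = tempfile.mkstemp(dir=ckpt_dir, suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        json.dump({"step": step, "state": state}, f)
    os.replace(tmp, final)  # atomic rename within the same directory
    return final


def load_latest_checkpoint(ckpt_dir):
    """Return the newest checkpoint as a dict, or None if there is none."""
    if not os.path.isdir(ckpt_dir):
        return None
    names = sorted(n for n in os.listdir(ckpt_dir)
                   if n.startswith("step_") and n.endswith(".json"))
    if not names:
        return None
    with open(os.path.join(ckpt_dir, names[-1])) as f:
        return json.load(f)


def train(ckpt_dir, total_steps, save_every=10, fail_at=None):
    """Toy training loop that resumes from the latest checkpoint if one exists."""
    ckpt = load_latest_checkpoint(ckpt_dir)
    step = ckpt["step"] if ckpt else 0
    state = ckpt["state"] if ckpt else {"loss": 100.0}
    while step < total_steps:
        if fail_at is not None and step == fail_at:
            raise RuntimeError("simulated resource failure")
        state["loss"] *= 0.99  # stand-in for one real training step
        step += 1
        if step % save_every == 0:
            save_checkpoint(ckpt_dir, step, state)
    return step, state
```

If a run started with `train(ckpt_dir, 50, fail_at=25)` is interrupted, calling `train(ckpt_dir, 50)` again resumes from the step-20 checkpoint rather than from scratch. The checkpoint interval trades a small amount of I/O against the amount of work lost on each failure.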

Baidu's self-developed heterogeneous collective communication library ECCL supports communication between Kunlun Core and other heterogeneous chips, and can detect slow nodes and faulty nodes. Through Baidu Baige's resource elasticity and fault-tolerance strategy, slow and faulty nodes are removed, and the latest topology is fed back to PaddlePaddle, which rearranges the tasks and assigns the affected training tasks to other XPUs/GPUs so that training keeps running smoothly and efficiently.
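The article does not describe how ECCL detects slow or faulty nodes. As a purely hypothetical illustration of the idea, the sketch below flags a node as slow when its step time exceeds a multiple of the median, and as faulty when it reports no time at all. The function names, the threshold, and the sample data are all assumptions.

```python
from statistics import median


def find_slow_and_faulty(step_times, tolerance=1.5):
    """Classify nodes from their latest per-step time in seconds.

    step_times maps node name -> seconds per step, or None when the node
    stopped reporting. Hypothetical heuristic, not ECCL's actual method.
    """
    faulty = sorted(n for n, t in step_times.items() if t is None)
    reported = [t for t in step_times.values() if t is not None]
    if not reported:
        return [], faulty
    baseline = median(reported)
    slow = sorted(n for n, t in step_times.items()
                  if t is not None and t > tolerance * baseline)
    return slow, faulty


def surviving_nodes(step_times, tolerance=1.5):
    """Nodes left after removing slow and faulty ones, in original order."""
    slow, faulty = find_slow_and_faulty(step_times, tolerance)
    excluded = set(slow) | set(faulty)
    return [n for n in step_times if n not in excluded]


# Made-up measurements for five nodes.
times = {"n0": 1.0, "n1": 1.1, "n2": 2.5, "n3": None, "n4": 0.9}
print(find_slow_and_faulty(times))
print(surviving_nodes(times))
```

The surviving node list is the kind of updated topology information that would then be handed back to the framework so that tasks can be placed again.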

6. AI inclusiveness in the era of large models

Large models are a milestone technology on artificial intelligence's path toward general intelligence. Mastering large models is a question that must be answered on the way to completing an intelligent upgrade, and ultra-large-scale computing power combined with full-stack integrated software optimization is the best answer to it.

In order to help society and industry quickly train their own large models and seize the opportunity of the times, Baidu Intelligent Cloud released the Yangquan Intelligent Computing Center at the end of 2022, equipped with the full-stack capabilities of Baidu's "AI Big Base", which can provide 4 EFLOPS of heterogeneous computing power. This is currently the largest and most technologically advanced data center in Asia.

Baidu Intelligent Cloud has now opened up all the capabilities of the "AI Big Base" to the outside world, making AI inclusive in the era of large models. These capabilities are delivered in various forms, including central clouds in various regions, the edge cloud BEC, the local computing cluster LCC, and the private cloud ABC Stack, making it easy for society and industry to obtain intelligent services.

The above is the detailed content of AI large base, the answer to the era of large models. For more information, please follow other related articles on the PHP Chinese website!

从VAE到扩散模型:一文解读以文生图新范式Apr 08, 2023 pm 08:41 PM

从VAE到扩散模型:一文解读以文生图新范式Apr 08, 2023 pm 08:41 PM1 前言在发布DALL·E的15个月后,OpenAI在今年春天带了续作DALL·E 2,以其更加惊艳的效果和丰富的可玩性迅速占领了各大AI社区的头条。近年来,随着生成对抗网络(GAN)、变分自编码器(VAE)、扩散模型(Diffusion models)的出现,深度学习已向世人展现其强大的图像生成能力;加上GPT-3、BERT等NLP模型的成功,人类正逐步打破文本和图像的信息界限。在DALL·E 2中,只需输入简单的文本(prompt),它就可以生成多张1024*1024的高清图像。这些图像甚至

找不到中文语音预训练模型?中文版 Wav2vec 2.0和HuBERT来了Apr 08, 2023 pm 06:21 PM

找不到中文语音预训练模型?中文版 Wav2vec 2.0和HuBERT来了Apr 08, 2023 pm 06:21 PMWav2vec 2.0 [1],HuBERT [2] 和 WavLM [3] 等语音预训练模型,通过在多达上万小时的无标注语音数据(如 Libri-light )上的自监督学习,显著提升了自动语音识别(Automatic Speech Recognition, ASR),语音合成(Text-to-speech, TTS)和语音转换(Voice Conversation,VC)等语音下游任务的性能。然而这些模型都没有公开的中文版本,不便于应用在中文语音研究场景。 WenetSpeech [4] 是

普林斯顿陈丹琦:如何让「大模型」变小Apr 08, 2023 pm 04:01 PM

普林斯顿陈丹琦:如何让「大模型」变小Apr 08, 2023 pm 04:01 PM“Making large models smaller”这是很多语言模型研究人员的学术追求,针对大模型昂贵的环境和训练成本,陈丹琦在智源大会青源学术年会上做了题为“Making large models smaller”的特邀报告。报告中重点提及了基于记忆增强的TRIME算法和基于粗细粒度联合剪枝和逐层蒸馏的CofiPruning算法。前者能够在不改变模型结构的基础上兼顾语言模型困惑度和检索速度方面的优势;而后者可以在保证下游任务准确度的同时实现更快的处理速度,具有更小的模型结构。陈丹琦 普

解锁CNN和Transformer正确结合方法,字节跳动提出有效的下一代视觉TransformerApr 09, 2023 pm 02:01 PM

解锁CNN和Transformer正确结合方法,字节跳动提出有效的下一代视觉TransformerApr 09, 2023 pm 02:01 PM由于复杂的注意力机制和模型设计,大多数现有的视觉 Transformer(ViT)在现实的工业部署场景中不能像卷积神经网络(CNN)那样高效地执行。这就带来了一个问题:视觉神经网络能否像 CNN 一样快速推断并像 ViT 一样强大?近期一些工作试图设计 CNN-Transformer 混合架构来解决这个问题,但这些工作的整体性能远不能令人满意。基于此,来自字节跳动的研究者提出了一种能在现实工业场景中有效部署的下一代视觉 Transformer——Next-ViT。从延迟 / 准确性权衡的角度看,

Stable Diffusion XL 现已推出—有什么新功能,你知道吗?Apr 07, 2023 pm 11:21 PM

Stable Diffusion XL 现已推出—有什么新功能,你知道吗?Apr 07, 2023 pm 11:21 PM3月27号,Stability AI的创始人兼首席执行官Emad Mostaque在一条推文中宣布,Stable Diffusion XL 现已可用于公开测试。以下是一些事项:“XL”不是这个新的AI模型的官方名称。一旦发布稳定性AI公司的官方公告,名称将会更改。与先前版本相比,图像质量有所提高与先前版本相比,图像生成速度大大加快。示例图像让我们看看新旧AI模型在结果上的差异。Prompt: Luxury sports car with aerodynamic curves, shot in a

五年后AI所需算力超100万倍!十二家机构联合发表88页长文:「智能计算」是解药Apr 09, 2023 pm 07:01 PM

五年后AI所需算力超100万倍!十二家机构联合发表88页长文:「智能计算」是解药Apr 09, 2023 pm 07:01 PM人工智能就是一个「拼财力」的行业,如果没有高性能计算设备,别说开发基础模型,就连微调模型都做不到。但如果只靠拼硬件,单靠当前计算性能的发展速度,迟早有一天无法满足日益膨胀的需求,所以还需要配套的软件来协调统筹计算能力,这时候就需要用到「智能计算」技术。最近,来自之江实验室、中国工程院、国防科技大学、浙江大学等多达十二个国内外研究机构共同发表了一篇论文,首次对智能计算领域进行了全面的调研,涵盖了理论基础、智能与计算的技术融合、重要应用、挑战和未来前景。论文链接:https://spj.scien

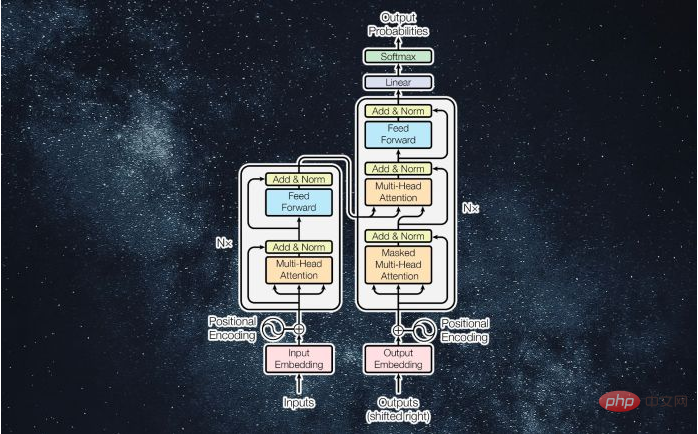

什么是Transformer机器学习模型?Apr 08, 2023 pm 06:31 PM

什么是Transformer机器学习模型?Apr 08, 2023 pm 06:31 PM译者 | 李睿审校 | 孙淑娟近年来, Transformer 机器学习模型已经成为深度学习和深度神经网络技术进步的主要亮点之一。它主要用于自然语言处理中的高级应用。谷歌正在使用它来增强其搜索引擎结果。OpenAI 使用 Transformer 创建了著名的 GPT-2和 GPT-3模型。自从2017年首次亮相以来,Transformer 架构不断发展并扩展到多种不同的变体,从语言任务扩展到其他领域。它们已被用于时间序列预测。它们是 DeepMind 的蛋白质结构预测模型 AlphaFold

AI模型告诉你,为啥巴西最可能在今年夺冠!曾精准预测前两届冠军Apr 09, 2023 pm 01:51 PM

AI模型告诉你,为啥巴西最可能在今年夺冠!曾精准预测前两届冠军Apr 09, 2023 pm 01:51 PM说起2010年南非世界杯的最大网红,一定非「章鱼保罗」莫属!这只位于德国海洋生物中心的神奇章鱼,不仅成功预测了德国队全部七场比赛的结果,还顺利地选出了最终的总冠军西班牙队。不幸的是,保罗已经永远地离开了我们,但它的「遗产」却在人们预测足球比赛结果的尝试中持续存在。在艾伦图灵研究所(The Alan Turing Institute),随着2022年卡塔尔世界杯的持续进行,三位研究员Nick Barlow、Jack Roberts和Ryan Chan决定用一种AI算法预测今年的冠军归属。预测模型图

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Dreamweaver Mac version

Visual web development tools

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

Atom editor mac version download

The most popular open source editor

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft

SublimeText3 Chinese version

Chinese version, very easy to use