After foundation models with tens and hundreds of billions of parameters, are we entering a data-centric era?

In recent years, the arrival of foundation models such as GPT-3, CLIP, DALL-E, Imagen, and Stable Diffusion has been amazing. The generative and in-context learning capabilities these models demonstrate were unimaginable just a few years ago. This article explores the commoditization of these large-scale technologies. These models are no longer the exclusive domain of industry giants. Increasingly, their value lies in how a field and its key problems are described, and at the core of that is data. The impact of the rapid development of foundation models is still unclear, so much of what follows is speculation.

prompt: "taco cat" (don't take it too seriously)

From a machine learning perspective, the concept of a task is absolutely fundamental: we create training data to specify the task, and the model generalizes through training. As a result, two main views have prevailed in the field for decades:

- "Garbage in, garbage out": the data and feature information fed into a model determine whether it succeeds or fails.

- "Too many parameters lead to overfitting." Over the past 20 years, developing general-purpose sparse models has been a popular direction. The common belief is that sparse models have fewer parameters, which helps reduce overfitting, so they generalize better.

These views are generally reasonable, but they are also somewhat misleading.

Foundation models are changing our understanding of tasks because they can be trained on a wide range of data and used for many different tasks. Even users who do not have a clear picture of their target task can apply these models easily, without task-specific training. The models can be steered with natural language or an interface, which lets domain experts drive their use and immediately experience the magic in new settings. In this exploration process, a user's first step is not to curate a specific training set, but to play, ideate, and iterate quickly on ideas. With foundation models in hand, we want to learn more about how they transfer to a range of tasks, including many we have not yet envisioned.

To benefit from the next wave of AI development, we may need to re-examine the limitations (and the wisdom) of these earlier mainstream views. This article starts there, explores what changes we can see with foundation models, and ends with a discussion of how we see foundation models fitting in with traditional approaches.

Garbage in, garbage out: is that it?

Task-agnostic foundation models are exploding. So far, much of the attention has gone to model architecture and engineering, but signs of how these models are converging are starting to show. Is there a precedent for data becoming the foundation and the fundamental point of differentiation? We have seen this back-and-forth between model-centric and data-centric approaches before, in supervised machine learning.

In a series of projects in the second half of the 2010s, feature quality was key. In the old paradigm, features were the tool for encoding domain knowledge. These features were brittle, and practitioners had to master low-level details of how to represent this information to get more stable and reliable predictions.

Deep learning succeeded because people are bad at these things. The deep learning revolution was in full swing, and new models appeared on arXiv one after another, which was genuinely astonishing. These models took previously manual work, such as feature engineering, and automated it completely. The models were excellent and could learn representations of raw data such as text and images directly. This was a huge gain in productivity. However, the models were not perfect, and understanding of the domain remained important. So how do you incorporate that knowledge into a model?

We saw that users were using training data as a vehicle to efficiently inject information, specify their application, and interact with the model. All of this happened "in the dark", without tools, theory, or abstractions. We thought users should be able to write basic programming abstractions over their own data, and so the Snorkel project (and then the company) was born. Intellectually, this is how we entered the era of data-centric AI and weak supervision. Two important lessons came out of this:

- Once a technology stabilizes, its value returns to the data. Here, with the arrival of frameworks such as TensorFlow, PyTorch, MXNet, and Theano, deep learning technology became commoditized, but those frameworks said nothing about a specific problem's data distribution, task specification, and so on. Success therefore depended on how relevant information was brought into the model;

- We can (and must) deal with noise. Basic mathematics and engineering can, in principle, help with handling noise. It is hard for users to express their knowledge perfectly in training data, and the quality of different data sources varies. While studying the basic theory of weak supervision, we found that models can learn a lot from noisy data (not all garbage is bad). That said, avoid feeding in garbage, but don't be too fussy about the data either.
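
The claim that models can learn from noisy sources has a simple arithmetic core. The sketch below is an illustration, not Snorkel's method: it assumes binary labels and sources that err independently of one another, and computes how often a majority vote of several such sources is right. Snorkel's label model goes further by estimating each source's accuracy rather than assuming it.

```python
from math import comb


def majority_vote_accuracy(n_sources: int, accuracy: float) -> float:
    """Probability that a strict majority of n_sources independent binary
    sources, each correct with probability `accuracy`, gives the right label.

    Independence is an illustrative assumption; real sources are correlated.
    """
    if n_sources % 2 == 0:
        raise ValueError("use an odd number of sources so there are no ties")
    need = n_sources // 2 + 1  # votes needed for a strict majority
    return sum(
        comb(n_sources, k) * accuracy**k * (1 - accuracy) ** (n_sources - k)
        for k in range(need, n_sources + 1)
    )


for n in (1, 5, 11):
    # n=1 gives 0.7; n=5 gives about 0.837
    print(n, round(majority_vote_accuracy(n, 0.7), 4))
```

Five sources that are each right only 70% of the time already agree on the correct label about 84% of the time under this assumption, which is the intuition behind "not all garbage is bad".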

prompt: "noisy image". Do you see anything interesting in the noisy image?

Simply put, data encodes your problem and your analysis: even when the technology is commoditized, the value of data remains. So it is not that garbage is good, but the distinction should not be drawn too absolutely. Whether data is useful or garbage depends on whether it is exploited in the most effective way.

Foundation models are trained on huge amounts of data and used for a wide variety of tasks, which brings new challenges for data management. As models and architectures keep becoming commoditized, we need to understand how to manage large amounts of data efficiently so that models generalize.

Will too many parameters lead to overfitting?

Why do we see magical in-context learning abilities? How do modeling choices (architecture and algorithms) contribute to them? Do the magical properties of large language models come from some mysterious model configuration?

About a decade ago, a rough theory of generalization in machine learning held that if a model was parsimonious (i.e., unable to fit too many spurious features), it would generalize. This can be stated more precisely, and doing so produced major theoretical achievements such as VC dimension and Rademacher complexity. Along the way, it came to seem that having few parameters was also necessary for generalization. But that is not the case. Overparameterization was supposed to be a major problem, yet large models are now counterexamples: these models (with more parameters than data points) can fit all kinds of mind-bogglingly complex functions (even random labels), and they still generalize.
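
A minimal numerical sketch of the counterexample, assuming a linear model on random Gaussian features as a stand-in for a large network: with more parameters than data points, the minimum-norm solution fits purely random labels exactly. This illustrates only the capacity half of the story; it says nothing about why large models also generalize on real labels.

```python
import random


def solve(A, b):
    """Solve A x = b with Gaussian elimination and partial pivoting."""
    n = len(A)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x


def min_norm_interpolator(X, y):
    """Minimum-norm weights w with X w = y, i.e. w = X^T (X X^T)^{-1} y.
    Needs linearly independent rows, which holds almost surely for random
    Gaussian features when there are more parameters than data points."""
    n, p = len(X), len(X[0])
    gram = [[sum(a * b for a, b in zip(X[i], X[j])) for j in range(n)] for i in range(n)]
    alpha = solve(gram, y)
    return [sum(alpha[i] * X[i][k] for i in range(n)) for k in range(p)]


random.seed(0)
n_points, n_params = 8, 50  # more parameters than data points (illustrative sizes)
X = [[random.gauss(0, 1) for _ in range(n_params)] for _ in range(n_points)]
y = [random.choice([-1.0, 1.0]) for _ in range(n_points)]  # purely random labels
w = min_norm_interpolator(X, y)
preds = [sum(xi * wi for xi, wi in zip(row, w)) for row in X]
max_err = max(abs(pred - target) for pred, target in zip(preds, y))
print(f"max training error on random labels: {max_err:.2e}")
```

The training error comes out at floating-point noise level, so classical capacity arguments would flag this model as hopeless, even though such interpolating models are exactly what large networks turn out to be.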

The overparameterization intuition misled us, and recent insights have opened up new directions. We see some magical abilities emerge in these large models, but the prevailing belief has been that they are enabled only by certain architectures that few people have access to. One direction of our research, and of others', is to try to reproduce these abilities in simple, classical models. Our recent state-space models build on decades of signal-processing work (and so fit the mold of classical models), and they exhibit some in-context learning capabilities.
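
To make "classical" concrete: a linear state-space model can be run as a recurrence or, equivalently, as a convolution. The scalar toy below is a sketch under strong simplifying assumptions (real SSM layers use learned, matrix-valued parameters and a discretization step) and is not the lab's implementation; the names `ssm_recurrent` and `ssm_convolution` are hypothetical. It only checks that the two views produce the same output.

```python
def ssm_recurrent(a, b, c, u):
    """Scalar discrete linear state-space model, run step by step:
    x_k = a * x_{k-1} + b * u_k,  y_k = c * x_k,  starting from x = 0."""
    x, ys = 0.0, []
    for u_k in u:
        x = a * x + b * u_k
        ys.append(c * x)
    return ys


def ssm_convolution(a, b, c, u):
    """The same model unrolled as a causal convolution with kernel K_j = c * a**j * b,
    so y_k = sum_{j=0..k} K_j * u_{k-j}."""
    kernel = [c * a**j * b for j in range(len(u))]
    return [sum(kernel[j] * u[k - j] for j in range(k + 1)) for k in range(len(u))]


u = [1.0, 0.0, 2.0, -1.0, 0.5]
print(ssm_recurrent(0.9, 1.0, 0.5, u))
print(ssm_convolution(0.9, 1.0, 0.5, u))
```

The recurrent form is cheap for step-by-step generation, while the convolutional form processes a whole sequence at once; this duality is standard linear-systems material.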

Even more surprisingly, even the classic bidirectional BERT model has in-context learning capabilities! I suspect many people are still writing papers on this; send them to us and we will read them carefully and cite them. We believe the magical abilities of in-context learning are all around us, and that the universe is more magical than we understand. Or, viewed more dispassionately, maybe humans are just not that good at understanding conditional probability.

Everything seems to work well within the large-model framework. The magical abilities of foundation models appear stable and commoditizable, and data is seen as the point of differentiation within that framework.

Is this the era of data-centric foundation models?

Are we repeating the shift toward data-centric supervised learning? In other words, are models and engineering becoming commoditized?

The rise of commoditized models and open source. We are seeing foundation models become commoditized and put into use, and it feels very much like deep learning did. For us, the strongest evidence that a model has been commoditized is its availability. Two main forces drive this: people have a need (stability, etc.), and big companies can take advantage of it. Open source took off not because of hobbyist interest, but because large corporations and other organizations outside government decided they needed something like it (see The Rise of Python).

Waiting for the latest super company to launch a new super model?

Where does the biggest difference come from? Data! These tools are increasingly available, but the best foundation models are not necessarily available right away. How do you handle deployment? Wait for the next super company to release a new super model? That is one way! But we call it nihilism! Whether such a model will be open source is hard to say, but what about foundation-model applications built on private data that cannot be sent to an API? Will the model have 100 trillion parameters, and how many users will be able to access and use it? What is the model trained on? Mostly public data...

So there is almost no guarantee that it will know about what you care about. How do you keep the magical properties of foundation models working for you? You need to manage foundation-model data effectively (data is critical!) and make full use of great open-source models at test time (adapting input and context data at test time is critical!):

Data management and data-centric scaling? Prediction: smarter ways of collecting datasets will lead to small, beautiful models. The scaling-law papers that opened our eyes deserve attention, such as OpenAI's original scaling-law work and DeepMind's Chinchilla. Although we have a default reference architecture (transformers), the number of tokens represents the information content of the data only to a certain extent. Experience tells us that data varies widely in subject matter and quality. We have a hunch that what really matters is the actual bits of information, accounting for overlap and order; information-theoretic concepts such as entropy may drive the evolution of both large and small foundation models.
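
One way to see why raw token counts only partly measure information content: duplicating a corpus doubles its tokens but adds almost nothing new. The sketch below uses unigram entropy and the number of distinct trigrams as crude proxies; these are illustrative choices, not metrics from the scaling-law papers, and the toy corpus is invented.

```python
from collections import Counter
from math import log2


def unigram_entropy(tokens):
    """Shannon entropy, in bits per token, of the empirical unigram distribution."""
    total = len(tokens)
    return -sum((n / total) * log2(n / total) for n in Counter(tokens).values())


def distinct_ngrams(tokens, n=3):
    """Number of distinct n-grams: a crude proxy for non-redundant content."""
    return len({tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)})


corpus = "the cat sat on the mat while the dog slept on the rug".split()
duplicated = corpus * 2  # twice the tokens, essentially no new content

for name, toks in [("original", corpus), ("duplicated", duplicated)]:
    # Token count doubles; entropy is unchanged; distinct trigrams grow only
    # by the two that straddle the seam between the copies.
    print(name, len(toks), round(unigram_entropy(toks), 3), distinct_ngrams(toks))
```

A token-count view of scaling would treat the duplicated corpus as twice as much data, while both proxies show it carries essentially the same information.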

Information input and computation at test time. The best foundation models are not necessarily available off the shelf, but computation at test time, used in new ways, can make a big difference. Given the cost and lack of privacy of closed-source model APIs, we recently showed that an open-source foundation model with 30x fewer parameters can beat OpenAI's closed-source model on standard benchmarks by using the small model efficiently at test time. This approach is called Ask Me Anything (AMA) Prompting. At test time, users control a foundation model through prompts, natural-language descriptions of the task they care about, and prompt design can have a huge impact on performance. Getting a prompt exactly right is complex and laborious, so AMA instead uses a collection of noisy prompts of varying quality and applies statistical theory to handle the noise. AMA draws on many sources of inspiration: Maieutic Prompting, Reframing GPT-k, AI Chains, and more! The key point is that we can do computation at test time in new ways; there is no need to prompt the model just once! This is not only about data management at training time, but also about adapting input and context data at test time.
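
The core AMA idea (ask several imperfect prompts, then combine the answers) can be sketched as a weighted vote. Everything here is hypothetical: `fake_model` stands in for a real model call, the prompt templates are invented, and the per-prompt accuracies are simply assumed. AMA itself combines prompt outputs with weak supervision, which estimates prompt quality without ground-truth labels.

```python
from collections import defaultdict
from math import log

# Hypothetical prompt templates; each would be sent to the same model.
PROMPTS = [
    "Question: {x}\nAnswer yes or no:",
    "Is the following true? {x}",
    "{x} Is that right? Answer yes or no:",
]


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model call so the sketch runs offline.
    It answers "no" to the second template and "yes" otherwise."""
    return "no" if prompt.startswith("Is the following true?") else "yes"


def aggregate(x, prompts, model, accuracies):
    """Weighted vote over the answers from several prompts. Each vote counts
    log(acc / (1 - acc)), the log-odds of that prompt's assumed accuracy."""
    scores = defaultdict(float)
    for template, acc in zip(prompts, accuracies):
        answer = model(template.format(x=x)).strip().lower()
        scores[answer] += log(acc / (1 - acc))
    return max(scores, key=scores.get)


print(aggregate("Paris is in France.", PROMPTS, fake_model, [0.8, 0.6, 0.75]))  # prints "yes"
```

Log-odds weighting lets one reliable prompt outvote several weak ones; with accuracies of 0.55, 0.95, and 0.55, the same call returns "no".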

prompt: "really small AI model"

From AMA we see that small models already have excellent reasoning abilities across a variety of tasks, while the key value of large models seems to lie in memorizing factual data. Small models perform poorly on facts, so how do we bring in data and information to fix this? Oddly enough, we use SGD to store facts in a neural network, converting them into fuzzy floating-point values... that abstraction seems far less efficient than a DRAM-backed key-value store. However, judging from the AMA results, the gap between small and large models is much smaller for time-varying or domain-specialized facts... At Apple, when we build self-supervised models, we need to be able to edit the facts we return (for business reasons), and the models also need to work with other software tools to run the service. So it is very important for the model to be able to call an index. Time will tell whether these are sufficient reasons to use this type of model.
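
A minimal sketch of a model that "calls an index": facts live in an editable key-value store and are injected into the prompt at query time, so updating a fact is a write rather than a retraining run. `FactStore`, `build_prompt`, and the ExampleCorp facts are hypothetical, and exact-key lookup stands in for real retrieval.

```python
class FactStore:
    """Hypothetical editable key-value store that the model consults at query time."""

    def __init__(self):
        self._facts = {}

    def put(self, key, value):
        self._facts[key] = value  # editing a fact is a dict write, no retraining

    def get(self, key):
        return self._facts.get(key)


def build_prompt(question, key, store):
    """Prepend a retrieved fact to the prompt so a small model need not memorize it."""
    fact = store.get(key)
    context = f"Fact: {fact}\n" if fact is not None else ""
    return f"{context}Question: {question}\nAnswer:"


store = FactStore()
store.put("ceo:ExampleCorp", "The CEO of ExampleCorp is Alice.")
print(build_prompt("Who runs ExampleCorp?", "ceo:ExampleCorp", store))
store.put("ceo:ExampleCorp", "The CEO of ExampleCorp is Bob.")  # fact edited in place
print(build_prompt("Who runs ExampleCorp?", "ceo:ExampleCorp", store))
```

The design choice is to keep facts outside the weights, where they can be audited and changed, and let the model supply only the reasoning over what it is given.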

Where will this lead us? Foundation models alongside traditional methods. Suppose data-centric models make progress at both the exploration end and the deployment end. In the exploration phase, which calls for fast iteration and task-agnostic workflows, we make off-the-shelf general foundation models more useful and efficient through data management and test-time strategies. Users then leave the exploration phase with a clearer task definition and use data-centric AI to manage training data (your own data matters), in the Snorkel manner, leveraging and combining multiple prompts and/or foundation models to train smaller, faster "proprietary" models. These models can be deployed in real production environments and are more accurate for specific tasks and specific data! Alternatively, foundation models can be used to improve weak supervision techniques, work for which some lab and Snorkel members won a UAI award.
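
The workflow above (combine several noisy sources into labels, then train a smaller model) can be sketched end to end. This is a toy under stated assumptions: keyword functions stand in for prompts or foundation-model calls, plain majority vote stands in for Snorkel's label model, and a word-count scorer stands in for the proprietary model. All names and example texts are hypothetical.

```python
from collections import Counter


# Hypothetical noisy sources standing in for prompts or foundation-model calls.
# Each returns a label or None (abstain).
def src_refund(text):
    return "complaint" if "refund" in text else None


def src_broken(text):
    return "complaint" if "broken" in text else None


def src_praise(text):
    return "praise" if "thanks" in text or "great" in text else None


SOURCES = [src_refund, src_broken, src_praise]


def weak_label(text):
    """Majority vote over non-abstaining sources; None if all abstain or on a tie."""
    votes = Counter(v for v in (src(text) for src in SOURCES) if v is not None)
    top = votes.most_common(2)
    if not top or (len(top) == 2 and top[0][1] == top[1][1]):
        return None
    return top[0][0]


def train_small_model(texts):
    """Fit a tiny bag-of-words scorer (per-class word counts) on the weak labels."""
    word_counts = {}
    for text in texts:
        label = weak_label(text)
        if label is not None:
            word_counts.setdefault(label, Counter()).update(text.split())

    def predict(text):
        # Pick the class whose weakly labeled texts used these words most often.
        return max(word_counts, key=lambda c: sum(word_counts[c][w] for w in text.split()))

    return predict


unlabeled = [
    "i want a refund the item is broken",
    "the box was broken on arrival",
    "thanks great service",
    "great product thanks a lot",
    "where is my refund",
    "shipping took five days",  # every source abstains, so it is skipped
]
model = train_small_model(unlabeled)
print(model("the item arrived damaged"))  # no source fires on this text
```

The trained scorer labels text that none of the sources fire on ("complaint" here), which is the point of training a deployable model rather than shipping the sources themselves.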

In the final analysis, data is what determines the model that finally goes into production. Data is the only thing that is not commoditized. We still believe Snorkel's view of data is the way forward: you need programming abstractions, a way to express, combine, and iteratively correct disparate data sources and supervision signals, to train deployable models for the ultimate task.

The above is the detailed content of After basic models with tens of billions and hundreds of billions of parameters, are we entering a data-centric era?. For more information, please follow other related articles on the PHP Chinese website!

从VAE到扩散模型:一文解读以文生图新范式Apr 08, 2023 pm 08:41 PM

从VAE到扩散模型:一文解读以文生图新范式Apr 08, 2023 pm 08:41 PM1 前言在发布DALL·E的15个月后,OpenAI在今年春天带了续作DALL·E 2,以其更加惊艳的效果和丰富的可玩性迅速占领了各大AI社区的头条。近年来,随着生成对抗网络(GAN)、变分自编码器(VAE)、扩散模型(Diffusion models)的出现,深度学习已向世人展现其强大的图像生成能力;加上GPT-3、BERT等NLP模型的成功,人类正逐步打破文本和图像的信息界限。在DALL·E 2中,只需输入简单的文本(prompt),它就可以生成多张1024*1024的高清图像。这些图像甚至

找不到中文语音预训练模型?中文版 Wav2vec 2.0和HuBERT来了Apr 08, 2023 pm 06:21 PM

找不到中文语音预训练模型?中文版 Wav2vec 2.0和HuBERT来了Apr 08, 2023 pm 06:21 PMWav2vec 2.0 [1],HuBERT [2] 和 WavLM [3] 等语音预训练模型,通过在多达上万小时的无标注语音数据(如 Libri-light )上的自监督学习,显著提升了自动语音识别(Automatic Speech Recognition, ASR),语音合成(Text-to-speech, TTS)和语音转换(Voice Conversation,VC)等语音下游任务的性能。然而这些模型都没有公开的中文版本,不便于应用在中文语音研究场景。 WenetSpeech [4] 是

普林斯顿陈丹琦:如何让「大模型」变小Apr 08, 2023 pm 04:01 PM

普林斯顿陈丹琦:如何让「大模型」变小Apr 08, 2023 pm 04:01 PM“Making large models smaller”这是很多语言模型研究人员的学术追求,针对大模型昂贵的环境和训练成本,陈丹琦在智源大会青源学术年会上做了题为“Making large models smaller”的特邀报告。报告中重点提及了基于记忆增强的TRIME算法和基于粗细粒度联合剪枝和逐层蒸馏的CofiPruning算法。前者能够在不改变模型结构的基础上兼顾语言模型困惑度和检索速度方面的优势;而后者可以在保证下游任务准确度的同时实现更快的处理速度,具有更小的模型结构。陈丹琦 普

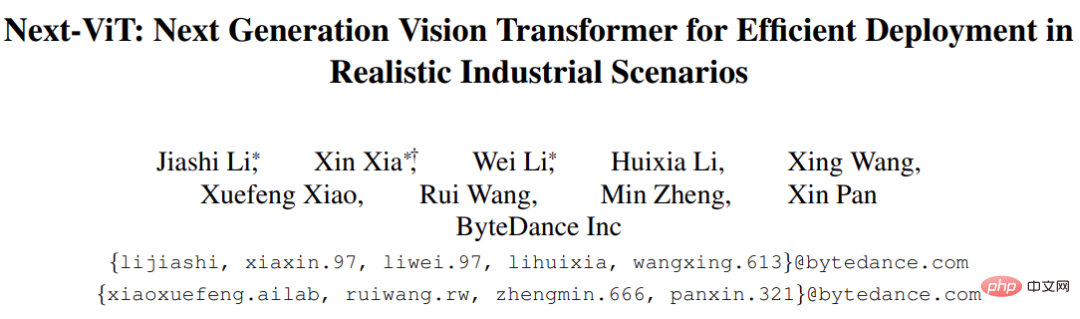

解锁CNN和Transformer正确结合方法,字节跳动提出有效的下一代视觉TransformerApr 09, 2023 pm 02:01 PM

解锁CNN和Transformer正确结合方法,字节跳动提出有效的下一代视觉TransformerApr 09, 2023 pm 02:01 PM由于复杂的注意力机制和模型设计,大多数现有的视觉 Transformer(ViT)在现实的工业部署场景中不能像卷积神经网络(CNN)那样高效地执行。这就带来了一个问题:视觉神经网络能否像 CNN 一样快速推断并像 ViT 一样强大?近期一些工作试图设计 CNN-Transformer 混合架构来解决这个问题,但这些工作的整体性能远不能令人满意。基于此,来自字节跳动的研究者提出了一种能在现实工业场景中有效部署的下一代视觉 Transformer——Next-ViT。从延迟 / 准确性权衡的角度看,

Stable Diffusion XL 现已推出—有什么新功能,你知道吗?Apr 07, 2023 pm 11:21 PM

Stable Diffusion XL 现已推出—有什么新功能,你知道吗?Apr 07, 2023 pm 11:21 PM3月27号,Stability AI的创始人兼首席执行官Emad Mostaque在一条推文中宣布,Stable Diffusion XL 现已可用于公开测试。以下是一些事项:“XL”不是这个新的AI模型的官方名称。一旦发布稳定性AI公司的官方公告,名称将会更改。与先前版本相比,图像质量有所提高与先前版本相比,图像生成速度大大加快。示例图像让我们看看新旧AI模型在结果上的差异。Prompt: Luxury sports car with aerodynamic curves, shot in a

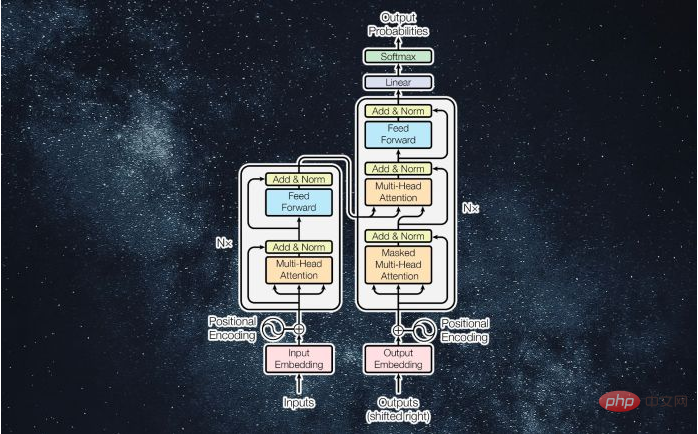

什么是Transformer机器学习模型?Apr 08, 2023 pm 06:31 PM

什么是Transformer机器学习模型?Apr 08, 2023 pm 06:31 PM译者 | 李睿审校 | 孙淑娟近年来, Transformer 机器学习模型已经成为深度学习和深度神经网络技术进步的主要亮点之一。它主要用于自然语言处理中的高级应用。谷歌正在使用它来增强其搜索引擎结果。OpenAI 使用 Transformer 创建了著名的 GPT-2和 GPT-3模型。自从2017年首次亮相以来,Transformer 架构不断发展并扩展到多种不同的变体,从语言任务扩展到其他领域。它们已被用于时间序列预测。它们是 DeepMind 的蛋白质结构预测模型 AlphaFold

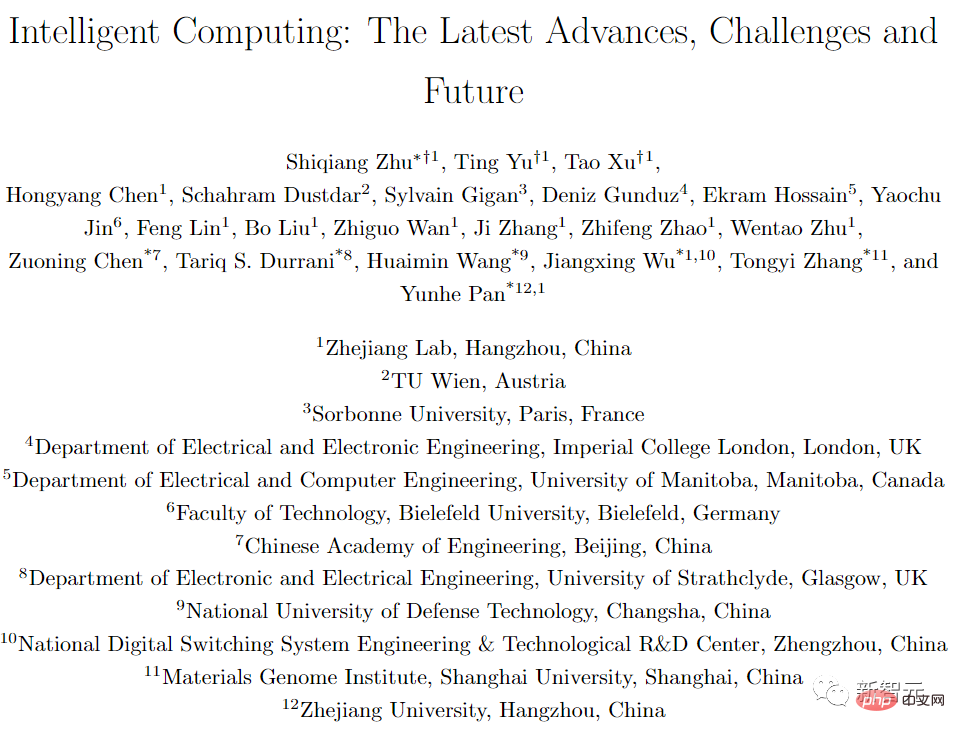

五年后AI所需算力超100万倍!十二家机构联合发表88页长文:「智能计算」是解药Apr 09, 2023 pm 07:01 PM

五年后AI所需算力超100万倍!十二家机构联合发表88页长文:「智能计算」是解药Apr 09, 2023 pm 07:01 PM人工智能就是一个「拼财力」的行业,如果没有高性能计算设备,别说开发基础模型,就连微调模型都做不到。但如果只靠拼硬件,单靠当前计算性能的发展速度,迟早有一天无法满足日益膨胀的需求,所以还需要配套的软件来协调统筹计算能力,这时候就需要用到「智能计算」技术。最近,来自之江实验室、中国工程院、国防科技大学、浙江大学等多达十二个国内外研究机构共同发表了一篇论文,首次对智能计算领域进行了全面的调研,涵盖了理论基础、智能与计算的技术融合、重要应用、挑战和未来前景。论文链接:https://spj.scien

AI模型告诉你,为啥巴西最可能在今年夺冠!曾精准预测前两届冠军Apr 09, 2023 pm 01:51 PM

AI模型告诉你,为啥巴西最可能在今年夺冠!曾精准预测前两届冠军Apr 09, 2023 pm 01:51 PM说起2010年南非世界杯的最大网红,一定非「章鱼保罗」莫属!这只位于德国海洋生物中心的神奇章鱼,不仅成功预测了德国队全部七场比赛的结果,还顺利地选出了最终的总冠军西班牙队。不幸的是,保罗已经永远地离开了我们,但它的「遗产」却在人们预测足球比赛结果的尝试中持续存在。在艾伦图灵研究所(The Alan Turing Institute),随着2022年卡塔尔世界杯的持续进行,三位研究员Nick Barlow、Jack Roberts和Ryan Chan决定用一种AI算法预测今年的冠军归属。预测模型图

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.

Notepad++7.3.1

Easy-to-use and free code editor

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.

Dreamweaver CS6

Visual web development tools

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment