Yann LeCun says giant models cannot achieve the goal of approaching human intelligence

"Language only carries a small part of all human knowledge; most human knowledge and all animal knowledge are non-linguistic; therefore, large language models cannot approach human-level intelligence." This is Turing Award winner Yann LeCun's latest thinking on the prospects of artificial intelligence.

Yesterday, his new article, co-authored with New York University postdoc Jacob Browning, was published in "NOEMA" and sparked widespread discussion.

In the article, the authors discuss the currently popular large language models and argue that they have clear limits. Future efforts in the field of AI may need to prioritize giving machines an understanding of other levels of knowledge about the real world.

Let’s see what they say.

Some time ago, former Google AI ethics researcher Blake Lemoine claimed that the AI chatbot LaMDA is as conscious as a human, which caused an uproar in the field.

LaMDA is actually a large language model (LLM) designed to predict the next likely word for any given text. Since many conversations are predictable to some degree, these systems can infer how to keep a conversation going productively. LaMDA does such a good job at this kind of task that Blake Lemoine began to wonder whether AI has "consciousness."

Researchers in the field hold different views on this matter: some scoff at the idea of machines being conscious; some think that LaMDA may not be conscious, but the next model might be. Others point out that it is not difficult for machines to "fool" humans.

The diversity of responses highlights a deeper problem: as LLMs become more common and powerful, it seems increasingly difficult to agree on how to view these models. Over the years, these systems have beaten many "common sense" language reasoning benchmarks, yet they appear to have little of the promised common sense when tested, and are even prone to talking nonsense and making illogical and dangerous suggestions. This raises a troubling question: how can these systems be so intelligent yet have such limited capabilities?

In fact, the most fundamental problem is not artificial intelligence but the limits of language. Once we give up old assumptions about the connection between consciousness and language, it becomes clear that these systems are destined to have only a superficial understanding of the world and will never come close to the "comprehensive thinking" of humans. In short, while these models are already some of the most impressive AI systems on the planet, they will never be as intelligent as we humans are.

For much of the 19th and 20th centuries, a dominant theme in philosophy and science was: knowledge is merely language. This means that understanding one thing requires only understanding the content of a sentence and relating that sentence to other sentences. According to this logic, the ideal language form would be a logical-mathematical form composed of arbitrary symbols connected by strict inference rules.

The philosopher Wittgenstein said: "The sum total of true propositions is natural science." This position was established in the 20th century and later caused a lot of controversy.

Some highly educated intellectuals still hold the view: "Everything we can know can be contained in an encyclopedia, so just reading all the contents of the encyclopedia will give us a comprehensive understanding of everything." This view also inspired much of the early work on Symbolic AI, which made symbolic processing the default paradigm. For these researchers, AI knowledge consists of large databases of true sentences connected to each other by hand-made logic. The goal of the AI system is to output the right sentence at the right time, that is, to process symbols in an appropriate way.

This concept is the basis of the Turing test: if a machine "says" everything it is supposed to say, that means it knows what it is talking about, because on this view knowing the correct sentences and when to use them is all there is to knowledge.

But this view has been severely criticized. The counterargument is that just because a machine can talk about things, it does not mean that it understands what is being said. This is because language is only a highly specific and very limited representation of knowledge. All languages, whether programming languages, symbolic logic languages, or everyday spoken language, enable a specific type of representational mode; each is good at expressing discrete objects and properties and the relationships between them at a very high level of abstraction.

However, all modes of representation involve compressing information about things, and they differ in what is kept and what is left out in the compression. The representation mode of language struggles with more concrete information, such as describing irregular shapes, the movement of objects, the functions of complex mechanisms, or the meticulous brushstrokes in paintings. Some non-linguistic representation schemes can express this information in an easy-to-understand way, including iconic knowledge, distributed knowledge, etc.

The Limitations of Language

To understand the shortcomings of language as a mode of representation, we must first realize how little information language conveys. In fact, language is a very low-bandwidth method of transmitting information: isolated words or sentences convey little without context. Furthermore, the meaning of many sentences is very ambiguous due to the large number of homonyms and pronouns. As researchers such as Chomsky have pointed out, language is not a clear and unambiguous communication tool.

But humans don't need perfect communication tools because we share a non-linguistic understanding. Our understanding of a sentence often depends on a deep understanding of the context in which the sentence is placed, allowing us to infer the meaning of the linguistic expression. We often talk directly about the matter at hand, such as a football match, or communicate with someone in a social role within a situation, such as ordering food from a waiter.

The same goes for reading passages of text, a task used to probe AI systems for common sense but also a popular way to teach context-free reading comprehension skills to children. This approach focuses on using general reading comprehension strategies to understand text, but research shows that the amount of background knowledge a child has about the topic is actually a key factor in comprehension. Understanding whether a sentence or paragraph is correct depends on a basic grasp of the subject matter.

"It is clear that these systems are mired in superficial understanding and will never come close to the full range of human thought."

The inherently contextual nature of words and sentences is at the core of how LLMs work. Neural networks typically represent knowledge as know-how, that is, the proficient ability to grasp patterns that are highly context-sensitive and to summarize regularities (concrete and abstract) that are necessary to process inputs in an elaborate way but are suitable only for limited tasks.

In an LLM, this means the system identifies patterns at multiple levels of existing text, seeing both how words are connected within a sentence and how sentences are connected together in larger paragraphs. The result is that a model's grasp of language is inevitably context-sensitive. Each word is understood not according to its dictionary meaning, but according to its role in various sentences. Since many words (such as "carburetor," "menu," "tuning," or "electronics") are used almost exclusively in specific fields, even an isolated sentence containing one of these words will predictably reveal its context.

In short, an LLM is trained to pick up on the background knowledge behind each sentence, looking at surrounding words and sentences to piece together what is going on. This gives it endless possibilities to take different sentences or phrases as input and come up with reasonable (although hardly flawless) ways to continue a conversation or fill out the rest of an article. A system trained on human-written paragraphs for use in daily communication should possess the general understanding necessary to hold high-quality conversations.

Shallow understanding

Some people are reluctant to use the word "understanding" in this context or to call LLMs "intelligent," but it is not clear that this kind of semantic gatekeeping convinces anyone. Critics accuse these systems of being a form of imitation, and rightly so. This is because an LLM's understanding of language, while impressive, is superficial. This shallowness feels familiar: classrooms are full of "jargon-speaking" students who have no idea what they are talking about and are in effect imitating their professors or the text they are reading. It's just part of life. We are often unclear about what we know, especially when it comes to knowledge gained from language.

LLMs have acquired this kind of superficial understanding of everything. Systems like GPT-3 are trained by masking out part of a sentence, or by predicting the next word in a paragraph, forcing the machine to guess the word most likely to fill the gap and then correcting its wrong guesses. The system eventually becomes adept at guessing the most likely words, making itself an effective predictive system.
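The idea of "guessing the most likely next word" can be illustrated with a deliberately tiny sketch. This is not how GPT-3 is built (it uses a large neural network, not word counts); it is a bigram counter chosen only to show the prediction objective on a small scale. The corpus and the function names `train_bigram_model` and `predict_next_word` are made up for this illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(corpus):
    """Count, for each word, which words follow it in the training text."""
    follower_counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        # Pair every word with the word that comes right after it.
        for current_word, next_word in zip(words, words[1:]):
            follower_counts[current_word][next_word] += 1
    return follower_counts


def predict_next_word(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical toy corpus, invented for this example.
TOY_CORPUS = [
    "the waiter brought the menu",
    "the waiter brought the bill",
    "the waiter brought the menu again",
]

model = train_bigram_model(TOY_CORPUS)
print(predict_next_word(model, "waiter"))      # most frequent follower of "waiter"
print(predict_next_word(model, "carburetor"))  # never seen in training, so None
```

Even this toy predictor only knows which words tend to follow which; it has no notion of what a waiter or a menu is, which is the kind of shallowness the article describes.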

This brings some real understanding: to any question or puzzle, there are usually only a few right answers, but an infinite number of wrong answers. This forces the system to learn language-specific skills, such as interpreting jokes, solving word problems, or solving logic puzzles, in order to predict the correct answers to these types of questions on a regular basis.

These skills and related knowledge allow machines to explain how complex things work, simplify difficult concepts, rewrite and retell stories, and acquire many other language-related abilities. This resembles what Symbolic AI posited, except that instead of a vast database of sentences linked by logical rules, the machines represent knowledge as context-sensitive know-how used to come up with a reasonable next sentence given the previous line.

"Abandoning the idea that all knowledge is verbal makes us realize how much of our knowledge is non-verbal."

But the ability to explain a concept in language is different from the ability to actually use it. The system can explain how to perform long division while not actually being able to do it, or it can explain what it should not say and yet happily go on to say it. Contextual knowledge is embedded in one form (the ability to verbalize knowledge of language) but not in another (the skills for how to do things, such as being empathetic or dealing with difficult issues sensitively).

The latter kind of expertise is essential for language users, but that does not make it a linguistic skill; the language component is not primary. This applies to many concepts, even those learned from lectures and books: while science classes do have a lecture component, students' scores are primarily based on their work in the lab. Especially outside of the humanities, being able to talk about something is often not as useful or important as the basic skills needed to make things work.

Once we dig a little deeper, it’s easy to see how shallow these systems actually are: Their attention spans and memories are roughly equivalent to a paragraph. It’s easy to miss this if we’re having a conversation, as we tend to focus on the last one or two comments and grapple with the next reply.

But the skills involved in more complex conversations (active listening, recalling and revisiting previous comments, sticking to a topic to make a specific point while avoiding distractions, and so on) all require more attention and memory than machines possess.

This further reduces the types of things they can understand: it's easy to trick them by changing the topic, changing the language, or being weird every few minutes. Stray too far and the system will start over from scratch, lump your new views in with old comments, switch chat languages with you, or believe anything you say. The understanding necessary to develop a coherent worldview is far beyond the capabilities of machines.

Beyond Language

Abandoning the idea that all knowledge is linguistic makes us realize that a considerable part of our knowledge is non-linguistic. While books contain a lot of information we can unpack and use, the same goes for many other items: IKEA's instructions don't even bother to write captions next to the diagrams, AI researchers often look at the diagrams in a paper to grasp the network architecture before browsing the text, and travelers can follow the red or green lines on a map to navigate to where they want to go.

The knowledge here goes beyond simple icons, charts and maps. Humanity has learned much directly from exploring the world, which shows us what objects and people can and cannot do. The structure of objects and the human environment conveys a lot of information intuitively: the doorknob is at hand height, the handle of a hammer has a soft grip, etc. Nonverbal mental simulations in animals and humans are common and useful for planning scenarios and can be used to create or reverse engineer artifacts.

Likewise, by imitating social customs and rituals, we can teach the next generation a variety of skills, from preparing food and medicine to calming down during stressful times. Much of our cultural knowledge is iconic, or in the form of precise movements passed down from skilled practitioners to apprentices. These subtle patterns of information are difficult to express and convey in words, but are still understandable to others. This is also the precise type of contextual information that neural networks are good at picking up and refining.

"A system trained solely on language will never come close to human intelligence, even if it is trained from now on until the heat death of the universe."

Language is important because it can convey large amounts of information in a small format, especially with the advent of printing and the Internet, which allows content to be reproduced and widely distributed. But compressing information with language doesn't come without a cost: decoding a dense passage requires a lot of effort. Humanities classes may require extensive outside reading, with much of class time spent reading difficult passages. Building a deep understanding is time-consuming and laborious, but informative.

This explains why a language-trained machine can know so much and yet understand nothing—it is accessing a small portion of human knowledge through a tiny bottleneck. But that small slice of human knowledge can be about anything, whether it's love or astrophysics. So it's a bit like a mirror: it gives the illusion of depth and can reflect almost anything, but it's only a centimeter thick. If we try to explore its depths, we'll hit a wall.

Do the right thing

This doesn't make machines any dumber, but it does show that there are inherent limits to how smart they can be. A system trained solely on language will never come close to human intelligence, even if it is trained from now on until the heat death of the universe. This is the wrong way to build a knowledge system. But if we just scratch the surface, machines certainly seem to be getting closer to humans. And in many cases, surface is enough. Few of us actually apply the Turing Test to other people, actively questioning their depth of understanding and forcing them to do multi-digit multiplication problems. Most conversations are small talk.

However, we should not confuse the superficial understanding that LLMs possess with the deep understanding that humans gain by observing the wonders of the world, exploring it, practicing in it, and interacting with cultures and other people. Language may be a useful component in expanding our understanding of the world, but language does not exhaust intelligence, a point we understand from the behavior of many species, such as corvids, octopuses, and primates.

On the contrary, deep non-verbal understanding is a necessary condition for language to be meaningful. Precisely because humans have a deep understanding of the world, we can quickly understand what others are saying. This broader, context-sensitive learning and knowledge is a more fundamental, ancient knowledge that underlies the emergence of physical biological sentience, making survival and prosperity possible.

This is also the more important task that artificial intelligence researchers focus on when looking for common sense in artificial intelligence. LLMs have no stable body or world to perceive, so their knowledge begins and ends with words, and this common sense is always superficial. The goal is to have AI systems focus on the world they are talking about rather than the words themselves, but LLMs don't grasp the difference. This deep understanding cannot be approximated through words alone, which is the wrong direction to take.

The extensive experience people now have with various large language models clearly shows how little can be obtained from language alone.

The above is the detailed content of Yann LeCun says giant models cannot achieve the goal of approaching human intelligence. For more information, please follow other related articles on the PHP Chinese website!

从VAE到扩散模型:一文解读以文生图新范式Apr 08, 2023 pm 08:41 PM

从VAE到扩散模型:一文解读以文生图新范式Apr 08, 2023 pm 08:41 PM1 前言在发布DALL·E的15个月后,OpenAI在今年春天带了续作DALL·E 2,以其更加惊艳的效果和丰富的可玩性迅速占领了各大AI社区的头条。近年来,随着生成对抗网络(GAN)、变分自编码器(VAE)、扩散模型(Diffusion models)的出现,深度学习已向世人展现其强大的图像生成能力;加上GPT-3、BERT等NLP模型的成功,人类正逐步打破文本和图像的信息界限。在DALL·E 2中,只需输入简单的文本(prompt),它就可以生成多张1024*1024的高清图像。这些图像甚至

找不到中文语音预训练模型?中文版 Wav2vec 2.0和HuBERT来了Apr 08, 2023 pm 06:21 PM

找不到中文语音预训练模型?中文版 Wav2vec 2.0和HuBERT来了Apr 08, 2023 pm 06:21 PMWav2vec 2.0 [1],HuBERT [2] 和 WavLM [3] 等语音预训练模型,通过在多达上万小时的无标注语音数据(如 Libri-light )上的自监督学习,显著提升了自动语音识别(Automatic Speech Recognition, ASR),语音合成(Text-to-speech, TTS)和语音转换(Voice Conversation,VC)等语音下游任务的性能。然而这些模型都没有公开的中文版本,不便于应用在中文语音研究场景。 WenetSpeech [4] 是

普林斯顿陈丹琦:如何让「大模型」变小Apr 08, 2023 pm 04:01 PM

普林斯顿陈丹琦:如何让「大模型」变小Apr 08, 2023 pm 04:01 PM“Making large models smaller”这是很多语言模型研究人员的学术追求,针对大模型昂贵的环境和训练成本,陈丹琦在智源大会青源学术年会上做了题为“Making large models smaller”的特邀报告。报告中重点提及了基于记忆增强的TRIME算法和基于粗细粒度联合剪枝和逐层蒸馏的CofiPruning算法。前者能够在不改变模型结构的基础上兼顾语言模型困惑度和检索速度方面的优势;而后者可以在保证下游任务准确度的同时实现更快的处理速度,具有更小的模型结构。陈丹琦 普

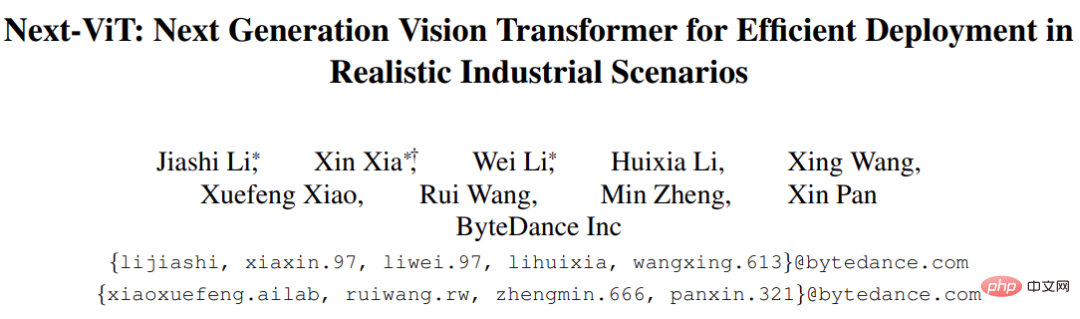

解锁CNN和Transformer正确结合方法,字节跳动提出有效的下一代视觉TransformerApr 09, 2023 pm 02:01 PM

解锁CNN和Transformer正确结合方法,字节跳动提出有效的下一代视觉TransformerApr 09, 2023 pm 02:01 PM由于复杂的注意力机制和模型设计,大多数现有的视觉 Transformer(ViT)在现实的工业部署场景中不能像卷积神经网络(CNN)那样高效地执行。这就带来了一个问题:视觉神经网络能否像 CNN 一样快速推断并像 ViT 一样强大?近期一些工作试图设计 CNN-Transformer 混合架构来解决这个问题,但这些工作的整体性能远不能令人满意。基于此,来自字节跳动的研究者提出了一种能在现实工业场景中有效部署的下一代视觉 Transformer——Next-ViT。从延迟 / 准确性权衡的角度看,

Stable Diffusion XL 现已推出—有什么新功能,你知道吗?Apr 07, 2023 pm 11:21 PM

Stable Diffusion XL 现已推出—有什么新功能,你知道吗?Apr 07, 2023 pm 11:21 PM3月27号,Stability AI的创始人兼首席执行官Emad Mostaque在一条推文中宣布,Stable Diffusion XL 现已可用于公开测试。以下是一些事项:“XL”不是这个新的AI模型的官方名称。一旦发布稳定性AI公司的官方公告,名称将会更改。与先前版本相比,图像质量有所提高与先前版本相比,图像生成速度大大加快。示例图像让我们看看新旧AI模型在结果上的差异。Prompt: Luxury sports car with aerodynamic curves, shot in a

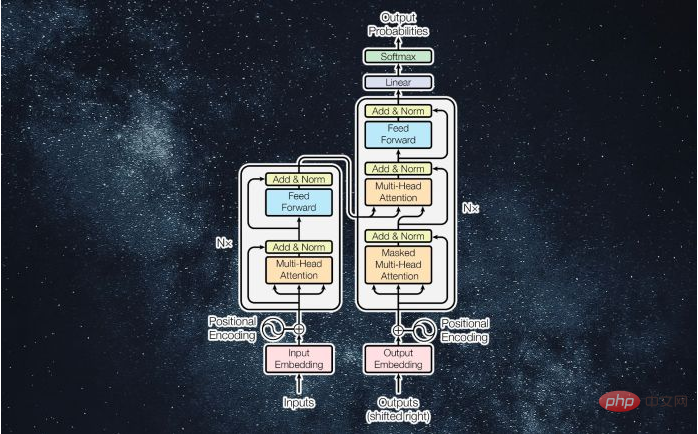

什么是Transformer机器学习模型?Apr 08, 2023 pm 06:31 PM

什么是Transformer机器学习模型?Apr 08, 2023 pm 06:31 PM译者 | 李睿审校 | 孙淑娟近年来, Transformer 机器学习模型已经成为深度学习和深度神经网络技术进步的主要亮点之一。它主要用于自然语言处理中的高级应用。谷歌正在使用它来增强其搜索引擎结果。OpenAI 使用 Transformer 创建了著名的 GPT-2和 GPT-3模型。自从2017年首次亮相以来,Transformer 架构不断发展并扩展到多种不同的变体,从语言任务扩展到其他领域。它们已被用于时间序列预测。它们是 DeepMind 的蛋白质结构预测模型 AlphaFold

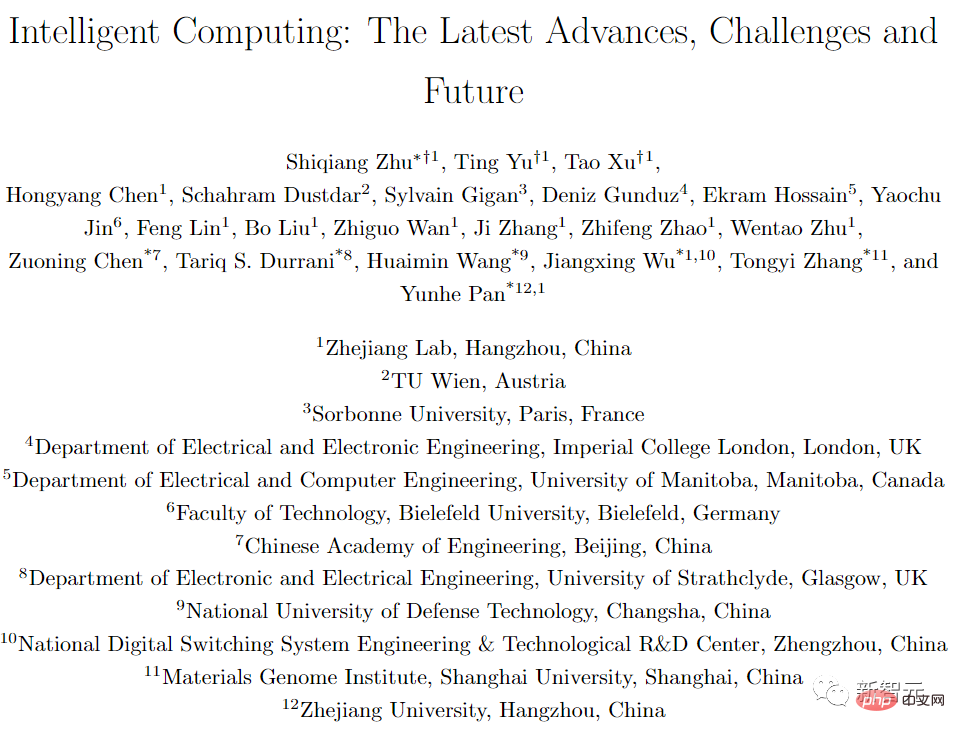

五年后AI所需算力超100万倍!十二家机构联合发表88页长文:「智能计算」是解药Apr 09, 2023 pm 07:01 PM

五年后AI所需算力超100万倍!十二家机构联合发表88页长文:「智能计算」是解药Apr 09, 2023 pm 07:01 PM人工智能就是一个「拼财力」的行业,如果没有高性能计算设备,别说开发基础模型,就连微调模型都做不到。但如果只靠拼硬件,单靠当前计算性能的发展速度,迟早有一天无法满足日益膨胀的需求,所以还需要配套的软件来协调统筹计算能力,这时候就需要用到「智能计算」技术。最近,来自之江实验室、中国工程院、国防科技大学、浙江大学等多达十二个国内外研究机构共同发表了一篇论文,首次对智能计算领域进行了全面的调研,涵盖了理论基础、智能与计算的技术融合、重要应用、挑战和未来前景。论文链接:https://spj.scien

AI模型告诉你,为啥巴西最可能在今年夺冠!曾精准预测前两届冠军Apr 09, 2023 pm 01:51 PM

AI模型告诉你,为啥巴西最可能在今年夺冠!曾精准预测前两届冠军Apr 09, 2023 pm 01:51 PM说起2010年南非世界杯的最大网红,一定非「章鱼保罗」莫属!这只位于德国海洋生物中心的神奇章鱼,不仅成功预测了德国队全部七场比赛的结果,还顺利地选出了最终的总冠军西班牙队。不幸的是,保罗已经永远地离开了我们,但它的「遗产」却在人们预测足球比赛结果的尝试中持续存在。在艾伦图灵研究所(The Alan Turing Institute),随着2022年卡塔尔世界杯的持续进行,三位研究员Nick Barlow、Jack Roberts和Ryan Chan决定用一种AI算法预测今年的冠军归属。预测模型图

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

PhpStorm Mac version

The latest (2018.2.1) professional PHP integrated development tool

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software

SecLists

SecLists is the ultimate security tester's companion. It is a collection of various types of lists that are frequently used during security assessments, all in one place. SecLists helps make security testing more efficient and productive by conveniently providing all the lists a security tester might need. List types include usernames, passwords, URLs, fuzzing payloads, sensitive data patterns, web shells, and more. The tester can simply pull this repository onto a new test machine and he will have access to every type of list he needs.

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.