The first version of this paper was written in May 2018, and it was finally published in December 2022. Over these four years I received a lot of support and understanding from my supervisors.

(I also hope this experience encourages students who are submitting papers. If the paper is well written, it will be accepted eventually. Don't give up easily!)

The early arXiv version is: Query Attack via Opposite-Direction Feature: Towards Robust Image Retrieval

Paper link: https://link.springer.com/article/10.1007/s11263-022-01737-y

Paper backup link: https://zdzheng.xyz/files/IJCV_Retrieval_Robustness_CameraReady.pdf

Code: https://github.com/layumi/U_turn

Authors: Zhedong Zheng, Liang Zheng, Yi Yang and Fei Wu

Compared with the earlier version, we have:

- made some adjustments to the formulas;

- added discussion of many new related works;

- added multi-scale query attack, black-box attack, and defense experiments, covering three different angles;

- added new methods, comparisons, and new visualizations on Food256, Market-1501, CUB, Oxford, Paris, and other datasets;

- attacked the PCB structure in re-ID and Wide ResNet on Cifar10.

In practice, for example, suppose we want to attack the image retrieval system of Google or Baidu to make big news (just kidding). We can download an image of a dog, compute its feature with an ImageNet model (or another model, preferably one close to the retrieval system), compute the adversarial noise by turning the feature around (the method in this article), and add the noise back to the dog image. Then we run image search with the attacked dog image. Baidu's and Google's systems can no longer return dog-related content, although we humans can still recognize that this is an image of a dog.

P.S. At that time, I also tried attacking Google image search. People can still recognize the image as a dog, but Google often returns "mosaic"-related images. My guess is that Google does not rely entirely on deep features, or that its model is quite different from the ImageNet model. As a result, an attacked image often drifts toward "mosaic" rather than another entity category (such as airplanes). Of course, "mosaic" can be considered a success to some extent!

What

1. The original motivation of this article is actually very simple. Existing re-ID and landmark retrieval models have reached a Recall@1 of more than 95%. So can we design a way to attack retrieval models? On the one hand, we want to probe what the re-ID model is really relying on. On the other hand, attack serves better defense, so we also want to study how to defend against such abnormal cases.

2. The difference between a retrieval model and a traditional classification model is that the retrieval model compares extracted features and ranks the results instead of predicting a category, as shown in the table below.

3. Another characteristic of the retrieval problem is the open-set setting: the categories at test time are often unseen during training. If you are familiar with the CUB dataset, under the retrieval setting there are more than 100 bird species in the training set and more than 100 bird species in the test set, and the two sets of species do not overlap. Matching and ranking rely purely on the extracted visual features. Therefore, some classification attack methods are not suitable for attacking retrieval models, because the gradient based on category prediction is often inaccurate during the attack.

4. When testing a retrieval model, there are two parts of data: the query images, and the image gallery (which is large and generally inaccessible). For practical feasibility, our method mainly attacks the query image to cause wrong retrieval results.
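The ranking step described above can be sketched as follows. This is a minimal illustration, not the code of any particular retrieval system: the function names `rank_gallery` and `recall_at_k`, the toy 2-D features, and the labels are all made up for this example. It sorts the gallery by cosine similarity to the query feature and checks whether a correct match appears in the top k.

```python
import numpy as np

def rank_gallery(query_feat, gallery_feats):
    """Sort gallery items by cosine similarity to the query, highest first."""
    q = query_feat / np.linalg.norm(query_feat)
    g = gallery_feats / np.linalg.norm(gallery_feats, axis=1, keepdims=True)
    sims = g @ q
    order = np.argsort(-sims)
    return order, sims[order]

def recall_at_k(order, gallery_labels, query_label, k):
    """1.0 if any of the top-k gallery items shares the query label, else 0.0."""
    return float(np.any(gallery_labels[order[:k]] == query_label))

# Toy 2-D features and labels (illustrative values only).
gallery = np.array([[1.0, 0.0], [0.6, 0.8], [-1.0, 0.1], [0.0, 1.0]])
gallery_labels = np.array([7, 7, 3, 5])
query = np.array([0.9, 0.1])
query_label = 7

order, sims = rank_gallery(query, gallery)
print("ranking:", order)
print("Recall@1:", recall_at_k(order, gallery_labels, query_label, k=1))
```

Note that no category prediction appears anywhere in this pipeline; only the features matter, which is why the attack targets the query feature.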

How

1. A natural idea is to attack the features. So how do we attack features? This builds on our earlier observations about the cross-entropy loss (please refer to the large-margin softmax loss article). When we train with a classification loss, the features f often follow a radial distribution. This is because, during learning, the last classification layer effectively measures the cosine similarity between the feature and its weight W. As shown in the figure below, after the model is trained, samples of the same class are distributed near the W of that class, so that f*W reaches its maximum.
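To make the link between f*W and the angle explicit, the score that a bias-free last layer gives to class c can be written with a standard identity. The symbols $W_c$ (the weight vector of class c) and $\theta_c$ (the angle between f and $W_c$) are notation introduced here for illustration:

$$
W_c^\top f = \lVert W_c \rVert \, \lVert f \rVert \cos\theta_c
$$

With the norms fixed, the score is largest when $\theta_c$ is close to 0, which is consistent with features of the same class spreading out along the direction of $W_c$.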

2. So we propose a very simple method: make the features turn around. As shown in the figure below, two common classification attack methods can be visualized in the same way. In (a), the attack suppresses the category with the highest predicted probability (such as Fast Gradient) by giving -Wmax, so the red gradient propagates along the opposite direction of Wmax. In (b), the attack instead pulls the feature toward the least likely category (such as Least-likely), so the red gradient is along Wmin.

3. These two classification attacks are of course direct and effective on traditional classification problems. However, since the test sets in retrieval consist entirely of unseen categories (unseen bird species), the natural distribution of f does not closely fit Wmax or Wmin. Our strategy is therefore very simple: since we have f, we can just move f to -f, as shown in Figure (c).

In this way, in the feature matching stage, results that originally ranked high will ideally be ranked lowest, because their cosine similarity with -f changes from close to 1 to close to -1.

This achieves our goal of attacking the retrieval ranking.
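The turn-around idea can be sketched on a toy model. The snippet below is a minimal illustration, not the released implementation: the feature extractor `tanh(A @ x)`, the inner-product loss, the step size, and the names `extract`, `cosine`, and `uturn_attack` are all assumptions made here so that the gradient can be written by hand in NumPy. It perturbs a query within an L-infinity budget epsilon so that its feature moves toward -f.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy feature extractor f(x) = tanh(A @ x); a real system would use a CNN.
A = rng.normal(size=(16, 64)) / 8.0

def extract(x):
    return np.tanh(A @ x)

def cosine(a, b):
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def uturn_attack(x, eps=0.3, steps=20):
    """Perturb x within an L-inf ball of radius eps so its feature moves toward -f(x)."""
    target = -extract(x)            # opposite-direction feature
    alpha = 2.0 * eps / steps       # step size (an arbitrary choice for this sketch)
    x_adv = x.copy()
    for _ in range(steps):
        f = extract(x_adv)
        # Loss = -<f(x_adv), target>; gradient w.r.t. x_adv by the chain rule.
        grad = -(A.T @ ((1.0 - f ** 2) * target))
        x_adv = x_adv - alpha * np.sign(grad)       # signed descent step
        x_adv = np.clip(x_adv, x - eps, x + eps)    # keep the perturbation bounded
    return x_adv

x = rng.normal(size=64)             # stand-in for a query image
x_adv = uturn_attack(x)
print("cosine before:", cosine(extract(x), extract(x)))
print("cosine after: ", cosine(extract(x_adv), extract(x)))
```

In practice the gradient would come from backpropagation through the retrieval network rather than a hand-written formula, and the perturbed image would also be clipped to the valid pixel range.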

4. A small extension. In retrieval we also often use multi-scale query augmentation, so we studied how to maintain the attack effect in this case. (The main difficulty is that the resize operation may smooth out some small but critical perturbations.)

In fact, our way of handling it is also very simple. Just like a model ensemble, we take the ensemble average of the adversarial gradients over multiple scales.
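Averaging the adversarial gradient over scales can be sketched as below. This is a simplified illustration under several assumptions: the input is a 1-D signal instead of an image, "resizing" is linear interpolation to a different length and back, the feature extractor is the toy `tanh(A @ x)`, and the names `resize_matrix`, `grad_at_scale`, and `multiscale_gradient` as well as the scale set are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
N = 64
A = rng.normal(size=(16, N)) / 8.0   # hypothetical toy extractor f(x) = tanh(A @ x)

def resize_matrix(n, scale):
    """Linear operator that resamples a length-n signal to round(n*scale) points and back."""
    m = max(2, int(round(n * scale)))
    coarse = np.linspace(0, n - 1, m)
    fine = np.arange(n)
    down = np.stack([np.interp(coarse, fine, e) for e in np.eye(n)], axis=1)  # (m, n)
    up = np.stack([np.interp(fine, coarse, e) for e in np.eye(m)], axis=1)    # (n, m)
    return up @ down

def grad_at_scale(x_adv, target, R):
    """Gradient of -<f(R @ x_adv), target> with respect to x_adv."""
    f = np.tanh(A @ (R @ x_adv))
    return -(R.T @ (A.T @ ((1.0 - f ** 2) * target)))

def multiscale_gradient(x_adv, x, scales=(0.75, 1.0, 1.25)):
    """Average the adversarial gradients computed at several input scales."""
    grads = []
    for s in scales:
        R = resize_matrix(len(x), s)
        target = -np.tanh(A @ (R @ x))   # opposite of the clean feature at this scale
        grads.append(grad_at_scale(x_adv, target, R))
    return np.mean(grads, axis=0)

x = rng.normal(size=N)
g = multiscale_gradient(x, x)
x_adv = x - 0.01 * np.sign(g)            # one signed step using the averaged gradient
```

Because each resize is a linear map, its transpose carries the gradient back to the original resolution, so a perturbation that survives smoothing at one scale still contributes to the average.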

Experiment

1. On three datasets and three metrics, we fix the perturbation magnitude, which is the epsilon on the horizontal axis, and compare which method makes the retrieval model make more mistakes under the same perturbation magnitude. Our method is the yellow line, which is always at the bottom, meaning that the attack is more effective.

2. We also provide quantitative experimental results on five datasets (Food, CUB, Market, Oxford, Paris).

3. To demonstrate the mechanism of the method, we also tried attacking a classification model on Cifar10.

You can see that our strategy of changing the feature of the last layer also strongly suppresses top-5 accuracy. For top-1, since our method does not push toward a candidate category, it is slightly weaker than Least-likely, but the two are almost the same.
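One way to see why reversing the feature hurts top-5 so much is the idealized case below. It is a toy sketch under stated assumptions: a hypothetical bias-free linear last layer `W`, random features, labels set to the clean prediction, and an attack that reaches exactly -f. Negating the feature negates every logit, so the originally top-ranked class becomes the last-ranked one.

```python
import numpy as np

rng = np.random.default_rng(0)

def topk_accuracy(logits, labels, k):
    """Fraction of samples whose label is among the k highest-scoring classes."""
    topk = np.argsort(-logits, axis=1)[:, :k]
    return float((topk == labels[:, None]).any(axis=1).mean())

W = rng.normal(size=(10, 32))            # hypothetical bias-free last layer, 10 classes
feats = rng.normal(size=(100, 32))       # hypothetical clean features
clean_logits = feats @ W.T
labels = clean_logits.argmax(axis=1)     # pretend the clean model is always right

flipped_logits = (-feats) @ W.T          # ideal case: the attack reaches exactly -f

print("clean top-5:  ", topk_accuracy(clean_logits, labels, 5))
print("flipped top-5:", topk_accuracy(flipped_logits, labels, 5))
```

A real attack under a small epsilon only moves the feature part of the way, so the measured drop is less extreme than in this idealized case.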

4. Black box attack

We also tried using adversarial samples generated with ResNet50 to attack a black-box DenseNet model (whose parameters are not available to us). We found that good transferability can also be achieved.

5. Adversarial defense

We use online adversarial training to train a defense model. We found that it still fails under new white-box attacks, but under small perturbations it is more stable (loses fewer points) than a completely undefended model.
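The general shape of online adversarial training can be sketched as follows. This is a generic illustration and not the training recipe of the paper: it uses a toy logistic-regression classifier, a single signed-gradient (FGSM-style) step as the attack, and made-up data; the names `fgsm`, `train`, and `accuracy` are hypothetical. "Online" here means that the adversarial samples are regenerated against the current weights at every step.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy binary data: two Gaussian blobs (illustrative only).
X = np.vstack([rng.normal(-1.0, 1.0, size=(200, 2)),
               rng.normal(1.0, 1.0, size=(200, 2))])
y = np.concatenate([np.zeros(200), np.ones(200)])

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fgsm(X, y, w, b, eps):
    """One signed-gradient step that increases the logistic loss of each sample."""
    p = sigmoid(X @ w + b)
    grad_x = (p - y)[:, None] * w[None, :]   # d(loss)/d(x) for logistic regression
    return X + eps * np.sign(grad_x)

def train(X, y, eps, epochs=200, lr=0.1):
    w, b = np.zeros(X.shape[1]), 0.0
    for _ in range(epochs):
        # Regenerate adversarial samples against the current model at every step.
        X_in = fgsm(X, y, w, b, eps) if eps > 0 else X
        p = sigmoid(X_in @ w + b)
        w = w - lr * X_in.T @ (p - y) / len(y)
        b = b - lr * np.mean(p - y)
    return w, b

def accuracy(X, y, w, b):
    return float(np.mean((sigmoid(X @ w + b) > 0.5) == (y > 0.5)))

w_plain, b_plain = train(X, y, eps=0.0)   # undefended baseline
w_adv, b_adv = train(X, y, eps=0.2)       # adversarially trained model
for name, (w, b) in {"plain": (w_plain, b_plain), "adv": (w_adv, b_adv)}.items():
    attacked = fgsm(X, y, w, b, eps=0.2)
    print(name, "clean:", accuracy(X, y, w, b), "attacked:", accuracy(attacked, y, w, b))
```

The same loop structure applies to a retrieval network: generate the attack against the current weights, then take the training step on the attacked batch.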

6. Visualization of feature movement

This is also my favorite experiment. On Cifar10, we change the dimension of the last classification layer to 2 so that we can plot how the features at the classification layer change.

As shown in the figure below, as the perturbation magnitude epsilon increases, the features of the samples slowly "turn around". For example, most of the orange features have moved to the opposite side.


Use of SLM over LLM for Effective Problem Solving - Analytics VidhyaApr 27, 2025 am 09:27 AM

Use of SLM over LLM for Effective Problem Solving - Analytics VidhyaApr 27, 2025 am 09:27 AMsummary: Small Language Model (SLM) is designed for efficiency. They are better than the Large Language Model (LLM) in resource-deficient, real-time and privacy-sensitive environments. Best for focus-based tasks, especially where domain specificity, controllability, and interpretability are more important than general knowledge or creativity. SLMs are not a replacement for LLMs, but they are ideal when precision, speed and cost-effectiveness are critical. Technology helps us achieve more with fewer resources. It has always been a promoter, not a driver. From the steam engine era to the Internet bubble era, the power of technology lies in the extent to which it helps us solve problems. Artificial intelligence (AI) and more recently generative AI are no exception

How to Use Google Gemini Models for Computer Vision Tasks? - Analytics VidhyaApr 27, 2025 am 09:26 AM

How to Use Google Gemini Models for Computer Vision Tasks? - Analytics VidhyaApr 27, 2025 am 09:26 AMHarness the Power of Google Gemini for Computer Vision: A Comprehensive Guide Google Gemini, a leading AI chatbot, extends its capabilities beyond conversation to encompass powerful computer vision functionalities. This guide details how to utilize

Gemini 2.0 Flash vs o4-mini: Can Google Do Better Than OpenAI?Apr 27, 2025 am 09:20 AM

Gemini 2.0 Flash vs o4-mini: Can Google Do Better Than OpenAI?Apr 27, 2025 am 09:20 AMThe AI landscape of 2025 is electrifying with the arrival of Google's Gemini 2.0 Flash and OpenAI's o4-mini. These cutting-edge models, launched weeks apart, boast comparable advanced features and impressive benchmark scores. This in-depth compariso

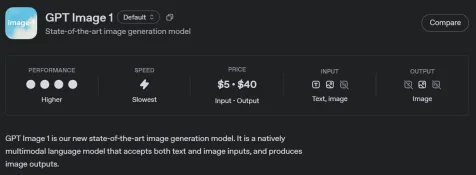

How to Generate and Edit Images Using OpenAI gpt-image-1 APIApr 27, 2025 am 09:16 AM

How to Generate and Edit Images Using OpenAI gpt-image-1 APIApr 27, 2025 am 09:16 AMOpenAI's latest multimodal model, gpt-image-1, revolutionizes image generation within ChatGPT and via its API. This article explores its features, usage, and applications. Table of Contents Understanding gpt-image-1 Key Capabilities of gpt-image-1

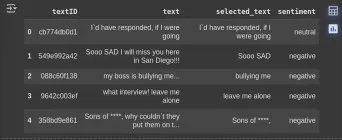

How to Perform Data Preprocessing Using Cleanlab? - Analytics VidhyaApr 27, 2025 am 09:15 AM

How to Perform Data Preprocessing Using Cleanlab? - Analytics VidhyaApr 27, 2025 am 09:15 AMData preprocessing is paramount for successful machine learning, yet real-world datasets often contain errors. Cleanlab offers an efficient solution, using its Python package to implement confident learning algorithms. It automates the detection and

The AI Skills Gap Is Slowing Down Supply ChainsApr 26, 2025 am 11:13 AM

The AI Skills Gap Is Slowing Down Supply ChainsApr 26, 2025 am 11:13 AMThe term "AI-ready workforce" is frequently used, but what does it truly mean in the supply chain industry? According to Abe Eshkenazi, CEO of the Association for Supply Chain Management (ASCM), it signifies professionals capable of critic

How One Company Is Quietly Working To Transform AI ForeverApr 26, 2025 am 11:12 AM

How One Company Is Quietly Working To Transform AI ForeverApr 26, 2025 am 11:12 AMThe decentralized AI revolution is quietly gaining momentum. This Friday in Austin, Texas, the Bittensor Endgame Summit marks a pivotal moment, transitioning decentralized AI (DeAI) from theory to practical application. Unlike the glitzy commercial

Nvidia Releases NeMo Microservices To Streamline AI Agent DevelopmentApr 26, 2025 am 11:11 AM

Nvidia Releases NeMo Microservices To Streamline AI Agent DevelopmentApr 26, 2025 am 11:11 AMEnterprise AI faces data integration challenges The application of enterprise AI faces a major challenge: building systems that can maintain accuracy and practicality by continuously learning business data. NeMo microservices solve this problem by creating what Nvidia describes as "data flywheel", allowing AI systems to remain relevant through continuous exposure to enterprise information and user interaction. This newly launched toolkit contains five key microservices: NeMo Customizer handles fine-tuning of large language models with higher training throughput. NeMo Evaluator provides simplified evaluation of AI models for custom benchmarks. NeMo Guardrails implements security controls to maintain compliance and appropriateness

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment