Not just DALL·E! Now AI painters can model and make videos. I can't even imagine what will happen in the future.

Recently, Stability.ai, a company founded and funded by Emad Mostaque, announced the public release of its AI art model, Stable Diffusion.

You might think this is just another attempt at AI in the arts, but it is much more than that, for two reasons. First, unlike DALL-E 2, Stable Diffusion is open source. This means that anyone can build on its backbone, for free, to create applications for specific text-to-image tasks. In addition, the developers of Midjourney have implemented a feature that lets users combine it with Stable Diffusion, which has led to some amazing results. Just imagine what will happen in the next few months.

Second, unlike DALL-E mini and Disco Diffusion, Stable Diffusion can create stunningly realistic and artistic work that has nothing to envy in OpenAI's or Google's models. People even claim that it is the new state of the art (SOTA) among "generative search engines". (Unless otherwise stated, all images in this article were created using Stable Diffusion.)

Stable Diffusion embodies the best features of the AI art world: it is arguably the best AI art model available, and it is open source. This combination is simply unheard of and will have a huge impact. What is even more interesting is that news about these services may reach you through the most unexpected sources: your parents, your children, your partner, your friends or your colleagues. These people are often outsiders to what is happening in the field of artificial intelligence, and they are about to discover its latest trends. Art could be the way AI finally knocks on the door of those who are blind to the future. Isn't that poetic?

Not just an open-source DALL·E 2

Stability.ai was born to create "open AI tools that allow us to realize our potential." Not just a research model that never gets into the hands of most people, but a tool with real-world applications, open for me and you to use and explore.

This is what makes it different from other tech companies, like OpenAI, which jealously guards the secrets of its best systems (GPT-3 and DALL-E 2), or Google, which has never intended to release its own systems (PaLM, LaMDA, Imagen or Parti), even as private betas. Stability.ai's public release goes beyond sharing model weights and code, which are critical to the health of science and technology but are not something most people care about. It also provides a code-free, ready-to-use website for those of us who don't want to code or don't know how.

The website is called DreamStudio Lite. It is free to use and lets you generate up to 200 images. Like DALL-E 2, it has a paid model, which gets you 1,000 images for £10 (OpenAI refills your account with 15 credits per month, but to get more credits you have to buy the 115-credit pack for $15). DALL-E costs US$0.03/image, while Stable Diffusion costs £0.01/image. Stable Diffusion can also be used at scale via an API (the cost scales linearly, so you can get 100K generations for £1,000). Beyond image generation, Stability.ai will soon announce DreamStudio Pro (audio/video) and Enterprise (studio). Another feature that DreamStudio may implement soon is the ability to generate images from other images instead of the usual text-to-image setup.
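The per-image prices quoted above can be checked with a little arithmetic. The following is only a rough sketch: the pack prices and sizes come from the figures in this article, while the number of images per DALL-E 2 credit (`ASSUMED_IMAGES_PER_DALLE_CREDIT`) is an assumption that the article does not state, and the function names are purely illustrative. The two prices are in different currencies and are not converted.

```python
from decimal import Decimal

# Figures quoted in the article.
DREAMSTUDIO_PACK_GBP = Decimal("10")   # price of one paid DreamStudio pack
DREAMSTUDIO_PACK_IMAGES = 1000         # images included in that pack
DALLE_PACK_USD = Decimal("15")         # price of one DALL-E 2 credit pack
DALLE_PACK_CREDITS = 115               # credits included in that pack

# ASSUMPTION (not stated in the article): one DALL-E 2 credit yields 4 images.
ASSUMED_IMAGES_PER_DALLE_CREDIT = 4


def price_per_image(pack_price: Decimal, images: int) -> Decimal:
    """Return the price of a single image, rounded to two decimal places."""
    return (pack_price / images).quantize(Decimal("0.01"))


def linear_cost(unit_price: Decimal, n_images: int) -> Decimal:
    """Total cost when the price scales linearly with the number of images."""
    return unit_price * n_images


sd_unit = price_per_image(DREAMSTUDIO_PACK_GBP, DREAMSTUDIO_PACK_IMAGES)
dalle_unit = price_per_image(
    DALLE_PACK_USD, DALLE_PACK_CREDITS * ASSUMED_IMAGES_PER_DALLE_CREDIT
)

print(f"Stable Diffusion: £{sd_unit} per image")
print(f"DALL-E 2: ${dalle_unit} per image")
print(f"100,000 Stable Diffusion images: £{linear_cost(sd_unit, 100_000)}")
```

Under that assumption the sketch reproduces the article's rounded figures; with a different number of images per credit, the DALL-E 2 price per image would change accordingly.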

The website also offers a resource on prompt engineering, which you may find useful if you are new to this area. Plus, unlike DALL-E 2, you can control parameters to influence the outcome and retain more agency over it. Stability.ai has done everything it can to make the model easy to access. OpenAI was first and had to move more slowly to assess the potential risks and biases inherent in the model, but it did not need to keep the model in closed beta for so long or build a business model that restricts creativity. Both Midjourney and Stable Diffusion have proven this.

Openness and security > privacy and control

Open source technology has its own limitations. Openness should come before privacy and tight control, but not before security. As the company explains in the announcement, the model is released under "a license that allows for both commercial and non-commercial use," with a focus on open and responsible downstream use of the model. The license also requires that derivative works be subject to at least the same use-based restrictions.

Open source is a good model in itself, but if we don't want this technology to end up hurting people or flooding the internet with misinformation, it is equally important to establish reasonable guardrails. "Because these models are trained on a wide range of image-text pairs scraped from the Internet, the models may reproduce some social biases and produce unsafe content, so open mitigation strategies and public discussion of these biases can allow everyone to be part of this conversation." In any case, openness and security > privacy and control.

The power of open source to change the world

With a solid foundation of ethical values and openness, Stable Diffusion promises to outperform its competitors in real-world impact.

If you want to download it and run it on your own computer, you should know that it requires 6.9 GB of VRAM. That fits on high-end consumer-grade GPUs, which makes it more lightweight than DALL-E 2 but still out of reach for most users. The rest of us, like me, can start using DreamStudio right away.

Stable Diffusion is widely regarded as the best AI art model currently available, and it will become the foundation for countless applications, networks and services, redefining how we create and interact with art. From now on, apps designed for specific use cases will be built from the ground up for everyone to use. People are already enhancing children's drawings, making collages with outpainting and inpainting, designing magazine covers, drawing comics, creating morphed and animated videos, generating images from images, and more. Some of these applications are already possible with DALL-E and Midjourney, but Stable Diffusion can push the current creative revolution into its next stage. In the words of Andrej Karpathy, former director of AI at Tesla and a former student of Fei-Fei Li, "artistic creation has entered a new era of human AI cooperation."

AI art models like Stable Diffusion are a new class of tools and should be understood with a new frame of mind, suited to the new reality we live in. We cannot simply draw analogies or parallels to other eras and expect to accurately explain or predict the future. Some things will be similar, and some won't. We must treat this coming future as uncharted territory.

Closing thoughts

There is no doubt that the public release of Stable Diffusion is the most important and influential event so far in the field of AI art models, and this is just the beginning.

Emad Mostaque, one of the authors, said on Twitter: "Expect quality to continue to rise across the board as we release faster, better and more specific models. Not just images, but audio next month, then move on to 3D, video. Language, code, and more training."

We are on the verge of a multi-year revolution in the way we interact, connect and understand art, and creativity in general. And not just in the philosophical, intellectual realm, but as something that everyone now shares and experiences. The creative world will change forever and we must have open and respectful conversations to create a better future for all. Only when open source technology is used responsibly can we create the change we want to see.

The above is the detailed content of Not just DALL·E! Now AI painters can model and make videos. I can't even imagine what will happen in the future.. For more information, please follow other related articles on the PHP Chinese website!

谷歌三件套指的是哪三个软件Sep 30, 2022 pm 01:54 PM

谷歌三件套指的是哪三个软件Sep 30, 2022 pm 01:54 PM谷歌三件套指的是:1、google play商店,即下载各种应用程序的平台,类似于移动助手,安卓用户可以在商店下载免费或付费的游戏和软件;2、Google Play服务,用于更新Google本家的应用和Google Play提供的其他第三方应用;3、谷歌服务框架(GMS),是系统软件里面可以删除的一个APK程序,通过谷歌平台上架的应用和游戏都需要框架的支持。

为什么中国不卖google手机Mar 30, 2023 pm 05:31 PM

为什么中国不卖google手机Mar 30, 2023 pm 05:31 PM中国不卖google手机的原因:谷歌已经全面退出中国市场了,所以不能在中国销售,在国内是没有合法途径销售。在中国消费市场中,消费者大都倾向于物美价廉以及功能实用的产品,所以竞争实力本就因政治因素大打折扣的谷歌手机主体市场一直不在中国大陆。

谷歌超强AI超算碾压英伟达A100!TPU v4性能提升10倍,细节首次公开Apr 07, 2023 pm 02:54 PM

谷歌超强AI超算碾压英伟达A100!TPU v4性能提升10倍,细节首次公开Apr 07, 2023 pm 02:54 PM虽然谷歌早在2020年,就在自家的数据中心上部署了当时最强的AI芯片——TPU v4。但直到今年的4月4日,谷歌才首次公布了这台AI超算的技术细节。论文地址:https://arxiv.org/abs/2304.01433相比于TPU v3,TPU v4的性能要高出2.1倍,而在整合4096个芯片之后,超算的性能更是提升了10倍。另外,谷歌还声称,自家芯片要比英伟达A100更快、更节能。与A100对打,速度快1.7倍论文中,谷歌表示,对于规模相当的系统,TPU v4可以提供比英伟达A100强1.

谷歌并未放弃TensorFlow,将于2023年发布新版,明确四大支柱Apr 12, 2023 am 11:52 AM

谷歌并未放弃TensorFlow,将于2023年发布新版,明确四大支柱Apr 12, 2023 am 11:52 AM2015 年,谷歌大脑开放了一个名为「TensorFlow」的研究项目,这款产品迅速流行起来,成为人工智能业界的主流深度学习框架,塑造了现代机器学习的生态系统。从那时起,成千上万的开源贡献者以及众多的开发人员、社区组织者、研究人员和教育工作者等都投入到这一开源软件库上。然而七年后的今天,故事的走向已经完全不同:谷歌的 TensorFlow 失去了开发者的拥护。因为 TensorFlow 用户已经开始转向 Meta 推出的另一款框架 PyTorch。众多开发者都认为 TensorFlow 已经输掉

LLM之战,谷歌输了!越来越多顶尖研究员跳槽OpenAIApr 07, 2023 pm 05:48 PM

LLM之战,谷歌输了!越来越多顶尖研究员跳槽OpenAIApr 07, 2023 pm 05:48 PM前几天,谷歌差点遭遇一场公关危机,Bert一作、已跳槽OpenAI的前员工Jacob Devlin曝出,Bard竟是用ChatGPT的数据训练的。随后,谷歌火速否认。而这场争议,也牵出了一场大讨论:为什么越来越多Google顶尖研究员跳槽OpenAI?这场LLM战役它还能打赢吗?知友回复莱斯大学博士、知友「一堆废纸」表示,其实谷歌和OpenAI的差距,是数据的差距。「OpenAI对LLM有强大的执念,这是Google这类公司完全比不上的。当然人的差距只是一个方面,数据的差距以及对待数据的态度才

参数少量提升,性能指数爆发!谷歌:大语言模型暗藏「神秘技能」Apr 11, 2023 pm 11:16 PM

参数少量提升,性能指数爆发!谷歌:大语言模型暗藏「神秘技能」Apr 11, 2023 pm 11:16 PM由于可以做一些没训练过的事情,大型语言模型似乎具有某种魔力,也因此成为了媒体和研究员炒作和关注的焦点。当扩展大型语言模型时,偶尔会出现一些较小模型没有的新能力,这种类似于「创造力」的属性被称作「突现」能力,代表我们向通用人工智能迈进了一大步。如今,来自谷歌、斯坦福、Deepmind和北卡罗来纳大学的研究人员,正在探索大型语言模型中的「突现」能力。解码器提示的 DALL-E神奇的「突现」能力自然语言处理(NLP)已经被基于大量文本数据训练的语言模型彻底改变。扩大语言模型的规模通常会提高一系列下游N

四分钟对打300多次,谷歌教会机器人打乒乓球Apr 10, 2023 am 09:11 AM

四分钟对打300多次,谷歌教会机器人打乒乓球Apr 10, 2023 am 09:11 AM让一位乒乓球爱好者和机器人对打,按照机器人的发展趋势来看,谁输谁赢还真说不准。机器人拥有灵巧的可操作性、腿部运动灵活、抓握能力出色…… 已被广泛应用于各种挑战任务。但在与人类互动紧密的任务中,机器人的表现又如何呢?就拿乒乓球来说,这需要双方高度配合,并且球的运动非常快速,这对算法提出了重大挑战。在乒乓球比赛中,首要的就是速度和精度,这对学习算法提出了很高的要求。同时,这项运动具有高度结构化(具有固定的、可预测的环境)和多智能体协作(机器人可以与人类或其他机器人一起对打)两大特点,使其成为研究人

超5800亿美元!微软谷歌神仙打架,让英伟达市值飙升,约为5个英特尔Apr 11, 2023 pm 04:31 PM

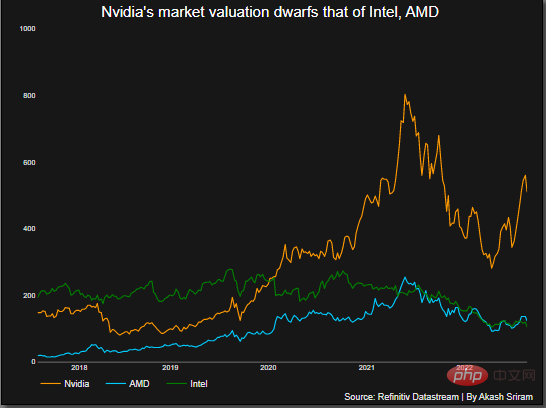

超5800亿美元!微软谷歌神仙打架,让英伟达市值飙升,约为5个英特尔Apr 11, 2023 pm 04:31 PMChatGPT在手,有问必答。你可知,与它每次对话的计算成本简直让人泪目。此前,分析师称ChatGPT回复一次,需要2美分。要知道,人工智能聊天机器人所需的算力背后烧的可是GPU。这恰恰让像英伟达这样的芯片公司豪赚了一把。2月23日,英伟达股价飙升,使其市值增加了700多亿美元,总市值超5800亿美元,大约是英特尔的5倍。在英伟达之外,AMD可以称得上是图形处理器行业的第二大厂商,市场份额约为20%。而英特尔持有不到1%的市场份额。ChatGPT在跑,英伟达在赚随着ChatGPT解锁潜在的应用案

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

SublimeText3 English version

Recommended: Win version, supports code prompts!

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

WebStorm Mac version

Useful JavaScript development tools

SublimeText3 Linux new version

SublimeText3 Linux latest version

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.