
MiniGPT-4 looks at pictures, chats, and can also sketch and build websites; the video version of Stable Diffusion is here

Table of Contents

- Align your Latents: High-Resolution Video Synthesis with Latent Diffusion Models

- MiniGPT-4: Enhancing Vision-language Understanding with Advanced Large Language Models

- OpenAssistant Conversations - Democratizing Large Language Model Alignment

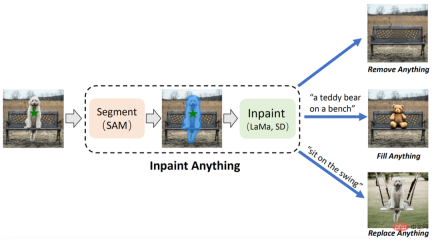

- Inpaint Anything: Segment Anything Meets Image Inpainting

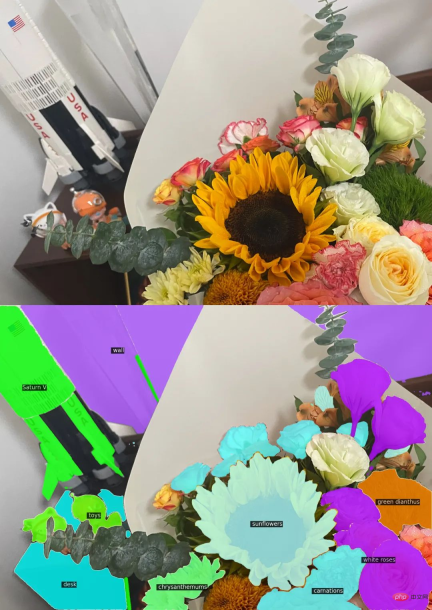

- Open-Vocabulary Semantic Segmentation with Mask-adapted CLIP

- Plan4MC: Skill Reinforcement Learning and Planning for Open-World Minecraft Tasks

- T2Ranking: A large-scale Chinese Benchmark for Passage Ranking

- ArXiv Weekly Radiostation: more selected NLP, CV, and ML papers (with audio)

Paper 1: Align your Latents: High-Resolution Video Synthesis with Latent Diffusion Models

- Authors: Andreas Blattmann, Robin Rombach, et al.

- Paper address: https://arxiv.org/pdf/2304.08818.pdf

Abstract: Researchers from the University of Munich, NVIDIA, and other institutions have recently used latent diffusion models (LDMs) to achieve high-resolution long video synthesis.

In the paper, the researchers apply video models to real-world problems and generate high-resolution long videos. They focus on two video generation problems: video synthesis of high-resolution real-world driving data, which has great potential as a simulation engine for autonomous driving, and text-guided video generation for creative content.

To this end, the researchers propose the Video Latent Diffusion Model (Video LDM), extending LDMs to the computationally intensive task of high-resolution video generation. In contrast to previous work on video generation with diffusion models (DMs), they first pre-train Video LDM on images only (or use an available pre-trained image LDM), which allows them to exploit large-scale image datasets.

They then introduce a temporal dimension into the latent-space DM, keeping the pre-trained spatial layers fixed and training only the new temporal layers on encoded image sequences (i.e. videos), which turns the LDM image generator into a video generator (picture below, left). Finally, the LDM decoder is fine-tuned in a similar manner to achieve temporal consistency in pixel space (picture below, right).
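The freeze-and-extend recipe described above can be illustrated with a deliberately tiny sketch. It is not the authors' implementation: the scalar weights `w_s` and `w_t`, the one-frame look-back used for temporal mixing, and all function names are assumptions chosen so the example runs in plain Python. The only point it makes is that the spatial weight stays fixed while gradient descent updates the temporal weight.

```python
# Toy illustration only: a "video" is a list of frames, a frame is a list of floats.
# w_s stands in for pre-trained spatial (image) layers, w_t for the new temporal layers.

def spatial_layer(frame, w_s):
    """Per-frame operation; sees one frame at a time, like an image model."""
    return [w_s * x for x in frame]


def temporal_layer(frames, w_t):
    """Mix each frame with the previous one (hypothetical one-step look-back)."""
    mixed = []
    for t, frame in enumerate(frames):
        prev = frames[t - 1] if t > 0 else [0.0] * len(frame)
        mixed.append([cur + w_t * p for cur, p in zip(frame, prev)])
    return mixed


def forward(video, w_s, w_t):
    encoded = [spatial_layer(frame, w_s) for frame in video]
    return temporal_layer(encoded, w_t)


def train_temporal_only(video, target, w_s, w_t, lr=0.05, steps=200):
    """Gradient descent on mean squared error, updating w_t only."""
    # The spatial layer is frozen, so its output can be computed once.
    encoded = [spatial_layer(frame, w_s) for frame in video]
    for _ in range(steps):
        pred = temporal_layer(encoded, w_t)
        grad, count = 0.0, 0
        for t, frame in enumerate(encoded):
            prev = encoded[t - 1] if t > 0 else [0.0] * len(frame)
            for p, y, h_prev in zip(pred[t], target[t], prev):
                grad += 2.0 * (p - y) * h_prev  # d(loss)/d(w_t) for one element
                count += 1
        w_t -= lr * grad / count
    return w_s, w_t  # w_s is returned unchanged


video = [[1.0, 2.0], [2.0, 1.0], [0.5, 1.5]]
target = forward(video, w_s=2.0, w_t=0.3)  # synthetic "ground truth" video
w_s, w_t = train_temporal_only(video, target, w_s=2.0, w_t=0.0)
```

In a real model the same idea would apply to whole layers rather than two scalars: the image-trained parameters are excluded from the optimizer, and only the inserted temporal parameters receive updates.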

Recommendation: A video version of Stable Diffusion: NVIDIA reaches resolutions of up to 1280×2048 and clips of up to 4.7 seconds.

Paper 2: MiniGPT-4: Enhancing Vision-language Understanding with Advanced Large Language Models

- Authors: Zhu Deyao, Chen Jun, Shen Xiaoqian, Li Xiang, Mohamed H. Elhoseiny

- Paper address: https://minigpt-4.github.io/

Abstract: A team from King Abdullah University of Science and Technology (KAUST) developed MiniGPT-4, a model similar to GPT-4. MiniGPT-4 demonstrates many capabilities similar to those of GPT-4, such as generating detailed image descriptions and creating websites from handwritten drafts. The authors also observed other emerging capabilities, including writing stories and poems based on a given image, proposing solutions to problems shown in images, and teaching users how to cook based on food photos.

MiniGPT-4 uses a projection layer to align a frozen visual encoder with a frozen LLM (Vicuna). It consists of a pre-trained visual encoder (ViT and Q-Former), a single linear projection layer, and the Vicuna large language model. Only the linear projection layer needs to be trained to align the visual features with Vicuna.
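As a rough illustration of what a single linear projection between two frozen components does, the sketch below maps toy visual tokens into a toy LLM embedding space. The dimensions, the weight values, the function names, and the choice to place the projected visual tokens in front of the text embeddings are all assumptions made for this example; the source does not describe how MiniGPT-4 assembles its prompt.

```python
# Toy sketch: features are plain lists of floats; all sizes and values are made up.

def linear_project(tokens, weight, bias):
    """Apply y = W x + b to every visual token (W has shape d_out x d_in)."""
    return [
        [sum(w * x for w, x in zip(row, token)) + b for row, b in zip(weight, bias)]
        for token in tokens
    ]


def build_llm_input(visual_tokens, text_embeddings, weight, bias):
    """Project visual tokens into the LLM embedding space, then prepend them to the text."""
    return linear_project(visual_tokens, weight, bias) + text_embeddings


# Two visual tokens of size 3 (stand-in for the frozen visual encoder's output).
visual_tokens = [[1.0, 0.0, 2.0], [0.0, 1.0, 1.0]]
# The only trainable part: a projection from size 3 to size 2.
weight = [[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]]
bias = [0.5, -0.5]
# One text token, already embedded by the frozen LLM (hypothetical values).
text_embeddings = [[0.1, 0.2]]

llm_input = build_llm_input(visual_tokens, text_embeddings, weight, bias)
```

Because the encoder and the LLM are both frozen, `weight` and `bias` would be the only values updated during training in this picture.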

Example demonstration: Creating a website from a sketch.

Recommendation: Nearly 10,000 stars in 3 days. Try GPT-4-like image understanding: MiniGPT-4 chats about pictures and builds websites from sketches.

Paper 3: OpenAssistant Conversations - Democratizing Large Language Model Alignment

- Authors: Andreas Köpf, Yannic Kilcher, et al.

- Paper address: https://drive.google.com/file/d/10iR5hKwFqAKhL3umx8muOWSRm7hs5FqX/view

Abstract: To democratize large-scale alignment research, researchers from institutions including LAION AI (which provides the open-source data used by Stable Diffusion) collected a large amount of text-based input and feedback to create OpenAssistant Conversations, a diverse and unique dataset designed specifically for training language models and other AI applications.

The dataset is a human-generated, human-annotated corpus of assistant-style conversations covering a wide range of topics and writing styles. It consists of 161,443 messages distributed across 66,497 conversation trees in 35 different languages. The corpus is the product of a global crowdsourcing effort involving more than 13,500 volunteers, it is an invaluable resource for any developer looking to build SOTA instruction models, and the entire dataset is freely accessible to anyone.
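To make "messages distributed across conversation trees" concrete, here is a minimal sketch of one possible in-memory representation. The `Message` class, its field names, and the sample conversation are hypothetical and are not the dataset's actual schema; only the two totals at the end come from the figures quoted above.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Message:
    role: str  # "prompter" or "assistant"
    text: str
    replies: List["Message"] = field(default_factory=list)


def count_messages(node: Message) -> int:
    """Count a message plus everything below it in the tree."""
    return 1 + sum(count_messages(reply) for reply in node.replies)


def threads(node: Message, prefix=()):
    """Yield every root-to-leaf path as a tuple of messages."""
    path = prefix + (node,)
    if not node.replies:
        yield path
    for reply in node.replies:
        yield from threads(reply, path)


# A made-up tree: one prompt, two alternative assistant replies, one follow-up.
tree = Message("prompter", "How do I reverse a list in Python?", [
    Message("assistant", "Use reversed(xs) or xs[::-1].", [
        Message("prompter", "Which one copies the list?"),
    ]),
    Message("assistant", "Call xs.reverse() to do it in place."),
])

n_messages = count_messages(tree)
all_threads = list(threads(tree))

# Average tree size implied by the reported totals (about 2.43 messages per tree).
avg_per_tree = 161_443 / 66_497
```

A tree rather than a flat dialogue lets several alternative replies hang off the same prompt, so each root-to-leaf path can be read as one conversation.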

In addition, to demonstrate the effectiveness of the OpenAssistant Conversations dataset, the study also presents OpenAssistant, a chat-based assistant that can understand tasks, interact with third-party systems, and dynamically retrieve information. This is arguably the first fully open-source, large-scale instruction-tuned model trained on human data.

The results show that OpenAssistant's responses were preferred over those of GPT-3.5-turbo (ChatGPT).

The OpenAssistant Conversations data was collected through a web-app interface in five steps: writing a prompt, labeling prompts, adding reply messages as prompter or assistant, labeling replies, and ranking assistant replies.

Recommendation: The world's largest open-source alternative to ChatGPT.

Paper 4: Inpaint Anything: Segment Anything Meets Image Inpainting

- Authors: Tao Yu, Runseng Feng, et al.

- Paper address: http://arxiv.org/abs/2304.06790

Abstract: Inpaint Anything (IA) has three main functions:

- (i) Remove Anything: users only need to click on the object they want to remove, and IA removes it without leaving a trace, acting as an efficient "magic eraser".

- (ii) Fill Anything: the user can additionally tell IA, through a text prompt, what to fill the object region with. IA then drives an embedded AIGC (AI-Generated Content) model, such as Stable Diffusion [2], to generate the corresponding content, enabling free "content creation".

- (iii) Replace Anything: the user can also click to select the object to keep and use a text prompt to tell IA what to replace the object's background with, achieving a vivid "environment transformation".

The overall framework of IA is shown in the figure below:

Recommendation: No fine-grained annotation needed: click on an object to remove it, fill in content, or replace the scene.

Paper 5: Open-Vocabulary Semantic Segmentation with Mask-adapted CLIP

Abstract: Meta and UT Austin jointly proposed a new open-vocabulary segmentation model (OVSeg), which lets the Segment Anything model know which categories to segment. In terms of results, OVSeg can be combined with Segment Anything to perform fine-grained open-vocabulary segmentation. For example, in Figure 1 below, it identifies the types of flowers: sunflowers, white roses, chrysanthemums, carnations, and green dianthus.

Recommendation: Meta and UT Austin propose a new open-vocabulary segmentation model.

Paper 6: Plan4MC: Skill Reinforcement Learning and Planning for Open-World Minecraft Tasks

Abstract: A team from Peking University and the Beijing Academy of Artificial Intelligence (BAAI) proposed Plan4MC, a method that efficiently solves multiple Minecraft tasks without expert data. The authors combine reinforcement learning and planning, decomposing complex tasks into two parts: learning basic skills and planning over skills. They use reinforcement learning with intrinsic rewards to train three types of fine-grained basic skills. The agent uses a large language model to build a skill relationship graph and obtains a task plan by searching on that graph. In the experiments, Plan4MC can currently complete 24 complex and diverse tasks, with a success rate that is greatly improved over all baseline methods.

Recommendation: Using ChatGPT and reinforcement learning to play Minecraft, Plan4MC tackles 24 complex tasks.

Paper 7: T2Ranking: A large-scale Chinese Benchmark for Passage Ranking

Abstract: Passage ranking is an important and challenging topic in information retrieval and has attracted wide attention from both academia and industry. An effective passage ranking model can improve search engine user satisfaction and benefits retrieval-related applications such as question answering and reading comprehension. In this context, benchmark datasets such as MS-MARCO and DuReader_retrieval were constructed to support research on passage ranking. However, most commonly used datasets focus on English. For Chinese, existing datasets are limited in data scale, in fine-grained user annotation, and in how they handle false negatives. Against this background, this study constructed a new Chinese passage ranking benchmark dataset based on real search logs: T2Ranking.

Recommendation: 300,000 real queries and 2 million Internet passages: a Chinese passage ranking benchmark dataset is released.

ArXiv Weekly Radiostation

This week's 10 selected NLP papers are:

2. Exploring the Trade-Offs: Unified Large Language Models vs Local Fine-Tuned Models for Highly-Specific Radiology NLI Task. (from Wei Liu, Dinggang Shen)
4. Stochastic Parrots Looking for Stochastic Parrots: LLMs are Easy to Fine-Tune and Hard to Detect with other LLMs. (from Rachid Guerraoui)
5. Chameleon: Plug-and-Play Compositional Reasoning with Large Language Models. (from Kai-Wei Chang, Song-Chun Zhu, Jianfeng Gao)
6. MER 2023: Multi-label Learning, Modality Robustness, and Semi-Supervised Learning. (from Meng Wang, Erik Cambria, Guoying Zhao)
7. GeneGPT: Teaching Large Language Models to Use NCBI Web APIs. (from Zhiyong Lu)
8. A Survey on Biomedical Text Summarization with Pre-trained Language Model. (from Sophia Ananiadou)
9. Emotion fusion for mental illness detection from social media: A survey. (from Sophia Ananiadou)
10. Language Models Enable Simple Systems for Generating Structured Views of Heterogeneous Data Lakes. (from Christopher Ré)

This week's 10 selected CV papers are:

2. Align-DETR: Improving DETR with Simple IoU-aware BCE loss. (from Xiangyu Zhang)
3. Exploring Incompatible Knowledge Transfer in Few-shot Image Generation. (from Shuicheng Yan)
4. Learning Situation Hyper-Graphs for Video Question Answering. (from Mubarak Shah)
5. Video Generation Beyond a Single Clip. (from Ming-Hsuan Yang)
6. A Data-Centric Solution to NonHomogeneous Dehazing via Vision Transformer. (from Huan Liu)
7. Neuromorphic Optical Flow and Real-time Implementation with Event Cameras. (from Luca Benini, Davide Scaramuzza)
8. Language Guided Local Infiltration for Interactive Image Retrieval. (from Lei Zhang)
9. LipsFormer: Introducing Lipschitz Continuity to Vision Transformers. (from Lei Zhang)
10. UVA: Towards Unified Volumetric Avatar for View Synthesis, Pose rendering, Geometry and Texture Editing. (from Dacheng Tao)

This week's 10 selected ML papers are:

1. Bridging RL Theory and Practice with the Effective Horizon. (from Stuart Russell)
2. Towards transparent and robust data-driven wind turbine power curve models. (from Klaus-Robert Müller)
3. Open-World Continual Learning: Unifying Novelty Detection and Continual Learning. (from Bing Liu)
4. Learning in latent spaces improves the predictive accuracy of deep neural operators. (from George Em Karniadakis)
5. Decouple Graph Neural Networks: Train Multiple Simple GNNs Simultaneously Instead of One. (from Xuelong Li)
6. Generalization and Estimation Error Bounds for Model-based Neural Networks. (from Yonina C. Eldar)
7. RAFT: Reward rAnked FineTuning for Generative Foundation Model Alignment. (from Tong Zhang)
8. Adaptive Consensus Optimization Method for GANs. (from Pawan Kumar)
9. Angle based dynamic learning rate for gradient descent. (from Pawan Kumar)
10. AGNN: Alternating Graph-Regularized Neural Networks to Alleviate Over-Smoothing. (from Wenzhong Guo)
