Can Stable Diffusion surpass algorithms such as JPEG and improve image compression while maintaining clarity?

Text-to-image generation models are very popular right now, both diffusion models in general and the open-source Stable Diffusion model in particular.

Recently, Matthias Bühlmann, a Swiss software engineer, discovered by accident that Stable Diffusion can be used not only to generate images but also to compress bitmap images, at an even higher compression rate than JPEG and WebP.

For example, a photo of a llama that is 768KB uncompressed can be reduced to 5.66KB with JPEG, while Stable Diffusion compresses it further to 4.98KB and retains more high-resolution detail with fewer compression artifacts. The result is visibly better than the output of the other compression algorithms.

However, this compression method also has flaws: it is not suitable for images of faces or text, and in some cases it even generates content that was not in the original image.

Retraining an autoencoder could achieve a compression effect similar to Stable Diffusion's, but one of the main advantages of using Stable Diffusion is that someone has already invested millions in training it, so why spend money training a compression model again?

How Stable Diffusion compresses images

Diffusion models are challenging the dominance of generative models, and the corresponding open source Stable Diffusion model is also setting off an artistic revolution in the machine learning community.

Stable Diffusion consists of three trained neural networks connected in series: a variational autoencoder (VAE), a U-Net model, and a text encoder.

The variational autoencoder encodes images from image space into a latent space representation and decodes them back. The source image (512x512 at 3x8 or 4x8 bits) is represented in latent space by a vector with lower resolution (64x64) but higher precision (4x32 bits).
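
To make the trade-off between resolution and precision concrete, the raw storage cost of both representations can be worked out from the shapes quoted above. This is only an arithmetic sketch: the helper name `raw_bytes` is made up for illustration, and a 3-channel, 8-bit source is assumed.

```python
# Raw storage cost of the source image versus the VAE latent,
# using only the shapes and bit depths quoted in the text.

def raw_bytes(width: int, height: int, channels: int, bits_per_channel: int) -> int:
    """Uncompressed size in bytes of a dense width x height x channels array."""
    return width * height * channels * bits_per_channel // 8

source_bytes = raw_bytes(512, 512, 3, 8)   # 24-bit RGB source image
latent_bytes = raw_bytes(64, 64, 4, 32)    # 4-channel latent at 32 bits per value

print(source_bytes // 1024, "kB source")   # 768 kB source
print(latent_bytes // 1024, "kB latent")   # 64 kB latent
print(source_bytes / latent_bytes)         # 12.0
```

So even before any quantization, the latent is already about twelve times smaller than the raw source image.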

The VAE learns to encode images into latent space mainly through self-supervised learning, that is, the input and the output are both the source image. As a result, the latent space representation may look different from one version of the model to the next as training continues.

When the latent space representation from Stable Diffusion v1.4 is remapped and interpreted as a 4-channel color image, it looks like the middle image in the figure below; the key features of the source image are still visible.

Note that a single encode/decode round trip through the VAE is not lossless.

For example, after decoding, the name ANNA on the blue tape is less sharp than in the source image, and its legibility is significantly reduced.

The variational autoencoder in Stable Diffusion v1.4 is not very good at representing small text and faces; it is unclear whether v1.5 improves on this.

Stable Diffusion's main algorithm uses this latent space representation to generate new images from short text descriptions. It starts from random noise in latent space and uses a fully trained U-Net to iteratively remove noise from the latent image, outputting what the model believes it "sees" in the noise in a simpler representation. This is a bit like looking at clouds and reconstructing shapes or faces in our minds from irregular patterns.

When Stable Diffusion is used to generate images, this iterative denoising is guided by the third component, the text encoder, which gives the U-Net information about what it should try to see in the noise.

The compression task does not require a text encoder, however, so the experiment only created the encoding of an empty string, which tells the U-Net to perform unguided denoising during image reconstruction.

To use Stable Diffusion as an image compression codec, the algorithm needs to compress the latent representation produced by the VAE efficiently. The experiments showed that downsampling the latent representation, or applying existing lossy image compression methods to it directly, greatly reduces the quality of the reconstructed image.

But the author found that VAE decoding appears to tolerate quantization of the latent representation very well. Scaling, clamping, and remapping the latents from floating point to 8-bit unsigned integers produces only small visible reconstruction errors.

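
The scale, clamp, and remap step can be sketched in a few lines. This is a minimal illustration, not the author's code: the function names and the assumed value range of [-4, 4] (`value_range`) are hypothetical, and a real implementation would operate on the 64x64x4 latent tensor rather than on a flat list.

```python
def quantize(values, value_range=4.0):
    """Map floats to 8-bit codes: scale to [0, 1], clamp, then round to 0..255."""
    codes = []
    for v in values:
        unit = v / (2.0 * value_range) + 0.5   # scale: [-R, R] -> [0, 1]
        unit = min(1.0, max(0.0, unit))        # clamp values outside the range
        codes.append(round(unit * 255))        # remap to an unsigned 8-bit code
    return bytes(codes)

def dequantize(codes, value_range=4.0):
    """Inverse mapping from 8-bit codes back to approximate float values."""
    return [(c / 255 - 0.5) * 2.0 * value_range for c in codes]

latents = [-5.0, -1.25, 0.0, 0.3, 3.9, 7.0]    # toy values; two lie outside the range
codes = quantize(latents)
restored = dequantize(codes)

for original, approx in zip(latents, restored):
    print(f"{original:6.2f} -> {approx:6.3f}")
```

Values inside the assumed range come back with an error of at most half a quantization step (`value_range / 255`), while values outside it are clamped to the nearest end of the range. Choosing the range is therefore a trade-off between clipping outliers and wasting precision.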
By quantizing the latent representation to 8 bits, the data size of the image becomes 64*64*4*8bit=16kB, much smaller than the uncompressed source image at 512*512*3*8bit=768kB.

Using fewer than 8 bits for the latent representation did not produce good results.

Palettizing and dithering the latents improves the quantization further. The author created a palettized representation using a palette of 256 4*8-bit vectors and Floyd-Steinberg dithering, which compresses the data further to 64*64*8bit+256*4*8bit=5kB.

Dithering the palettized latents introduces noise, which distorts the decoded result. But since Stable Diffusion is based on removing noise from latents, the U-Net can be used to remove the noise introduced by dithering. After 4 iterations, the reconstruction is visually very close to the unquantized version.

Although the amount of data is greatly reduced (the source image is 155 times larger than the compressed image), the result looks very good, but it also introduces some artifacts (such as a heart pattern that is not present in the original image).

Interestingly, the artifacts this compression scheme introduces affect image content more than image quality, and images compressed this way may contain these kinds of artifacts.

The author also used zlib to compress the palette and indices losslessly. In the test samples, most of the results came in below 5kB, but the method still leaves room for optimization.

To evaluate the codec, the author did not use any of the standard test images found on the Internet, because such images are likely to have appeared in Stable Diffusion's training set, and compressing them could give it an unfair advantage in the comparison.

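
The palettization, Floyd-Steinberg dithering, and zlib steps described above can be illustrated on toy data. The sketch below is a simplification and not the author's implementation: it dithers a 16x16 grid of scalar values against a hypothetical 4-entry palette, whereas the article's scheme works on 64x64 latents with a palette of 256 four-component vectors. The helper names `nearest` and `dither` are made up for this example.

```python
import zlib

def nearest(palette, value):
    """Index of the palette entry closest to value."""
    return min(range(len(palette)), key=lambda i: abs(palette[i] - value))

def dither(grid, palette):
    """Floyd-Steinberg dithering: quantize left to right, top to bottom,
    pushing each pixel's quantization error onto its unvisited neighbours."""
    h, w = len(grid), len(grid[0])
    work = [list(row) for row in grid]        # working copy that accumulates error
    indices = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            i = nearest(palette, work[y][x])
            indices[y][x] = i
            err = work[y][x] - palette[i]
            # Standard Floyd-Steinberg weights: 7/16, 3/16, 5/16, 1/16.
            for dx, dy, wgt in ((1, 0, 7), (-1, 1, 3), (0, 1, 5), (1, 1, 1)):
                nx, ny = x + dx, y + dy
                if 0 <= nx < w and ny < h:
                    work[ny][nx] += err * wgt / 16
    return indices

# Toy diagonal gradient with values in 0..255 and a 4-entry palette (both hypothetical).
grid = [[(x + y) * 255 / 30 for x in range(16)] for y in range(16)]
palette = [0, 85, 170, 255]
indices = dither(grid, palette)

# Store the palette followed by one index byte per pixel, then apply zlib losslessly.
payload = bytes(palette) + bytes(i for row in indices for i in row)
compressed = zlib.compress(payload, 9)
assert zlib.decompress(compressed) == payload
print(len(payload), "bytes raw,", len(compressed), "bytes after zlib")

# Size quoted in the text for the real layout: 8-bit indices plus a 256 x 4 x 8-bit palette.
full_size_bytes = (64 * 64 * 8 + 256 * 4 * 8) // 8
print(full_size_bytes)  # 5120 bytes, i.e. 5 kB
```

Because the quantization error is diffused rather than discarded, the average of the dithered values stays close to the average of the input even with a very coarse palette. The price is the high-frequency noise that, in the article's scheme, the U-Net is then asked to remove.
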
To make the comparison as fair as possible, the author used the highest-quality encoder settings of the Python image library and additionally applied lossless data compression to the compressed JPG data using the mozjpeg library.

It is worth noting that while Stable Diffusion's results subjectively look much better than the JPG and WebP compressed images, they are not significantly better on standard metrics such as PSNR or SSIM, but they are no worse either. The kinds of artifacts introduced are simply less noticeable because they affect image content more than image quality.

This compression method is also somewhat dangerous: although the reconstructed features are of high quality, the content may be affected by compression artifacts even when it looks very sharp.

For example, in one test image, Stable Diffusion as a codec does a much better job of preserving image quality, even retaining camera grain (something most traditional compression algorithms struggle with), but the content is still affected by compression artifacts, and fine features such as the shapes of buildings may change.

While it is certainly not possible to recover more of the ground truth from a JPG compressed image than from a Stable Diffusion compressed one, the high visual quality of the Stable Diffusion results can be deceptive, because compression artifacts in JPG and WebP are easier to spot.

If you want to reproduce the experiment, the author has open-sourced the code.

Finally, the author stated that the experiment described in the article is still quite simple, but the results are nonetheless surprising.
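
For readers unfamiliar with PSNR, the metric mentioned above, a minimal sketch of its standard definition follows. It assumes 8-bit samples given as flat sequences; the function name `psnr` and the toy pixel values are illustrative only and are not taken from the author's evaluation code.

```python
import math

def psnr(original, reconstructed, max_value=255):
    """Peak signal-to-noise ratio in dB between two equal-length sample sequences."""
    if len(original) != len(reconstructed):
        raise ValueError("images must have the same number of samples")
    mse = sum((a - b) ** 2 for a, b in zip(original, reconstructed)) / len(original)
    if mse == 0:
        return math.inf                        # identical images
    return 10 * math.log10(max_value ** 2 / mse)

reference = [10, 20, 30, 40]
slightly_off = [11, 21, 31, 41]                # every sample off by one level
print(round(psnr(reference, slightly_off), 2)) # 48.13
```

PSNR only measures per-sample differences, so a reconstruction that is sharp but has subtly changed content can score about the same as one that is blurry but faithful. This is consistent with the observation that the Stable Diffusion results look better without scoring significantly better.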

The above is the detailed content of Can Stable Diffusion surpass algorithms such as JPEG and improve image compression while maintaining clarity?. For more information, please follow other related articles on the PHP Chinese website!

An AI Space Company Is BornMay 12, 2025 am 11:07 AM

An AI Space Company Is BornMay 12, 2025 am 11:07 AMThis article showcases how AI is revolutionizing the space industry, using Tomorrow.io as a prime example. Unlike established space companies like SpaceX, which weren't built with AI at their core, Tomorrow.io is an AI-native company. Let's explore

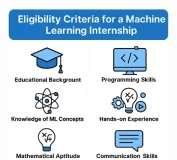

10 Machine Learning Internships in India (2025)May 12, 2025 am 10:47 AM

10 Machine Learning Internships in India (2025)May 12, 2025 am 10:47 AMLand Your Dream Machine Learning Internship in India (2025)! For students and early-career professionals, a machine learning internship is the perfect launchpad for a rewarding career. Indian companies across diverse sectors – from cutting-edge GenA

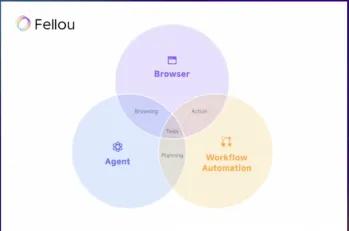

Try Fellou AI and Say Goodbye to Google and ChatGPTMay 12, 2025 am 10:26 AM

Try Fellou AI and Say Goodbye to Google and ChatGPTMay 12, 2025 am 10:26 AMThe landscape of online browsing has undergone a significant transformation in the past year. This shift began with enhanced, personalized search results from platforms like Perplexity and Copilot, and accelerated with ChatGPT's integration of web s

Personal Hacking Will Be A Pretty Fierce BearMay 11, 2025 am 11:09 AM

Personal Hacking Will Be A Pretty Fierce BearMay 11, 2025 am 11:09 AMCyberattacks are evolving. Gone are the days of generic phishing emails. The future of cybercrime is hyper-personalized, leveraging readily available online data and AI to craft highly targeted attacks. Imagine a scammer who knows your job, your f

Pope Leo XIV Reveals How AI Influenced His Name ChoiceMay 11, 2025 am 11:07 AM

Pope Leo XIV Reveals How AI Influenced His Name ChoiceMay 11, 2025 am 11:07 AMIn his inaugural address to the College of Cardinals, Chicago-born Robert Francis Prevost, the newly elected Pope Leo XIV, discussed the influence of his namesake, Pope Leo XIII, whose papacy (1878-1903) coincided with the dawn of the automobile and

FastAPI-MCP Tutorial for Beginners and Experts - Analytics VidhyaMay 11, 2025 am 10:56 AM

FastAPI-MCP Tutorial for Beginners and Experts - Analytics VidhyaMay 11, 2025 am 10:56 AMThis tutorial demonstrates how to integrate your Large Language Model (LLM) with external tools using the Model Context Protocol (MCP) and FastAPI. We'll build a simple web application using FastAPI and convert it into an MCP server, enabling your L

Dia-1.6B TTS : Best Text-to-Dialogue Generation Model - Analytics VidhyaMay 11, 2025 am 10:27 AM

Dia-1.6B TTS : Best Text-to-Dialogue Generation Model - Analytics VidhyaMay 11, 2025 am 10:27 AMExplore Dia-1.6B: A groundbreaking text-to-speech model developed by two undergraduates with zero funding! This 1.6 billion parameter model generates remarkably realistic speech, including nonverbal cues like laughter and sneezes. This article guide

3 Ways AI Can Make Mentorship More Meaningful Than EverMay 10, 2025 am 11:17 AM

3 Ways AI Can Make Mentorship More Meaningful Than EverMay 10, 2025 am 11:17 AMI wholeheartedly agree. My success is inextricably linked to the guidance of my mentors. Their insights, particularly regarding business management, formed the bedrock of my beliefs and practices. This experience underscores my commitment to mentor

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

SublimeText3 English version

Recommended: Win version, supports code prompts!

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft