Microsoft's open source fine-tuned instruction set helps develop a home version of GPT-4, supporting bilingual generation in Chinese and English

"Instructions" are a key factor in the breakthrough progress of the ChatGPT model: they make the output of a language model more consistent with "human preferences."

But annotating instructions requires a lot of manpower. Even with open source language models available, academic institutions and small companies with limited funding find it difficult to train their own ChatGPT.

Recently, Microsoft researchers used the previously proposed Self-Instruct technique and, for the first time, tried using the GPT-4 model to automatically generate the instruction fine-tuning data that a language model requires.

Paper link: https://arxiv.org/pdf/2304.03277.pdf

Code link: https://github.com/Instruction-Tuning-with-GPT-4/GPT-4-LLM

Experimental results on Meta's open source LLaMA model show that the 52,000 English and Chinese instruction-following examples generated by GPT-4 outperform instruction data generated by previous state-of-the-art models on new tasks. The researchers also collected feedback and comparison data from GPT-4 for comprehensive evaluation and reward model training.

Training data

Data collection

The researchers reused the 52,000 instructions from the Alpaca model released by Stanford University. Each instruction describes the task that the model should perform. Following the same prompting strategy as Alpaca, they covered both cases, with and without an input, where the input is optional context for the task, and used a large language model to output the answer to each instruction.
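The with-input and without-input cases described above can be sketched as two prompt templates. This is a minimal illustration, not the authors' code: the template wording is reproduced from memory of the Alpaca project and should be checked against that repository, and `build_prompt` and its parameter names are hypothetical.

```python
# Templates in the style of Stanford Alpaca. The exact wording is an
# assumption; verify it against the Alpaca repository before relying on it.
PROMPT_WITH_INPUT = (
    "Below is an instruction that describes a task, paired with an input that "
    "provides further context. Write a response that appropriately completes "
    "the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Input:\n{input}\n\n### Response:\n"
)
PROMPT_NO_INPUT = (
    "Below is an instruction that describes a task. Write a response that "
    "appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Response:\n"
)


def build_prompt(instruction: str, input_text: str = "") -> str:
    """Build the prompt for one record (hypothetical helper).

    The input is optional context: when it is empty or whitespace-only,
    the shorter template without an Input section is used.
    """
    if input_text.strip():
        return PROMPT_WITH_INPUT.format(instruction=instruction, input=input_text)
    return PROMPT_NO_INPUT.format(instruction=instruction)


if __name__ == "__main__":
    print(build_prompt("Translate the sentence into French.", "Good morning."))
    print(build_prompt("Give three tips for staying healthy."))
```

The resulting string would be sent to the answering model (GPT-3.5 in Alpaca, GPT-4 in this work), and the reply stored as the record's output.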

In the Alpaca dataset, the output is generated using GPT-3.5 (text-davinci-003), but in this paper the researchers chose to use GPT-4 to generate the data, which includes the following four datasets:

1. English Instruction-Following Data: For each of the 52,000 instructions collected in Alpaca, an English GPT-4 answer is provided. Future work is to follow an iterative process and build a new dataset using GPT-4 and self-instruct.

2. Chinese Instruction-Following Data: ChatGPT is used to translate the 52,000 instructions into Chinese, and GPT-4 is asked to answer them in Chinese. This makes it possible to build a Chinese instruction-following model based on LLaMA and to study the cross-language generalization ability of instruction tuning.

3. Comparison Data: GPT-4 is asked to rate its own replies on a scale from 1 to 10, and also to score the responses of three models, GPT-4, GPT-3.5, and OPT-IML, in order to train the reward model.

4. Answers on Unnatural Instructions: GPT-4's answers are decoded on a dataset of 68,000 (instruction, input, output) triples. This subset is used to quantify the gap at scale between GPT-4 and the instruction-tuned models.

Statistics

The researchers compared the English output reply sets of GPT-4 and GPT-3.5: for each output, the root verb and the direct-object noun were extracted, and the frequency of each unique verb-noun pair was calculated over each output set.
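The counting step of this analysis can be sketched as follows. The sketch assumes the (root verb, direct-object noun) pairs have already been extracted from each output by a dependency parser, which is not shown; `verb_noun_stats` and its parameters are hypothetical names, with defaults chosen to match the thresholds of 10 and 25 mentioned in this section.

```python
from collections import Counter


def verb_noun_stats(pairs, min_freq=10, top_k=25):
    """Summarize verb-noun pairs from one output set (hypothetical helper).

    pairs: iterable of (verb, noun) tuples, one per model output,
           assumed to come from an upstream parsing step.
    Returns (frequent, top):
      frequent: dict of pairs whose frequency is strictly above min_freq
      top:      list of the top_k most frequent (pair, count) entries
    """
    counts = Counter(pairs)
    frequent = {pair: n for pair, n in counts.items() if n > min_freq}
    top = counts.most_common(top_k)
    return frequent, top


if __name__ == "__main__":
    # Toy data standing in for parsed outputs.
    toy = [("write", "story")] * 12 + [("give", "example")] * 3
    frequent, top = verb_noun_stats(toy)
    print(frequent)
    print(top)
```

Running the same function on the GPT-4 and GPT-3.5 output sets would give two distributions that can be compared side by side.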

Verb-noun pairs with frequency higher than 10

The 25 most frequent verb-noun pairs

Comparison of frequency distribution of output sequence length

It can be seen that GPT-4 tends to generate longer sequences than GPT-3.5, while the GPT-3.5 data in Alpaca shows a more pronounced long tail than the GPT-4 output distribution. This may be because the Alpaca dataset involves an iterative data collection process in which similar instruction instances are removed in each iteration, a step that is absent from the current one-time data generation.

Although the process is simple, the instruction-following data generated by GPT-4 exhibits more powerful alignment performance.

Instruction tuning language model

Self-Instruct tuning

Starting from the LLaMA 7B checkpoint, the researchers trained two models with supervised fine-tuning: LLaMA-GPT4 was trained on the 52,000 English instruction-following examples generated by GPT-4, and LLaMA-GPT4-CN was trained on the 52,000 Chinese instruction-following examples generated by GPT-4.

The two models were used to study the quality of the GPT-4 data and the cross-language generalization properties of LLMs that are instruction-tuned in one language.

Reward model

Reinforcement Learning from Human Feedback (RLHF) aims to align LLM behavior with human preferences to make the language model's output more useful to humans.

A key component of RLHF is reward modeling. The problem can be formulated as a regression task: predict the reward score given the prompt and the reply. This approach usually requires large-scale comparison data, that is, comparisons of two model responses to the same prompt.

Existing open source models, such as Alpaca, Vicuna, and Dolly, do not use RLHF because of the high cost of labeling comparison data. Meanwhile, recent research shows that GPT-4 can identify and fix its own errors and accurately judge the quality of responses.

To promote research on RLHF, the researchers created comparison data using GPT-4. To evaluate data quality, they trained a reward model based on OPT 1.3B to score different replies: for one prompt and K replies, GPT-4 provides a score between 1 and 10 for each reply.
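One common way to turn K scored replies into reward-model training signal is to form every pair of replies with different scores and apply a pairwise logistic loss, so that the reward of the higher-rated reply is pushed above that of the lower-rated one. The sketch below illustrates that idea under stated assumptions; it is not taken from the released code, the function names are hypothetical, and the rewards passed to `pairwise_loss` stand in for the outputs of a reward model such as the OPT 1.3B one mentioned above.

```python
import math
from itertools import combinations


def make_pairs(scored_replies):
    """Turn K scored replies for one prompt into (better, worse) pairs.

    scored_replies: list of (reply_text, gpt4_score) tuples.
    Pairs with equal scores carry no preference and are skipped.
    """
    pairs = []
    for (reply_a, score_a), (reply_b, score_b) in combinations(scored_replies, 2):
        if score_a == score_b:
            continue
        if score_a > score_b:
            pairs.append((reply_a, reply_b))
        else:
            pairs.append((reply_b, reply_a))
    return pairs


def pairwise_loss(reward_better, reward_worse):
    """Pairwise logistic loss: -log(sigmoid(reward_better - reward_worse)).

    Written as log(1 + exp(-diff)), which is the same quantity.
    """
    diff = reward_better - reward_worse
    return math.log1p(math.exp(-diff))


if __name__ == "__main__":
    replies = [("reply A", 9), ("reply B", 6), ("reply C", 6)]
    print(make_pairs(replies))
    print(pairwise_loss(2.0, 0.0), pairwise_loss(0.0, 2.0))
```

With K replies this yields at most K(K-1)/2 pairs per prompt; the loss is small when the reward model already ranks the pair correctly and large when it ranks it the wrong way round.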

Experimental Results

Evaluating how models self-instruct tuned on GPT-4 data perform on never-before-seen tasks remains difficult.

Since the main goal is to evaluate the model's ability to understand and follow various task instructions, the researchers used three types of evaluation. The results confirm that using GPT-4-generated data is an effective way to instruction-tune large language models compared with data automatically generated by other machines.

Human Evaluation

To evaluate the alignment quality of the large language models after instruction tuning, the researchers followed previously proposed alignment criteria: an assistant is considered aligned with human evaluation criteria if it is helpful, honest, and harmless (HHH). These criteria are also widely used to evaluate the degree to which AI systems are consistent with human values.

Helpfulness: Whether it can help humans achieve their goals. A model that can accurately answer questions is helpful.

Honesty: Whether it provides true information and expresses its uncertainty when necessary to avoid misleading human users. A model that provides false information is dishonest.

Harmlessness: Whether it avoids causing harm to humans. A model that generates hate speech or promotes violence is not harmless.

Based on the HHH alignment criteria, the researchers used the crowdsourcing platform Amazon Mechanical Turk to conduct manual evaluation of the model generation results.

The two models compared were fine-tuned on data generated by GPT-4 and GPT-3 respectively. On helpfulness, LLaMA-GPT4 is preferred in 51.2% of cases, far ahead of Alpaca (19.74%), which was fine-tuned on GPT-3 data. On honesty and harmlessness, however, the result is basically a tie, with GPT-3 slightly ahead.

When compared with the original GPT-4, the two are quite consistent on all three criteria. That is, LLaMA performs similarly to the original GPT-4 after being instruction-tuned on GPT-4 data.

GPT-4 automatic evaluation

Inspired by Vicuna, the researchers also used GPT-4 to evaluate the quality of the responses generated by different chatbot models to 80 unseen questions. Responses were collected from the LLaMA-GPT4 (7B) and GPT-4 models, and answers from other models were taken from previous research. GPT-4 was then asked to score the reply quality of the two models being compared on a scale from 1 to 10, and the results were compared against other strong competing models (ChatGPT and GPT-4).

The evaluation results show that the feedback data and reward models are effective in improving the performance of LLaMA. Instruction-tuning LLaMA with GPT-4 data often performs better than tuning with text-davinci-003 data (i.e., Alpaca) and no tuning (i.e., LLaMA). The 7B LLaMA-GPT4 outperforms the 13B Alpaca and LLaMA, but there is still a gap compared with large commercial chatbots such as GPT-4.

To further study the performance of the Chinese chatbot, GPT-4 was first used to translate the chatbot questions from English into Chinese, and GPT-4 was then used to obtain the answers. Two interesting observations follow:

1. The relative score metrics from the GPT-4 evaluation are quite consistent, both across different opponent models (i.e., ChatGPT or GPT-4) and across languages (i.e., English or Chinese).

2. For the GPT-4 results only, the translated replies performed better than the replies generated directly in Chinese, probably because GPT-4 is trained on a richer English corpus than Chinese corpus and therefore has stronger English instruction-following capabilities.

Unnatural Instruction Evaluation

In terms of average ROUGE-L score, Alpaca is better than LLaMA-GPT4 and GPT-4. However, LLaMA-GPT4 and GPT-4 gradually perform better as the ground-truth reply length increases, and eventually show higher performance when the length exceeds 4. This means they can follow instructions better when the scenario is more creative.

Across the different subsets, LLaMA-GPT4 and GPT-4 behave almost identically. When the sequence length is short, both LLaMA-GPT4 and GPT-4 can generate replies that contain the simple, basic factual answer but add extra words to make the reply more chat-like, which may result in a lower ROUGE-L score.
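The effect of extra chat-like words on ROUGE-L can be illustrated with a small sketch. It computes ROUGE-L as the F1 score of the longest common subsequence (LCS) between reference and candidate tokens. The whitespace tokenization, lowercasing, and equal weighting of precision and recall are simplifying assumptions, and the function names are hypothetical, so the scores will not necessarily match those of the evaluation script used in the paper.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists."""
    prev = [0] * (len(b) + 1)
    for token_a in a:
        curr = [0]
        for j, token_b in enumerate(b, start=1):
            if token_a == token_b:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(reference, candidate):
    """ROUGE-L as LCS-based F1 over lowercased, whitespace-split tokens."""
    ref_tokens = reference.lower().split()
    cand_tokens = candidate.lower().split()
    if not ref_tokens or not cand_tokens:
        return 0.0
    lcs = lcs_length(ref_tokens, cand_tokens)
    if lcs == 0:
        return 0.0
    precision = lcs / len(cand_tokens)
    recall = lcs / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    reference = "paris"
    print(rouge_l_f1(reference, "paris"))                # terse answer
    print(rouge_l_f1(reference, "the answer is paris"))  # chat-like answer
```

Both candidates contain the correct answer, but the chat-like one is penalized on precision because three of its four tokens do not appear in the one-word reference.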

The above is the detailed content of Microsoft's open source fine-tuned instruction set helps develop a home version of GPT-4, supporting bilingual generation in Chinese and English.. For more information, please follow other related articles on the PHP Chinese website!

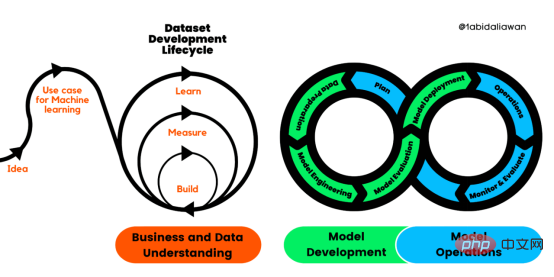

解读CRISP-ML(Q):机器学习生命周期流程Apr 08, 2023 pm 01:21 PM

解读CRISP-ML(Q):机器学习生命周期流程Apr 08, 2023 pm 01:21 PM译者 | 布加迪审校 | 孙淑娟目前,没有用于构建和管理机器学习(ML)应用程序的标准实践。机器学习项目组织得不好,缺乏可重复性,而且从长远来看容易彻底失败。因此,我们需要一套流程来帮助自己在整个机器学习生命周期中保持质量、可持续性、稳健性和成本管理。图1. 机器学习开发生命周期流程使用质量保证方法开发机器学习应用程序的跨行业标准流程(CRISP-ML(Q))是CRISP-DM的升级版,以确保机器学习产品的质量。CRISP-ML(Q)有六个单独的阶段:1. 业务和数据理解2. 数据准备3. 模型

人工智能的环境成本和承诺Apr 08, 2023 pm 04:31 PM

人工智能的环境成本和承诺Apr 08, 2023 pm 04:31 PM人工智能(AI)在流行文化和政治分析中经常以两种极端的形式出现。它要么代表着人类智慧与科技实力相结合的未来主义乌托邦的关键,要么是迈向反乌托邦式机器崛起的第一步。学者、企业家、甚至活动家在应用人工智能应对气候变化时都采用了同样的二元思维。科技行业对人工智能在创建一个新的技术乌托邦中所扮演的角色的单一关注,掩盖了人工智能可能加剧环境退化的方式,通常是直接伤害边缘人群的方式。为了在应对气候变化的过程中充分利用人工智能技术,同时承认其大量消耗能源,引领人工智能潮流的科技公司需要探索人工智能对环境影响的

找不到中文语音预训练模型?中文版 Wav2vec 2.0和HuBERT来了Apr 08, 2023 pm 06:21 PM

找不到中文语音预训练模型?中文版 Wav2vec 2.0和HuBERT来了Apr 08, 2023 pm 06:21 PMWav2vec 2.0 [1],HuBERT [2] 和 WavLM [3] 等语音预训练模型,通过在多达上万小时的无标注语音数据(如 Libri-light )上的自监督学习,显著提升了自动语音识别(Automatic Speech Recognition, ASR),语音合成(Text-to-speech, TTS)和语音转换(Voice Conversation,VC)等语音下游任务的性能。然而这些模型都没有公开的中文版本,不便于应用在中文语音研究场景。 WenetSpeech [4] 是

条形统计图用什么呈现数据Jan 20, 2021 pm 03:31 PM

条形统计图用什么呈现数据Jan 20, 2021 pm 03:31 PM条形统计图用“直条”呈现数据。条形统计图是用一个单位长度表示一定的数量,根据数量的多少画成长短不同的直条,然后把这些直条按一定的顺序排列起来;从条形统计图中很容易看出各种数量的多少。条形统计图分为:单式条形统计图和复式条形统计图,前者只表示1个项目的数据,后者可以同时表示多个项目的数据。

自动驾驶车道线检测分类的虚拟-真实域适应方法Apr 08, 2023 pm 02:31 PM

自动驾驶车道线检测分类的虚拟-真实域适应方法Apr 08, 2023 pm 02:31 PMarXiv论文“Sim-to-Real Domain Adaptation for Lane Detection and Classification in Autonomous Driving“,2022年5月,加拿大滑铁卢大学的工作。虽然自主驾驶的监督检测和分类框架需要大型标注数据集,但光照真实模拟环境生成的合成数据推动的无监督域适应(UDA,Unsupervised Domain Adaptation)方法则是低成本、耗时更少的解决方案。本文提出对抗性鉴别和生成(adversarial d

数据通信中的信道传输速率单位是bps,它表示什么Jan 18, 2021 pm 02:58 PM

数据通信中的信道传输速率单位是bps,它表示什么Jan 18, 2021 pm 02:58 PM数据通信中的信道传输速率单位是bps,它表示“位/秒”或“比特/秒”,即数据传输速率在数值上等于每秒钟传输构成数据代码的二进制比特数,也称“比特率”。比特率表示单位时间内传送比特的数目,用于衡量数字信息的传送速度;根据每帧图像存储时所占的比特数和传输比特率,可以计算数字图像信息传输的速度。

数据分析方法有哪几种Dec 15, 2020 am 09:48 AM

数据分析方法有哪几种Dec 15, 2020 am 09:48 AM数据分析方法有4种,分别是:1、趋势分析,趋势分析一般用于核心指标的长期跟踪;2、象限分析,可依据数据的不同,将各个比较主体划分到四个象限中;3、对比分析,分为横向对比和纵向对比;4、交叉分析,主要作用就是从多个维度细分数据。

聊一聊Python 实现数据的序列化操作Apr 12, 2023 am 09:31 AM

聊一聊Python 实现数据的序列化操作Apr 12, 2023 am 09:31 AM在日常开发中,对数据进行序列化和反序列化是常见的数据操作,Python提供了两个模块方便开发者实现数据的序列化操作,即 json 模块和 pickle 模块。这两个模块主要区别如下:json 是一个文本序列化格式,而 pickle 是一个二进制序列化格式;json 是我们可以直观阅读的,而 pickle 不可以;json 是可互操作的,在 Python 系统之外广泛使用,而 pickle 则是 Python 专用的;默认情况下,json 只能表示 Python 内置类型的子集,不能表示自定义的

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Zend Studio 13.0.1

Powerful PHP integrated development environment

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

Notepad++7.3.1

Easy-to-use and free code editor

Atom editor mac version download

The most popular open source editor