Study: AI coding assistants may lead to unsafe code, developers should use caution

Researchers say that relying on AI (artificial intelligence) assistants when writing code can make developers overconfident in their development work, resulting in less secure code.

A recent study conducted by Stanford University found that AI-based coding assistants such as GitHub's Copilot can give developers a mistaken impression of the quality of their work, leading to software that may contain vulnerabilities and is less secure. One AI expert says it's important for developers to manage their expectations when using AI assistants for such tasks.

More Security Vulnerabilities Introduced by AI Coding

The study ran a trial with 47 developers: 33 used an AI assistant while writing code, and a control group of 14 wrote code without one. Participants had to complete five security-related programming tasks, including encrypting or decrypting strings using symmetric keys. All of them could look for help using a web browser.

AI assistant tools for coding and other tasks are becoming increasingly popular. Microsoft-owned GitHub launched Copilot as a technical preview in 2021 to increase developer productivity.

In a research report released in September this year, Microsoft said that Copilot makes developers more efficient. 88% of respondents said they are more efficient when coding with Copilot, and 59% said its main benefits were finishing repetitive tasks faster and completing coding faster.

Researchers at Stanford University wanted to know whether users write more insecure code with AI assistants, and found that this was indeed the case. Developers who use AI assistants also hold a false sense of confidence in the quality of their code, they say.

The team wrote in the paper: "We observed that developers who received help from an AI assistant were more likely to introduce security vulnerabilities in most programming tasks than developers in the control group, but were also more likely to rate unsafe answers as safe." Additionally, the study found that developers who invested more effort in creating queries to their AI assistants (such as adopting AI assistant features or adjusting parameters) were more likely to ultimately provide secure solutions.

This research project used only three programming languages: Python, C and Verilog. It involved a relatively small number of participants with varying development experience, ranging from recent college graduates to seasoned professionals, who used a specially developed application monitored by administrators.

The first experiment was written in Python, and the code written with the help of AI assistants was more likely to be unsafe or incorrect. In the control group without AI assistance, 79% of developers wrote code without quality problems; in the group with AI assistance, only 67% did.

Use AI Coding Assistants with Care

Things get worse when it comes to the security of the code they create: developers who used AI assistants were more likely to provide unsafe solutions, or to use simple ciphers to encrypt and decrypt strings. They were also less likely to perform plausibility checks on the final values to ensure the process was working as expected.
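The two weaknesses described above can be illustrated with a small Python sketch. It is not taken from the study. The Caesar-style substitution cipher stands in for a "simple" encryption scheme, and the HMAC tag is one possible way to check a final value; both are assumptions chosen for illustration. The function names (`caesar_encrypt`, `caesar_brute_force`, `make_tag`, `verify_tag`) are hypothetical, and only the Python standard library is used.

```python
import hashlib
import hmac
import secrets
import string

ALPHABET = string.ascii_lowercase


def caesar_encrypt(plaintext: str, shift: int) -> str:
    """Toy substitution cipher: rotate each lowercase letter by `shift`."""
    out = []
    for ch in plaintext:
        if ch in ALPHABET:
            out.append(ALPHABET[(ALPHABET.index(ch) + shift) % 26])
        else:
            out.append(ch)  # spaces and punctuation pass through unchanged
    return "".join(out)


def caesar_brute_force(ciphertext: str, known_word: str) -> list:
    """Try all 26 shifts and return those whose decryption contains `known_word`."""
    hits = []
    for shift in range(26):
        candidate = caesar_encrypt(ciphertext, -shift)  # a negative shift decrypts
        if known_word in candidate:
            hits.append(shift)
    return hits


def make_tag(key: bytes, message: bytes) -> bytes:
    """Compute an HMAC-SHA256 tag so the receiver can check the final value."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify_tag(key: bytes, message: bytes, received_tag: bytes) -> bool:
    """Constant-time comparison of the expected tag with the received one."""
    return hmac.compare_digest(make_tag(key, message), received_tag)


if __name__ == "__main__":
    # Weakness 1: the whole key space of the toy cipher is 26 shifts.
    secret = caesar_encrypt("attack at dawn", 3)
    print("ciphertext:", secret)
    print("shifts recovered by brute force:", caesar_brute_force(secret, "dawn"))

    # Weakness 2: without a check on the final value, tampering goes unnoticed.
    key = secrets.token_bytes(32)  # random 256-bit key, not a human-chosen password
    message = b"transfer 10 units"
    tag = make_tag(key, message)
    print("untampered message accepted:", verify_tag(key, message, tag))
    print("tampered message accepted:", verify_tag(key, b"transfer 99 units", tag))
```

The brute-force function shows why a substitution cipher offers almost no protection, and the tag check shows what a missing verification step would have caught. For real encryption, a vetted authenticated-encryption library is generally a safer choice than hand-written primitives.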

The findings suggest that less experienced developers may be inclined to trust AI assistants, at the risk of introducing new security vulnerabilities, the Stanford researchers said. The research should therefore help improve and guide the design of future AI code assistants.

Peter van der Putten, director of the AI lab at software provider Pegasystems, said that although the study is small in scale, it is very interesting and its results can inspire further research into the use of AI assistants in coding and other areas. "It's also consistent with what some of our broader research into dependence on AI assistants has concluded," he said.

He cautioned that developers adopting AI assistants should build trust in the tool incrementally, avoid relying on it too much, and understand its limitations. "Acceptance of a technology depends not only on expectations for quality and performance, but also on whether it saves time and effort," he said. "Overall, people have a positive attitude towards the use of AI assistants, as long as their expectations are managed. This means defining best practices for how to use these tools, as well as adopting potential additional features to test code quality."

The above is the detailed content of Study: AI coding assistants may lead to unsafe code, developers should use caution. For more information, please follow other related articles on the PHP Chinese website!

What is the Chain of Numerical Reasoning in Prompt Engineering?Apr 17, 2025 am 10:08 AM

What is the Chain of Numerical Reasoning in Prompt Engineering?Apr 17, 2025 am 10:08 AMIntroduction Prompt engineering is crucial in the rapidly evolving fields of artificial intelligence and natural language processing. Among its techniques, Chain of Numerical Reasoning (CoNR) stands out as a highly effective method for enhancing AI

Top Python Libraries Used by Kaggle GrandmastersApr 17, 2025 am 10:03 AM

Top Python Libraries Used by Kaggle GrandmastersApr 17, 2025 am 10:03 AMUnlocking the Secrets of Kaggle Grandmasters: Top Python Libraries Revealed Kaggle, the premier platform for data science competitions, boasts a select group of elite performers: the Kaggle Grandmasters. These individuals consistently deliver innova

10 Ways AI PCs Will Transform Your Workplace - Analytics VidhyaApr 17, 2025 am 09:59 AM

10 Ways AI PCs Will Transform Your Workplace - Analytics VidhyaApr 17, 2025 am 09:59 AMThe Future of Work: How AI PCs Will Revolutionize the Workplace The integration of artificial intelligence (AI) into personal computers – AI PCs – represents a significant leap forward in workplace technology. AI PCs, defined as the fusion of AI and

How to Freeze Panes in Excel?Apr 17, 2025 am 09:56 AM

How to Freeze Panes in Excel?Apr 17, 2025 am 09:56 AMDetailed explanation of Excel freeze pane function: efficiently process large data sets Microsoft Excel is one of the excellent tools for organizing and analyzing data, and the Freeze Pane feature is one of its highlights. This feature allows you to pin specific rows or columns so that they remain visible while browsing the rest of the spreadsheets, simplifying data monitoring and comparison. This article will dive into how to use Excel freeze pane functionality and provide some practical tips and examples. Functional Overview Excel's freeze pane feature keeps specific rows or columns visible when scrolling through large data sets, making it easier to monitor and compare data. Improve navigation efficiency, keep titles visible, and simplify data comparisons in large spreadsheets. Provides via the View tab and Freeze

Neo4j vs. Amazon Neptune: Graph Databases in Data EngineeringApr 17, 2025 am 09:52 AM

Neo4j vs. Amazon Neptune: Graph Databases in Data EngineeringApr 17, 2025 am 09:52 AMNavigating the Complexities of Interconnected Data: Neo4j vs. Amazon Neptune In today's data-rich world, efficiently managing intricate, interconnected information is paramount. While traditional databases remain relevant, they often struggle with hi

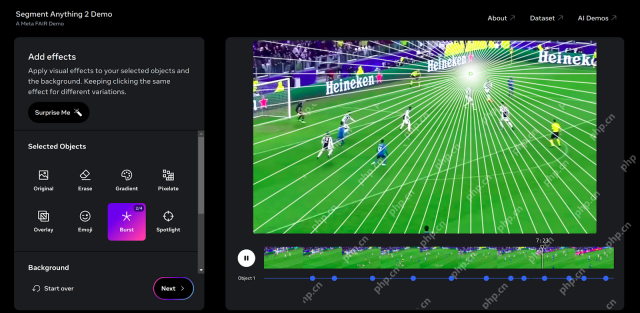

Meta SAM 2: Architecture, Applications & Limitations - Analytics VidhyaApr 17, 2025 am 09:40 AM

Meta SAM 2: Architecture, Applications & Limitations - Analytics VidhyaApr 17, 2025 am 09:40 AMMeta's Segment Anything Model 2 (SAM-2): A Giant Leap in Real-Time Image and Video Segmentation Meta has once again pushed the boundaries of artificial intelligence with SAM-2, a groundbreaking advancement in computer vision that builds upon the impr

AI Workflows and Data Strategies for Consumer ExperiencesApr 17, 2025 am 09:39 AM

AI Workflows and Data Strategies for Consumer ExperiencesApr 17, 2025 am 09:39 AMEnhancing Digital Consumer Experiences with AI: A Data-Driven Approach The digital landscape is fiercely competitive. This article explores how artificial intelligence (AI) significantly improves consumer experiences on digital platforms. We'll exam

What is the Positional Encoding in Stable Diffusion? - Analytics VidhyaApr 17, 2025 am 09:34 AM

What is the Positional Encoding in Stable Diffusion? - Analytics VidhyaApr 17, 2025 am 09:34 AMStable Diffusion: Unveiling the Power of Positional Encoding in Text-to-Image Generation Imagine generating breathtaking, high-resolution images from simple text descriptions. This is the power of Stable Diffusion, a cutting-edge text-to-image model

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Zend Studio 13.0.1

Powerful PHP integrated development environment

Notepad++7.3.1

Easy-to-use and free code editor

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.

WebStorm Mac version

Useful JavaScript development tools

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),