Machine Learning: Don't underestimate the power of tree models

Because of their complexity, neural networks are often treated as the “holy grail” for solving every machine learning problem. Tree-based methods have not received the same attention, mainly because the algorithms look so simple. The two may seem different, but they are like two sides of the same coin, and both are important.

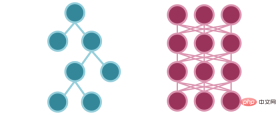

Tree Models vs. Neural Networks

Tree-based methods often outperform neural networks, particularly on structured data. At heart, the two belong in the same category because both break a problem down step by step, rather than separating the whole data set with a single global decision boundary, as support vector machines or logistic regression do.

Tree-based methods clearly segment the feature space step by step along individual features to maximize information gain. What is less obvious is that neural networks approach tasks in a similar way. Each neuron monitors a specific part of the feature space (with considerable overlap between neurons). When an input falls into that region, the corresponding neurons activate.
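To make "segmenting the feature space to maximize information gain" concrete, here is a minimal sketch in plain Python. The toy data and the helper names (`entropy`, `information_gain`, `best_threshold`) are made up for illustration; real libraries use faster and more careful implementations.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    if total == 0:
        return 0.0
    return -sum((n / total) * math.log2(n / total) for n in Counter(labels).values())

def information_gain(values, labels, threshold):
    """Entropy reduction from splitting the data on `value <= threshold`."""
    left = [y for x, y in zip(values, labels) if x <= threshold]
    right = [y for x, y in zip(values, labels) if x > threshold]
    weighted = (len(left) * entropy(left) + len(right) * entropy(right)) / len(labels)
    return entropy(labels) - weighted

def best_threshold(values, labels):
    """Try the midpoints between sorted unique values and keep the best split."""
    points = sorted(set(values))
    candidates = [(a + b) / 2 for a, b in zip(points, points[1:])]
    return max(candidates, key=lambda t: information_gain(values, labels, t))

# Toy data (hypothetical): one feature, two classes.
feature = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
target = [0, 0, 0, 1, 1, 1]

split = best_threshold(feature, target)
gain = information_gain(feature, target, split)
print(split, gain)
```

A tree repeats this search on each resulting subset, which is what produces the step-by-step partition of the feature space.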

Neural networks view this piece-by-piece model fitting from a probabilistic perspective, while tree-based methods take a deterministic perspective. Regardless, the performance of both depends on the depth of the model, since their components are associated with various parts of the feature space.

A model with too many components (nodes for a tree model, neurons for a neural network) will overfit, while a model with too few components simply won’t give meaningful predictions. (Both start out by memorizing data points rather than learning to generalize.)

If you want a more intuitive understanding of how a neural network divides the feature space, you can read this introductory article on the Universal Approximation Theorem: https://medium.com/analytics-vidhya/you-dont-understand-neural-networks-until-you-understand-the-universal-approximation-theory-85b3e7677126.

While there are many powerful variations of decision trees, such as Random Forest, Gradient Boosting, AdaBoost, and Deep Forest, tree-based methods are in general essentially simplified versions of neural networks.

Tree-based methods solve the problem piece by piece with vertical and horizontal lines (axis-aligned splits) chosen to minimize entropy, which serves as both their optimizer and their loss. Neural networks solve the problem piece by piece with activation functions.
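The piece-by-piece behavior of both models can be sketched on a single feature. The thresholds, weights, and function names below are chosen by hand for illustration, not learned from data: the tree returns one constant per region, while a small ReLU network changes slope at each unit's "kink".

```python
def tree_predict(x):
    """A depth-2 tree on one feature: hard thresholds, one constant per region."""
    if x <= 2.0:
        return 0.0
    if x <= 4.0:
        return 1.0
    return 3.0

def relu(z):
    """Rectified linear activation: zero until the input turns positive."""
    return max(0.0, z)

def network_predict(x):
    """One hidden layer with two ReLU units (hand-set, hypothetical weights)."""
    h1 = relu(x - 2.0)  # active only for x > 2
    h2 = relu(x - 4.0)  # active only for x > 4
    return 0.5 * h1 + 1.0 * h2

for x in [1.0, 3.0, 5.0]:
    print(x, tree_predict(x), network_predict(x))
```

Both functions carve the input range into the same three regions; the difference is that the tree's output is flat within a region while the network's output varies linearly.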

Tree-based methods are deterministic rather than probabilistic. This brings some nice simplifications like automatic feature selection.

The activated condition nodes in the decision tree are similar to the activated neurons (information flow) in the neural network.

The neural network transforms the input through fitting parameters and indirectly guides the activation of subsequent neurons. Decision trees explicitly fit parameters to guide the flow of information. (This is the result of deterministic versus probabilistic.)

The flow of information in the two models is similar; it is just simpler in the tree model.

Selection of 1 and 0 in Tree Models vs. Probabilistic Selection in Neural Networks

Of course, this is an abstract conclusion, and it may even be controversial. Granted, there are many obstacles to making this connection. Regardless, it is an important part of understanding when and why tree-based methods are better than neural networks.

For decision trees, working with structured data in tabular form is natural. Most people agree that using neural networks for regression and prediction on tabular data is overkill, so some simplifications are made here. The choice of 1s and 0s, rather than probabilities, is the main source of the difference between the two algorithms. Tree-based methods can therefore be applied successfully wherever probabilities are not required, as with structured data.

For example, tree-based methods perform well on the MNIST dataset because each digit has several basic features. There is no need to calculate probabilities and the problem is not very complex, which is why a well-designed tree ensemble can be competitive with modern convolutional neural networks on this task.

People often say that "tree-based methods just memorize rules", which is correct. Neural networks do the same, except that they can memorize more complex, probability-based rules. Rather than explicitly giving a true/false answer for a condition like x>3, a neural network scales the input to a very large value, which yields a sigmoid output of essentially 1, or it produces a continuous output.
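The x>3 example can be sketched directly. The function names and the `scale` parameter below are illustrative assumptions: a tree-style rule returns exactly 0 or 1, while a single sigmoid unit approaches that same rule as its input is scaled up.

```python
import math

def hard_rule(x):
    """Tree-style condition: exactly 1 or 0."""
    return 1.0 if x > 3 else 0.0

def soft_rule(x, scale):
    """Neuron-style condition: sigmoid(scale * (x - 3))."""
    return 1.0 / (1.0 + math.exp(-scale * (x - 3)))

# As the scale grows, the soft rule saturates toward the hard rule.
for scale in (1, 10, 100):
    print(scale, [round(soft_rule(x, scale), 3) for x in (2.5, 3.5)])
```

With a small scale the unit expresses uncertainty near the boundary; with a large scale it is practically indistinguishable from the tree's true/false test.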

On the other hand, because neural networks are so complex, there is a lot that can be done with them. Convolutional and recurrent layers are both outstanding variants of neural networks because the data they process often requires the nuance of probability calculations.

Very few images can be modeled with ones and zeros. Decision trees struggle with datasets that have many intermediate values (e.g. 0.5). That is why they perform well on the MNIST dataset, where pixel values are almost all black or white, but not on datasets whose pixel values are not (e.g. ImageNet). Similarly, text contains too much information and too many anomalies to express in deterministic terms.

This is why neural networks are mainly used in these fields, and why neural network research stagnated in the early days (before the start of the 21st century), when large amounts of image and text data were not available. Other common uses of neural networks are limited to large-scale prediction, such as YouTube's video recommendation algorithm, which operates at very large scale and must use probabilities.

The data science team at almost any company will probably use tree-based models rather than neural networks, unless they are building a heavy-duty application such as blurring the background of a Zoom video. In everyday business classification tasks, the deterministic nature of tree-based methods keeps the work lightweight, while the underlying approach is the same as that of neural networks.

In many practical situations, deterministic modeling is more natural than probabilistic modeling. For example, to predict whether a user will purchase an item from an e-commerce website, a tree model is a good choice because users naturally follow a rule-based decision-making process. A user’s decision-making process might look something like this:

- Have I had a positive shopping experience on this platform before? If so, continue.

- Do I need this item now? (For example, should I buy sunglasses and swimming trunks for the winter?) If so, continue.

- Based on my user demographics, is this a product I'm interested in purchasing? If yes, continue.

- Is this thing too expensive? If not, continue.

- Have other customers rated this product highly enough for me to feel comfortable purchasing it? If yes, continue.

Generally speaking, humans follow a rule-based and structured decision-making process. In these cases, probabilistic modeling is unnecessary.
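The checklist above maps naturally onto a chain of hard conditions, which is the structure a decision tree learns. The sketch below is purely illustrative: the `Visit` fields, the `min_rating` default, and the sample values are hypothetical, and in practice the thresholds would be learned from data rather than written by hand.

```python
from dataclasses import dataclass

@dataclass
class Visit:
    """Hypothetical features for one user/product pair."""
    positive_history: bool  # good past experience on the platform
    needed_now: bool        # item fits the current need or season
    matches_profile: bool   # product suits the user's demographics
    price: float
    max_price: float        # what this user would consider too expensive
    avg_rating: float       # average rating from other customers

def will_purchase(v: Visit, min_rating: float = 4.0) -> bool:
    """Walk the checklist top to bottom; any failed check ends in 'no'."""
    if not v.positive_history:
        return False
    if not v.needed_now:
        return False
    if not v.matches_profile:
        return False
    if v.price > v.max_price:
        return False
    return v.avg_rating >= min_rating

sunglasses_in_winter = Visit(True, False, True, 20.0, 50.0, 4.6)
sunglasses_in_summer = Visit(True, True, True, 20.0, 50.0, 4.6)
print(will_purchase(sunglasses_in_winter), will_purchase(sunglasses_in_summer))
```

Every path through the function ends in a definite yes or no, with no probability attached, which mirrors the deterministic flow described above.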

Conclusion

- It is best to think of tree-based methods as scaled-down versions of neural networks that handle feature classification, optimization, information flow, and so on in a simpler way.

- The main difference in usage between tree-based methods and neural networks is deterministic (0/1) versus probabilistic modeling. Structured (tabular) data is better modeled with deterministic models.

- Don’t underestimate the power of the tree method.

The above is the detailed content of Machine Learning: Don't underestimate the power of tree models. For more information, please follow other related articles on the PHP Chinese website!

A Business Leader's Guide To Generative Engine Optimization (GEO)May 03, 2025 am 11:14 AM

A Business Leader's Guide To Generative Engine Optimization (GEO)May 03, 2025 am 11:14 AMGoogle is leading this shift. Its "AI Overviews" feature already serves more than one billion users, providing complete answers before anyone clicks a link.[^2] Other players are also gaining ground fast. ChatGPT, Microsoft Copilot, and Pe

This Startup Is Using AI Agents To Fight Malicious Ads And Impersonator AccountsMay 03, 2025 am 11:13 AM

This Startup Is Using AI Agents To Fight Malicious Ads And Impersonator AccountsMay 03, 2025 am 11:13 AMIn 2022, he founded social engineering defense startup Doppel to do just that. And as cybercriminals harness ever more advanced AI models to turbocharge their attacks, Doppel’s AI systems have helped businesses combat them at scale— more quickly and

How World Models Are Radically Reshaping The Future Of Generative AI And LLMsMay 03, 2025 am 11:12 AM

How World Models Are Radically Reshaping The Future Of Generative AI And LLMsMay 03, 2025 am 11:12 AMVoila, via interacting with suitable world models, generative AI and LLMs can be substantively boosted. Let’s talk about it. This analysis of an innovative AI breakthrough is part of my ongoing Forbes column coverage on the latest in AI, including

May Day 2050: What Have We Left To Celebrate?May 03, 2025 am 11:11 AM

May Day 2050: What Have We Left To Celebrate?May 03, 2025 am 11:11 AMLabor Day 2050. Parks across the nation fill with families enjoying traditional barbecues while nostalgic parades wind through city streets. Yet the celebration now carries a museum-like quality — historical reenactment rather than commemoration of c

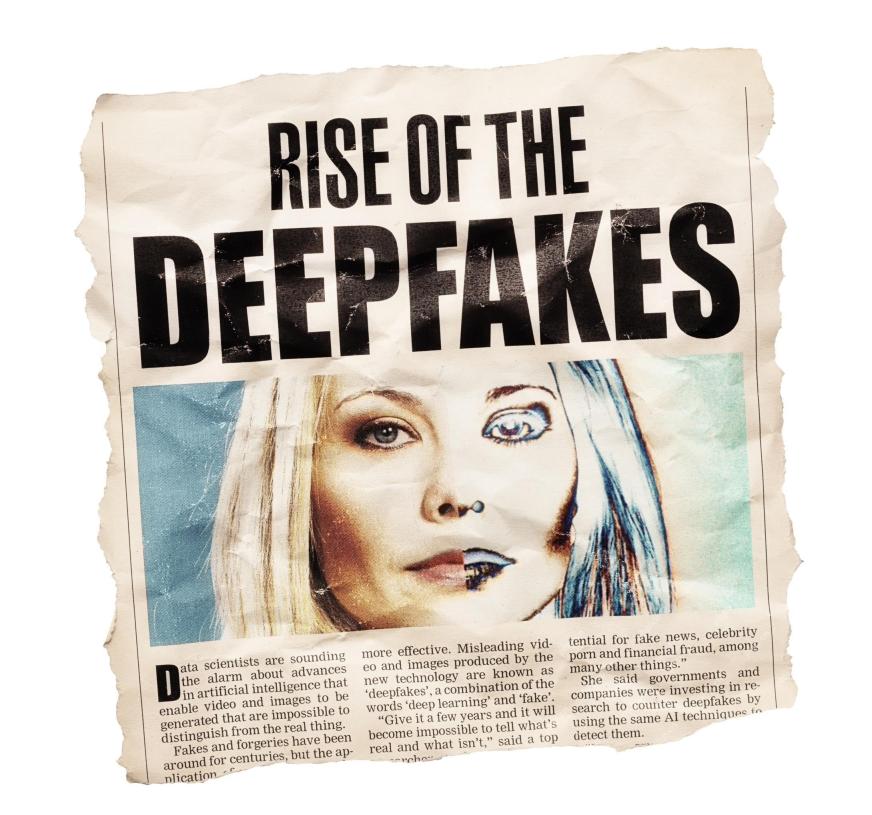

The Deepfake Detector You've Never Heard Of That's 98% AccurateMay 03, 2025 am 11:10 AM

The Deepfake Detector You've Never Heard Of That's 98% AccurateMay 03, 2025 am 11:10 AMTo help address this urgent and unsettling trend, a peer-reviewed article in the February 2025 edition of TEM Journal provides one of the clearest, data-driven assessments as to where that technological deepfake face off currently stands. Researcher

Quantum Talent Wars: The Hidden Crisis Threatening Tech's Next FrontierMay 03, 2025 am 11:09 AM

Quantum Talent Wars: The Hidden Crisis Threatening Tech's Next FrontierMay 03, 2025 am 11:09 AMFrom vastly decreasing the time it takes to formulate new drugs to creating greener energy, there will be huge opportunities for businesses to break new ground. There’s a big problem, though: there’s a severe shortage of people with the skills busi

The Prototype: These Bacteria Can Generate ElectricityMay 03, 2025 am 11:08 AM

The Prototype: These Bacteria Can Generate ElectricityMay 03, 2025 am 11:08 AMYears ago, scientists found that certain kinds of bacteria appear to breathe by generating electricity, rather than taking in oxygen, but how they did so was a mystery. A new study published in the journal Cell identifies how this happens: the microb

AI And Cybersecurity: The New Administration's 100-Day ReckoningMay 03, 2025 am 11:07 AM

AI And Cybersecurity: The New Administration's 100-Day ReckoningMay 03, 2025 am 11:07 AMAt the RSAC 2025 conference this week, Snyk hosted a timely panel titled “The First 100 Days: How AI, Policy & Cybersecurity Collide,” featuring an all-star lineup: Jen Easterly, former CISA Director; Nicole Perlroth, former journalist and partne

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft

WebStorm Mac version

Useful JavaScript development tools

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

SublimeText3 Linux new version

SublimeText3 Linux latest version